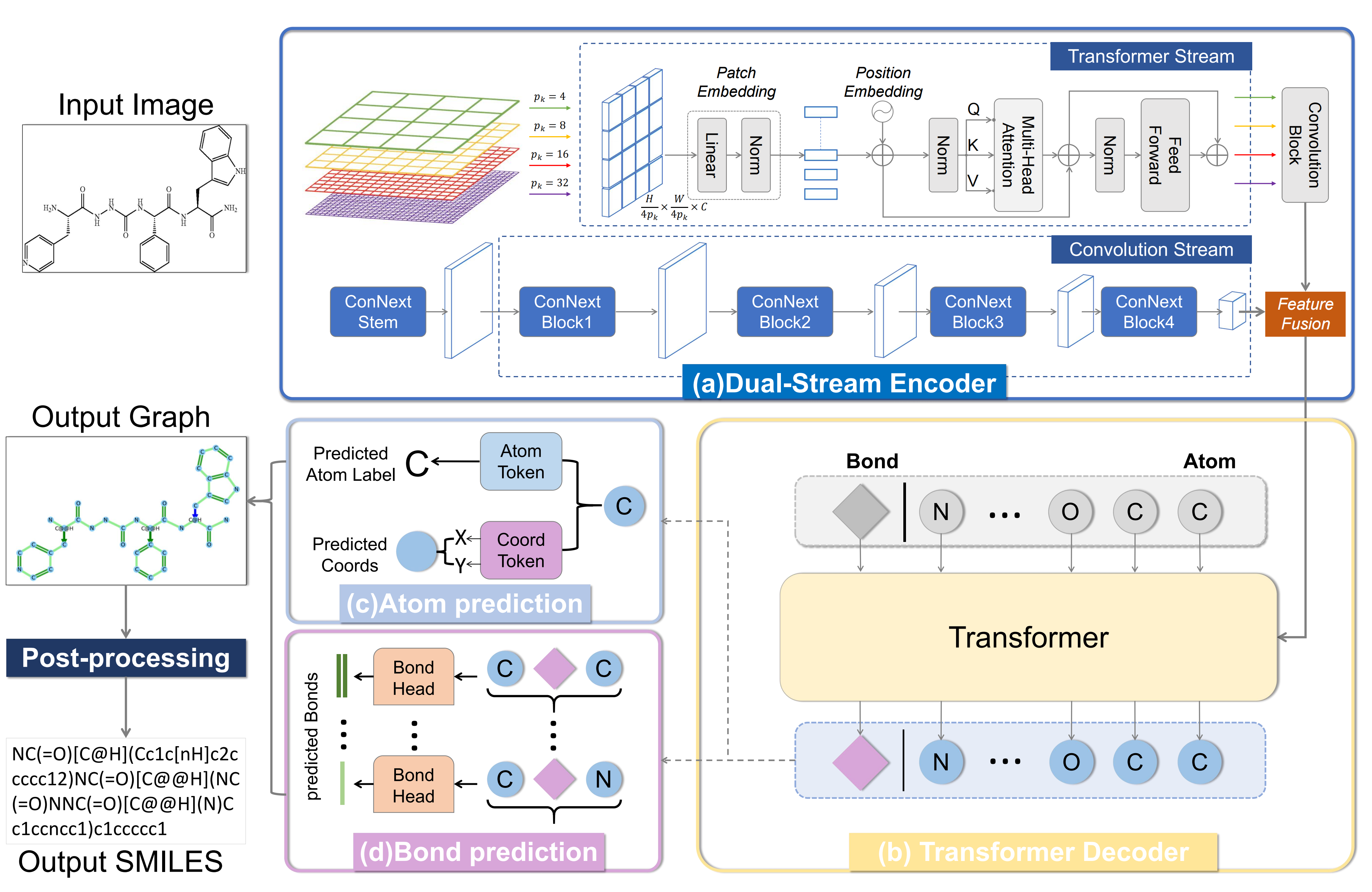

In this work, we propose MolNexTR, a novel graph generation model. The model follows an encoder-decoder architecture: it takes three-channel molecular images as input and outputs a predicted molecular graph structure, which can be easily converted to SMILES. We aim to improve the robustness and generalization of molecular structure recognition by strengthening both the model's feature extraction and the data augmentation strategy, so that it can handle the wide range of molecular images found in real literature.

Clone the repository:

git clone https://github.com/CYF2000127/MolNexTR

- First, create and activate a conda environment (the commands below assume a Linux environment):

conda create -n molnextr python=3.8

conda activate molnextr

- Install requirements:

pip install -r requirements.txt

- Download the model checkpoint from our Hugging Face Repo or Zenodo Repo and place it in your own path.

- Run the following code to predict molecular images:

import torch

from MolNexTR import molnextr

Image = './examples/1.png'

Model = './checkpoints/molnextr_best.pth'

device = torch.device('cpu')

model = molnextr(Model, device)

predictions = model.predict_final_results(Image)

print(predictions)

Alternatively, use prediction.ipynb. You can also change the image and model paths to your own images and models.
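To run the same call over a whole folder of images, a minimal sketch follows. It relies only on what the snippet above shows, namely a model object exposing `predict_final_results(image_path)`. The helper name `predict_folder` and the `*.png` pattern are illustrative choices and are not part of MolNexTR.

```python
from pathlib import Path


def predict_folder(model, folder, pattern="*.png"):
    """Run model.predict_final_results on every matching image in `folder`.

    `model` is any object with a predict_final_results(path) method,
    such as the one built by molnextr(Model, device) above.
    Returns a dict mapping each image path (as a string) to its prediction.
    """
    results = {}
    # Sort so the processing order is deterministic across runs.
    for image_path in sorted(Path(folder).glob(pattern)):
        results[str(image_path)] = model.predict_final_results(str(image_path))
    return results
```

With the `model` object created above, this could be called as `predict_folder(model, './examples')`.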

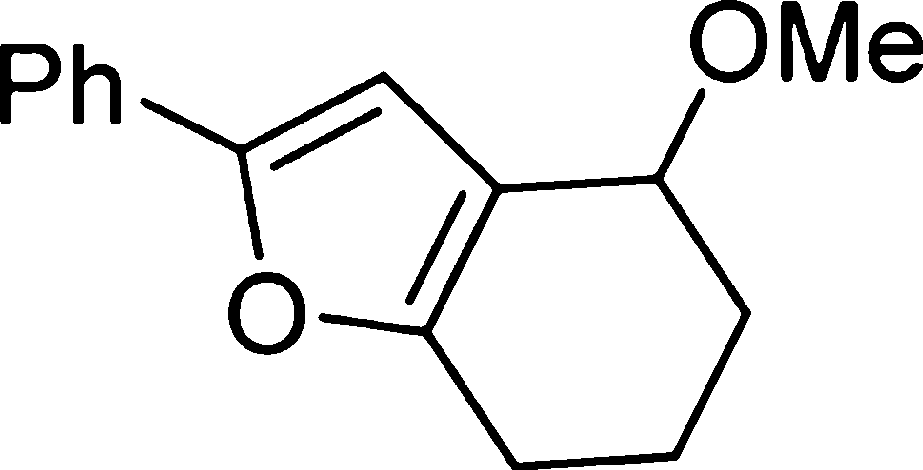

The input is a molecular image; the output is a dictionary of the following form:

{

'atom_sets': [

{'atom_number': '0', 'symbol': 'Ph', 'coords': (0.143, 0.349)},

{'atom_number': '1', 'symbol': 'C', 'coords': (0.286, 0.413)},

{'atom_number': '2', 'symbol': 'C', 'coords': (0.429, 0.349)}, ...

],

'bonds_sets': [

{'atom_number': '0', 'bond_type': 'single', 'endpoints': (0, 1)},

{'atom_number': '1', 'bond_type': 'double', 'endpoints': (1, 2)},

{'atom_number': '1', 'bond_type': 'single', 'endpoints': (1, 5)},

{'atom_number': '2', 'bond_type': 'single', 'endpoints': (2, 3)}, ...

],

'predicted_molfile': '2D\n\n 11 12 0 0 0 0 0 0 0 0999 V2000 ...',

'predicted_smiles': 'COC1CCCc2oc(-c3ccccc3)cc21'

}
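As an illustration of how this dictionary can be consumed, the sketch below builds an adjacency list from `atom_sets` and `bonds_sets`. It assumes the field names shown above and that each `endpoints` pair holds indices into `atom_sets`. The sample data is a hypothetical, shortened version of the output, and `build_adjacency` is not a MolNexTR function.

```python
from collections import defaultdict


def build_adjacency(prediction):
    """Map each atom index to a list of (neighbor_index, bond_type) pairs."""
    adjacency = defaultdict(list)
    for bond in prediction["bonds_sets"]:
        start, end = bond["endpoints"]
        # Bonds are undirected, so record both directions.
        adjacency[start].append((end, bond["bond_type"]))
        adjacency[end].append((start, bond["bond_type"]))
    return dict(adjacency)


# Hypothetical, shortened sample in the format shown above.
sample = {
    "atom_sets": [
        {"atom_number": "0", "symbol": "Ph", "coords": (0.143, 0.349)},
        {"atom_number": "1", "symbol": "C", "coords": (0.286, 0.413)},
        {"atom_number": "2", "symbol": "C", "coords": (0.429, 0.349)},
    ],
    "bonds_sets": [
        {"atom_number": "0", "bond_type": "single", "endpoints": (0, 1)},
        {"atom_number": "1", "bond_type": "double", "endpoints": (1, 2)},
    ],
}

adjacency = build_adjacency(sample)
symbols = [atom["symbol"] for atom in sample["atom_sets"]]
for index, neighbors in sorted(adjacency.items()):
    print(symbols[index], index, neighbors)
```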


For training and inference, please download the following datasets to your own path.

- Synthetic: Indigo, ChemDraw

- Realistic: CLEF, UOB, USPTO, JPO, Staker, ACS

- Perturbed by IMG transform: CLEF, UOB, USPTO, JPO, Staker, ACS

- Perturbed by curved arrows: CLEF, UOB, USPTO, JPO, Staker, ACS

To train the model, run the following command:

sh ./exps/train.sh

The default batch size is 256, and training takes about 20 hours on 10 NVIDIA RTX 3090 GPUs. Please adjust the corresponding parameters to match your hardware configuration.

To evaluate the model, run the following command:

sh ./exps/eval.sh

The default batch size is 32 on a single NVIDIA RTX 3090 GPU. Please adjust the corresponding parameters to match your hardware configuration. The outputs include the main metrics we used, such as SMILES and graph exact matching accuracy.
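For reference, exact matching accuracy is the fraction of predictions that are identical to their ground truth. The sketch below is a simplified illustration and not the code run by `eval.sh`. It compares strings after an optional `canonicalize` callable, which is an assumption of this example; in practice SMILES are usually canonicalized with a cheminformatics toolkit first, since one molecule can be written as many valid SMILES strings.

```python
def exact_match_accuracy(predicted, reference, canonicalize=None):
    """Return the fraction of predictions that exactly match their reference."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        return 0.0
    # Identity by default; pass a canonicalizer to compare canonical forms.
    normalize = canonicalize if canonicalize is not None else (lambda s: s)
    matches = sum(
        normalize(p) == normalize(r) for p, r in zip(predicted, reference)
    )
    return matches / len(reference)


# Illustrative data only; not taken from any benchmark.
predicted = ["CCO", "c1ccccc1", "CC(=O)O"]
reference = ["CCO", "c1ccccc1", "CC(O)=O"]
print(exact_match_accuracy(predicted, reference))
```

The third pair writes acetic acid in two different ways, so plain string comparison counts it as a mismatch. This is why a canonicalization step matters for a SMILES-level metric.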

To run prediction on your own images, run the following command:

python prediction.py --model_path your_model_path --image_path your_image_path

Use visualization.ipynb to visualize the ground truth and the predictions.

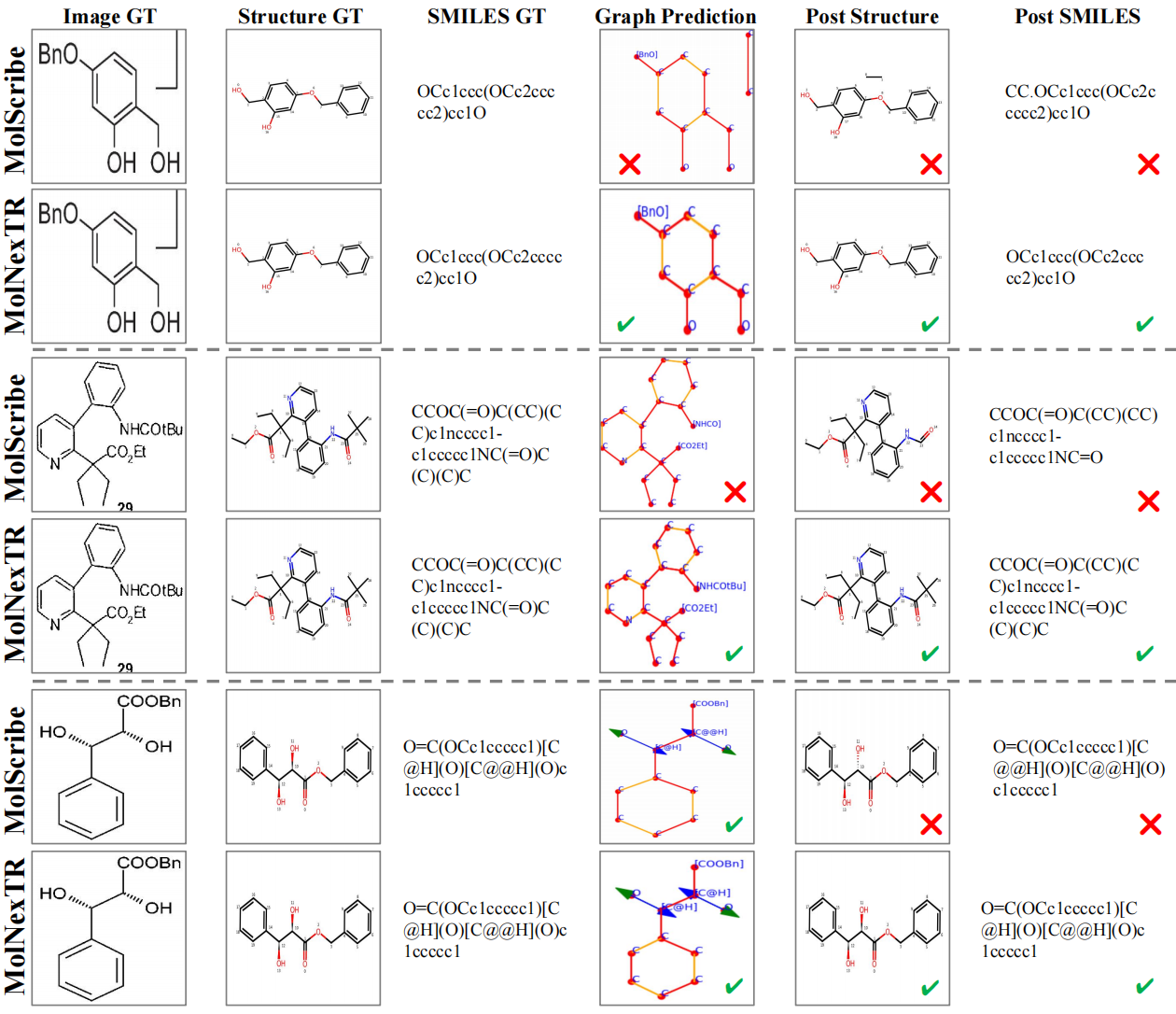

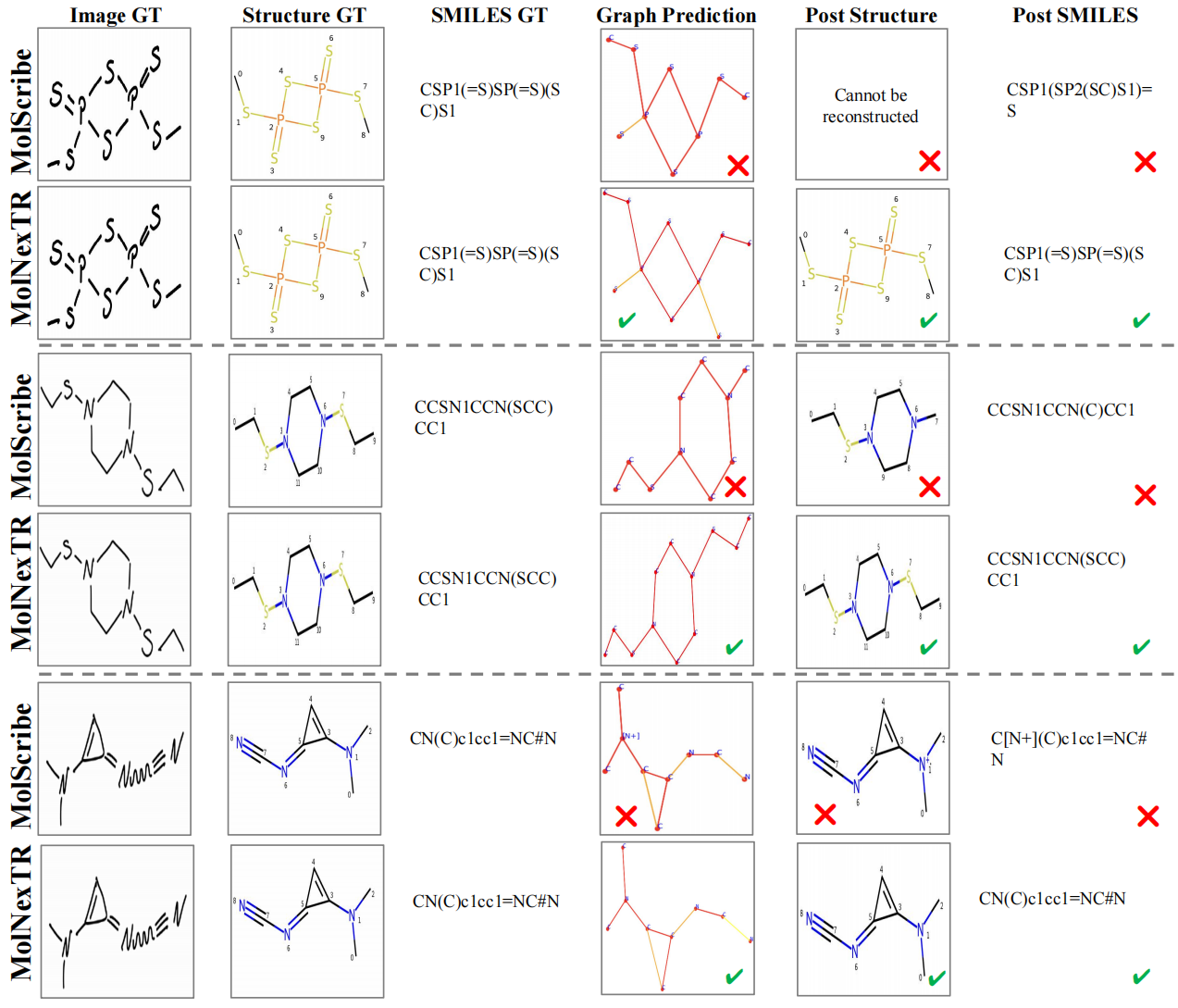

We also show some qualitative results of our method below: