This is the official code for the paper "ReactionImgMLLM: A Multimodal Large Language Model for Image-Based Reaction Data Extraction".

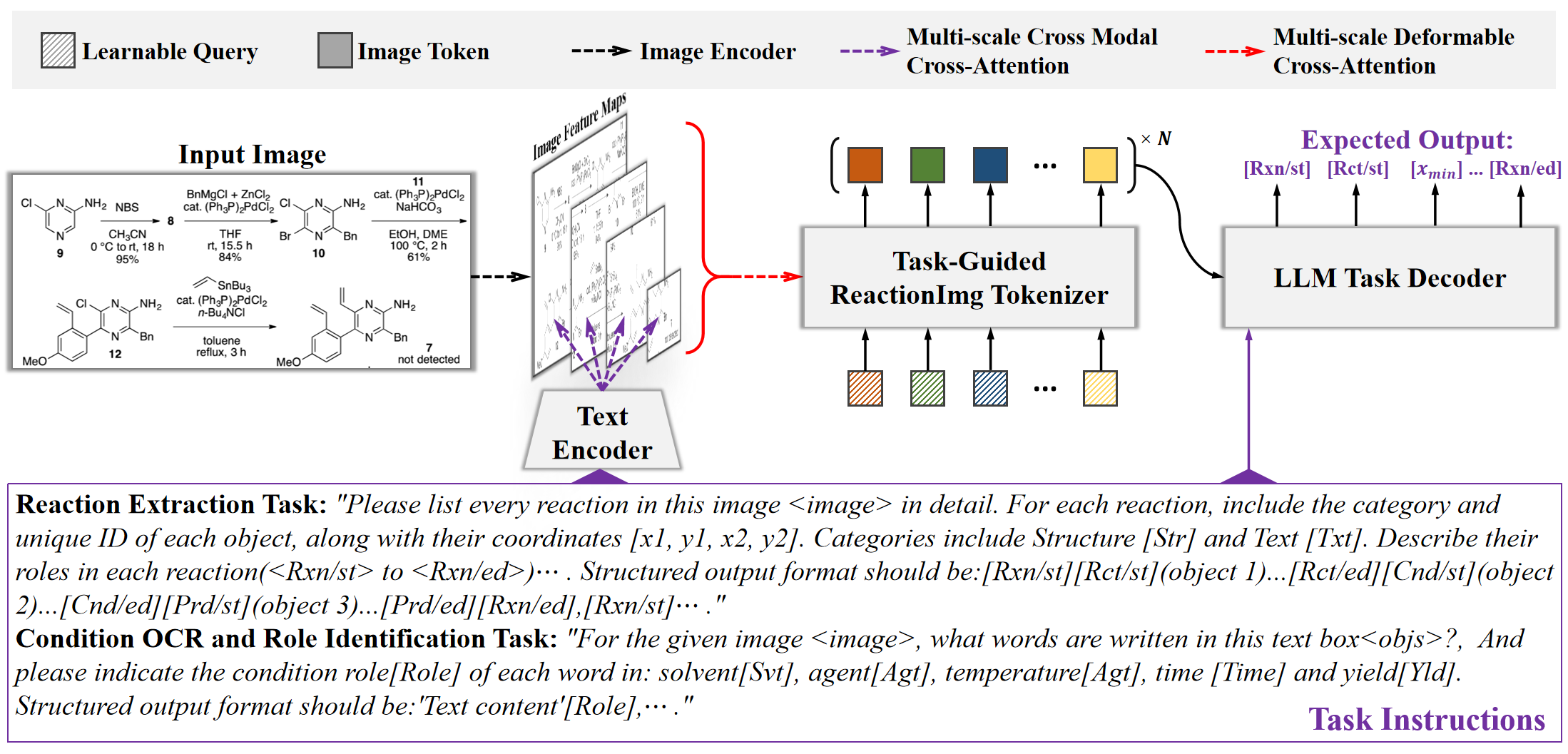

In this paper, we present ReactionImgMLLM, a multimodal large language model for reaction image data extraction tasks, including reaction extraction, condition OCR, and role identification. We first formulate these tasks as task instructions. The model then aligns the task instructions with features extracted from reaction images, and an LLM-based decoder makes predictions based on these instructions. For the reaction extraction task, our model achieves soft match F1 scores of 84%-92% on multiple test sets, significantly outperforming previous work. The experiments also demonstrate strong condition OCR and role identification abilities.
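For readers unfamiliar with the metric, the snippet below is a minimal sketch of how an F1 score is computed from match counts. It is not the evaluation code in this repository: the function name `f1_score` and the counts are hypothetical, and the soft match criterion itself (how a predicted reaction is judged to match a ground-truth reaction) is defined in the paper and is simply assumed here to have already produced `num_matched`.

```python
def f1_score(num_matched: int, num_predicted: int, num_gold: int) -> float:
    """Compute F1 from counts of matched, predicted, and ground-truth reactions.

    `num_matched` is assumed to come from some matching criterion
    (e.g. soft match); that criterion is not implemented here.
    """
    if num_matched == 0 or num_predicted == 0 or num_gold == 0:
        return 0.0
    precision = num_matched / num_predicted  # fraction of predictions that match
    recall = num_matched / num_gold          # fraction of ground truth recovered
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, for illustration only (not results from the paper).
print(f1_score(num_matched=3, num_predicted=4, num_gold=6))
```

With these illustrative counts, precision is 0.75 and recall is 0.5, giving an F1 of 0.6.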

Clone the repository and install the dependencies:

git clone https://github.com/CYF2000127/ReactionImgMLLM

pip install -r requirements.txt

For training and inference, please download the following datasets to your own path.

Alternatively, use the code in data_generation to generate any number of synthetic reaction images.

Note that you should first download the original Pistachio dataset and place it in the same folder as the code.

- Change the dataset names in `DEFAULT_TRAIN_DATASET.py` for different training stages.
- Run the following command:

sh train.sh

To evaluate the model, run the following command:

sh eval.sh

Run the following command to launch a Gradio web demo:

python mllm/demo/webdemo_re.py --model_path /path/to/shikra/ckpt

More model checkpoints are coming soon!

Our code is based on Shikra and VisionLLM. Thanks to the authors for their great work!