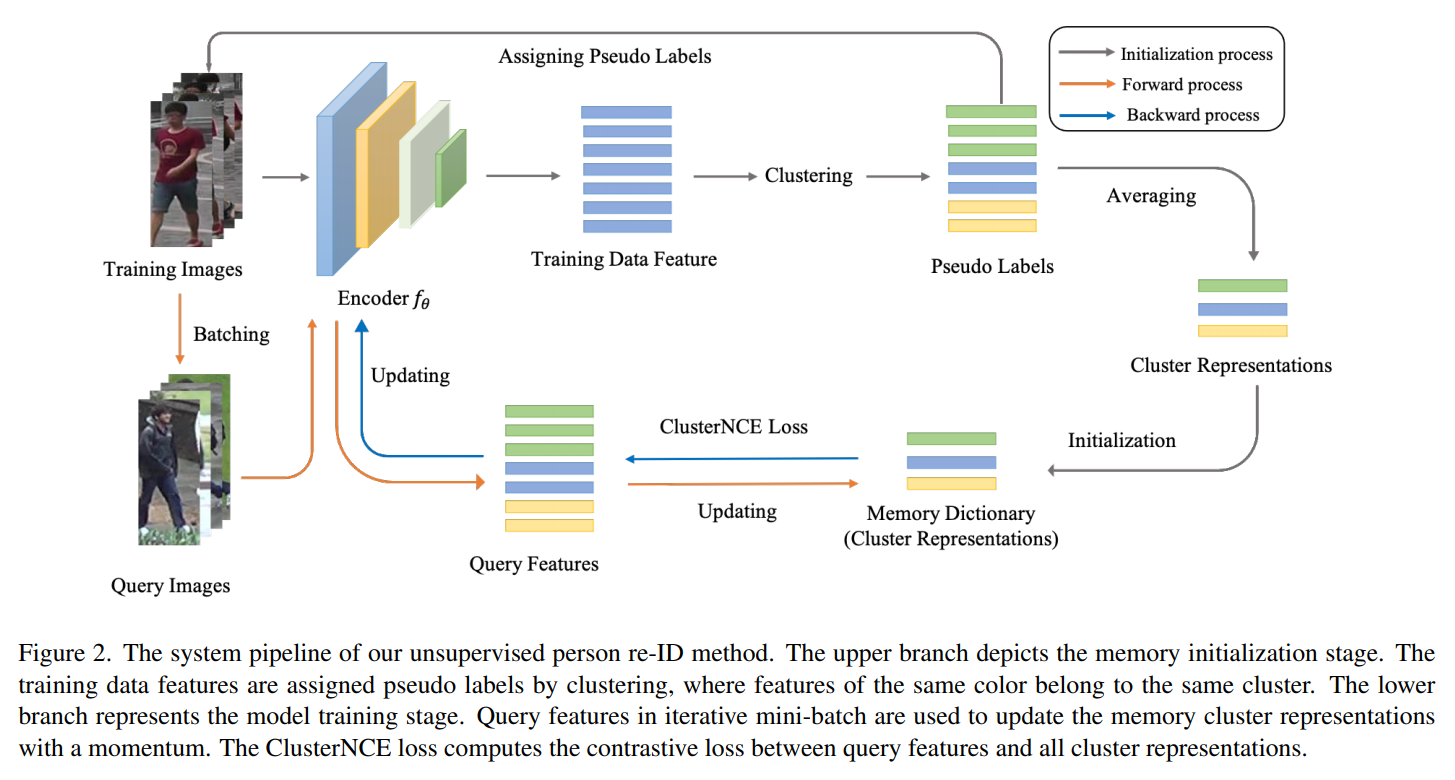

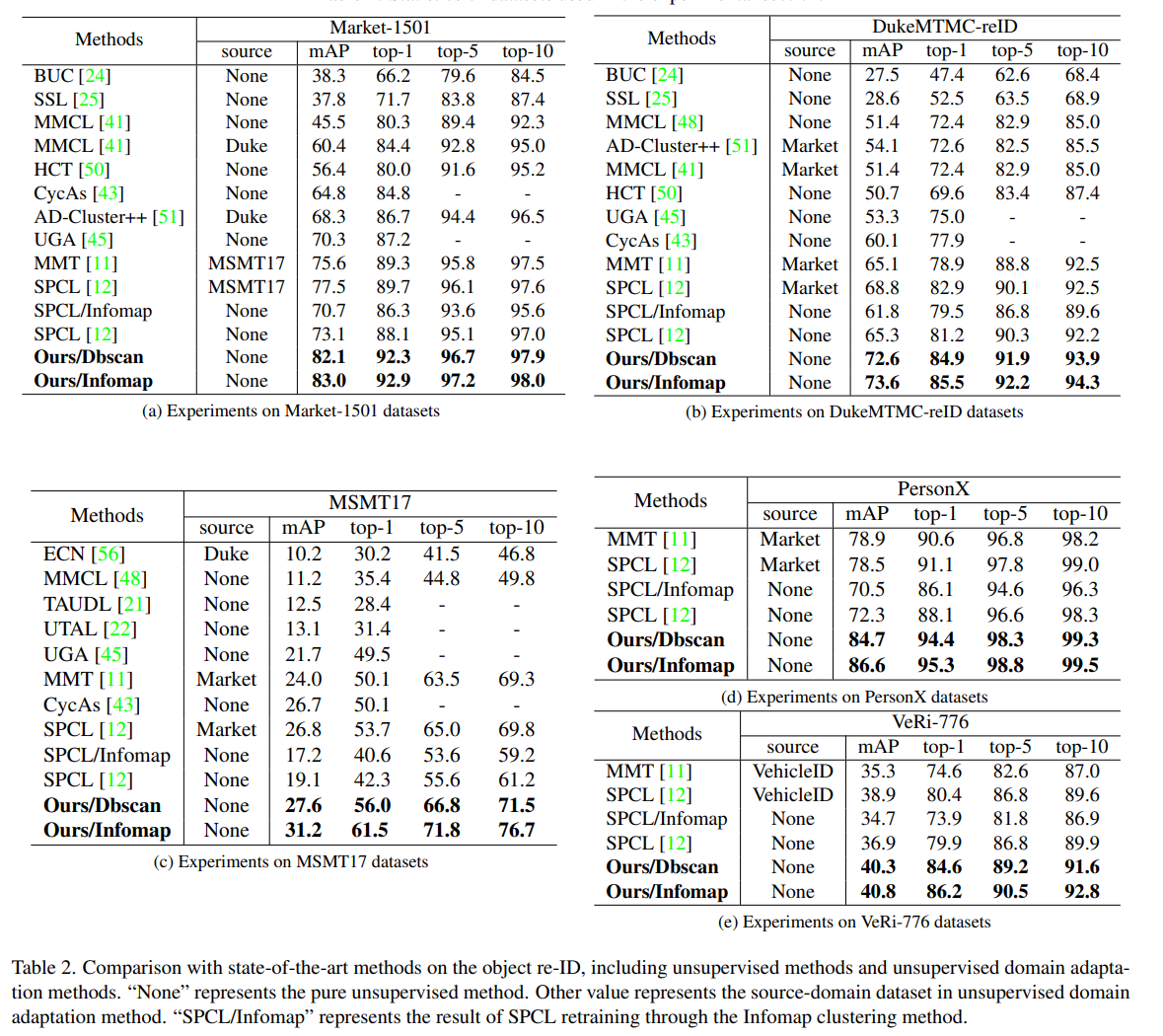

The official repository for Cluster Contrast for Unsupervised Person Re-Identification. We achieve state-of-the-art performance on unsupervised object re-ID tasks, including person re-ID and vehicle re-ID.

11/19/2021

- The memory dictionary update has changed from a batch hard update to a momentum update. The batch hard update is sensitive to hyperparameters and needs extensive tuning to reach good results, so it is not robust enough.
- Added results for the InfoMap clustering algorithm. Compared with DBSCAN, it achieves better results, and in our experiments it was also more robust across datasets.
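A minimal sketch of a momentum update for a cluster-level memory. It assumes each memory entry is an L2-normalized cluster centroid and that the update rule is `centroid <- m * centroid + (1 - m) * feature`, with `m` playing the role of the `--momentum` flag used in the training commands. The helper names `momentum_update` and `l2_normalize` are hypothetical and this is not the repository's implementation.

```python
import math


def l2_normalize(vec):
    """Return vec scaled to unit L2 norm (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def momentum_update(memory, label, feature, momentum=0.1):
    """Hypothetical helper: update the centroid of cluster `label` with one query feature.

    centroid <- momentum * centroid + (1 - momentum) * feature, then re-normalize.
    """
    old = memory[label]
    mixed = [momentum * c + (1.0 - momentum) * f for c, f in zip(old, feature)]
    memory[label] = l2_normalize(mixed)
    return memory[label]


# Toy memory holding two 2-D cluster centroids.
memory = {0: [1.0, 0.0], 1: [0.0, 1.0]}
momentum_update(memory, 0, l2_normalize([0.0, 1.0]), momentum=0.1)
print(memory[0])  # approximately [0.110, 0.994]
```

With a small momentum such as 0.1, each update keeps only 10% of the old centroid, so the memory follows recent features closely while each step still changes a single centroid smoothly instead of overwriting it.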

During our experiments, we found that some settings have a large impact on the results. We share them here to help others avoid pitfalls when applying our method.

- The dataloader uses the RandomMultipleGallerySampler sampler; see the code for details. We also provide a RandomMultipleGallerySamplerNoCam sampler, which can be used outside the re-ID field.
- A batch normalization layer is added to the final output layer of the network; see the code for details.
- Each mini-batch contains P × Z images, where P is the number of categories and Z is the number of instances per category. P is set to 16, and Z changes with the mini-batch size.
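The P × Z batch layout can be sketched as a simple identity-balanced sampler. This assumes `-b` is the full mini-batch size P × Z and `--num-instances` is Z (so `-b 256 --num-instances 16` gives P = 16). The function `pk_batches` is hypothetical: it ignores camera IDs, whereas, judging by the existence of RandomMultipleGallerySamplerNoCam, the repository's default sampler also takes camera information into account.

```python
import random
from collections import defaultdict


def pk_batches(labels, num_instances, batch_size, seed=0):
    """Yield lists of sample indices, each holding P x Z samples.

    Z = num_instances samples per category and P = batch_size // num_instances
    categories per batch. Hypothetical helper, not the repository's sampler.
    """
    if batch_size % num_instances != 0:
        raise ValueError("batch_size must be a multiple of num_instances")
    num_categories = batch_size // num_instances  # P
    rng = random.Random(seed)

    # Group sample indices by (pseudo-)label.
    indices_by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        indices_by_label[label].append(idx)

    label_order = list(indices_by_label)
    rng.shuffle(label_order)

    # Take P categories at a time; an incomplete final group is dropped.
    for start in range(0, len(label_order) - num_categories + 1, num_categories):
        batch = []
        for label in label_order[start:start + num_categories]:
            pool = indices_by_label[label]
            if len(pool) >= num_instances:
                batch.extend(rng.sample(pool, num_instances))  # without replacement
            else:
                batch.extend(rng.choices(pool, k=num_instances))  # with replacement
        yield batch


# -b 256 --num-instances 16  ->  P = 256 // 16 = 16 categories per mini-batch.
labels = [i // 20 for i in range(20 * 40)]  # toy data: 40 categories x 20 images
batches = list(pk_batches(labels, num_instances=16, batch_size=256))
print(len(batches), len(batches[0]))  # 2 256
```

Categories with fewer than Z images are sampled with replacement so every batch keeps the exact P × Z shape.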

```shell
git clone https://github.com/alibaba/cluster-contrast-reid.git ClusterContrast
cd ClusterContrast
python setup.py develop
```

```shell
cd examples && mkdir data
```

Download the person datasets Market-1501, MSMT17, PersonX and DukeMTMC-reID, and the vehicle dataset VeRi-776, from aliyun. Then unzip them under the directory as follows:

```
ClusterContrast/examples/data
├── market1501
│   └── Market-1501-v15.09.15
├── msmt17
│   └── MSMT17_V1
├── personx
│   └── PersonX
├── dukemtmcreid
│   └── DukeMTMC-reID
└── veri
    └── VeRi
```
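Before training, it can help to confirm that the unzipped folders match the layout above. The following is a hypothetical helper (not part of the repository) that only checks directory names copied from the tree, not the dataset contents; it assumes it is run from the repository root.

```python
from pathlib import Path

# Expected sub-directories under ClusterContrast/examples/data, copied from the tree above.
EXPECTED_LAYOUT = {
    "market1501": "Market-1501-v15.09.15",
    "msmt17": "MSMT17_V1",
    "personx": "PersonX",
    "dukemtmcreid": "DukeMTMC-reID",
    "veri": "VeRi",
}


def missing_dataset_dirs(data_root, datasets=None):
    """Return the expected dataset paths that do not exist under data_root."""
    root = Path(data_root)
    names = datasets if datasets is not None else EXPECTED_LAYOUT.keys()
    return [
        root / name / EXPECTED_LAYOUT[name]
        for name in names
        if not (root / name / EXPECTED_LAYOUT[name]).is_dir()
    ]


if __name__ == "__main__":
    # Report every expected dataset folder that is not present yet.
    for path in missing_dataset_dirs("examples/data"):
        print(f"missing: {path}")
```

Passing a subset such as `["market1501"]` checks only the datasets you actually plan to use.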

When training with the IBN-ResNet backbone, you need to download the ImageNet-pretrained model from this link and save it under examples/pretrained/.

ImageNet-pretrained models for ResNet-50 are downloaded automatically by the Python script.

We utilize 4 GTX-2080TI GPUs for training. For more parameter configurations, please check run_code.sh.

Examples:

Market-1501:

- Using DBSCAN:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl.py -b 256 -a resnet50 -d market1501 --iters 200 --momentum 0.1 --eps 0.6 --num-instances 16
```

- Using InfoMap:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl_infomap.py -b 256 -a resnet50 -d market1501 --iters 200 --momentum 0.1 --eps 0.5 --k1 15 --k2 4 --num-instances 16
```

MSMT17:

- Using DBSCAN:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl.py -b 256 -a resnet50 -d msmt17 --iters 400 --momentum 0.1 --eps 0.6 --num-instances 16
```

- Using InfoMap:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl_infomap.py -b 256 -a resnet50 -d msmt17 --iters 400 --momentum 0.1 --eps 0.5 --k1 15 --k2 4 --num-instances 16
```

DukeMTMC-reID:

- Using DBSCAN:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl.py -b 256 -a resnet50 -d dukemtmcreid --iters 200 --momentum 0.1 --eps 0.6 --num-instances 16
```

- Using InfoMap:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl_infomap.py -b 256 -a resnet50 -d dukemtmcreid --iters 200 --momentum 0.1 --eps 0.5 --k1 15 --k2 4 --num-instances 16
```

VeRi-776:

- Using DBSCAN:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl.py -b 256 -a resnet50 -d veri --iters 400 --momentum 0.1 --eps 0.6 --num-instances 16 --height 224 --width 224
```

- Using InfoMap:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/cluster_contrast_train_usl_infomap.py -b 256 -a resnet50 -d veri --iters 400 --momentum 0.1 --eps 0.5 --k1 15 --k2 4 --num-instances 16 --height 224 --width 224
```

We utilize 1 GTX-2080TI GPU for testing. Note that:

- use `--width 128 --height 256` (default) for person datasets, and `--height 224 --width 224` for vehicle datasets;
- use `-a resnet50` (default) for the ResNet-50 backbone, and `-a resnet_ibn50a` for the IBN-ResNet backbone.
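The training commands share most of their flags, so the per-dataset differences can be summarized in a small command builder. This is a hypothetical helper, not part of the repository: the values are transcribed from the example commands above, only the four datasets with example commands are covered (PersonX is not), and `shlex.join` requires Python 3.8 or newer.

```python
import shlex

# Values transcribed from the example training commands.
ITERS = {"market1501": 200, "dukemtmcreid": 200, "msmt17": 400, "veri": 400}
VEHICLE_DATASETS = {"veri"}


def build_train_command(dataset, clustering="dbscan", arch="resnet50", gpus="0,1,2,3"):
    """Hypothetical helper: assemble a training command in the style of the examples."""
    if clustering == "dbscan":
        script = "examples/cluster_contrast_train_usl.py"
        cluster_args = ["--eps", "0.6"]
    elif clustering == "infomap":
        script = "examples/cluster_contrast_train_usl_infomap.py"
        cluster_args = ["--eps", "0.5", "--k1", "15", "--k2", "4"]
    else:
        raise ValueError(f"unknown clustering method: {clustering}")

    args = ["python", script, "-b", "256", "-a", arch, "-d", dataset,
            "--iters", str(ITERS[dataset]), "--momentum", "0.1",
            *cluster_args, "--num-instances", "16"]
    # Person datasets keep the default 256x128 input; vehicle datasets use 224x224.
    if dataset in VEHICLE_DATASETS:
        args += ["--height", "224", "--width", "224"]
    return f"CUDA_VISIBLE_DEVICES={gpus} " + shlex.join(args)


print(build_train_command("veri", clustering="infomap"))
```

For example, `build_train_command("market1501", arch="resnet_ibn50a")` produces the Market-1501 DBSCAN command with the IBN-ResNet backbone, which still needs the pretrained weights under examples/pretrained/.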

To evaluate the model, run:

```shell
CUDA_VISIBLE_DEVICES=0 \
python examples/test.py \
  -d $DATASET --resume $PATH
```

Some examples:

```shell
### Market-1501 ###
CUDA_VISIBLE_DEVICES=0 \
python examples/test.py \
  -d market1501 --resume logs/spcl_usl/market_resnet50/model_best.pth.tar
```

You can download the models reported in the paper from aliyun.

If you find this code useful for your research, please cite our paper:

```bibtex
@article{dai2021cluster,
  title={Cluster Contrast for Unsupervised Person Re-Identification},
  author={Dai, Zuozhuo and Wang, Guangyuan and Zhu, Siyu and Yuan, Weihao and Tan, Ping},
  journal={arXiv preprint arXiv:2103.11568},
  year={2021}
}
```

Thanks to Yixiao Ge for open-sourcing his excellent work, SpCL.