-

17/02/2023 - Addition of Optimistic Initialization Algorithm.

-

16/02/2023 - Addition of the Softmax Algorithm.

-

14/02/2023 - Correction of regret computation, cumulative regret is visually logarithmic on figures.

-

03/01/2023 - Addition of Epsilon Greedy Decay algorithm.

-

26/12/2022 - Completed CS50 Project.

A video description of my project, required to be below 3 minutes by CS50. For an in-depth explanation of the code, please continue and read the full document. Video Demonstration

| Term | Notation | Description |

|---|---|---|

| Action | Action taken by the agent at time step |

|

| Observation | Observation received by the agent from the environment after taking and action. | |

| Reward | Scalar feedback signal for an action. | |

| State | The state of the environment or the internal state of the agent. | |

| Return | Cumulative reward over time steps. |

Here I will be exploring reinforcement learning (RL), a type of machine learning that aims to interact with an environment in order to improve. Unlike machine learning methods you are probably used to (do not worry if you are not) RL needs to interact with this environment in order to learn. A great example of RL comes from DeepMind where they produced an agent that learnt to play atari games to a human level and above! This project will not be tackling tasks at that level however, I will be exploring what is called "The multi-armed bandit problem". This is a much simpler version of the full RL problem and can be thought of like going to the casino and playing the slot machines. I will explain it in much more detail below, if you are familiar with the multi-armed bandit problem and the algorithms implemented feel free to just jump straight to the code.

Important Files.

- main.py - Where the environment and agent class instances are created, alongside some function calls for plots.

- bandit_env.py - The code for the multi-armed bandit environment.

- bandit_algos.py - The code for each of the multi-armed bandit algorithms.

- plotting.py - Code for all the plots you can make.

Once this project is complete, feel free to clone the repo and have a play around with the code, I will also include my references with links to their blogs/code at the bottom of this file.

You are at a casino, there are plenty of slot machines around you. these are the one armed bandits, (named according because slot machines used to have a big leaver to pull). Each of these bandits return to us some reward (this could be positive or negative), but we do not know what that reward is. What is the best strategy to obtain the most profit? Which bandits should we pull the most so we leave with the most money? Or do we just let luck do its thing and hope we leave with more money than we entered with?

This problem is also called the "explore or exploit" problem. The idea of when should we explore for more knowledge (try out that bandit we haven't pulled yet to see if it gives us more profit), or exploit the knowledge we have already (stick on the bandit we know is currently giving us the most profit). Researchers have been looking at this problem and have implemented some very cool algorithms to try and solve this problem! This project looks to implement and explore them.

We need the environment to do several things for this problem. 1st, we need to set the number of bandits we need, the mean return of each bandit and the probability of us getting that reward. For now I am going to implement a stationary bandit, where the reward remains the same throughout each game. However, later on, I will implement a bandit where the returns are dynamic, sampled from a reward distribution. This should make the problem slightly harder for the algorithms to learn from.

For this set up, we are going to create and environment class, where we can create instances of the environment and pass them in as parameters when we are creating our agents.

The environment must do certain things.

- Take in an array or rewards and reward probabilites as arguments.

- Given an action, return the reward to the agent.

To create the environment, I will be using OpenAI's Gym.

There are plenty of algorithms that have been created to solve bandit problems (Do not worry if you do not know how they work, I will to explain how each of them works). In this implementation of the problem, our agent will take in the environment as a parameter and conduct its actions. The actions the agent takes and rewards of its actions will be stored and our agent should present these results to a few plotting functions that will create some nice graphs for us.

The agents I plan to implement are:

- Random Agent.

- Greedy Agent.

-

$\epsilon$ -greedy Agent. -

$\epsilon$ -Greedy Decay Agent (Added after submission to CS50). - Upper Confidence Bound (UCB) Agent.

- Thompson Sampling Agent.

- Softmax Agent (Added after submission to CS50).

- Optimistic Initialization Agent (Added after submission to CS50).

This agent has no concept of what is going on, they simply just pull whatever bandit they want, regardless of reward, or how many times they have pulled it. This idea can be thought of as simply closing your eyes at the casino and pulling any of the slot machines you fancy.

Before discussing this agent, I first need to introduce a concept of evaluating our actions, this allows us to learn about what actions are better than others.

Let us define the Action Value.

The action value

Our estimate of the action value at time step

However, storing all of the rewards and computing this will be really inefficient when are selecting actions in a larger series. Instead we can incrementally update our estimate of the action value using the equation below:

Where

Now that we have covered the theory and maths of the action value and how we update it, lets talk about the Greedy Agent.

Unlike the random agent that selects its actions randomly, the greedy agent will pick the action that has the highest estimate. Its policy is defined as:

This means that the greedy agent will pick action

This of course has disadvantage of falling into suboptimal decisions.

Consider the following:

There are 4 bandits that the greedy algorithm can select, with values of [1, 2, 3, 4] respectively, they all pay out with a probability of 1. All action values are initialized to 0, meaning that the agent will select an action at random (this is called breaking ties randomly). If the agent picks bandit 4 randomly, then HAPPY DAYS! We update our action value for that action, giving it a value higher than 0, and the agent will continue to select that action for the rest of the game. However, if we picked action 1, 2, or 3 we would remain stuck in a suboptimal action for the rest of the game. This is not what we want.

If the bandits pay out with a certain probability, consider the bandits above, but they pay out with probability [0.3, 0.8, 0.4, 0.2], we get a similar situation. This time the agent will pull arms randomly until it finally gets a reward.

Of course this is not the best algorithm if we want to leave the casino with the most money. But if you run the greedy agent against the random agent a few times, if we are lucky, the agent will select the highest paying bandit, meaning it will outperform the random agent.

Now that we have explored the greedy agent, we understand that an RL agent can compute an value of an action "$Q_t(a)$" that allows it to reason on what actions to choose. However, the greedy agent will commit to the first bandit that rewards it, which means it can find suboptimal solutions really easily. Researchers have come up with an algorithm, the "$\epsilon$-greedy algorithm" that allows the agent to exploit the knowledge it has gotten so far, but with a certain probability

The policy of an

Where

This is a very popular algorithm, although in this case its very simple, it has been used to great effect. The DeepMind Atari playing agent uses an

Exploring with probability

This is why I have implemented an

The UCB agent, is the first agent I am implementing that shows "optimism is the face of uncertainty". What does this mean? UCB allows us to quantify not just the action value estimate but how confident the agent is about that estimate. If we are more unsure about an action, lets be optimistic about its value.

Lets look at how the UCB takes actions.

Meaning that action

But how do we compute our confidence of our action estimate?

Sit down, get a coffee and get ready for some math.

Hoeffding's Inequality

Consider the following.

Let

The probability of our sample mean, plus some value

We can apply Hoeffding's inequality to the bandit problem.

Where the sample mean

We want to pick the bound

We then solve for

Now lets set

There are other values for

Furthermore, we can remove the 2 from the denominator and start to treat it as a hyper-parameter

If c is larger, we will explore more, if c is smaller we will explore less. In the code (see update_confidence function in the UCBAgent) I have set this as default to 2, feel free to explore different values for

Thompson Sampling implements Bayesian updating in order to select actions, it is a simple computation but it works really well! In the experiments I have run, it will constantly outperform the UCB and

Thompson Sampling selects its actions according to our belief that the action is the optimal action. To understand Thompson Sampling, I need to walk you through the Bayesian approach at multi-armed bandits and probability matching, as both of these topics should help our understanding of the Thompson Algorithm.

The Bayesian Approach

With the Bayesian approach, we are modeling distributions over the values

Using these posterior distributions, we can guide which actions should be taken.

Probability Matching.

In probability matching, we select action

Where

Thompson Sampling.

In Thompson Sampling, we still keep track of the posterior distributions of each action, and we will use these distributions to sample an actual action value. We sample each distribution for each action, giving us an action value for each action.

We then select action according to:

If we follow this, Thompson Sampling will have a policy as such:

Note that this is the exact same as probability matching.

But using Thompson Sampling we do not have to compute the probabilites of each action being taken. We simply just sample the distributions and pick our actions greedily.

Thing of Thompson Sampling as a way of using the posterior distributions for each action to select and action we can take.

In the code, the prior distribtuions for each action is a uniform distribution between [0,1], and the posterior distributions are beta distributions with the parameters

I am quite a visual learner, this website really helped me understand how changing the alpha and beta parameters of our posterior distributions effects the samples we will get from it. I encourage you to go here and play around with the values and understand how incrementing the values in the posterior distribution will allow us to select optimal actions.

The softmax approach to the multi-armed bandit problem takes into account the Q values of each action, sampling an action from a distribution that is proportional to each actions value. This is such that actions with higher Q values have a higher probability of being chosen.

Softmax has an important hyperparameter called temperature, this is denoted with $ \tau $ (tau). This controls the algorithms sensitivity to differences in the Q values of each action. If $ \tau $ is approaching infinity, then all actions will be sampled from a uniform distribution. If $ \tau $ is approaching 0, then the action with the highest Q value will be selected with probability 1. Although in practice, researchers tend to just use really big or really small positive numbers as you will see below with the policy for Softmax we need to divide by $ \tau $ making 0 an impractical number. Much like

The policy of the Softmax algorithm is such:

Optimistic Initialization is a rather simple but effective approach to multi-armed bandits. I like to think of it as the Greedy algorithms clever sibling. Much like the greedy algorithm, Optimistic Initialization selects its actions by choosing the action with the highest Q value estimate at that iteration. Where it differs, is the set up of the problem itself.

When initializing the agent, instead of setting all Q values to 0, we set them to the highest reward possible (in this implementation I am using Bernoulli bandits so it is 1). Alongside the Q values, we also initialize the counts to a higher value. Why? Well as the algorithm starts to select actions, it will be consistently disappointed with the results it is getting, which will reduce the Q value of that action. But since all of the actions have been initialized to high values, the agent will go and explore another action, this repeats until the agent settles on the action with the highest source of reward. Initializing the Q value to a high number encourages the agent to explore actions that have not been explored enough.

The more the agent selects an action, the close and closer it will converge to its true value. Eventually, Optimistic Initialization will end up on the best option.

It is worth noting that we need to know the highest reward possible in the environment if we want to get this correct. This is a downside of using this algorithm, if the MDP was not available to use (it is here) we would not be able to use Optimistic Initialization effectively. If we set the value too high, we do not converge quickly enough on the correct action, if we set it too low, the algorithm will no longer be "optimistic" about the Q values so will not work.

Furthermore, setting the counts to a higher value is a representation of our uncertainty about the estimate, we find the optimal counts bonus with tuning, but we are having to place our cards down on the table at the start of each experiment which is impractical, we would much rather compute uncertainty about our estimates on the fly, like in the UCB algorithm.

There we have it, we have explored quite a lot in this final project. We have spoken about multi-armed bandits, the environment for them, and the algorithms that aim to solve them. Hopefully you now feel confident enough to go through my code and see how I have implemented all of these algorithms. This repo is not aimed to make it the most efficient, I instead wanted to show that how these algorithms work and implement my first project in reinforcement learning. I hope you enjoy!!!

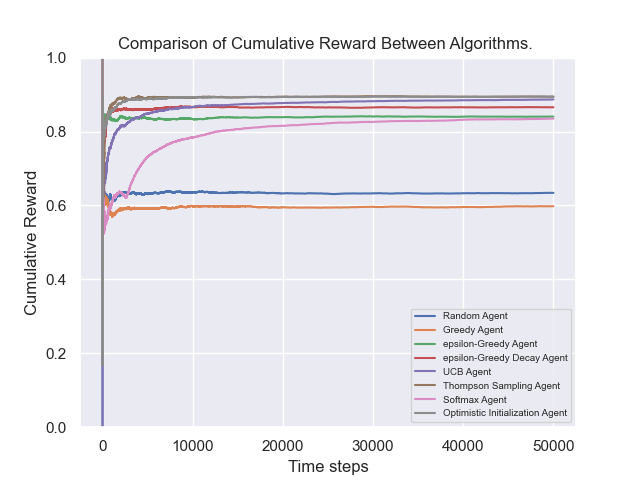

Here is the figure of the cumulative reward of each agent from an experiment I ran using this code, there are many more in the plotting code, but this give the best overview of each algorithms performance.

-

DeepMind x UCL Lectures - Lectures 1 and 2 helped my mathematical and theoretical understanding of RL, multi-armed bandits and the algorithms.

-

Alejandro Aristzabal - Has a great series on the multi-armed bandit problem, with code.

-

Edward Pie - Great explanation with some example code.

-

Mattia Cinelli - Has a really detailed repo of RL examples, great to look at for multi armed bandits and beyond.

-

Lilian Wang - Blog on the maths behind the algorithms, there is also some code she has written for an implementation of the problem.

-

Deep Reinforcement Learning - A great book for RL, I am currently going through it.