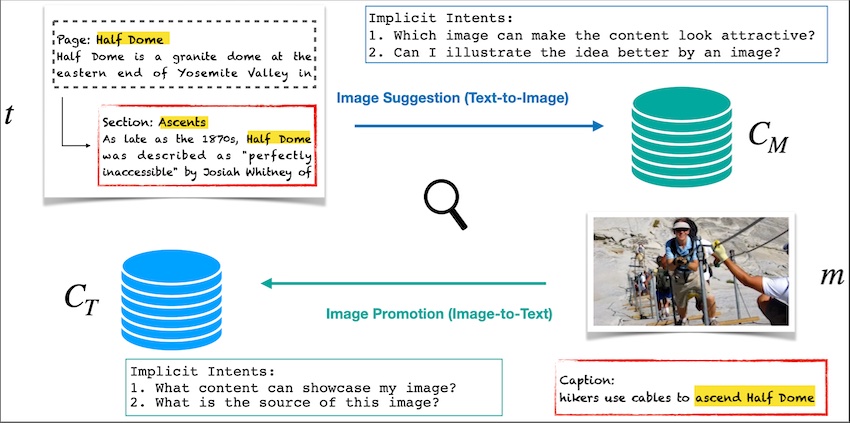

The AToMiC dataset is a large-scale image/text retrieval test collection designed to aid in multimedia content creation. The dataset is composed of approximately 10 million images and texts, and there are 4 million image--text binary relevance judgments available. We formulate two retrieval tasks: image suggestion and image promotion. The objectives of these tasks are, respectively, to identify images that complement a given text and to identify texts that correspond to a given image.

Learn more about the AToMiC dataset in our arXiv paper.

```shell
pip install datasets
```

```python
from datasets import load_dataset

dataset = load_dataset("TREC-AToMiC/AToMiC-Images-v0.2", split='train')

print(dataset[0])
```

We can use HuggingFace's Datasets and Transformers to explore the AToMiC Dataset. You can find their great documentation in the following links:

- Transformers: >=4.26.0

- Datasets: >=2.8.0

To get started with the AToMiC dataset, we refer you to the following locations:

- Notebooks: a series of notebooks for playing with AToMiC with 🤗 Datasets and Transformers

The files are stored in Parquet format. Each row in the file corresponds to a Wikipedia section prepared from the 20221101 English Wikipedia XML dump.

The basic data fields are: page_title, hierachy, section_title, context_page_description, and context_section_description.

There are other fields, such as media, category, and source_id, that are for our internal use.

Note that we set Introduction as the section title for leading sections.
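As a rough illustration of how the basic text fields might be combined into a single string for indexing or encoding, here is a minimal sketch. The field names come from the list above; the helper name `section_to_text`, the separator, and the sample row are hypothetical and are not part of the dataset tooling.

```python
def section_to_text(row, sep=" | "):
    """Join the title and description fields of one text row into a single string.

    `row` is assumed to be a dict-like record with the basic fields listed above.
    Missing or empty fields are skipped.
    """
    fields = (
        "page_title",
        "section_title",
        "context_page_description",
        "context_section_description",
    )
    parts = [str(row[f]).strip() for f in fields if row.get(f)]
    return sep.join(parts)


# Hypothetical row, made up for illustration only.
example_row = {
    "page_title": "Example page",
    "section_title": "Introduction",
    "context_page_description": "A short page summary.",
    "context_section_description": "",
}
print(section_to_text(example_row))
```

Which fields to include, and in what order, is a design choice that depends on the retrieval model; this sketch simply keeps every non-empty field.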

The total size of the text collection is approximately 14 GB.

The images are stored in the Parquet format, with each row representing an image that has been crawled from the Wikimedia Commons database.

The image data is stored as bytes of a PIL.WebPImagePlugin.WebPImageFile object, along with other metadata including reference, alt-text, and attribution captions.

Additionally, the language_id field provides a list of language identifiers indicating the language of the Wikipedia captions for each image.
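Since language_id holds a list, selecting images by caption language comes down to a membership test. The sketch below assumes the identifiers are short codes such as "en"; that format, the helper name `has_language`, and the sample rows (including their image_id values) are assumptions made for illustration.

```python
def has_language(row, lang):
    """Return True if `lang` is among the row's caption languages."""
    return lang in (row.get("language_id") or [])


# Hypothetical rows; real rows also carry the image bytes and captions.
rows = [
    {"image_id": "img-1", "language_id": ["en", "fr"]},
    {"image_id": "img-2", "language_id": ["de"]},
    {"image_id": "img-3", "language_id": []},
]
english = [r["image_id"] for r in rows if has_language(r, "en")]
print(english)
```

With a loaded 🤗 Datasets object, the same predicate could be passed to `dataset.filter(lambda row: has_language(row, "en"))`.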

Please note that the total size of the image collection is approximately 180 GB.
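Given the size of the collection, it may be convenient to look at a few rows without downloading everything first. The sketch below uses the `streaming=True` option of `load_dataset`, which yields rows lazily; the helper names `head` and `peek_images` are hypothetical, and `peek_images` needs network access and the `datasets` package when it is called.

```python
from itertools import islice


def head(rows, n=3):
    """Return the first `n` items of any iterable without consuming the rest."""
    return list(islice(rows, n))


def peek_images(n=3):
    """Stream the first `n` rows of the image collection (requires network access)."""
    # Imported here so that `head` stays usable without the `datasets` package.
    from datasets import load_dataset

    stream = load_dataset(
        "TREC-AToMiC/AToMiC-Images-v0.2", split="train", streaming=True
    )
    return head(stream, n)
```

Calling `peek_images(2)` should return a list of two row dictionaries, fetched on demand rather than from a full local copy.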

The relevance judgments are formatted in standard TREC qrels format, as follows:

text_id Q0 image_id relevance

By default, the qrels are set up for the text-to-image retrieval task.

Each row in a qrels file represents a relevant image--text pair from the text and image collections.

To support the image-to-text retrieval task, you only need to swap the positions of text_id and image_id.
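The qrels layout and the column swap described above can be sketched in a few lines of plain Python. The function names `parse_qrels` and `swap_direction` and the sample identifiers are hypothetical; the sketch assumes whitespace-separated columns and identifiers that contain no spaces.

```python
from collections import defaultdict


def parse_qrels(lines):
    """Parse qrels lines into {query_id: {doc_id: relevance}}."""
    qrels = defaultdict(dict)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        query_id, _q0, doc_id, relevance = line.split()
        qrels[query_id][doc_id] = int(relevance)
    return dict(qrels)


def swap_direction(lines):
    """Rewrite text-to-image qrels lines as image-to-text lines."""
    swapped = []
    for line in lines:
        if not line.strip():
            continue
        text_id, q0, image_id, relevance = line.split()
        swapped.append(f"{image_id} {q0} {text_id} {relevance}")
    return swapped


# Hypothetical identifiers, for illustration only.
t2i_lines = ["text-1 Q0 image-a 1", "text-1 Q0 image-b 1", "text-2 Q0 image-a 1"]
i2t = parse_qrels(swap_direction(t2i_lines))
print(i2t)
```

After the swap, each image identifier acts as the query and the texts it is relevant to become the judged documents.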

If you find this resource useful, please consider citing our paper and the WIT paper.

```bibtex
@article{yang2023atomic,
  title={AToMiC: An Image/Text Retrieval Test Collection to Support Multimedia Content Creation},
  author={Jheng-Hong Yang and Carlos Lassance and Rafael Sampaio de Rezende and Krishna Srinivasan and Miriam Redi and Stéphane Clinchant and Jimmy Lin},
  journal={arXiv preprint arXiv:2304.01961},
  year={2023}
}

@article{srinivasan2021wit,
  title={WIT: Wikipedia-based Image Text Dataset for Multimodal Multilingual Machine Learning},
  author={Srinivasan, Krishna and Raman, Karthik and Chen, Jiecao and Bendersky, Michael and Najork, Marc},
  journal={arXiv preprint arXiv:2103.01913},
  year={2021}
}
```

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

If you have any questions, please contact: jheng-hong.yang@uwaterloo.ca or trec-atomic-organizers@googlegroups.com