This is the official repository for Multimodal Learning with Alternating Unimodal Adaptation (MLA), the method proposed in our paper "Multimodal Representation Learning by Alternating Unimodal Adaptation", published at the 40th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2024).

If you find this toolkit useful, please consider citing the following paper.

@InProceedings{Zhang_multimodal,
  author = {Zhang, Xiaohui and Yoon, Jaehong and Bansal, Mohit and Yao, Huaxiu},
  title = {Multimodal Representation Learning by Alternating Unimodal Adaptation},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2024},
  pages = {27456-27466}
}

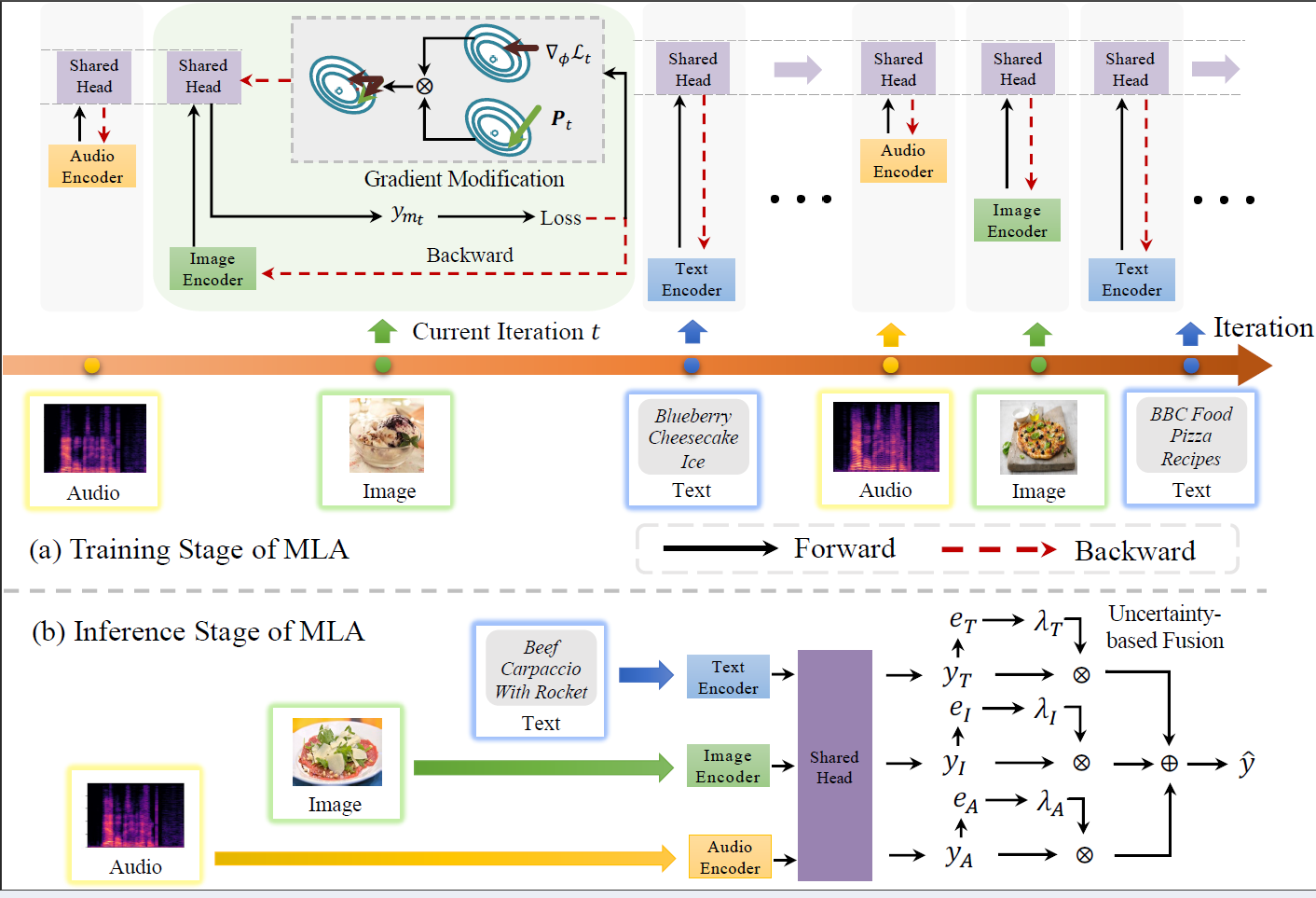

Existing multimodal learning methods often struggle with challenges where some modalities appear more dominant than others during multimodal learning, resulting in suboptimal performance. To address this challenge, we propose MLA (Multimodal Learning with Alternating Unimodal Adaptation). MLA reframes the conventional joint multimodal learning process by transforming it into an alternating unimodal learning process, thereby minimizing interference between modalities. Simultaneously, it captures cross-modal interactions through a shared head, which undergoes continuous optimization across different modalities. This optimization process is controlled by a gradient modification mechanism to prevent the shared head from losing previously acquired information. During the inference phase, MLA utilizes a test-time uncertainty-based model fusion mechanism to integrate multimodal information.
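To make the test-time fusion idea more concrete, here is a minimal sketch in plain Python. It assumes that uncertainty is measured by the entropy of each modality's softmax prediction and that fusion weights come from a softmax over negative entropies. Both choices, and the function names `softmax`, `entropy`, and `fuse_by_uncertainty`, are illustrative assumptions and may differ from what this repository actually implements.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy of a probability vector (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def fuse_by_uncertainty(logits_per_modality):
    """Fuse per-modality class logits for a single sample.

    Assumption (not taken from the repository): a modality with lower
    prediction entropy is treated as more certain and gets a larger weight.
    """
    names = list(logits_per_modality)
    probs = {name: softmax(logits_per_modality[name]) for name in names}
    # Softmax over negative entropies: low entropy -> high weight.
    weights = softmax([-entropy(probs[name]) for name in names])
    n_classes = len(probs[names[0]])
    fused = [
        sum(w * probs[name][c] for w, name in zip(weights, names))
        for c in range(n_classes)
    ]
    return fused, dict(zip(names, weights))


if __name__ == "__main__":
    # Hypothetical logits: audio is confident, visual is nearly uniform.
    fused, weights = fuse_by_uncertainty(
        {"audio": [4.0, 0.0, 0.0], "visual": [0.2, 0.1, 0.0]}
    )
    print(weights)
    print(fused)
```

In this toy example the confident audio prediction receives the larger weight, so the fused distribution leans toward the class that audio predicts.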

- We have released several checkpoints trained with MLA. You can find them here.

You can use the following commands to create your environment:

conda create -n MLA python=3.8

pip install -r requirements.txt

- Download your dataset.

- Data pre-processing
  - You can convert mp4 files to wav audio using `python data/mp4_to_wav.py`.
  - Then extract acoustic fbank features using `python data/extract_fbank.py`, or spectrum features using `python data/extract_spec.py`.
  - You can extract textual tokens using `python data/extract_token.py`.
  - You can process and extract visual features using `python data/video_preprocessing.py`.

- Generate datalist
  - Use `python data/gen_stat.py` to create the label list.
  - Use `python data/gen_{dataset_name}_txt.py` to create the data list.
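As an illustration of the mp4-to-wav step in the pre-processing list above, the following sketch builds and optionally runs an `ffmpeg` command for each video in a folder. It is not the contents of `data/mp4_to_wav.py`: the helper names `build_ffmpeg_cmd` and `convert_dir`, the 16 kHz mono output, and the flat folder layout are all assumptions, and `ffmpeg` must be installed separately.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_cmd(mp4_path, wav_path, sample_rate=16000):
    """Return an ffmpeg argument list that extracts mono 16-bit PCM audio."""
    return [
        "ffmpeg", "-y",            # overwrite the output file if it exists
        "-i", str(mp4_path),       # input video
        "-vn",                     # drop the video stream
        "-acodec", "pcm_s16le",    # 16-bit PCM wav
        "-ac", "1",                # mono (assumed)
        "-ar", str(sample_rate),   # sample rate in Hz (assumed)
        str(wav_path),
    ]


def convert_dir(src_dir, dst_dir, sample_rate=16000, dry_run=False):
    """Convert every *.mp4 in src_dir to a wav file in dst_dir.

    With dry_run=True the commands are only returned, not executed.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    commands = []
    for mp4 in sorted(src_dir.glob("*.mp4")):
        cmd = build_ffmpeg_cmd(mp4, dst_dir / (mp4.stem + ".wav"), sample_rate)
        commands.append(cmd)
        if not dry_run:
            dst_dir.mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)
    return commands
```

Running `convert_dir("videos", "audio", dry_run=True)` first is a cheap way to inspect the generated commands before any file is written; the folder names here are placeholders.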


python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb base --modulation Normal --epochs 100 --dataset CREMAD

python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb base --modulation OGM (-GE) --epochs 100 --dataset CREMAD

python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb base --modulation QMF --epochs 100 --dataset CREMAD

python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb base --modulation Normal --epochs 100 --dataset CREMAD --gs_flag

python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb base --modulation Normal --epochs 100 --dataset CREMAD --gs_flag -dynamic
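If you want to run several of the CREMA-D configurations above back to back, a small launcher script can assemble the command lines for you. The sketch below only reuses flags and values that appear literally in the commands above; the names `BASE`, `VARIANTS`, and `build_commands` are hypothetical, and the `OGM (-GE)` line is represented by `OGM` alone because the exact spelling of the GE variant is not shown.

```python
import shlex

# Arguments shared by all CREMA-D training commands listed above.
BASE = [
    "python", "main.py", "--train",
    "--ckpt_path", "ckpt",
    "--gpu_ids", "0",
    "--batch_size", "64",
    "--lorb", "base",
    "--epochs", "100",
    "--dataset", "CREMAD",
]

# (modulation value, extra flags) pairs copied from the commands above.
VARIANTS = [
    ("Normal", []),
    ("OGM", []),
    ("QMF", []),
    ("Normal", ["--gs_flag"]),
    ("Normal", ["--gs_flag", "-dynamic"]),
]


def build_commands():
    """Return one argument list per training configuration."""
    return [BASE + ["--modulation", mod] + extra for mod, extra in VARIANTS]


if __name__ == "__main__":
    # Print the commands; pipe them to a shell or pass each list to
    # subprocess.run to actually launch training.
    for cmd in build_commands():
        print(shlex.join(cmd))
```

Printing the commands rather than launching them directly keeps the script safe to run on a machine without a GPU.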

For Food101 and MVSA, the commands are the same as for CREMA-D, with a few changes. For example (pass --dataset MVSA instead to train on MVSA):

python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb m3ae --modulation Normal --epochs 100 --dataset Food101 --gs_flag -dynamic

For IEMOCAP, the commands are the same as for CREMA-D, with a few changes. For example:

python main.py --train --ckpt_path ckpt --gpu_ids 0 --batch_size 64 --lorb m3ae --modulation Normal --epochs 100 --dataset IEMOCAP --gs_flag -dynamic --modal3

Coming soon...

python main.py --ckpt_path ckpt_path --gpu_ids 0 --batch_size 64 --lorb base --modulation Normal --dataset CREMAD (--gs_flag)