This README presents the proposed solution to the assigned task, a detailed explanation of that solution, the answer to the bonus question and some suggestions for improvement.

There are two repositories for this project:

The application repository (this one) contains the webserver app, developed in Python, and its pipeline.

The infrastructure repository contains the Terraform code and its pipeline. Repository URL: https://gitlab.com/astrolu/infrastructure-oper-challenge/

I created two DNS records to quickly reach the cluster and the service from outside. More specifically:

- cluster.lucacesarano.com points at the k8s cluster

- service.lucacesarano.com points at the external-ip of the load balancer service that points at the deployment of the python webserver

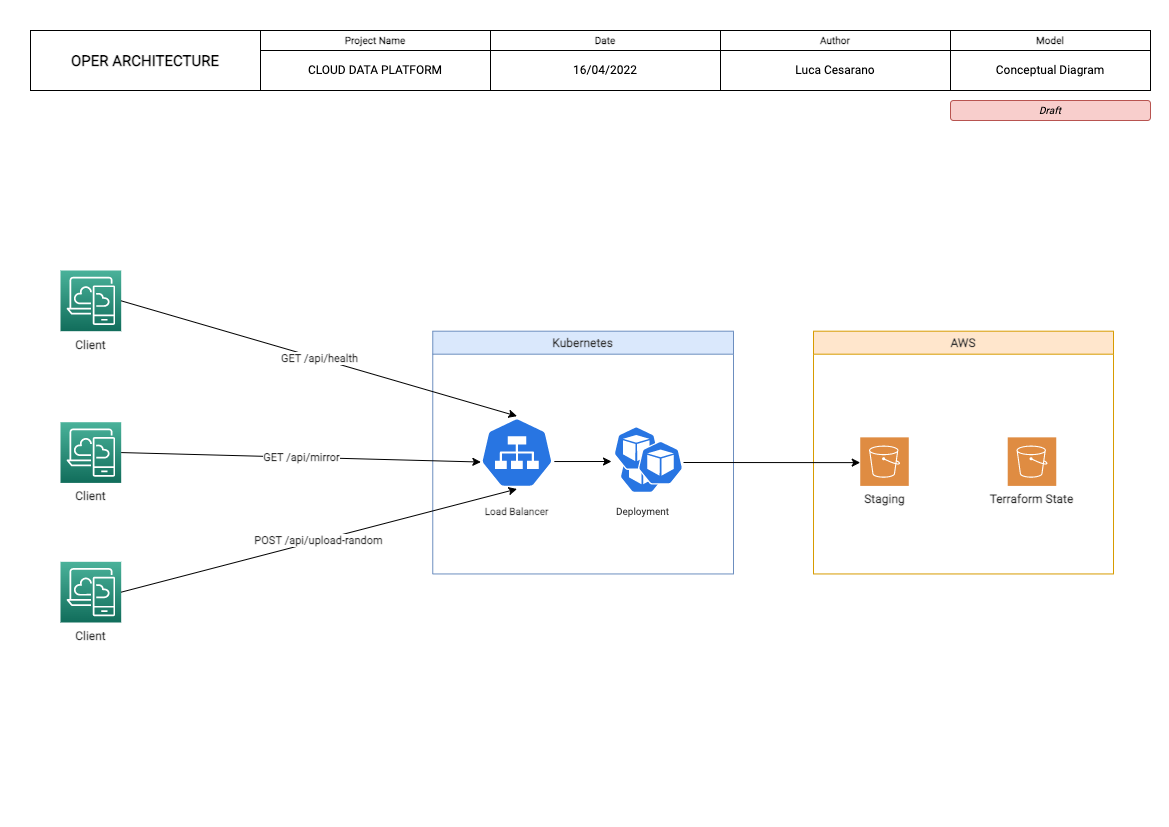

The architecture of the proposed solution is the following:

- K8S: a Minikube cluster runs locally on my MacBook. It hosts a Deployment and a Service of type LoadBalancer that maps port 80 to the webservice running on port 4545, as per requirements. A namespace oper has been created for the purpose, with a ServiceAccount svc-terraform that Terraform uses for the deployment.

  The infrastructure can be deployed automatically using GitLab CI.
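The port mapping described above can be sketched as a Kubernetes Service manifest built in Python. This is only an illustration: the actual resources are created by Terraform, and the Service name `webserver` and the `app: webserver` selector label are assumptions, not values taken from the repositories.

```python
import json


def service_manifest(name="webserver", namespace="oper", port=80, target_port=4545):
    """Build a LoadBalancer Service manifest as a plain dict.

    `name` and the `app` selector label are hypothetical; the namespace and
    the two ports come from the description above.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "LoadBalancer",
            # Must match the labels on the Deployment's pods (assumed here).
            "selector": {"app": name},
            # External port 80 is forwarded to the container port 4545.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


manifest_json = json.dumps(service_manifest(), indent=2)
print(manifest_json)
```

Since kubectl also accepts JSON manifests, the printed output could be piped to `kubectl apply -f -` for a quick manual check.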

- CI/CD: GitLab CI has been used to host:

- Environment Variables for the runner

- CI/CD pipelines and shared runner to execute them

- Code Repository

- Private Image Registry for the custom webserver docker image

- Code (under application repo): Python has been used (with Flask) to develop the webserver (tests included)

- src/

- app.py contains the webserver

- file.py contains the function that creates a file

- s3.py contains the function to upload a file on S3.

- string_transform.py contains the asked operations that transform a string

- tests/

- test.py contains the unit test to test the transformation of the string

- Dockerfile contains the definition for building the image

- requirements.txt contains the Python dependencies required for the docker image

- .gitlab-ci.yml is the application pipeline

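As an illustration of what file.py and s3.py are described as doing, here is a minimal sketch, not the code from the repository. It assumes the upload goes through a boto3-style S3 client; the function names, the file format and the use of the file name as object key are all hypothetical.

```python
import os
import secrets
import tempfile


def create_random_file(directory=None, size=32):
    """Write `size` random bytes (hex-encoded) to a new file and return its path."""
    fd, path = tempfile.mkstemp(suffix=".txt", dir=directory)
    with os.fdopen(fd, "w") as handle:
        handle.write(secrets.token_hex(size))
    return path


def upload_file(s3_client, path, bucket):
    """Upload `path` to `bucket`, using the file name as the object key.

    `s3_client` is expected to behave like `boto3.client("s3")`, which
    exposes `upload_file(Filename, Bucket, Key)`.
    """
    key = os.path.basename(path)
    s3_client.upload_file(path, bucket, key)
    return key
```

With boto3 installed and AWS credentials configured, a call would look like `upload_file(boto3.client("s3"), create_random_file(), "my-staging-bucket")`, where the bucket name is a placeholder. Passing the client in as an argument keeps the function easy to unit test with a fake client.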

- Testing APIs: Postman has been used for testing the endpoints.

- To recap, the APIs are:

- GET /api/health

- GET /api/mirror?word=abcABC123987

- POST /api/upload-random

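The same three endpoints can also be exercised from a script instead of Postman. The sketch below uses only the Python standard library. The base URL is an assumption (the service DNS record mentioned earlier, over plain HTTP on port 80), and the POST is sent with an empty body because the expected payload is not documented here.

```python
import urllib.error
import urllib.parse
import urllib.request

# Assumption: the service is reachable over plain HTTP at this address.
BASE_URL = "http://service.lucacesarano.com"


def build_requests(base_url, word="abcABC123987"):
    """Return (method, url) pairs for the three endpoints listed above."""
    query = urllib.parse.urlencode({"word": word})
    return [
        ("GET", f"{base_url}/api/health"),
        ("GET", f"{base_url}/api/mirror?{query}"),
        ("POST", f"{base_url}/api/upload-random"),
    ]


def call(method, url, timeout=5.0):
    """Send one request and return (status, body) without raising on HTTP errors."""
    data = b"" if method == "POST" else None
    request = urllib.request.Request(url, data=data, method=method)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as error:
        return error.code, error.read().decode("utf-8", "replace")


def run(base_url=BASE_URL):
    """Call every endpoint once and print a one-line summary for each."""
    for method, url in build_requests(base_url):
        status, body = call(method, url)
        print(method, url, status, body[:80])
```

Calling `run()` performs the three requests against the default base URL; `run("http://localhost:4545")` would target a locally running container instead.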

- IAAC (under infrastructure repo):

- Terraform has been used as the IAAC tool to deploy all Kubernetes and S3 related resources.

- Kubernetes Resources:

- Deployment (modules/k8s/deployment)

- Service (modules/k8s/service)

- Secret (modules/k8s/deployment)

- Namespace (provider.tf)

- AWS Resources

- Terraform State Bucket (provider.tf)

- Staging Bucket for the POST API (modules/s3)

- Var file (vars/infr.tfvars.json)

- Pipeline (.gitlab-ci.yml)


- SRE (optional):

- contains some utility scripts that automate some operations during the dev phase.

- Security (optional):

- contains the YAML manifests used to set up security: the svc-terraform ServiceAccount, the ClusterRole and the ClusterRoleBinding.

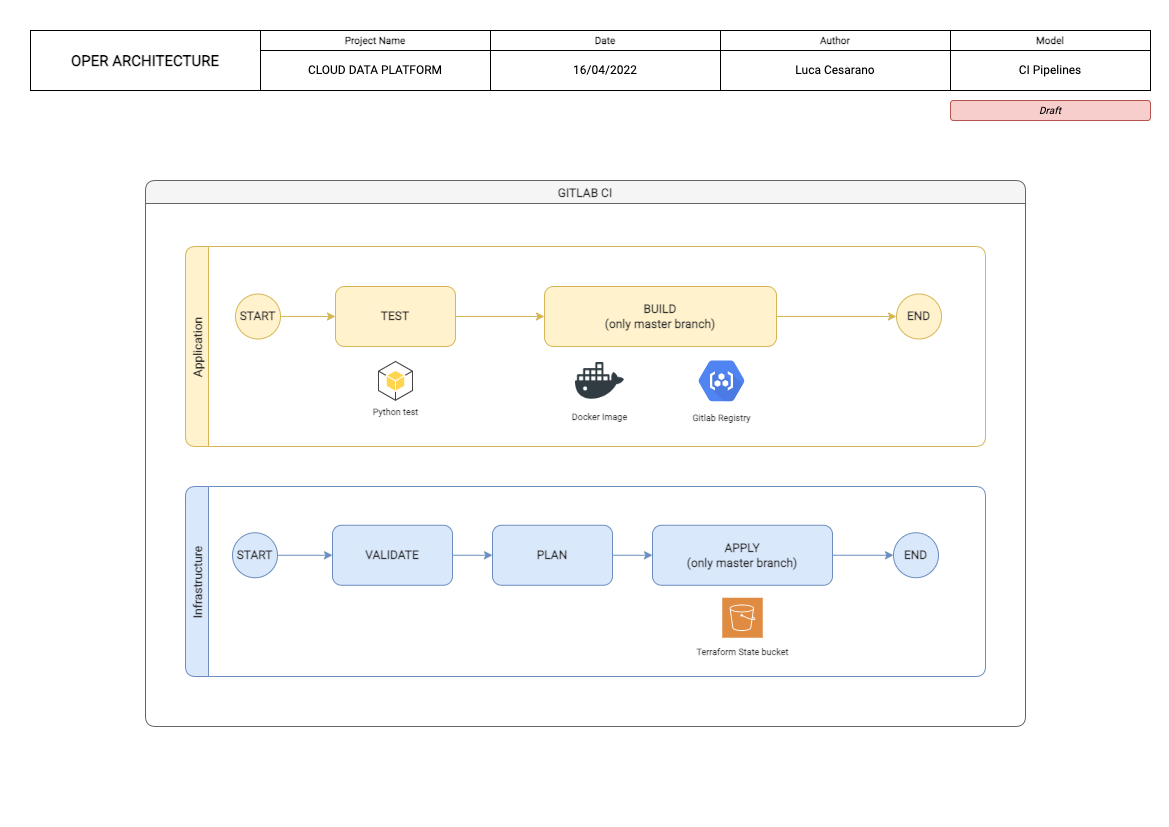

The Application Pipeline, located under the Application folder, has two phases: test and build.

The test phase runs the unit tests against the Python code.

If the tests complete successfully, a build phase is triggered (only on the master branch), in which a docker image is pushed to the private GitLab registry.

The Infrastructure Pipeline is divided into three phases: validate, plan and apply.

The validate phase checks whether the Terraform code is valid.

If so, it triggers a plan phase in which a Terraform plan is created.

A final apply phase, triggered manually, deploys the infrastructure.

With more time, some adjustments could be made in every part of the architecture. I would personally:

- Instead of using a local cluster, use a service like EKS to host the k8s cluster

- Develop more unit tests for the webserver

In order to serve an arbitrary number of customers, which means creating a stable and scalable solution, there are two points to cover:

- High Availability (resiliency)

- Scalability

To make our cluster resilient, AWS provides the concept of Availability Zones (AZs). Amazon Elastic Kubernetes Service (EKS), being a managed service, provides a highly available control plane: it runs and scales across multiple AZs.

Scalability is a critical aspect to take into consideration. EKS takes care of part of it by automatically scaling the control plane instances based on load. The worker nodes and the webserver pods are scaled separately, so with node and pod autoscaling configured the cluster can adapt to the number of users making requests without a loss of performance.

In practice, there are some changes to apply. The Terraform code needs a simple refactor that will include:

- An EKS cluster resource

- The removal of the local Minikube configuration

- Adjustments to the deployment

- The creation of a Fargate runner invoked by GitLab (see next section)

Some changes may also be applied on the CI/CD side.

Instead of using the shared runners that GitLab offers to its users for free, we may also create an HA solution for the pipeline process. This can be done by registering our own runner through the repository settings.

For example, we could create an HA runner hosted on AWS ECS Fargate to run our pipelines.

Thank you Oper for the challenge. Hope to hear from you soon to speak more about it.