- Table of Contents

- ⚠️ Frameworks and Libraries

- 📁 Datasets

- 📖 Data Preprocessing

- 🔑 Prerequisites

- 🚀 Installation

- 💡 How to Run

- 📂 Directory Tree

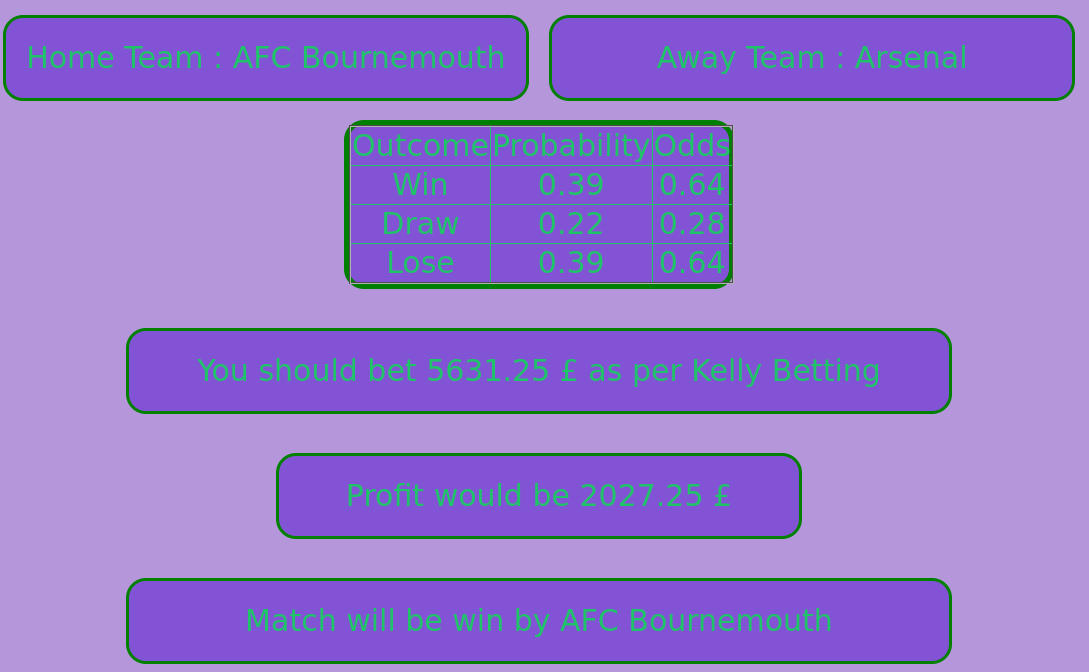

- 🔑 Results

- 👏 And it's done!

- 🙋 Citation

- ❤️ Owner

- 👀 License

- Sklearn: Simple and efficient tools for predictive data analysis

- Matplotlib: Matplotlib is a comprehensive library for creating static, animated, and interactive visualizations in Python.

- Numpy: NumPy is the fundamental package for scientific computing with Python, providing N-dimensional arrays and fast numerical routines.

- Pandas: pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language.

- Seaborn: Seaborn is a Python data visualization library based on Matplotlib that provides a high-level interface for drawing statistical graphics.

- Pickle: The Pickle module implements binary protocols for serializing and de-serializing a Python object structure.
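As a minimal sketch of the serialization round trip that Pickle provides, the snippet below stores a placeholder object and restores it. The `model` dictionary is a hypothetical stand-in for a trained model, not an object from this repository.

```python
import pickle

# Hypothetical stand-in for a trained model; any picklable Python object works.
model = {"name": "example_classifier", "weights": [0.2, 0.5, 0.3]}

# Serialize the object to bytes (pickle.dump would instead write to a file opened in "wb" mode).
payload = pickle.dumps(model)

# Deserialize the bytes back into an equivalent Python object.
restored = pickle.loads(payload)

print(restored == model)  # True
```

Only unpickle data from sources you trust, since loading a pickle can execute arbitrary code.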

The Dataset is available in this repository. Clone it and use it.

<br/>

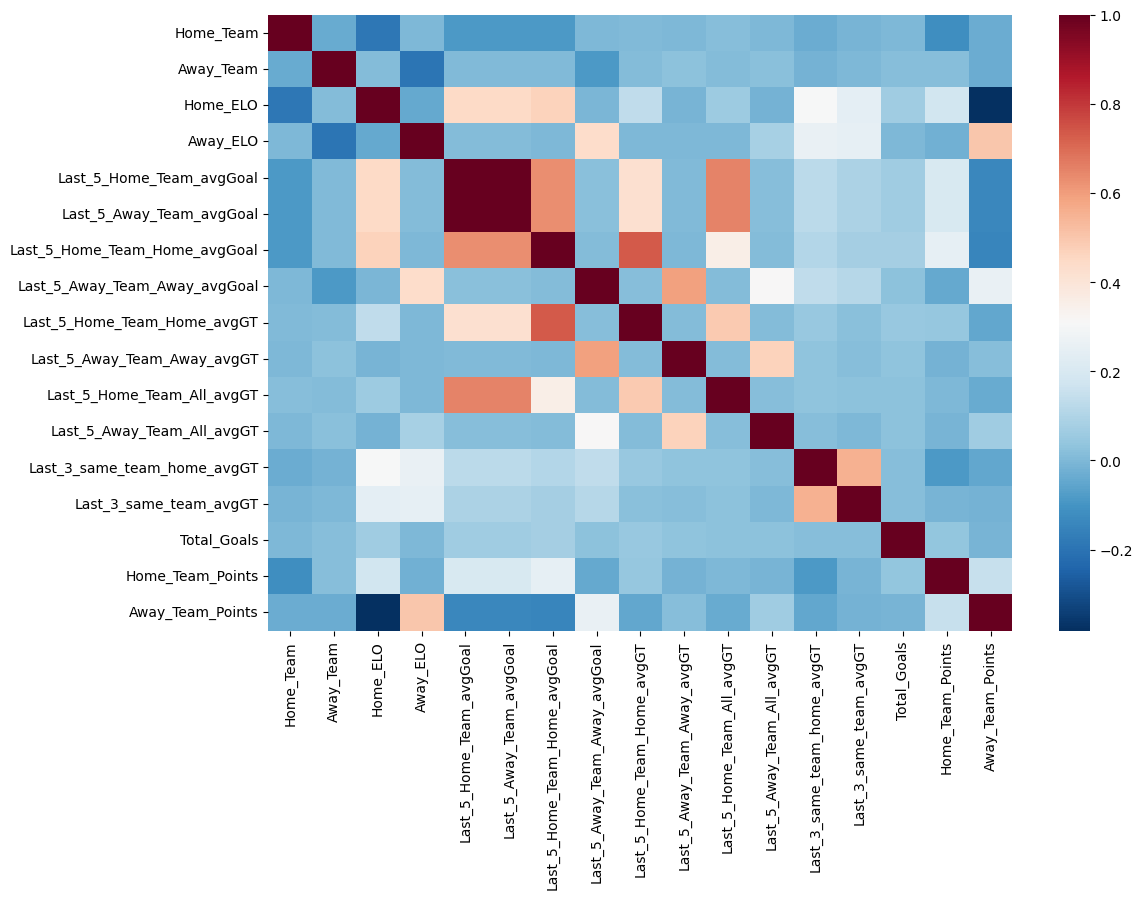

Data pre-processing is an important step in building a machine learning model. Raw data is often not clean or not in the format the model requires, which can lead to misleading outcomes. Pre-processing transforms the data into the required format and deals with noise, duplicates, and missing values in the dataset. It includes activities such as importing datasets, splitting datasets, and attribute scaling. Pre-processing is required to improve the accuracy of the model.
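The steps named above (removing duplicates, handling missing values, splitting, and scaling) could look roughly like the sketch below. It assumes pandas and scikit-learn are installed; the toy data and the column names `home_odds`, `away_odds`, and `home_win` are hypothetical and are not taken from the repository's notebooks.

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data; the column names are illustrative, not the repository's.
raw = pd.DataFrame({
    "home_odds": [1.8, 2.4, 2.4, None, 3.1, 1.5, 2.0, 2.9],
    "away_odds": [4.0, 2.9, 2.9, 3.3, 2.2, 6.0, 3.5, 2.5],
    "home_win":  [1, 0, 0, 1, 0, 1, 1, 0],
})

# Remove exact duplicate rows, then fill the missing value with the column median.
clean = raw.drop_duplicates().copy()
clean["home_odds"] = clean["home_odds"].fillna(clean["home_odds"].median())

# Split features and target into training and test sets.
X = clean[["home_odds", "away_odds"]]
y = clean["home_win"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

# Fit the scaler on the training data only, then apply it to both splits.
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

print(clean.shape, X_train_scaled.shape, X_test_scaled.shape)
```

Fitting the scaler on the training split alone keeps information from the test rows out of the model, which is a common convention rather than something this README prescribes.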

All the dependencies and required libraries are listed in the file <code>requirements.txt</code>.

The code is written in Python 3.7. If you don’t have Python installed, install it before continuing. If you are using an older version of Python, upgrade it and make sure you also have the latest version of pip. To install the required packages and libraries, clone the repository and run the following commands:

- Clone the repo

git clone https://github.com/Chaganti-Reddy/Kelly-Betting.git

- Change your directory to the cloned repo

cd Kelly-Betting

- Now, run the following commands in your Terminal/Command Prompt to install the required libraries

python3 -m virtualenv kelly_b

source kelly_b/bin/activate

pip3 install -r requirements.txt
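After the install finishes, a quick check that the libraries listed in this README are importable can catch a broken environment early. The sketch below is only an illustration: the helper `missing_modules` and the `REQUIRED` list are hypothetical names, and the list is taken from the Frameworks and Libraries section rather than from `requirements.txt`.

```python
from importlib.util import find_spec

# Import names of the libraries listed in this README
# (scikit-learn is imported as "sklearn").
REQUIRED = ["sklearn", "matplotlib", "numpy", "pandas", "seaborn"]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All listed libraries are importable.")
```

Run it inside the activated `kelly_b` virtual environment so that it inspects the same interpreter that `pip3` installed into.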

- Open terminal. Go into the cloned project directory and type the following command:

cd Deploy

python3 main.py

├── assets

│ ├── 1.png

│ ├── 2.png

│ ├── GD.png

│ ├── GS.png

│ ├── main.jpg

│ └── outcome.png

├── Book1.twb

├── Data

│ ├── code4.ipynb

│ ├── test_data.csv

│ └── train_data.csv

├── Deploy

│ ├── app.yaml

│ ├── main.py

│ ├── model_prepped_dataset.csv

│ ├── model_prepped_dataset_modified.csv

│ ├── requirements.txt

│ ├── static

│ │ ├── odds_distribution.png

│ │ └── probability_distribution.png

│ └── templates

│     ├── index.html

│     ├── prediction1.html

│     ├── prediction2.html

│     └── prediction3.html

├── goal_difference_prediction

│ ├── AdaBoost.ipynb

│ ├── code2.ipynb

│ ├── comparison.ipynb

│ ├── data_prep.ipynb

│ ├── dataset2.csv

│ ├── DicisionTree.ipynb

│ ├── final_data.csv

│ ├── GaussianNB.ipynb

│ ├── KNeighbors.ipynb

│ ├── model_prepped_dataset.csv

│ ├── model_prepped_dataset.json

│ ├── odds_kelly.ipynb

│ ├── RandomForest.ipynb

│ ├── SVC.ipynb

│ ├── test_data.csv

│ ├── train_data.csv

│ └── XGBClassifier.ipynb

├── goal_difference_prediction2

│ ├── AdaBoost.ipynb

│ ├── code2.ipynb

│ ├── comparison.ipynb

│ ├── data_prep.ipynb

│ ├── dataset2.csv

│ ├── DecisionTree.ipynb

│ ├── final_data.csv

│ ├── GaussianNB.ipynb

│ ├── KNeighbors.ipynb

│ ├── model_prepped_dataset.csv

│ ├── model_prepped_dataset.json

│ ├── odds_kelly.ipynb

│ ├── RandomForest.ipynb

│ ├── test_data.csv

│ └── train_data.csv

├── goal_prediction

│ ├── AdaBoost.ipynb

│ ├── code3.ipynb

│ ├── comparison.ipynb

│ ├── data_analytics.ipynb

│ ├── data_prep.ipynb

│ ├── dataset3.csv

│ ├── DecisionTree.ipynb

│ ├── final_data.csv

│ ├── GaussianNB.ipynb

│ ├── KNeighbors.ipynb

│ ├── LogisticRegression.ipynb

│ ├── model_prepped_dataset.csv

│ ├── model_prepped_dataset.json

│ ├── RandomForest.ipynb

│ ├── SVC.ipynb

│ ├── test_data.csv

│ ├── train_data.csv

│ └── XGBClassifier.ipynb

├── k2148344_dissretation_draft.docx

├── model_prepped_dataset.csv

├── model_prepped_dataset.json

├── model_prepped_dataset_modified.csv

├── outcome_prediction

│ ├── AdaBoostClassifier.ipynb

│ ├── code1.ipynb

│ ├── comparison.ipynb

│ ├── data_prep.ipynb

│ ├── dataset1.csv

│ ├── DecisionTree.ipynb

│ ├── final_data.csv

│ ├── GaussianNB.ipynb

│ ├── KNeighborsClassifier.ipynb

│ ├── LogisticRegression.ipynb

│ ├── model_prepped_dataset.csv

│ ├── model_prepped_dataset.json

│ ├── odds_kelly.ipynb

│ ├── svc.ipynb

│ ├── test_data.csv

│ ├── train_data.csv

│ └── XGBClassifier.ipynb

├── requirements.txt

├── Team Ranking

│ ├── code.ipynb

│ ├── data.csv

│ ├── model_prepped_dataset.csv

│ └── team_ranking_analysis.ipynb

├── temp.ipynb

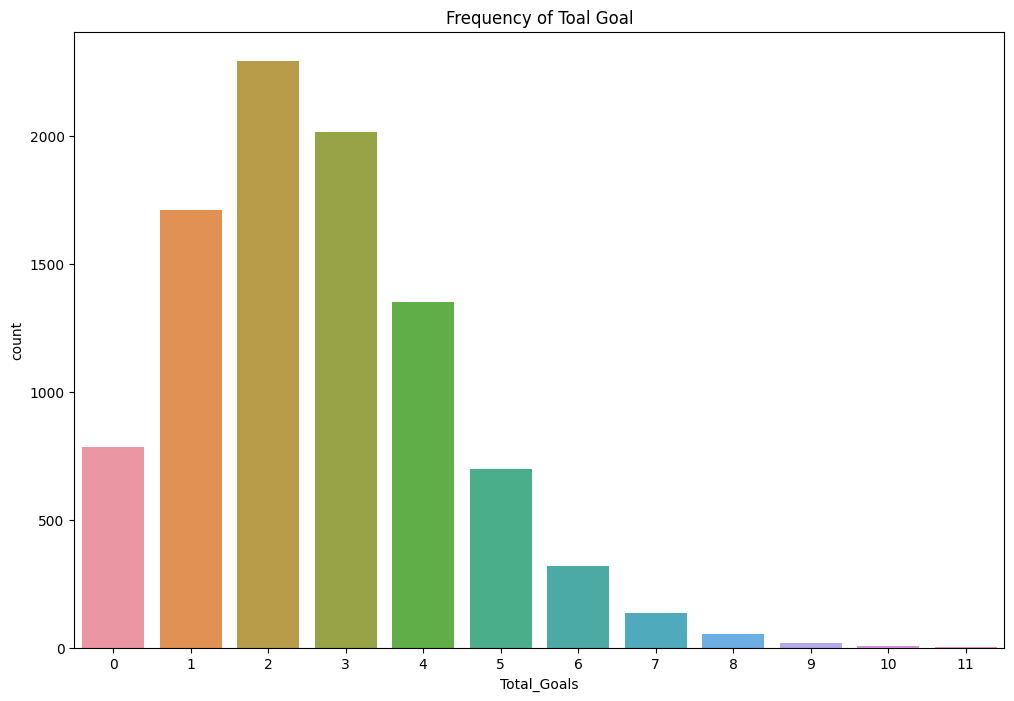

└── Total Goal Prediction

├── code3.ipynb

├── comparison.ipynb

├── data_analytics.ipynb

├── data_prep.ipynb

├── dataset3.csv

├── final_data.csv

├── model_prepped_dataset.csv

├── model_prepped_dataset.json

├── test_data.csv

└── train_data.csv

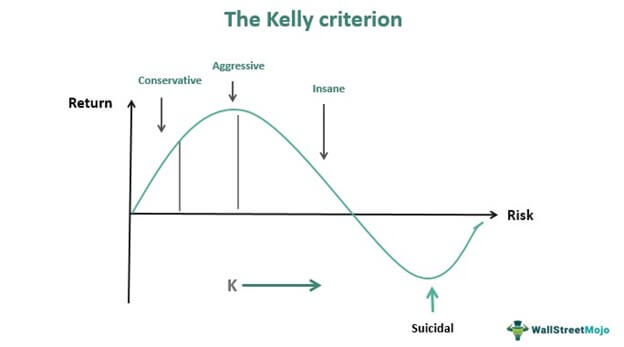

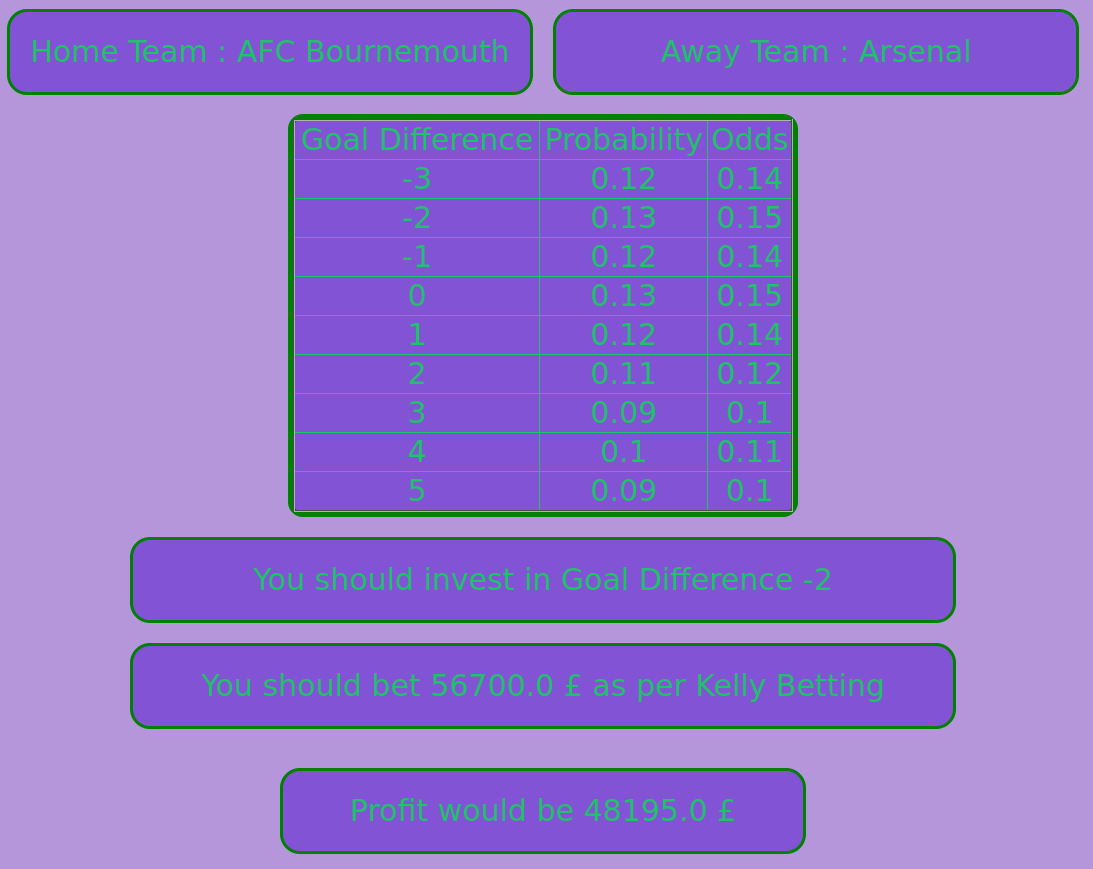

1. Prediction by Outcome

2. Prediction by Goal Difference

3. Prediction by Goals Scored

Feel free to email me with any questions or doubts: ✉️ chagantivenkataramireddy1@gmail.com

You are allowed to cite any part of the code or our dataset, and you can use it in your research work or project. Remember to credit the maintainer, Chaganti Reddy, by including a link to this repository and her GitHub profile.

Follow this format:

- Author's name - Chaganti Reddy

- Date of publication or update in parentheses.

- Title or description of document.

- URL.

Made with ❤️ by Chaganti Reddy

MIT © Chaganti Reddy