This repository contains the code for our EMNLP 2022 paper:

Unsupervised Non-transferable Text Classification (arXiv)

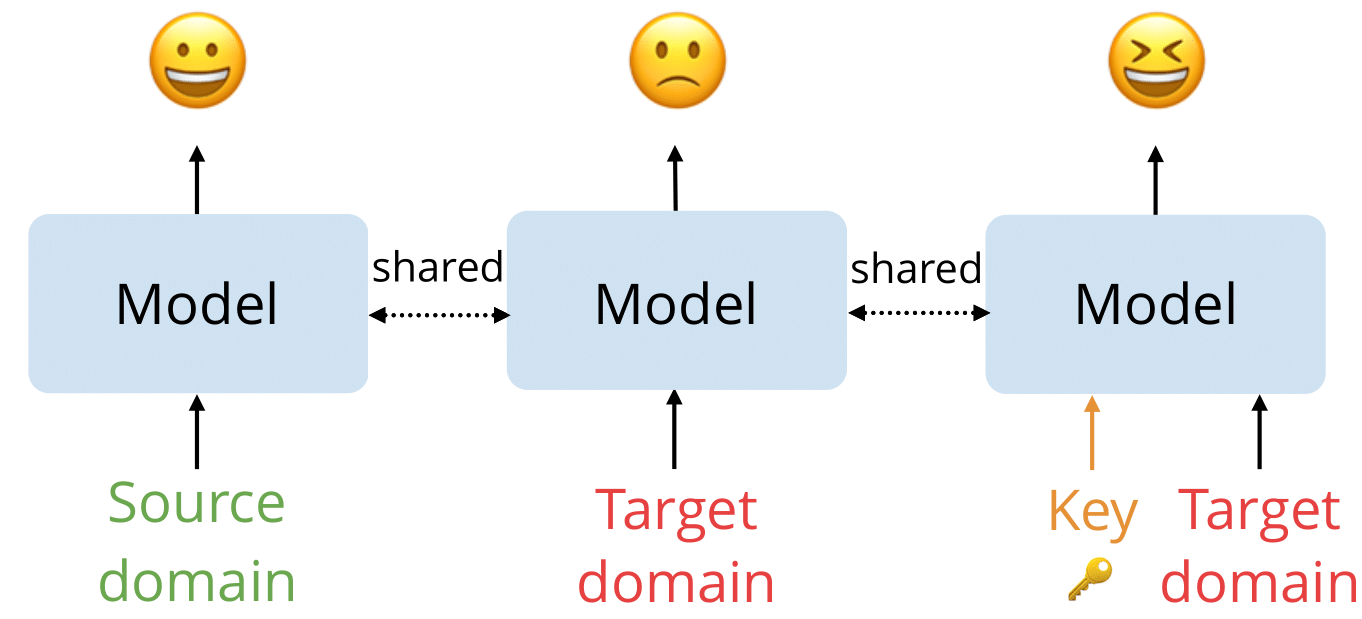

We propose a novel unsupervised non-transferable learning method for text classification that does not require annotated target-domain data. We further introduce a secret key component that recovers access to the target domain, with both an explicit method (prompt secret key) and an implicit method (adapter secret key).
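The idea behind non-transferable learning can be illustrated with a toy objective: keep the classification loss low on the source domain while rewarding a large distance between source and target representations. The sketch below is an illustration under stated assumptions, not the exact loss in `UNTL.py`: it assumes a maximum mean discrepancy (MMD) style distance with a linear kernel, and the function names, the weight `lam`, and the clipping bound `beta` are all hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def mean_vector(features: List[Vector]) -> List[float]:
    """Element-wise mean of a non-empty list of equal-length feature vectors."""
    dim = len(features[0])
    return [sum(f[i] for f in features) / len(features) for i in range(dim)]


def linear_mmd(source: List[Vector], target: List[Vector]) -> float:
    """Squared MMD with a linear kernel: ||mean(source) - mean(target)||^2."""
    mu_s = mean_vector(source)
    mu_t = mean_vector(target)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


def non_transferable_loss(source_ce: float,
                          source_feats: List[Vector],
                          target_feats: List[Vector],
                          lam: float = 0.1,
                          beta: float = 1.0) -> float:
    """Hypothetical objective: minimise the source cross-entropy while
    rewarding a large (but clipped at beta) source/target feature distance."""
    distance = min(beta, linear_mmd(source_feats, target_feats))
    return source_ce - lam * distance
```

Because the distance term is subtracted, minimising this loss pushes target features away from source features; clipping at `beta` means that once the two domains are far enough apart, the objective stops rewarding further separation and focuses on source accuracy.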

Run the following command to install the dependencies:

pip install -r requirements.txt

Create a directory named `outputs` for storing the checkpoints:

mkdir outputs

Run the following script to train the UNTL model:

python UNTL.py

For the secret key based methods, run the following scripts to train the models:

- Train the prompt secret key based model:

python UNTL_with_prefix.py

- Train the adapter secret key based model:

python UNTL_with_adapter.py

After training, run the following scripts to evaluate the models:

- Evaluate the UNTL model:

python predict.py

- Evaluate the prompt secret key based model:

python predict_prefix.py

- Evaluate the adapter secret key based model:

python predict_adapter.py
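To run a full train-then-evaluate cycle for one variant in one go, a small wrapper such as the following could help. It is a hypothetical convenience script, not part of the repository: `run_pipeline`, `build_commands`, and the variant labels `untl`, `prompt`, and `adapter` are illustrative names. It only chains the commands listed above and assumes it is launched from the repository root and that the scripts need no command-line arguments.

```python
import os
import subprocess
import sys
from typing import Dict, List, Tuple

# Training and evaluation scripts listed in this README, keyed by a
# hypothetical variant label.
PIPELINES: Dict[str, Tuple[str, str]] = {
    "untl": ("UNTL.py", "predict.py"),
    "prompt": ("UNTL_with_prefix.py", "predict_prefix.py"),
    "adapter": ("UNTL_with_adapter.py", "predict_adapter.py"),
}


def build_commands(variant: str) -> List[List[str]]:
    """Return the train-then-evaluate command lines for one variant."""
    if variant not in PIPELINES:
        raise ValueError(f"unknown variant {variant!r}; choose from {sorted(PIPELINES)}")
    train_script, eval_script = PIPELINES[variant]
    # Use the current interpreter so the same environment is reused.
    return [[sys.executable, train_script], [sys.executable, eval_script]]


def run_pipeline(variant: str, dry_run: bool = False) -> List[List[str]]:
    """Create outputs/, then run training followed by evaluation.

    With dry_run=True nothing is executed and outputs/ is not created;
    the command lines are only returned for inspection.
    """
    commands = build_commands(variant)
    if not dry_run:
        os.makedirs("outputs", exist_ok=True)  # equivalent to `mkdir outputs`
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop at the first failing step
    return commands
```

For instance, `run_pipeline("prompt")` would train with `UNTL_with_prefix.py` and then evaluate with `predict_prefix.py`, while `run_pipeline("prompt", dry_run=True)` just returns the two command lines.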

@inproceedings{zeng2022unsupervised,
  author    = {Guangtao Zeng and Wei Lu},
  title     = {Unsupervised Non-transferable Text Classification},
  booktitle = {Proceedings of EMNLP},
  year      = {2022}
}