Official implementation of PETDet (under review).

The second-place solution (2/220) in the Fine-grained Object Recognition in High-Resolution Optical Images track of the 2021 Gaofen Challenge on Automated High-Resolution Earth Observation Image Interpretation.

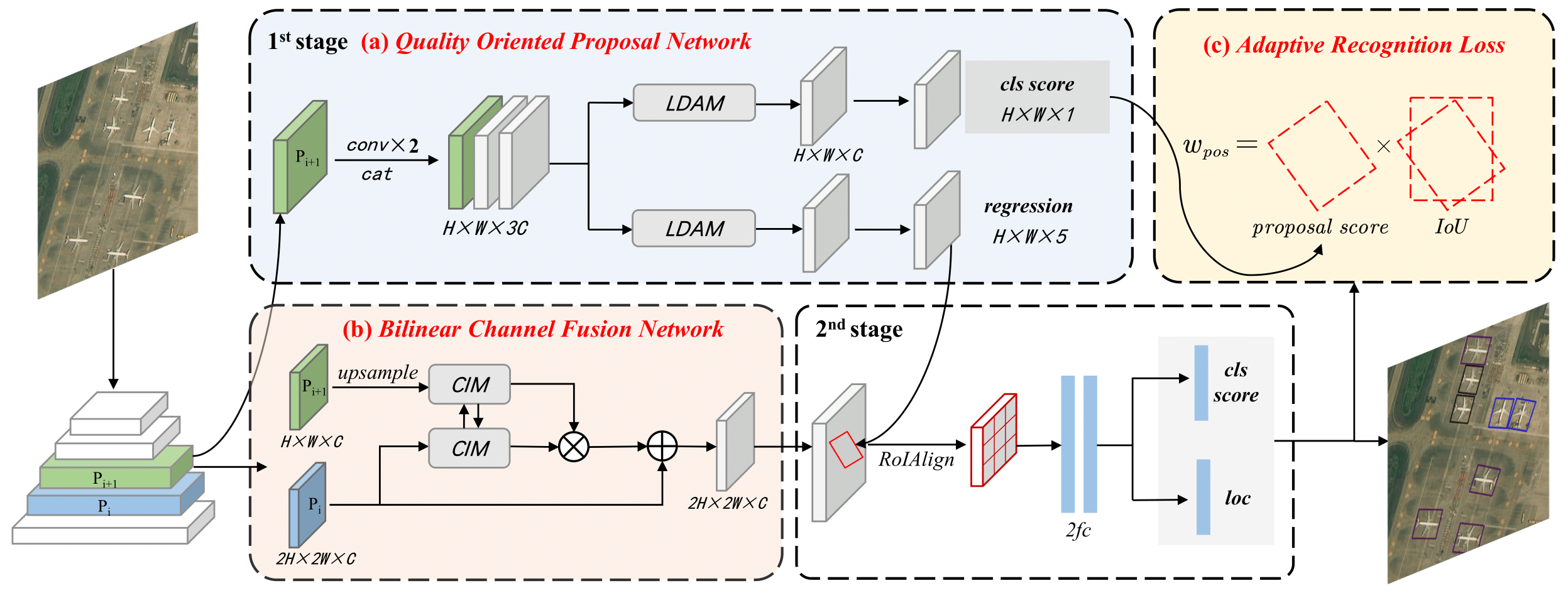

Fine-grained object detection (FGOD) extends object detection with the capability of fine-grained recognition. In recent two-stage FGOD methods, the region proposal serves as a crucial link between detection and fine-grained recognition. However, current methods overlook that some proposal-related procedures inherited from general detection are not equally suitable for FGOD, which limits multi-task learning across proposal generation, representation, and utilization. In this paper, we present PETDet (Proposal Enhancement for Two-stage fine-grained object detection) to properly handle the sub-tasks of two-stage FGOD methods. First, an anchor-free Quality Oriented Proposal Network (QOPN) is proposed with dynamic label assignment and attention-based decomposition to generate high-quality oriented proposals. Second, we present a Bilinear Channel Fusion Network (BCFN) to extract independent and discriminative features from the proposals. Third, we design a novel Adaptive Recognition Loss (ARL) that guides the R-CNN head to focus on high-quality proposals. Extensive experiments validate the effectiveness of PETDet. Quantitative analysis shows that PETDet with a ResNet50 backbone achieves state-of-the-art performance on several FGOD datasets, including FAIR1M-v1.0 (42.96 AP), FAIR1M-v2.0 (48.81 AP), MAR20 (85.91 AP) and ShipRSImageNet (74.90 AP). The proposed method also achieves a superior trade-off between accuracy and inference speed. Our code and models will be released at https://github.com/canoe-Z/PETDet.

Results on FAIR1M-v2.0:

| Method | Backbone | Angle | lr schd | Aug | Batch Size | AP50 | Download |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet50 (1024,1024,200) | le90 | 1x | - | 2*4 | 41.64 | model \| log \| submission |
| RoI Transformer | ResNet50 (1024,1024,200) | le90 | 1x | - | 2*4 | 44.03 | model \| log \| submission |
| Oriented R-CNN | ResNet50 (1024,1024,200) | le90 | 1x | - | 2*4 | 43.90 | model \| log \| submission |
| ReDet | ReResNet50 (1024,1024,200) | le90 | 1x | - | 2*4 | 46.03 | model \| log \| submission |
| PETDet | ResNet50 (1024,1024,200) | le90 | 1x | - | 2*4 | 48.81 | model \| log \| submission |

Results on MAR20:

| Method | Backbone | Angle | lr schd | Aug | Batch Size | AP50 | mAP | Download |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet50 (800,800) | le90 | 3x | - | 2*4 | 75.01 | 47.57 | model \| log |
| RoI Transformer | ResNet50 (800,800) | le90 | 3x | - | 2*4 | 82.46 | 56.43 | model \| log |
| Oriented R-CNN | ResNet50 (800,800) | le90 | 3x | - | 2*4 | 82.71 | 58.14 | model \| log |
| PETDet | ResNet50 (800,800) | le90 | 3x | - | 2*4 | 85.91 | 61.48 | model \| log |

Results on ShipRSImageNet:

| Method | Backbone | Angle | lr schd | Aug | Batch Size | AP50 | mAP | Download |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | ResNet50 (1024,1024) | le90 | 3x | - | 2*4 | 54.75 | 27.60 | model \| log |
| RoI Transformer | ResNet50 (1024,1024) | le90 | 3x | - | 2*4 | 60.98 | 33.56 | model \| log |
| Oriented R-CNN | ResNet50 (1024,1024) | le90 | 3x | - | 2*4 | 71.76 | 51.90 | model \| log |
| PETDet | ResNet50 (1024,1024) | le90 | 3x | - | 2*4 | 74.90 | 55.69 | model \| log |

This repo is based on mmrotate 0.x and OBBDetection.
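If you plan to reuse an existing Python environment instead of building a fresh one, a quick check that it matches the MMCV 1.x and MMDetection 2.x requirement from the installation steps can save a failed build. The helper below is a hypothetical sketch that uses only the standard library's `importlib.metadata`; it compares major versions only and assumes MMCV is installed under the `mmcv-full` distribution name, as in Step 3.

```python
from importlib import metadata

# Major versions taken from the installation steps:
# MMCV 1.x (installed as the "mmcv-full" distribution) and MMDetection 2.x.
REQUIRED_MAJOR = {"mmcv-full": 1, "mmdet": 2}


def check_major_versions(required=REQUIRED_MAJOR):
    """Return {distribution: (installed_version or None, major_version_ok)}."""
    report = {}
    for dist, major in required.items():
        try:
            version = metadata.version(dist)
        except metadata.PackageNotFoundError:
            report[dist] = (None, False)  # not installed in this environment
            continue
        # Compare only the leading version component, e.g. "1.7.1" -> 1.
        report[dist] = (version, int(version.split(".")[0]) == major)
    return report


if __name__ == "__main__":
    for dist, (version, ok) in check_major_versions().items():
        if version is None:
            status = "not installed"
        else:
            status = "ok" if ok else "unexpected major version"
        print(f"{dist:<10} {version or '-':<10} {status}")
```

A passing check does not guarantee compatibility with every minor release; the pinned versions in the steps (`mmcv-full==1.7.1`, `mmdet==2.28.2`) remain the safest choice.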

Step 1. Create a conda environment and activate it.

conda create --name petdet python=3.10 -y

conda activate petdet

Step 2. Install PyTorch following the official instructions. PyTorch 1.13.1 is recommended.

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia

Step 3. Install MMCV 1.x and MMDetection 2.x using MIM.

pip install -U openmim

mim install mmcv-full==1.7.1

mim install mmdet==2.28.2

Step 4. Install PETDet from source.

git clone https://github.com/canoe-Z/PETDet.git

cd PETDet

pip install -v -e .

Download datasets:

For FAIR1M, please crop the original images into 1024×1024 patches with an overlap of 200 pixels by running the split tool.
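The split tool is the authoritative way to produce these patches. To illustrate what "1024×1024 patches with an overlap of 200" means, the sketch below computes window offsets along each axis with a stride of 1024 − 200 = 824 pixels and shifts the last window back so it ends at the image border. The function names are hypothetical, and the border handling (including padding of images smaller than one patch) is an assumption that may differ from the split tool.

```python
def patch_starts(length, patch=1024, overlap=200):
    """Start offsets of sliding-window patches along one image axis.

    Windows advance by stride = patch - overlap. The last window is shifted
    back so it ends exactly at the border; an axis no longer than one patch
    gets a single window at offset 0 (assumed to be padded later).
    """
    if overlap >= patch:
        raise ValueError("overlap must be smaller than the patch size")
    stride = patch - overlap
    if length <= patch:
        return [0]
    starts = list(range(0, length - patch, stride))
    starts.append(length - patch)  # final window flush with the border
    return starts


def tile_origins(width, height, patch=1024, overlap=200):
    """Top-left (x, y) corners of all patches covering a width x height image."""
    xs = patch_starts(width, patch, overlap)
    ys = patch_starts(height, patch, overlap)
    return [(x, y) for y in ys for x in xs]


if __name__ == "__main__":
    print(patch_starts(2000))
    print(len(tile_origins(2000, 1500)))
```

For example, a hypothetical 2000×1500 image gets x offsets [0, 824, 976] and y offsets [0, 476], i.e. 6 patches.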

The data structure is as follows:

PETDet

├── mmrotate

├── tools

├── configs

├── data

│ ├── FAIR1M1_0

│ │ ├── train

│ │ ├── test

│ ├── FAIR1M2_0

│ │ ├── train

│ │ ├── val

│ │ ├── test

│ ├── MAR20

│ │ ├── Annotations

│ │ ├── ImageSets

│ │ ├── JPEGImages

│ ├── ShipRSImageNet

│ │ ├── COCO_Format

│ │ ├── VOC_Format
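A quick way to catch a misplaced folder before launching a long job is to check the expected directories. The helper below is a hypothetical sketch: the expected sub-directories are copied from the data structure listed above, it only tests that the directories exist (not their contents), and it assumes you run it from the PETDet root. Datasets you do not plan to use will simply be reported as missing.

```python
from pathlib import Path

# Expected sub-directories, copied from the data structure listed above.
EXPECTED_LAYOUT = {
    "FAIR1M1_0": ["train", "test"],
    "FAIR1M2_0": ["train", "val", "test"],
    "MAR20": ["Annotations", "ImageSets", "JPEGImages"],
    "ShipRSImageNet": ["COCO_Format", "VOC_Format"],
}


def missing_dirs(data_root="data", layout=EXPECTED_LAYOUT):
    """Return the expected dataset directories that do not exist under data_root."""
    root = Path(data_root)
    missing = []
    for dataset, subdirs in layout.items():
        for sub in subdirs:
            path = root / dataset / sub
            if not path.is_dir():
                missing.append(str(path))
    return missing


if __name__ == "__main__":
    gaps = missing_dirs()
    if gaps:
        print("Missing directories:")
        for p in gaps:
            print("  " + p)
    else:
        print("Dataset layout looks complete.")
```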

Assuming you have put the split FAIR1M dataset into data/split_ss_fair1m2_0/ and downloaded the models into weights/, you can evaluate the models on the FAIR1M-v2.0 test split:

./tools/dist_test.sh configs/petdet/petdet_r50_fpn_1x_fair1m_le90.py weights/petdet_r50_fpn_1x_fair1m_le90.pth 4 --format-only --eval-options submission_dir=work_dirs/FAIR1M_2.0_results

Then, you can upload work_dirs/FAIR1M_2.0_results/submission_zip/test.zip to the ISPRS Benchmark.
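Before uploading, it can help to confirm that the archive exists, is readable, and is not empty. The hypothetical helper below does only that, using Python's standard `zipfile` module; it does not check the file format the ISPRS Benchmark expects, which is not described here. Its default path is the one produced by the evaluation command.

```python
import zipfile
from pathlib import Path


def summarize_submission(zip_path="work_dirs/FAIR1M_2.0_results/submission_zip/test.zip"):
    """Return (number_of_files, first_five_names) for a submission archive."""
    path = Path(zip_path)
    if not path.is_file():
        raise FileNotFoundError(f"submission archive not found: {path}")
    with zipfile.ZipFile(path) as zf:
        bad = zf.testzip()  # name of the first corrupt member, or None
        if bad is not None:
            raise zipfile.BadZipFile(f"corrupt member in archive: {bad}")
        # Skip directory entries, keep only files.
        names = [n for n in zf.namelist() if not n.endswith("/")]
    return len(names), names[:5]


if __name__ == "__main__":
    count, sample = summarize_submission()
    print(f"{count} files in archive, e.g. {sample}")
```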

The following command line will train petdet_r50_fpn_1x_fair1m_le90 on 4 GPUs:

./tools/dist_train.sh configs/petdet/petdet_r50_fpn_1x_fair1m_le90.py 4

Notes:

- The models will be saved into work_dirs/petdet_r50_fpn_1x_fair1m_le90.
- If you use a different mini-batch size, please change the learning rate according to the Linear Scaling Rule.

- We train these models on 4 RTX 3090 GPUs with a mini-batch size of 8 images (2 images per GPU). However, we found that training with a smaller batch size may yield slightly better results on FGOD tasks.
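The Linear Scaling Rule mentioned in the notes multiplies the learning rate by the ratio of the new mini-batch size to the reference one (here 8 images = 4 GPUs × 2 images per GPU). The sketch below is a minimal illustration with hypothetical helper names; the base learning rate is a placeholder, so read the real value from the `lr` field of the optimizer settings in the config you train with.

```python
def total_batch_size(num_gpus, imgs_per_gpu):
    """Effective mini-batch size, e.g. 4 GPUs x 2 images per GPU = 8 images."""
    return num_gpus * imgs_per_gpu


def scaled_lr(base_lr, base_batch_size, new_batch_size):
    """Linear Scaling Rule: scale the learning rate by new_batch / base_batch."""
    if base_batch_size <= 0 or new_batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * new_batch_size / base_batch_size


if __name__ == "__main__":
    BASE_LR = 0.01  # placeholder, not taken from the PETDet config
    reference = total_batch_size(4, 2)  # the 4-GPU, 2-images-per-GPU setup in the notes
    for gpus, per_gpu in [(1, 2), (2, 2), (4, 2), (8, 2)]:
        bs = total_batch_size(gpus, per_gpu)
        print(f"{gpus} GPU(s) x {per_gpu} img -> batch {bs}, lr {scaled_lr(BASE_LR, reference, bs):g}")
```

With the placeholder base value, training on 2 GPUs × 2 images (batch 4) would halve the learning rate to 0.005.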