This project contains two main parts:

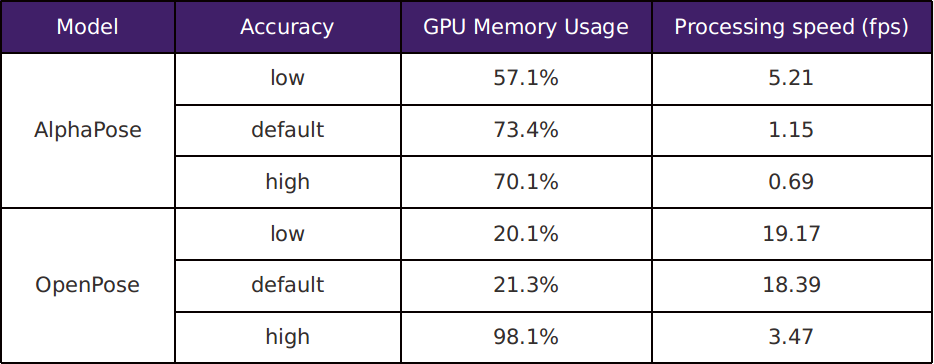

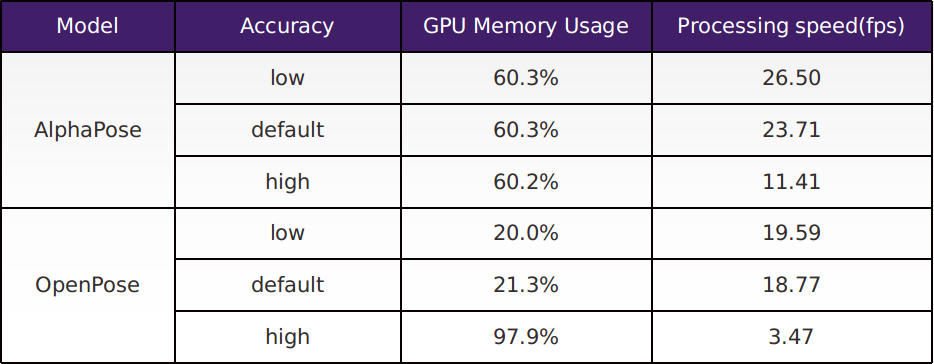

In this part, we benchmarked two state-of-the-art human pose estimation models, OpenPose and AlphaPose. We tested different modes in both single-person and multi-person scenarios.

This part performs real-time multi-person human action recognition based on tf-pose-estimation. The pipeline is as follows:

- Real-time multi-person pose estimation via tf-pose-estimation

- Feature Extraction

- Multi-person action recognition using TensorFlow / Keras
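The three stages above can be chained per frame roughly as sketched below. This is only an illustration of the data flow: `recognize_actions` and its three callable parameters are hypothetical names, not functions from this repository or from tf-pose-estimation.

```python
def recognize_actions(frame, estimate_poses, extract_features, classify):
    """Run the three pipeline stages on one frame.

    All three callables are hypothetical stand-ins:
      estimate_poses(frame)      -> list of skeletons, one per detected person
      extract_features(skeleton) -> feature vector for one person
      classify(features)         -> action label for one person
    Returns one action label per detected person.
    """
    skeletons = estimate_poses(frame)
    return [classify(extract_features(skeleton)) for skeleton in skeletons]
```

Because each person is classified independently, the same single-person classifier can serve any number of people in the frame.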

For the benchmarking part, see installation_benchmarking.md.

For the action recognition part, see installation_action_recognition.md.

- See command_benchmarking.md for the commands we ran.

- See the official OpenPose and AlphaPose websites for more detailed run options.

- Copy your dataset (it must be a .csv file) into the /data folder

- Run training.py with the following command:

python3 src/training.py --dataset [dataset_filename]

- The trained model is saved in the /model folder

- To see our multi-person action recognition result using your webcam, run run_detector.py with the following command:

python3 src/run_detector.py --images_source webcam

- OS: Ubuntu 18.04

- CPU: AMD Ryzen Threadripper 1920X (12-core / 24-thread)

- GPU: Nvidia GTX 1080 Ti - 11 GB

- RAM: 64GB

- Webcam: Creative 720p Webcam

Benchmark on a 1920x1080 video with 902 frames, 30fps
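For reference, a 902-frame clip at 30 fps lasts about 30.07 seconds, and a model's throughput is the frame count divided by its processing time. The helper below is a minimal sketch of that arithmetic; the function name and the elapsed time in the usage note are made up for illustration and are not measured results.

```python
def benchmark_summary(num_frames, video_fps, processing_seconds):
    """Summarize one benchmark run from its frame count and wall-clock time."""
    video_seconds = num_frames / video_fps          # playback length of the clip
    processing_fps = num_frames / processing_seconds  # frames processed per second
    return {
        "video_seconds": video_seconds,
        "processing_fps": processing_fps,
        # 1.0 or higher means the model keeps up with the video's frame rate.
        "realtime_factor": processing_fps / video_fps,
    }
```

For example, `benchmark_summary(902, 30, 90.2)` uses a hypothetical elapsed time of 90.2 seconds, which corresponds to roughly 10 frames per second, or about one third of real time.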


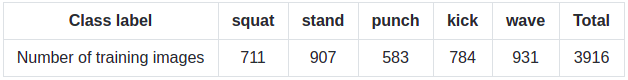

We collected 3916 training images from our laptop's webcam to train the model to classify five actions: squat, stand, punch, kick, and wave. Each training image contains only one person performing one of these five actions. The videos were recorded at 10 fps with a frame size of 640 x 480 and then saved as images.

The examples and the numbers of training images for each action class are shown below:

| squat | stand | punch | kick | wave |
|---|---|---|---|---|
| | | | | |

We used tf-pose-estimation to detect the human pose in each training image. The output skeleton format of OpenPose can be found at OpenPose Demo - Output.

The generated training data files are located in the data folder:

- skeleton_raw.csv: original data

- skeleton_filtered.csv: filtered data where incomplete poses are eliminated
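The filtering step can be sketched as follows. This is a minimal illustration, not the repository's code: it assumes each row starts with 36 values (x0, y0, ..., x17, y17) and that an undetected joint is stored as (0, 0). Both the layout and the function names are assumptions.

```python
def is_complete(row, num_joints=18):
    """Return True if every joint in the row has a detected position.

    Assumes the first 2 * num_joints values are x, y pairs and that a
    missing joint is encoded as (0, 0); any trailing label column is ignored.
    """
    coords = row[: 2 * num_joints]
    if len(coords) < 2 * num_joints:
        return False
    return all(
        not (coords[2 * i] == 0 and coords[2 * i + 1] == 0)
        for i in range(num_joints)
    )


def filter_complete(rows):
    """Keep only the rows whose skeleton is complete."""
    return [row for row in rows if is_complete(row)]
```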

To turn the original skeleton data into input for our neural network, three features are extracted; they are implemented in data_preprocessing.py:

- Head reference: all joint positions are converted to the x-y coordinates relative to the head joint.

- Pose to angle: the 18 joint positions are converted to 8 joint angles: left / right shoulder, left / right elbow, left / right hip, left / right knee.

- Normalization: all joint positions are converted to the x-y coordinates relative to the skeleton bounding box.

We use the third feature (normalization), which gave the best results and robustness.
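The three features can be sketched in plain Python as below. This is an illustrative sketch, not the code in data_preprocessing.py: the joint indices assume the 18-joint COCO ordering used by tf-pose-estimation, the nose is assumed to be the head joint, and the triplets chosen for each angle are assumptions. All function names are hypothetical.

```python
import math

# Assumed 18-joint COCO ordering (an assumption, not taken from the source).
NOSE, NECK = 0, 1
R_SHOULDER, R_ELBOW, R_WRIST = 2, 3, 4
L_SHOULDER, L_ELBOW, L_WRIST = 5, 6, 7
R_HIP, R_KNEE, R_ANKLE = 8, 9, 10
L_HIP, L_KNEE, L_ANKLE = 11, 12, 13

# (a, vertex, c) triplets for the 8 angles; the choice of neighbours is assumed.
ANGLE_TRIPLETS = [
    (NECK, L_SHOULDER, L_ELBOW), (NECK, R_SHOULDER, R_ELBOW),
    (L_SHOULDER, L_ELBOW, L_WRIST), (R_SHOULDER, R_ELBOW, R_WRIST),
    (NECK, L_HIP, L_KNEE), (NECK, R_HIP, R_KNEE),
    (L_HIP, L_KNEE, L_ANKLE), (R_HIP, R_KNEE, R_ANKLE),
]


def head_reference(joints, head=NOSE):
    """Express every joint relative to the head joint."""
    hx, hy = joints[head]
    return [(x - hx, y - hy) for x, y in joints]


def joint_angle(a, b, c):
    """Angle in radians at vertex b between the rays b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate case: two joints coincide
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding error


def pose_to_angles(joints):
    """Convert 18 joint positions to 8 joint angles."""
    return [joint_angle(joints[a], joints[b], joints[c]) for a, b, c in ANGLE_TRIPLETS]


def normalize_to_bbox(joints):
    """Rescale joints to [0, 1] relative to the skeleton bounding box."""
    xs = [x for x, _ in joints]
    ys = [y for _, y in joints]
    min_x, min_y = min(xs), min(ys)
    width = (max(xs) - min_x) or 1.0   # avoid division by zero
    height = (max(ys) - min_y) or 1.0
    return [((x - min_x) / width, (y - min_y) / height) for x, y in joints]
```

Bounding-box normalization removes both the person's position in the frame and their apparent size, which is one plausible reason it is more robust than the head-relative coordinates.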

We built our deep learning model with reference to Online-Realtime-Action-Recognition-based-on-OpenPose. The model is implemented in training.py using Keras and TensorFlow. It consists of three hidden layers and a softmax output layer for 5-class classification.

The generated model is saved in the model folder.
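To make the network shape concrete, here is a framework-free sketch of the forward pass of a fully connected network with three hidden layers and a softmax output over five classes. It is not the Keras code in training.py: the 36 inputs (18 joints x 2 coordinates), the hidden widths, the ReLU activation, and the random untrained weights are all assumptions chosen for illustration.

```python
import math
import random


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    """Numerically stable softmax; outputs are positive and sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def build_network(n_inputs=36, hidden=(128, 64, 16), n_classes=5, seed=0):
    """Create random (untrained) weights; all layer sizes are assumed values."""
    rng = random.Random(seed)
    sizes = [n_inputs, *hidden, n_classes]
    return [
        ([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
         [0.0] * n_out)
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


def predict(network, features):
    """Three ReLU hidden layers followed by a softmax output layer."""
    activations = features
    for weights, biases in network[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = network[-1]
    return softmax(dense(activations, weights, biases))
```

Calling `predict(build_network(), features)` with a 36-value feature vector returns five class probabilities; the predicted action is the class with the highest probability.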