This repo investigates how to utilize mask data in the person re-identification (re-ID) task. Several strategies are explored in our experiments and evaluated on the Market-1501 dataset. Our baseline builds on a strong baseline here and uses ResNet-50 as the backbone.

Strategies for applying mask data:

- Concatenate the mask and the RGB image to form a new 4-channel (RGBM) image.

- Soft and hard attention proposed in MGCAM.

- Spatial and channel attention proposed in CBAM.
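A minimal NumPy sketch of the 4-channel (RGBM) strategy follows. It assumes the mask is an H×W foreground map stored either as 0/1 or as 0/255; `make_rgbm` and `expand_conv1_weight` are hypothetical helpers, not functions from this repo. Because an ImageNet-pretrained ResNet-50 expects 3 input channels, the second helper shows one common heuristic (not necessarily the one used here) for widening the first convolution: the filters for the new mask channel are initialised with the mean of the RGB filters.

```python
import numpy as np


def make_rgbm(rgb: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Stack an (H, W, 3) RGB image and an (H, W) mask into an (H, W, 4) array.

    Assumption: the mask is 0/1 or 0/255; a 0/1 mask is rescaled to 0/255
    so that all four channels share the same value range.
    """
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("rgb must have shape (H, W, 3)")
    if mask.shape != rgb.shape[:2]:
        raise ValueError("mask must have shape (H, W)")
    rgb = rgb.astype(np.float32)
    mask = mask.astype(np.float32)
    if mask.max() <= 1.0:
        mask = mask * 255.0
    # (H, W, 3) + (H, W, 1) -> (H, W, 4)
    return np.concatenate([rgb, mask[..., None]], axis=2)


def expand_conv1_weight(w_rgb: np.ndarray) -> np.ndarray:
    """Widen a pretrained first-conv weight from 3 to 4 input channels.

    w_rgb has shape (out_channels, 3, kH, kW). The extra (mask) channel is
    initialised with the mean of the three RGB filters (a heuristic).
    """
    extra = w_rgb.mean(axis=1, keepdims=True)       # (out, 1, kH, kW)
    return np.concatenate([w_rgb, extra], axis=1)   # (out, 4, kH, kW)
```

Initialising the extra channel from the RGB filters, rather than with zeros or random values, keeps the pretrained first layer's response scale similar while still letting the network learn to use the mask.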

Before running this code, make the following preparations:

- Download the Market-1501 dataset.

- The mask data are available here.

- Install MXNet. This repository has been tested with the official MXNet v1.3.0 release.

- Modify the related settings in the `.yml` files first, then train the model:

  `python train.py` / `python train-mask.py` / `python train-cbam-att.py`

  These three files correspond, respectively, to the experiments on the re-ID baseline, 4-channel soft/hard attention, and spatial/channel attention. More details can be found in the files and their `.yml` files.

- Then use `eval.py` to extract features for a specific test set and evaluate the models.
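R1 in the results is rank-1 accuracy: the percentage of queries whose nearest gallery feature has the same identity. To illustrate what such an evaluation computes, here is a minimal NumPy sketch; `rank1_accuracy` is a hypothetical helper, not this repo's `eval.py`. It follows the usual Market-1501 convention of ignoring gallery images that share both identity and camera with the query, but for brevity it does not handle the dataset's separate junk images and does not compute mAP.

```python
import numpy as np


def rank1_accuracy(q_feat, q_ids, q_cams, g_feat, g_ids, g_cams):
    """Rank-1 accuracy (%) of query features against gallery features.

    Gallery entries with the same identity AND the same camera as the
    query are excluded, as in the standard Market-1501 protocol.
    """
    # L2-normalise so that ||q - g||^2 = 2 - 2 * cos(q, g).
    q = q_feat / np.linalg.norm(q_feat, axis=1, keepdims=True)
    g = g_feat / np.linalg.norm(g_feat, axis=1, keepdims=True)
    dist = 2.0 - 2.0 * q @ g.T  # (num_query, num_gallery)

    hits, valid = 0, 0
    for i in range(len(q)):
        keep = ~((g_ids == q_ids[i]) & (g_cams == q_cams[i]))
        if not np.any(keep & (g_ids == q_ids[i])):
            continue  # no valid ground-truth match left for this query
        valid += 1
        d = np.where(keep, dist[i], np.inf)
        hits += int(g_ids[np.argmin(d)] == q_ids[i])
    return 100.0 * hits / valid if valid else 0.0
```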

- R1 (rank-1 accuracy, %) with RGB/RGBM input and soft/hard attention:

| Input | baseline | soft mask | hard mask |
|---|---|---|---|
| RGB | 91.1 | 90.9 | 90.9 |
| RGBM | 92.4 | 92.6 | 92.6 |
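One plausible reading of the soft/hard distinction, sketched in NumPy under our own assumptions (the MGCAM paper and this repo's `train-mask.py` may differ in detail): a spatial attention map multiplies the feature map either directly (soft) or after being thresholded to 0/1 (hard). `apply_mask_attention` and its `threshold=0.5` default are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def apply_mask_attention(feat, att_logits, mode="soft", threshold=0.5):
    """Weight a (C, H, W) feature map with an (H, W) spatial attention map.

    mode="soft": multiply by the sigmoid attention values in (0, 1).
    mode="hard": multiply by a binary map (sigmoid attention > threshold).
    Returns the attended features and the map that was applied.
    """
    att = sigmoid(att_logits)
    if mode == "hard":
        att = (att > threshold).astype(feat.dtype)
    elif mode != "soft":
        raise ValueError("mode must be 'soft' or 'hard'")
    # Broadcast the (H, W) map over all C channels.
    return feat * att[None, :, :], att
```

The hard variant zeroes out background positions completely, while the soft variant only down-weights them; note that the thresholding step has zero gradient almost everywhere, so gradients cannot flow through the threshold itself.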

- R1 (rank-1 accuracy, %) with spatial and channel attention:

| Input | baseline | channel | spatial | spatial+channel |
|---|---|---|---|---|
| RGB | 91.1 | 91.5 | 90.3 | 91.8 |
| RGBM | 92.4 | 93.6 | 91.2 | 92.4 |
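The CBAM modules can be summarised as a forward pass: channel attention scales each channel by sigmoid(MLP(avg-pool) + MLP(max-pool)) with a shared two-layer MLP, and spatial attention scales each position by the sigmoid of a k×k convolution over the channel-wise average and max maps (k = 7 in the CBAM paper). The NumPy sketch below is illustrative only: it uses bias-free MLP layers, takes the weights as plain arrays, and is not the MXNet implementation in `train-cbam-att.py`.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """CBAM channel attention for a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) form the shared two-layer MLP.
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer

    avg = feat.mean(axis=(1, 2))  # (C,)
    mx = feat.max(axis=(1, 2))    # (C,)
    scale = sigmoid(mlp(avg) + mlp(mx))
    return feat * scale[:, None, None]


def spatial_attention(feat, kernel):
    """CBAM spatial attention; kernel has shape (2, k, k) with odd k."""
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = feat.shape[1:]
    logits = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return feat * sigmoid(logits)[None, :, :]


def cbam(feat, w1, w2, kernel):
    """Channel attention followed by spatial attention, as in CBAM."""
    return spatial_attention(channel_attention(feat, w1, w2), kernel)
```

Applying channel attention before spatial attention follows the sequential arrangement described in the CBAM paper; the "channel" and "spatial" columns above correspond to using only one of the two modules.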

- This repo also include the codes for evaluating the occlusion in reid task, i.e.

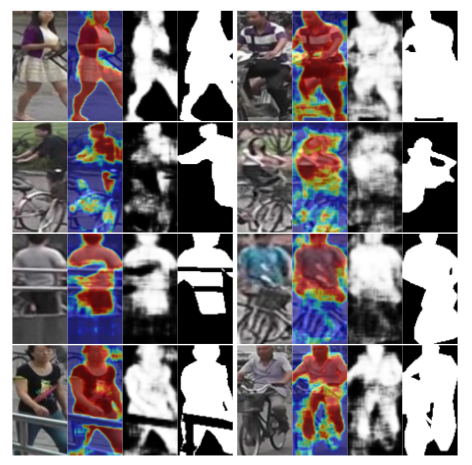

eval_verify.py, and related list processing files inutilsdir. - The attention map of RGB-soft mask model are displayed below. Four image are taken as a group, in which they are arranged as RGB original image, GCAM visual map, attention map and mask ground truth.