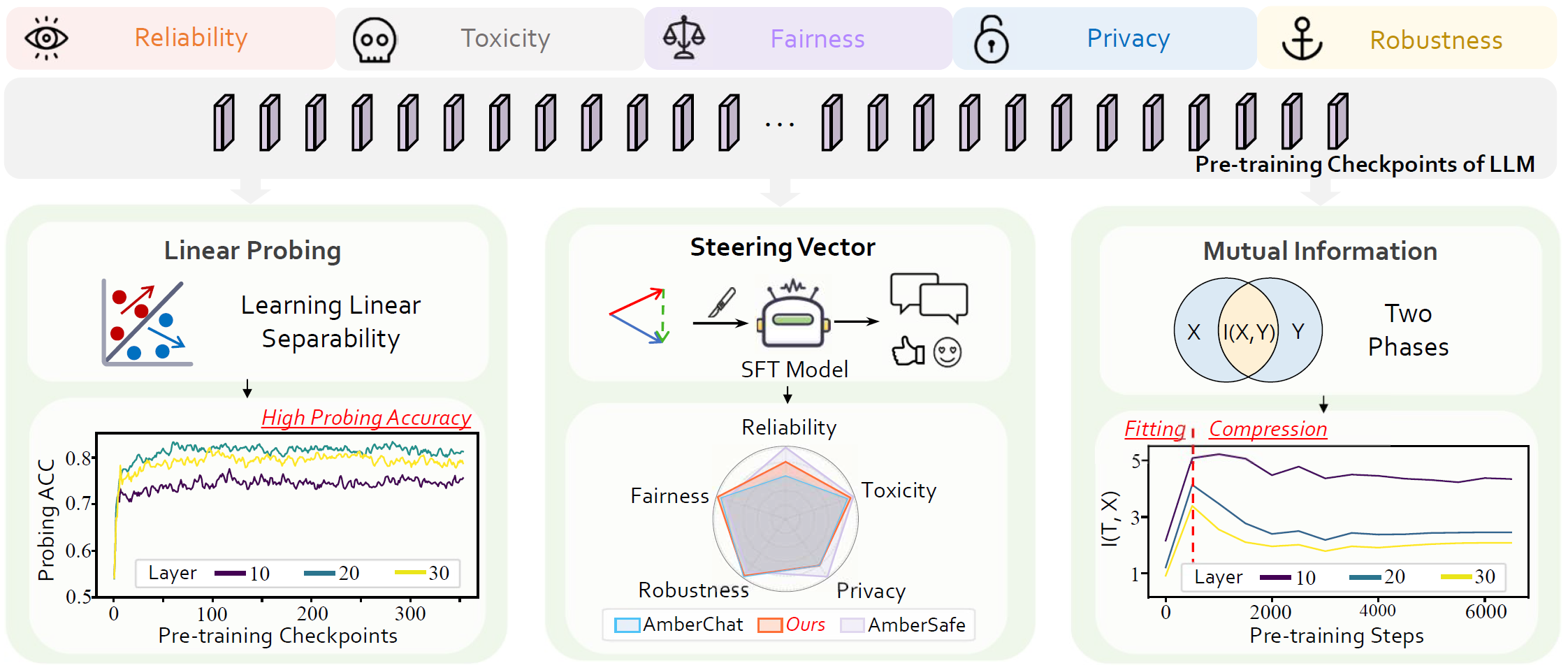

We are excited to present "Towards Tracing Trustworthiness Dynamics: Revisiting Pre-training Period of Large Language Models," a pioneering study exploring trustworthiness in LLMs during pre-training. We explore five key dimensions of trustworthiness: reliability, privacy, toxicity, fairness, and robustness. By applying linear probing to LLMs' pre-training checkpoints and extracting steering vectors from them, we aim to uncover the potential of pre-training for enhancing LLMs' trustworthiness. Furthermore, we investigate the dynamics of trustworthiness during pre-training through mutual information estimation and observe a two-phase phenomenon: fitting and compression. Our findings offer new insights and encourage further work on improving the trustworthiness of LLMs from an early stage.

We want to ANSWER:

- How do LLMs dynamically encode trustworthiness during pre-training?

- How can the pre-training period be harnessed for more trustworthy LLMs?

We FIND that:

- After the early pre-training period, middle layer representations of LLMs have already developed linearly separable patterns about trustworthiness.

- Steering vectors extracted from pre-training checkpoints show promise for enhancing the SFT model’s trustworthiness.

- During the pre-training period of LLMs, there are two distinct phases regarding trustworthiness: fitting and compression.

conda env create -f environment.yml

Tip: Before running the scripts, replace the model storage path in `src/generate_activations.py` and `src/eval_trustworthiness.py` with your actual model storage path.

1. Run the Linear Probing Experiments

cd src/

sh scripts/probing.sh
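For readers new to linear probing, the idea behind this step can be illustrated with a minimal, self-contained sketch. It is not the code in this repository: the function names (`train_linear_probe`, `probe_accuracy`, `make_toy_activations`) are hypothetical, the "activations" are synthetic Gaussian clusters rather than real LLM hidden states, and the probe is a plain logistic regression trained by gradient descent. High held-out accuracy of such a linear classifier is what "linearly separable" means in the findings above.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_linear_probe(features, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression probe (weights and bias) with batch gradient descent."""
    dim = len(features[0])
    n = len(features)
    weights = [0.0] * dim
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for x, y in zip(features, labels):
            logit = sum(w * xi for w, xi in zip(weights, x)) + bias
            err = sigmoid(logit) - y  # gradient of the log loss w.r.t. the logit
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def probe_accuracy(weights, bias, features, labels):
    """Fraction of examples whose label matches the sign of the probe's logit."""
    correct = 0
    for x, y in zip(features, labels):
        logit = sum(w * xi for w, xi in zip(weights, x)) + bias
        correct += int((logit > 0) == bool(y))
    return correct / len(labels)


def make_toy_activations(n_per_class, dim, shift, seed):
    """Synthetic stand-in for layer activations: two Gaussian clusters (an assumption)."""
    rng = random.Random(seed)
    features, labels = [], []
    for label in (0, 1):
        center = shift if label == 1 else -shift
        for _ in range(n_per_class):
            features.append([rng.gauss(center, 1.0) for _ in range(dim)])
            labels.append(label)
    return features, labels


train_x, train_y = make_toy_activations(100, 8, shift=1.0, seed=0)
test_x, test_y = make_toy_activations(50, 8, shift=1.0, seed=1)
weights, bias = train_linear_probe(train_x, train_y)
accuracy = probe_accuracy(weights, bias, test_x, test_y)
print(f"probe accuracy on held-out toy activations: {accuracy:.2f}")
```

In practice the features would be hidden states collected from one layer of one checkpoint, and the probe would typically be trained with a numerical library rather than pure Python; the sketch only shows the shape of the computation.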

2. Run the Steering Vector Experiments (Section 3: Controlling Trustworthiness via the Steering Vectors from Pre-training Checkpoints)

cd src/

sh scripts/steering.sh
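As a rough illustration of what a steering vector is, the sketch below uses one common construction: the difference between the mean activations of desirable and undesirable examples, added to a hidden state with a scaling factor. This is an assumption for illustration and may differ from what `scripts/steering.sh` actually runs; the function names and the `alpha` parameter are hypothetical, and the activations are toy three-dimensional lists.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def extract_steering_vector(positive_acts, negative_acts):
    """Mean activation of positive examples minus mean activation of negative examples."""
    pos_mean = mean_vector(positive_acts)
    neg_mean = mean_vector(negative_acts)
    return [p - n for p, n in zip(pos_mean, neg_mean)]


def apply_steering(hidden_state, steering_vector, alpha=1.0):
    """Shift a hidden state along the steering direction, scaled by alpha."""
    return [h + alpha * s for h, s in zip(hidden_state, steering_vector)]


# Toy activations standing in for hidden states from a pre-training checkpoint.
positive_acts = [[1.0, 2.0, 0.0], [3.0, 2.0, 0.0]]
negative_acts = [[0.0, 0.0, 0.0], [0.0, 2.0, 0.0]]

steering = extract_steering_vector(positive_acts, negative_acts)
steered = apply_steering([0.5, 0.5, 0.5], steering, alpha=2.0)
print("steering vector:", steering)
print("steered hidden state:", steered)
```

With a real model, the shift would be applied to a chosen layer's activations during the forward pass, and the strength `alpha` would need to be tuned so that the model's general capabilities are preserved.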

Distributed under the Apache-2.0 License. See LICENSE for more information.

@article{qian2024towards,
  title={Towards Tracing Trustworthiness Dynamics: Revisiting Pre-training Period of Large Language Models},
  author={Qian, Chen and Zhang, Jie and Yao, Wei and Liu, Dongrui and Yin, Zhenfei and Qiao, Yu and Liu, Yong and Shao, Jing},
  journal={arXiv preprint arXiv:2402.19465},
  year={2024}
}