This repository tries to solve the task of fovea sampled reconstruction and video super resolution with an architecture partly based on the DeepFovea paper by Anton S. Kaplanyan et al. (Facebook Research). [1]

Our final paper can be found here.

- Add Padé activation unit implementation [7]
- Prepare the YouTube-8M dataset used in the DeepFovea paper
- Adapt the implementation to reproduce the original DeepFovea
- Run test with the original DeepFovea model
- Implement axial-attention module
- Implement standalone learnable convex upsampling module
- Use RAFT for optical flow estimation instead of PWC-Net
- Run first test

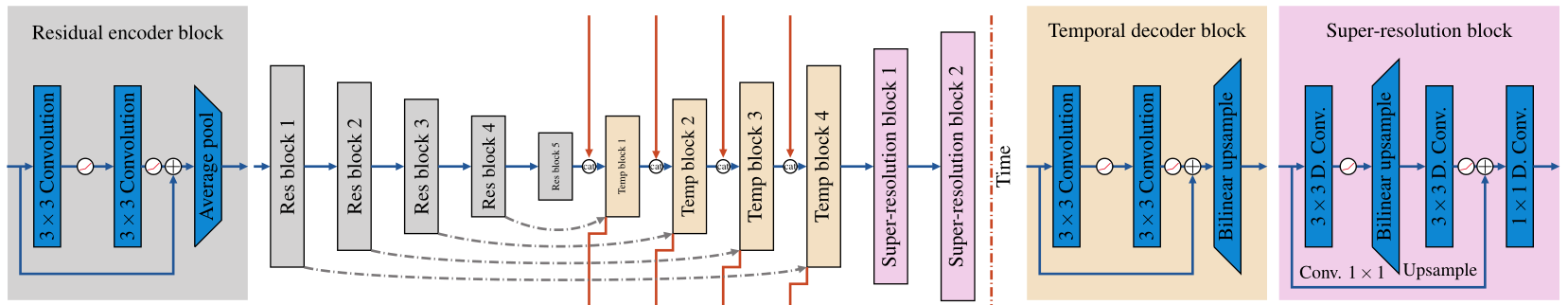

To reach the desired super-resolution (4 times the input resolution in each spatial dimension), two additional blocks are appended at the end of the generator network. These so-called super-resolution blocks are based on two (for the final block three) deformable convolutions and a bilinear upsampling operation. [1]
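The resolution bookkeeping of the two super-resolution blocks, and the bilinear upsampling step inside them, can be sketched in plain Python. This is a minimal sketch, not the repository's code: it assumes each block upsamples by a factor of 2 and uses the half-pixel (`align_corners=False`) convention of PyTorch's `torch.nn.functional.interpolate(..., mode="bilinear")`; the deformable convolutions are omitted, and `bilinear_upsample` is a hypothetical helper.

```python
import math


def _source_indices(n_in, scale):
    """For each output position along one axis, return (i0, i1, weight).

    Uses the half-pixel convention (align_corners=False) and clamps
    coordinates that fall outside the input to the border pixel.
    """
    result = []
    for o in range(n_in * scale):
        s = (o + 0.5) / scale - 0.5
        s = min(max(s, 0.0), n_in - 1.0)  # clamp to the valid input range
        i0 = int(math.floor(s))
        i1 = min(i0 + 1, n_in - 1)
        result.append((i0, i1, s - i0))
    return result


def bilinear_upsample(image, scale=2):
    """Bilinearly upsample a 2D list-of-lists image by an integer factor."""
    rows = _source_indices(len(image), scale)
    cols = _source_indices(len(image[0]), scale)
    out = []
    for r0, r1, wr in rows:
        line = []
        for c0, c1, wc in cols:
            # Interpolate horizontally on the two source rows, then vertically.
            top = (1 - wc) * image[r0][c0] + wc * image[r0][c1]
            bottom = (1 - wc) * image[r1][c0] + wc * image[r1][c1]
            line.append((1 - wr) * top + wr * bottom)
        out.append(line)
    return out


# Two x2 blocks give the overall x4 factor: 192 x 256 -> 384 x 512 -> 768 x 1024.
height, width = 192, 256
for _ in range(2):
    height, width = 2 * height, 2 * width
print(height, width)  # 768 1024

small = [[0.0, 1.0], [2.0, 3.0]]
up = bilinear_upsample(bilinear_upsample(small))
print(len(up), len(up[0]))  # 8 8
```

Splitting the factor 4 into two factor-2 stages lets each block refine features at an intermediate resolution before the next upsampling step.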

We apply the same losses as the original paper and additionally add a supervised loss. For this supervised loss, the adaptive robust loss function by Jonathan T. Barron was chosen. [1, 4]
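For reference, Barron's general robust loss is ρ(x, α, c) = |α − 2| / α · (((x / c)² / |α − 2| + 1)^(α/2) − 1), where α controls robustness and c the scale. The adaptive variant used here additionally learns α and c by minimizing a negative log-likelihood; the sketch below covers only the loss for fixed α and c and is not the implementation used in this repository. The special cases α = 2, 0 and −∞ are limits of the formula and are handled explicitly.

```python
import math


def general_robust_loss(x, alpha, c):
    """Barron's general robust loss rho(x, alpha, c) for fixed alpha and c.

    alpha = 2 (L2), alpha = 0 (Cauchy) and alpha = -inf (Welsch) are limits
    of the general formula and are therefore handled as special cases.
    """
    z = (x / c) ** 2
    if alpha == 2.0:
        return 0.5 * z
    if alpha == 0.0:
        return math.log(0.5 * z + 1.0)
    if math.isinf(alpha) and alpha < 0:
        return 1.0 - math.exp(-0.5 * z)
    b = abs(alpha - 2.0)
    return (b / alpha) * ((z / b + 1.0) ** (alpha / 2.0) - 1.0)


# Smaller alpha values penalize the same residual less, i.e. are more robust to outliers.
for alpha in (2.0, 1.0, 0.0, -2.0, -math.inf):
    print(alpha, general_robust_loss(2.0, alpha, c=1.0))
```

With α = 1 the loss reduces to the Charbonnier (pseudo-Huber) loss, and with α = −2 to the Geman-McClure loss, which is why a learnable α lets the training choose between L2-like and strongly outlier-tolerant behavior.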

We compute each loss independently, since otherwise we run out of VRAM (Tesla V100, 16 GB).

This implementation uses the adaptive robust loss implementation by Jonathan T. Barron. Furthermore, deformable convolutions V2 are used in the generator network; for these, the implementation of Dazhi Cheng is utilized.

For the PWC-Net, the implementation and pre-trained weights of Nvidia Research are used. Additionally, the PWC-Net and the flow loss implementation depend on the correlation and resample packages of the PyTorch FlowNet2 implementation by Nvidia. To install these packages, run `python setup.py install` for each package; the `setup.py` file is located in the corresponding folder. [2, 4, 5, 6]
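The correlation package computes a cost volume: for every pixel, the features of the first frame are compared with the features of the second frame at a set of displacements. The pure-Python sketch below illustrates the idea under stated assumptions (kernel size 1, stride 1, zero padding, normalization by the channel count); it is not the CUDA kernel shipped with FlowNet2, and `correlation` is a hypothetical helper.

```python
def correlation(f1, f2, max_displacement=1):
    """Cost volume between two feature maps (kernel size 1, stride 1).

    f1, f2: nested lists indexed [channel][y][x]. Returns a dict mapping each
    displacement (dy, dx) to an H x W map of channel-averaged dot products.
    Positions displaced outside f2 contribute zero (zero padding).
    """
    channels, height, width = len(f1), len(f1[0]), len(f1[0][0])
    d = max_displacement
    volume = {}
    for dy in range(-d, d + 1):
        for dx in range(-d, d + 1):
            cost = [[0.0] * width for _ in range(height)]
            for y in range(height):
                for x in range(width):
                    y2, x2 = y + dy, x + dx
                    if 0 <= y2 < height and 0 <= x2 < width:
                        dot = sum(f1[c][y][x] * f2[c][y2][x2] for c in range(channels))
                        cost[y][x] = dot / channels
            volume[(dy, dx)] = cost
    return volume


# A single bright feature moves one pixel to the right between the two frames.
f1 = [[[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]]
f2 = [[[0.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]]
volume = correlation(f1, f2)
best = max(volume, key=lambda k: volume[k][1][1])
print(best)  # (0, 1)
```

The displacement with the highest matching cost is a coarse estimate of the motion at that pixel, which the flow network then refines.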

All additional required packages are listed in `requirements.txt` and can be installed by running `pip install -r requirements.txt`.

```bash
git clone https://github.com/ChristophReich1996/Deep_Fovea_Architecture_for_Video_Super_Resolution
cd Deep_Fovea_Architecture_for_Video_Super_Resolution
pip install -r requirements.txt
cd correlation && python setup.py install && cd ..
cd resample && python setup.py install && cd ..
git clone https://github.com/chengdazhi/Deformable-Convolution-V2-PyTorch
cd Deformable-Convolution-V2-PyTorch
git checkout pytorch_1.0.0
python setup.py build install
```

To perform training, validation, testing or inference, run the `main.py` file with the corresponding arguments shown below.

| Argument | Default value | Info |
|---|---|---|
| `--train` | False | Binary flag. If set, training will be performed. |
| `--val` | False | Binary flag. If set, validation will be performed. |
| `--test` | False | Binary flag. If set, testing will be performed. |
| `--inference` | False | Binary flag. If set, inference will be performed. |
| `--inference_data` | "" | Path to inference data to be loaded. |
| `--cuda_devices` | "0" | String of CUDA device indexes to be used. Indexes must be separated by a comma. |
| `--data_parallel` | False | Binary flag. Set if multi-GPU training should be utilized. |
| `--load_model` | "" | Path to model to be loaded. |
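The interface in the table can be mirrored with Python's standard `argparse` module. This is a hypothetical re-creation for illustration only; the actual `main.py` may declare and consume its arguments differently, and `cuda_device_list` is an assumed helper.

```python
import argparse


def build_parser():
    """Hypothetical re-creation of the documented CLI (not main.py itself)."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--train", action="store_true", help="Perform training.")
    parser.add_argument("--val", action="store_true", help="Perform validation.")
    parser.add_argument("--test", action="store_true", help="Perform testing.")
    parser.add_argument("--inference", action="store_true", help="Perform inference.")
    parser.add_argument("--inference_data", type=str, default="",
                        help="Path to inference data to be loaded.")
    parser.add_argument("--cuda_devices", type=str, default="0",
                        help="Comma-separated CUDA device indexes.")
    parser.add_argument("--data_parallel", action="store_true",
                        help="Utilize multiple GPUs.")
    parser.add_argument("--load_model", type=str, default="",
                        help="Path to model to be loaded.")
    return parser


def cuda_device_list(cuda_devices):
    """Turn a device string such as "0,1" into a list of ints, e.g. [0, 1]."""
    return [int(index) for index in cuda_devices.split(",") if index.strip()]


args = build_parser().parse_args(["--train", "--cuda_devices", "0,1", "--data_parallel"])
print(args.train, args.val, cuda_device_list(args.cuda_devices))  # True False [0, 1]
```

On the command line, the equivalent multi-GPU training call would be `python main.py --train --cuda_devices "0,1" --data_parallel`.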

When applying the fovea sampling, we sample approximately 19.7% of the pixels of the low resolution (192 X 256) input image. This corresponds to 1.2% of the pixels of the high resolution (768 X 1024) label. Each sequence consists of 6 consecutive frames.
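The 1.2% figure follows from the area ratio: the label has 4 × 4 = 16 times as many pixels as the input, so 19.7% / 16 ≈ 1.23%. The sketch below generates a foveated sampling mask and checks this arithmetic. The sampling pattern itself is an assumption: it uses a Gaussian density falloff around a gaze point plus a constant peripheral floor, rescaled so that about 19.7% of the pixels are sampled, which is not necessarily the pattern used in this repository or in DeepFovea. `fovea_sampling_mask` and its parameters are hypothetical.

```python
import math
import random


def fovea_sampling_mask(height, width, gaze, target_fraction=0.197,
                        sigma=50.0, floor=0.05, seed=0):
    """Hypothetical foveated sampling mask; True marks a sampled pixel.

    The sampling probability falls off as a Gaussian around the gaze point,
    with a small constant floor for the periphery, and is rescaled so that
    on average `target_fraction` of all pixels are sampled.
    """
    gy, gx = gaze
    density = [
        [floor + (1.0 - floor) * math.exp(-((y - gy) ** 2 + (x - gx) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]
    scale = target_fraction * height * width / sum(map(sum, density))
    rng = random.Random(seed)
    # Probabilities are clipped to 1; for a centered gaze with these
    # parameters the rescaled density stays below 1 everywhere.
    return [[rng.random() < min(1.0, scale * d) for d in row] for row in density]


low_h, low_w, high_h, high_w = 192, 256, 768, 1024
mask = fovea_sampling_mask(low_h, low_w, gaze=(low_h // 2, low_w // 2))
fraction = sum(map(sum, mask)) / (low_h * low_w)
# The same samples, measured against the 16x larger label.
high_res_fraction = fraction * (low_h * low_w) / (high_h * high_w)
print(f"{fraction:.1%} of the input, {high_res_fraction:.1%} of the label")
```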

Results of the training run started on 02.05.2020. In this training run, the recurrent tensor of each temporal block was reset after each full video.

Low resolution (192 X 256) fovea sampled input image

High resolution (768 X 1024) reconstructed prediction of the generator

High resolution (768 X 1024) label [3]

Results of the training run started on 04.05.2020. In this training run, the recurrent tensor of each temporal block was not reset after each full video.

Low resolution (192 X 256) fovea sampled input image

High resolution (768 X 1024) reconstructed prediction of the generator

High resolution (768 X 1024) label [3]
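The only difference between the two runs is whether the recurrent state is cleared at video boundaries. The toy sketch below illustrates this with a hypothetical `ToyTemporalBlock` whose state is a single number updated as `state = decay * state + frame`; the real temporal blocks carry a feature tensor, so this only shows the reset semantics, not the architecture.

```python
class ToyTemporalBlock:
    """Hypothetical stand-in for a recurrent temporal block (scalar state)."""

    def __init__(self, decay=0.5):
        self.decay = decay
        self.state = None

    def reset(self):
        """Forget everything seen so far."""
        self.state = None

    def __call__(self, frame):
        self.state = frame if self.state is None else self.decay * self.state + frame
        return self.state


def run(videos, reset_per_video):
    """Feed all frames of all videos through one block, optionally resetting per video."""
    block = ToyTemporalBlock()
    outputs = []
    for video in videos:
        if reset_per_video:
            block.reset()
        outputs.append([block(frame) for frame in video])
    return outputs


videos = [[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]]
print(run(videos, reset_per_video=True))   # [[1.0, 1.5, 1.75], [0.0, 0.0, 0.0]]
print(run(videos, reset_per_video=False))  # [[1.0, 1.5, 1.75], [0.875, 0.4375, 0.21875]]
```

Without the reset, information from the previous video leaks into the first frames of the next one.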

Table: Validation results after approximately 48 h of training (the test set has not been published yet).

| REDS Dataset | L1↓ | L2↓ | PSNR↑ | SSIM↑ |
|---|---|---|---|---|
| DeepFovea++ (reset rec. tensor) | 0.0701 | 0.0117 | 22.6681 | 0.9116 |
| DeepFovea++ (not reset rec. tensor) | 0.0610 | 0.0090 | 23.8755 | 0.9290 |
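The L1, L2 and PSNR columns follow standard definitions. The sketch below shows them for flattened pixel lists, assuming pixel values in [0, 1] and L2 as the mean squared error; how this repository aggregates the metrics over frames and videos is not stated here, and SSIM is omitted.

```python
import math


def l1_error(prediction, label):
    """Mean absolute error over flattened pixel lists."""
    return sum(abs(p - t) for p, t in zip(prediction, label)) / len(label)


def l2_error(prediction, label):
    """Mean squared error over flattened pixel lists."""
    return sum((p - t) ** 2 for p, t in zip(prediction, label)) / len(label)


def psnr(prediction, label, max_value=1.0):
    """Peak signal-to-noise ratio in dB, assuming pixel values in [0, max_value]."""
    mse = l2_error(prediction, label)
    return float("inf") if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


prediction = [0.1, 0.1, 0.1, 0.1]
label = [0.0, 0.0, 0.0, 0.0]
print(round(l1_error(prediction, label), 6), round(psnr(prediction, label), 6))  # 0.1 20.0
```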

The visual impression, however, suggests the opposite: the results from the training run in which the recurrent tensor is reset after each full video look more realistic.

The corresponding pre-trained models, additional plots and metrics can be found in the `results` folder.

We also experimented with loss functions at multiple resolution stages. This, however, led to a significant performance drop. The corresponding code can be found in the experimental branch.

```
[1] @article{deepfovea,
  title={DeepFovea: neural reconstruction for foveated rendering and video compression using learned statistics of natural videos},
  author={Kaplanyan, Anton S and Sochenov, Anton and Leimk{\"u}hler, Thomas and Okunev, Mikhail and Goodall, Todd and Rufo, Gizem},
  journal={ACM Transactions on Graphics (TOG)},
  volume={38},
  number={6},
  pages={1--13},
  year={2019},
  publisher={ACM New York, NY, USA}
}

[2] @inproceedings{deformableconv2,
  title={Deformable convnets v2: More deformable, better results},
  author={Zhu, Xizhou and Hu, Han and Lin, Stephen and Dai, Jifeng},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={9308--9316},
  year={2019}
}

[3] @InProceedings{reds,
  author = {Nah, Seungjun and Baik, Sungyong and Hong, Seokil and Moon, Gyeongsik and Son, Sanghyun and Timofte, Radu and Lee, Kyoung Mu},
  title = {NTIRE 2019 Challenge on Video Deblurring and Super-Resolution: Dataset and Study},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
  month = {June},
  year = {2019}
}

[4] @inproceedings{adaptiveroubustloss,
  title={A general and adaptive robust loss function},
  author={Barron, Jonathan T},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={4331--4339},
  year={2019}
}

[5] @inproceedings{flownet2,
  title={Flownet 2.0: Evolution of optical flow estimation with deep networks},
  author={Ilg, Eddy and Mayer, Nikolaus and Saikia, Tonmoy and Keuper, Margret and Dosovitskiy, Alexey and Brox, Thomas},
  booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},
  pages={2462--2470},
  year={2017}
}

[6] @inproceedings{pwcnet,
  title={Pwc-net: Cnns for optical flow using pyramid, warping, and cost volume},
  author={Sun, Deqing and Yang, Xiaodong and Liu, Ming-Yu and Kautz, Jan},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={8934--8943},
  year={2018}
}

[7] @article{molina2019pad,
  title={{Pad\'e} Activation Units: End-to-end Learning of Flexible Activation Functions in Deep Networks},
  author={Molina, Alejandro and Schramowski, Patrick and Kersting, Kristian},
  journal={arXiv preprint arXiv:1907.06732},
  year={2019}
}
```