An End-to-End Reinforcement Learning Approach for Job-Shop Scheduling Problems Based on Constraint Programming

This repository contains the source code for the paper "An End-to-End Reinforcement Learning Approach for Job-Shop Scheduling Problems Based on Constraint Programming". This work proposes an approach to designing a Reinforcement Learning (RL) environment using Constraint Programming (CP), and a training algorithm that does not rely on any custom reward or observation, for the job-shop scheduling (JSS) problem.

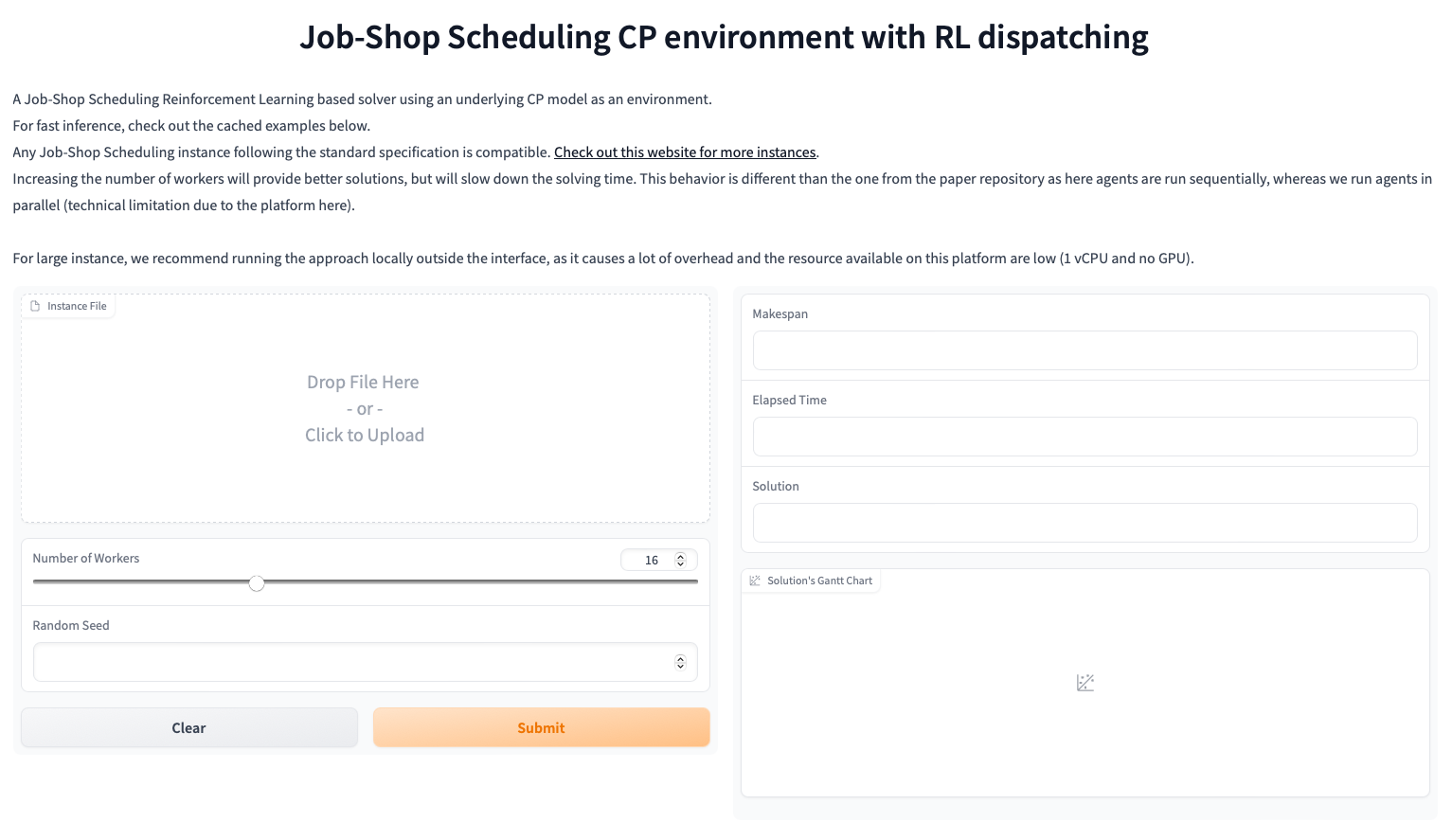

Check out our Hugging Face 🤗 Space demo:

To use the code, first clone the repository:

    git clone https://github.com/ingambe/End2End-Job-Shop-Scheduling-CP.git

It is recommended to create a new virtual environment (optional) and install the required dependencies using:

    pip install -r requirements.txt

The main.py script allows training the agent from scratch:

    python main.py

You can train your agent on different instances by replacing the files in the instances_train/ folder.
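For readers preparing their own training instances, the sketch below parses a single instance from text. It assumes the widely used plain-text job-shop layout: a header line with the number of jobs and machines, then one line per job listing machine/duration pairs in processing order. This README does not state the expected format, so compare against the files shipped in instances_train/ before relying on it. The function name parse_instance and the toy instance are hypothetical.

```python
from typing import List, Tuple

# One job is an ordered list of (machine_id, duration) operations.
Job = List[Tuple[int, int]]


def parse_instance(text: str) -> Tuple[int, int, List[Job]]:
    """Parse an instance in the ASSUMED layout:

    line 1:  <n_jobs> <n_machines>
    line k:  <machine> <duration> <machine> <duration> ...  (one line per job)
    """
    rows = [line.split() for line in text.strip().splitlines() if line.strip()]
    n_jobs, n_machines = int(rows[0][0]), int(rows[0][1])
    jobs: List[Job] = []
    for row in rows[1:1 + n_jobs]:
        values = [int(v) for v in row]
        # Even positions hold machine ids, odd positions hold durations.
        jobs.append(list(zip(values[0::2], values[1::2])))
    return n_jobs, n_machines, jobs


# Hypothetical 2-job, 2-machine toy instance, for illustration only.
TOY_TEXT = """2 2
0 3 1 2
1 2 0 4
"""

n_jobs, n_machines, jobs = parse_instance(TOY_TEXT)
print(n_jobs, n_machines, jobs)
```

Running a check like this on a new file before copying it into instances_train/ can catch malformed rows early, provided the assumed layout matches what the training script reads.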

The pre-trained checkpoint of the neural network is saved in the checkpoint.pt file.

The fast_solve.py script solves the job-shop scheduling instances stored in the instances_run/ folder and outputs the results in a results.csv file. For better performance, it is recommended to run the script with the -O argument:

    python -O fast_solve.py

To obtain the solutions using the dispatching heuristics (FIFO, MTWR, etc.), you can execute the script static_dispatching/benchmark_static_dispatching.py.
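To illustrate what a static dispatching heuristic does, here is a minimal sketch of two priority rules on a toy instance. It is not the code in static_dispatching/benchmark_static_dispatching.py. The function dispatch, the tie-breaking by job index, and the reading of MTWR as "most total work remaining" and FIFO as "job whose next operation became available earliest" are all assumptions made for this example.

```python
from typing import List, Tuple

Job = List[Tuple[int, int]]  # ordered (machine_id, duration) operations


def dispatch(jobs: List[Job], rule: str = "MTWR"):
    """Greedy list scheduling: repeatedly pick one job by a priority rule
    and schedule its next operation as early as possible."""
    n_machines = 1 + max(m for job in jobs for m, _ in job)
    next_op = [0] * len(jobs)           # index of each job's next operation
    job_ready = [0] * len(jobs)         # time each job's previous op ends
    machine_ready = [0] * n_machines    # time each machine becomes free
    remaining = [sum(d for _, d in job) for job in jobs]
    schedule = []                       # (job, op_index, machine, start, end)

    while True:
        candidates = [j for j in range(len(jobs)) if next_op[j] < len(jobs[j])]
        if not candidates:
            break
        if rule == "MTWR":
            # Most total work remaining; ties go to the lower job index.
            j = max(candidates, key=lambda k: (remaining[k], -k))
        elif rule == "FIFO":
            # Earliest-available job first; ties go to the lower job index.
            j = min(candidates, key=lambda k: (job_ready[k], k))
        else:
            raise ValueError(f"unknown rule: {rule}")

        machine, duration = jobs[j][next_op[j]]
        start = max(job_ready[j], machine_ready[machine])
        end = start + duration
        schedule.append((j, next_op[j], machine, start, end))
        job_ready[j] = machine_ready[machine] = end
        remaining[j] -= duration
        next_op[j] += 1

    makespan = max(entry[4] for entry in schedule)
    return schedule, makespan


# Hypothetical 2-job, 2-machine instance, for illustration only.
TOY_JOBS = [
    [(0, 3), (1, 2)],
    [(1, 2), (0, 4)],
]

for rule in ("FIFO", "MTWR"):
    _, makespan = dispatch(TOY_JOBS, rule)
    print(rule, makespan)
```

On an instance this small both rules reach the same makespan; differences between rules typically show up only on larger instances.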

The environment can be installed separately as a standalone package using:

    pip install jss_cp

For extra performance, the code is compiled using MyPyC. Check out the environment repository: https://github.com/ingambe/JobShopCPEnv

If you use this environment in your research, please cite the following paper:

TO BE PUBLISHED

MIT License