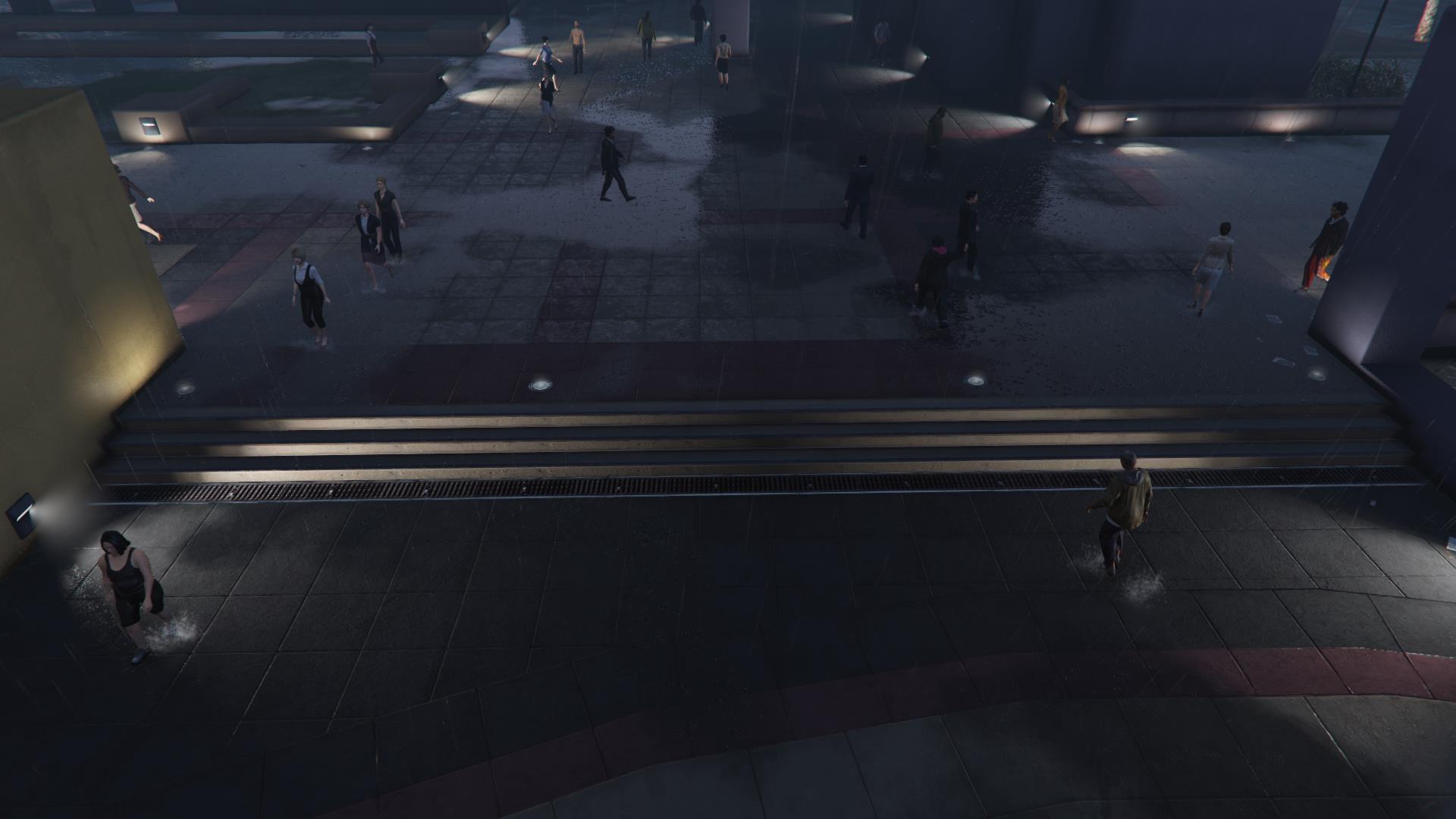

This repo contains the code relating to the paper submitted to CVPR 2020 (Paper ID: 7884) with the instructions for training and testing our models on the JTA dataset.

| Input | Prediction |
|---|---|

- Run `python demo.py --ex=1` (python >= 3.6)
    - Please wait a few seconds: it will display some precomputed results. You can change the `ex` number from 1 to 3 to see different results.
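
The demo step can be scripted to cycle through all three precomputed examples. This is a minimal sketch, not part of the repository: it assumes it is launched from the repository root (where `demo.py` lives) and that each `demo.py` run exits once its results have been shown. `demo_command` is a hypothetical helper.

```python
import subprocess
import sys
from typing import List

# Per the README, --ex accepts the values 1 to 3.
EXAMPLE_IDS = (1, 2, 3)


def demo_command(ex: int) -> List[str]:
    """Build the command line for one precomputed demo example (hypothetical helper)."""
    if ex not in EXAMPLE_IDS:
        raise ValueError("--ex must be one of {}, got {}".format(EXAMPLE_IDS, ex))
    # Reuse the current interpreter so the same Python (>= 3.6) environment runs the demo.
    return [sys.executable, "demo.py", "--ex={}".format(ex)]


if __name__ == "__main__":
    # ASSUMPTION: run from the repository root, next to demo.py.
    for ex in EXAMPLE_IDS:
        # subprocess.run waits for demo.py to exit before starting the next example.
        subprocess.run(demo_command(ex), check=True)
```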


- `cd` into the folder `nms3d` and run `python setup.py install` (python >= 3.6). Make sure to add your CUDA directory to your environment variables.
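
Before building `nms3d`, a quick environment check can catch a missing CUDA setup early. This sketch assumes (not confirmed by this README) that `nms3d` is compiled with PyTorch's `torch.utils.cpp_extension`, which locates the CUDA toolkit through the `CUDA_HOME` or `CUDA_PATH` variables and needs `nvcc` to compile CUDA sources. `cuda_env_problems` is a hypothetical helper.

```python
import os
import shutil
from typing import List


def cuda_env_problems() -> List[str]:
    """Return warnings about the CUDA environment; an empty list means nothing obvious is wrong."""
    problems = []
    # ASSUMPTION: nms3d is built with torch.utils.cpp_extension, which reads
    # CUDA_HOME (or CUDA_PATH) to locate the CUDA toolkit.
    cuda_home = os.environ.get("CUDA_HOME") or os.environ.get("CUDA_PATH")
    if not cuda_home:
        problems.append("CUDA_HOME (or CUDA_PATH) is not set")
    elif not os.path.isdir(cuda_home):
        problems.append("CUDA directory does not exist: " + cuda_home)
    # The CUDA compiler must be reachable to build .cu sources.
    if shutil.which("nvcc") is None:
        problems.append("nvcc not found on PATH")
    return problems


if __name__ == "__main__":
    issues = cuda_env_problems()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("CUDA environment looks OK")
```

The check only reports problems and never modifies the environment, so it is safe to run before every build.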

- Download the JTA dataset into `<your_jta_path>`
- Run `python to_poses.py --out_dir_path='poses' --format='torch'` (link) to generate the `<your_jta_path>/poses` directory
- Run `python to_imgs.py --out_dir_path='frames' --img_format='jpg'` (link) to generate the `<your_jta_path>/frames` directory
- Download our precomputed codes from here and unzip them into `<your_jta_path>`
- Modify the `conf/default.yaml` configuration file, specifying the path to the JTA dataset directory:
    - `JTA_PATH: <your_jta_path>`
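
The configuration edit can be automated, together with a check that the `poses` and `frames` directories were generated. This is a minimal sketch, not part of the repository: `set_jta_path.py`, `set_jta_path` and `missing_jta_dirs` are hypothetical names, and it assumes `JTA_PATH` is a top-level key written on a single line of the YAML file.

```python
import json
import os
import re
import sys
from typing import List

# ASSUMPTION: JTA_PATH is a top-level key written on a single line of the YAML file.
_JTA_LINE = re.compile(r"^JTA_PATH[ \t]*:.*$", re.MULTILINE)


def set_jta_path(yaml_text: str, jta_path: str) -> str:
    """Return yaml_text with the value of the JTA_PATH entry replaced by jta_path."""
    # json.dumps produces a double-quoted string, which is also a valid YAML scalar
    # (backslashes in Windows paths are escaped).
    new_line = "JTA_PATH: " + json.dumps(jta_path)
    if _JTA_LINE.search(yaml_text) is None:
        raise KeyError("no top-level JTA_PATH entry found")
    # A function replacement stops re.sub from interpreting backslashes in new_line.
    return _JTA_LINE.sub(lambda _match: new_line, yaml_text, count=1)


def missing_jta_dirs(jta_path: str) -> List[str]:
    """Return which of the generated poses/ and frames/ directories are missing."""
    return [name for name in ("poses", "frames")
            if not os.path.isdir(os.path.join(jta_path, name))]


if __name__ == "__main__":
    if len(sys.argv) != 3:
        sys.exit("usage: python set_jta_path.py <your_jta_path> <config.yaml>")
    jta_path, conf_file = sys.argv[1], sys.argv[2]
    missing = missing_jta_dirs(jta_path)
    if missing:
        sys.exit("missing under {}: {}".format(jta_path, ", ".join(missing)))
    with open(conf_file, encoding="utf-8") as f:
        text = f.read()
    with open(conf_file, "w", encoding="utf-8") as f:
        f.write(set_jta_path(text, jta_path))
    print("JTA_PATH updated in", conf_file)
```

For example, `python set_jta_path.py <your_jta_path> conf/default.yaml`; the same call works for `conf/pretrained.yaml`. Replacing a single line, rather than loading and re-dumping the file with a YAML library, leaves comments and key order in the rest of the configuration untouched.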

- To train the model, run `python train.py --exp_name=default` (python >= 3.6)

- To show the results, run `python show.py --exp_name=default` (python >= 3.6)
    - Note that, before showing the results, you must have completed at least one training epoch; however, to obtain results comparable to those reported in the paper, we recommend training for at least 100 epochs.

- Download the pretrained weights and extract them into the project folder

- Modify the `conf/pretrained.yaml` configuration file, specifying the path to the JTA dataset directory:
    - `JTA_PATH: <your_jta_path>`
- To show the results of the pretrained model, run `python show.py --exp_name=pretrained` (python >= 3.6)