This repository maintains the official implementation of the methods and experiments presented in our paper titled "DeformTime: Capturing Variable Dependencies with Deformable Attention for Time Series Forecasting".

    @article{shu2024deformtime,
      author  = {Yuxuan Shu and Vasileios Lampos},
      title   = {{\textsc{DeformTime}: Capturing Variable Dependencies with Deformable Attention for Time Series Forecasting}},
      year    = {2024},
      journal = {Preprint under review}
    }

In multivariate time series (MTS) forecasting, existing state-of-the-art deep learning approaches tend to focus on autoregressive formulations and overlook the information within exogenous indicators. To address this limitation, we present DeformTime, a neural network architecture that attempts to capture correlated temporal patterns from the input space, and hence, improve forecasting accuracy. It deploys two core operations performed by deformable attention blocks (DABs): learning dependencies across variables from different time steps (variable DAB), and preserving temporal dependencies in data from previous time steps (temporal DAB). Input data transformation is explicitly designed to enhance learning from the deformed series of information while passing through a DAB. We conduct extensive experiments on 6 MTS data sets, using previously established benchmarks as well as challenging infectious disease modelling tasks with more exogenous variables. The results demonstrate that DeformTime improves accuracy against previous competitive methods across the vast majority of MTS forecasting tasks, reducing the mean absolute error by 10% on average. Notably, performance gains remain consistent across longer forecasting horizons.

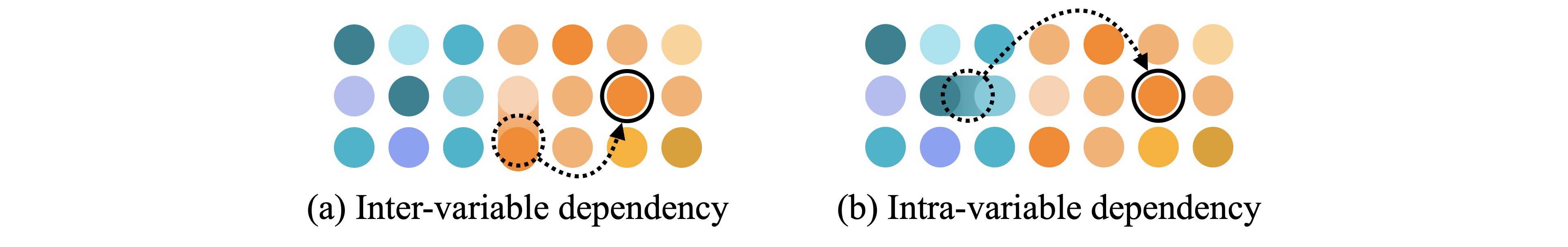

We propose DeformTime, a novel MTS forecasting model that better captures inter- and intra-variable dependencies at different temporal granularities. It comprises two Deformable Attention Blocks (DABs), which allow the model to adaptively focus on more informative neighbouring attributes. The figure below shows how the different dependencies are established:

(a) The inter-variable dependency is established across different variables over time. (b) The intra-variable dependency focuses on the important information of the specific variable across time. Both dependencies are adaptively established w.r.t. the input.
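As a rough illustration of the deformable attention idea behind the DABs (which, as noted in the acknowledgements, was inspired by DAT), the sketch below implements a simplified, single-head 1D deformable attention in NumPy. Each query attends only to a small set of keys sampled at learned, input-dependent offsets from uniformly spaced reference points, and linear interpolation handles fractional positions. This is not the DeformTime implementation. The offset predictor (a single projection `w_off` applied to the queries at the reference positions), the offset scaling, the random untrained weights and all function and parameter names are assumptions made for illustration. The usage at the end applies the same function to time-step tokens (a temporal view) and to variable tokens (a variable view). This only loosely mirrors the intra- and inter-variable dependencies described above.

```python
import numpy as np


def linear_sample(x, pos):
    """Sample rows of x (shape (L, d)) at fractional positions by linear interpolation.

    Positions are clipped to [0, L - 1], so out-of-range samples reuse the edge rows.
    """
    length = x.shape[0]
    pos = np.clip(pos, 0.0, length - 1)
    lo = np.floor(pos).astype(int)
    hi = np.minimum(lo + 1, length - 1)
    w = (pos - lo)[:, None]  # weight given to the upper neighbour
    return (1.0 - w) * x[lo] + w * x[hi]


def deformable_attention_1d(x, w_q, w_k, w_v, w_off, n_ref):
    """Simplified single-head deformable attention along the first axis of x.

    x: (L, d) tokens; w_q, w_k, w_v: (d, d) projections;
    w_off: (d, 1) hypothetical offset projection; n_ref: number of reference points.
    """
    length, d = x.shape
    q = x @ w_q                                    # one query per token, (L, d)
    ref = np.linspace(0.0, length - 1, n_ref)      # uniformly spaced reference points
    # Predict one bounded offset per reference point from the query at that position
    # (an assumption; DAT uses a small convolutional offset network instead).
    q_ref = q[np.round(ref).astype(int)]           # (n_ref, d)
    offsets = np.tanh(q_ref @ w_off)[:, 0] * (length / n_ref)
    sampled = linear_sample(x, ref + offsets)      # deformed sampling points, (n_ref, d)
    k, v = sampled @ w_k, sampled @ w_v            # keys/values come only from samples
    scores = q @ k.T / np.sqrt(d)                  # (L, n_ref)
    scores -= scores.max(axis=1, keepdims=True)    # stabilise the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn @ v                                # (L, d)


rng = np.random.default_rng(0)
d = 16
w_q, w_k, w_v = (rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(3))
w_off = rng.normal(scale=d ** -0.5, size=(d, 1))

# Temporal view: 96 time-step tokens, each with a d-dimensional embedding.
x_time = rng.normal(size=(96, d))
out_time = deformable_attention_1d(x_time, w_q, w_k, w_v, w_off, n_ref=24)

# Variable view: 7 variable tokens (e.g. one embedding per input series).
x_var = rng.normal(size=(7, d))
out_var = deformable_attention_1d(x_var, w_q, w_k, w_v, w_off, n_ref=4)
print(out_time.shape, out_var.shape)  # → (96, 16) (7, 16)
```

Sampling only `n_ref` deformed points keeps the attention cost at O(L · n_ref) instead of O(L²). The learned offsets let those few samples move towards the more informative positions in the input.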

Our code was tested with Python 3.9. To create a Conda environment, run the following command in the terminal:

conda create --name dtime python=3.9

Activate the environment using the following command:

conda activate dtime

Install the required packages using:

pip install -r requirements.txt

To prepare the benchmark data sets, obtain the ETTh1, ETTh2, and weather data sets as follows:

- Download the ETTh1, ETTh2, and weather data sets from the Autoformer repository.

- Organize the folders with the following structure:

      data/
      └── dataset/
          ├── ETT/
          │   ├── ETTh1.csv
          │   └── ETTh2.csv
          └── weather/
              └── weather.csv
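Once the files are in place, a quick sanity check can confirm the layout before you run the training scripts. The sketch below is a hypothetical helper and is not part of the repository. `EXPECTED_FILES` and `missing_files` are illustrative names. The paths mirror the folder structure listed above, relative to the repository root, on the assumption that the scripts are run from there.

```python
from pathlib import Path

# Hypothetical list of expected benchmark files, relative to the repository root.
EXPECTED_FILES = [
    "data/dataset/ETT/ETTh1.csv",
    "data/dataset/ETT/ETTh2.csv",
    "data/dataset/weather/weather.csv",
]


def missing_files(root="."):
    """Return the expected benchmark files that are not present under root."""
    root_path = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root_path / rel).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing data files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All benchmark data files found.")
```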

Due to a restrictive data-sharing policy, we currently cannot provide the full version of the ILI data sets used in our experiments. Instead, we provide the following instructions for anyone interested in obtaining the data.

The ILI rates for the US HHS regions are published by the Centers for Disease Control and Prevention (CDC). You may access the data from their official website.

The ILI data for England used in our experiments were obtained from the Royal College of General Practitioners (RCGP), based on a fixed list of GP practices throughout England. Although we are unable to provide the original ILI data, you may access the officially reported influenza data here.

The queries used in our experiments can be found under the folder data/dataset/queries/. The full version of the base queries is provided in base.csv, and the seed queries used for semantic filtering are in seed.csv. We also provide the query lists after semantic filtering. The list for England is available in UK.csv (containing …), and the list for the US is available in US.csv (containing …).
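To inspect the query lists, a small reader like the one below can count the queries in each file. This is a sketch under an assumption we have not verified against the files: each CSV stores one query per row in its first column, with no header row. Drop the first element if a header is present, and note that an unquoted query containing a comma would be split. `QUERY_DIR` and `load_queries` are hypothetical names.

```python
import csv
from pathlib import Path

# Folder named in the text above; the file format is an assumption.
QUERY_DIR = Path("data/dataset/queries")


def load_queries(path):
    """Read one query per row from the first column of a CSV file.

    Assumes no header row; blank rows are skipped and whitespace is trimmed.
    """
    with open(path, newline="", encoding="utf-8") as f:
        return [row[0].strip() for row in csv.reader(f) if row and row[0].strip()]


if __name__ == "__main__":
    for name in ["base.csv", "seed.csv", "UK.csv", "US.csv"]:
        path = QUERY_DIR / name
        if path.is_file():
            print(name, len(load_queries(path)))
```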

To train the models from the paper on the benchmark data sets, run the following commands:

For ETTh1 tasks:

bash scripts/DeformTime/ETTh1.sh

For ETTh2 tasks:

bash scripts/DeformTime/ETTh2.sh

For weather tasks:

bash scripts/DeformTime/weather.sh

The results will be saved in a result.txt file.

Our model achieves the following performance in terms of mean absolute error (MAE). The best results are in bold and the second best are underlined. We use iTrans. and Cross. as abbreviations for iTransformer and Crossformer, respectively. See the full results in the paper.

| Task | Horizon | DeformTime | PatchTST | iTrans. | TimeMixer | Cross. | LightTS | DLinear |
|---|---|---|---|---|---|---|---|---|
| ETTh1 | 96 | **0.1941** | <u>0.2017</u> | 0.2052 | 0.2112 | 0.2126 | 0.2215 | 0.2599 |
| | 192 | **0.2116** | 0.2409 | 0.2429 | <u>0.2382</u> | 0.2820 | 0.2636 | 0.3798 |
| | 336 | **0.2158** | <u>0.2559</u> | 0.2593 | 0.2625 | 0.2947 | 0.2807 | 0.6328 |
| | 720 | **0.2862** | 0.3087 | <u>0.2886</u> | 0.3055 | 0.3350 | 0.5334 | 0.7563 |
| ETTh2 | 96 | **0.3121** | <u>0.3145</u> | 0.3420 | 0.3454 | 0.3486 | 0.3507 | 0.3349 |
| | 192 | **0.3281** | <u>0.3839</u> | 0.4233 | 0.4183 | 0.4035 | 0.4022 | 0.4084 |
| | 336 | **0.3450** | <u>0.4018</u> | 0.4332 | 0.4380 | 0.4487 | 0.4425 | 0.4710 |
| | 720 | **0.3640** | 0.4960 | <u>0.4565</u> | 0.4729 | 0.5832 | 0.6252 | 0.7981 |
| Weather | 96 | **0.0244** | 0.0258 | 0.0277 | 0.0322 | 0.0271 | 0.0293 | <u>0.0251</u> |
| | 192 | **0.0260** | 0.0279 | 0.0277 | 0.0347 | 0.0308 | 0.0319 | <u>0.0270</u> |
| | 336 | **0.0291** | <u>0.0303</u> | 0.0308 | 0.0359 | 0.0345 | 0.0317 | 0.0305 |
| | 720 | <u>0.0363</u> | 0.0389 | 0.0395 | 0.0457 | 0.0395 | 0.0386 | **0.0352** |
| ILI-ENG | 7 | **1.6417** | 2.3115 | 2.3084 | 2.1748 | <u>1.8698</u> | 2.2397 | 2.8214 |
| | 14 | **2.2308** | 3.2547 | 3.2301 | 3.0209 | <u>2.6543</u> | 2.6879 | 3.7922 |
| | 21 | **2.6500** | 4.3192 | 4.2347 | 3.5501 | <u>3.0014</u> | 3.3616 | 4.4739 |
| | 28 | **2.7228** | 4.9964 | 4.8125 | 4.1188 | <u>3.1983</u> | 3.4132 | 5.0347 |
| ILI-US2 | 7 | **0.4122** | 0.7097 | 0.6507 | 0.5284 | <u>0.4400</u> | 0.4632 | 0.7355 |
| | 14 | **0.4752** | 0.8635 | 0.7896 | 0.6556 | 0.5852 | <u>0.5827</u> | 0.8435 |
| | 21 | **0.5425** | 1.0286 | 0.8042 | 0.6794 | <u>0.6245</u> | 0.6683 | 0.9124 |
| | 28 | **0.5538** | 1.1525 | 0.9619 | 0.8853 | <u>0.6512</u> | 0.7175 | 0.9805 |
| ILI-US9 | 7 | **0.2622** | 0.4116 | 0.4057 | 0.3239 | <u>0.3149</u> | 0.3185 | 0.4675 |
| | 14 | **0.3084** | 0.5020 | 0.4702 | 0.4060 | <u>0.3571</u> | 0.3791 | 0.5467 |
| | 21 | **0.3179** | 0.5935 | 0.5106 | 0.4576 | <u>0.3418</u> | 0.4754 | 0.6001 |
| | 28 | **0.3532** | 0.6665 | 0.6498 | 0.5124 | <u>0.3747</u> | 0.4769 | 0.6564 |
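For reference, the sketch below shows how MAE and the relative MAE reduction over a baseline can be computed. It is a minimal illustration, not the evaluation code used for the paper. The exact averaging behind the table (over variables, samples and windows) and behind the 10% average reported in the abstract is defined in the paper and may differ. The example uses the ILI-ENG, horizon 7 row of the table, comparing DeformTime with the best baseline in that row (Crossformer).

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error over all elements of the two arrays."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


def relative_reduction(ours, baseline):
    """Fraction by which the MAE `ours` is lower than the MAE `baseline`."""
    return (baseline - ours) / baseline


print(mae([1, 2, 3], [1, 3, 5]))  # → 1.0

# ILI-ENG, horizon 7 (values from the table): DeformTime vs. Crossformer.
print(f"{relative_reduction(1.6417, 1.8698):.1%}")  # → 12.2%
```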

- Our implementation of attention deformation was inspired by DAT.

- We also acknowledge Informer and Autoformer for their valuable code and data for time series forecasting.