- Useful Resources

- What is RAFT?

- Use cases

- Is RAFT right for me?

- Getting Started

- Installing RAFT

- Codebase structure and contents

- Contributing

- References

- RAFT Reference Documentation: API Documentation.

- RAFT Getting Started: Getting started with RAFT.

- Build and Install RAFT: Instructions for installing and building RAFT.

- Example Notebooks: Example Jupyter notebooks

- RAPIDS Community: Get help, contribute, and collaborate.

- GitHub repository: Download the RAFT source code.

- Issue tracker: Report issues or request features.

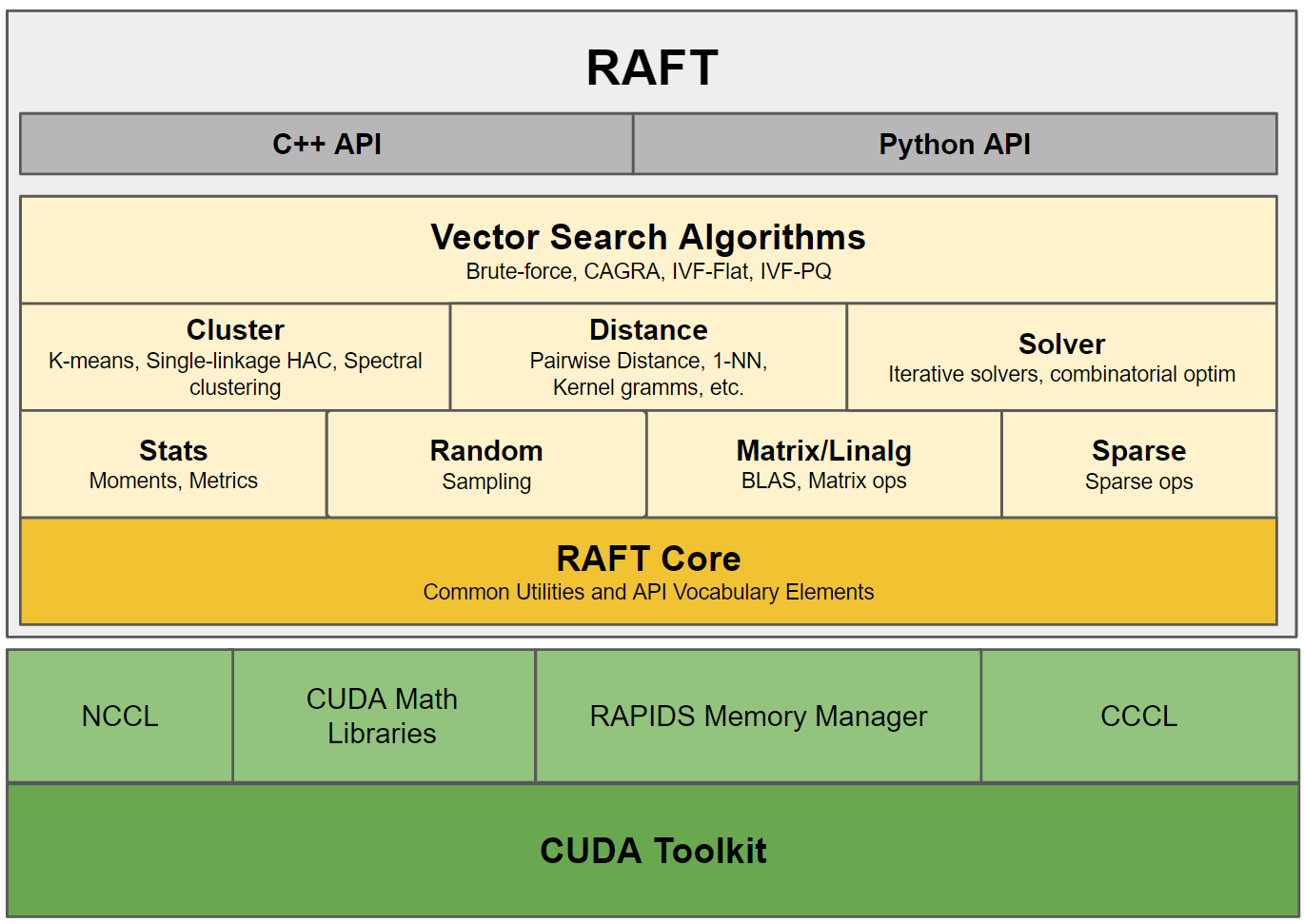

RAFT contains fundamental, widely used algorithms and primitives for machine learning and information retrieval. The algorithms are CUDA-accelerated and serve as building blocks that make it easier to write high-performance applications.

By taking a primitives-based approach to algorithm development, RAFT

- accelerates algorithm construction time

- reduces the maintenance burden by maximizing reuse across projects, and

- centralizes core reusable computations, allowing future optimizations to benefit all algorithms that use them.

While not exhaustive, the following general categories help summarize the accelerated functions in RAFT:

| Category | Accelerated Functions in RAFT |
|---|---|
| Nearest Neighbors | vector search, neighborhood graph construction, epsilon neighborhoods, pairwise distances |
| Basic Clustering | spectral clustering, hierarchical clustering, k-means |
| Solvers | combinatorial optimization, iterative solvers |
| Data Formats | sparse & dense, conversions, data generation |
| Dense Operations | linear algebra, matrix and vector operations, reductions, slicing, norms, factorization, least squares, SVD & eigenvalue problems |
| Sparse Operations | linear algebra, eigenvalue problems, slicing, norms, reductions, factorization, symmetrization, components & labeling |
| Statistics | sampling, moments and summary statistics, metrics, model evaluation |
| Tools & Utilities | common tools and utilities for developing CUDA applications, multi-node multi-GPU infrastructure |

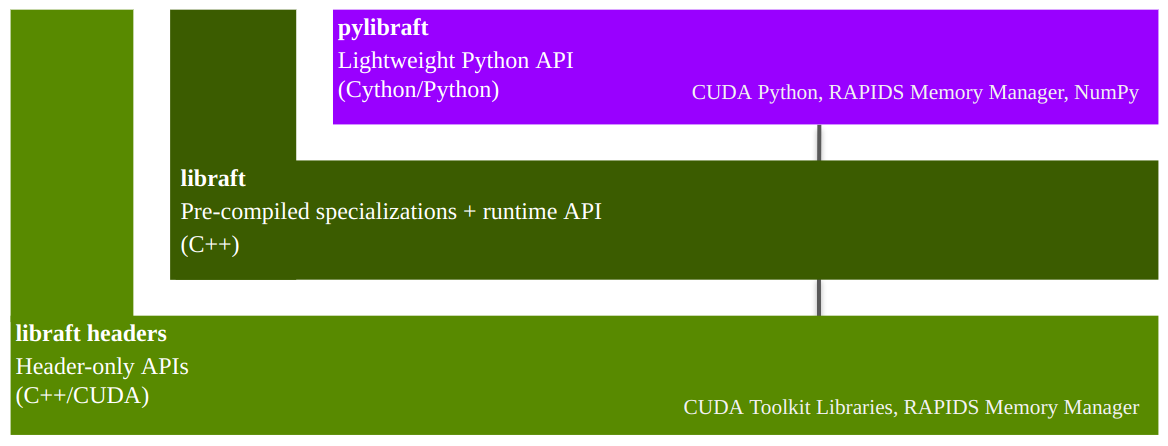

RAFT is a C++ header-only template library with an optional shared library that

- can speed up compile times for common template types, and

- provides host-accessible "runtime" APIs, which don't require a CUDA compiler to use

In addition to being a C++ library, RAFT also provides two Python libraries:

- `pylibraft`: lightweight Python wrappers around RAFT's host-accessible "runtime" APIs.
- `raft-dask`: multi-node multi-GPU communicator infrastructure for building distributed algorithms on the GPU with Dask.

RAFT contains state-of-the-art implementations of approximate nearest neighbors search (ANNS) algorithms on the GPU, such as:

- Brute force. Performs a brute-force nearest neighbors search without an index.

- IVF-Flat and IVF-PQ. Use an inverted file index structure to map contents to their locations. IVF-PQ additionally uses product quantization to reduce the memory usage of vectors. These methods were originally popularized by the Faiss library.

- CAGRA (Cuda Anns GRAph-based). Uses a fast ANNS graph construction and search implementation optimized for the GPU. CAGRA outperforms state-of-the-art CPU methods (e.g., HNSW) for large batch queries, single queries, and graph construction time.
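For intuition about how the first two families differ, the pure-Python sketch below contrasts exhaustive search with a toy IVF-Flat index on the CPU. It is an illustration under simplifying assumptions, not RAFT's API or GPU implementation: the function names (`brute_force_knn`, `build_ivf_flat`, `search_ivf_flat`) and the `n_lists`/`n_probes` parameters are hypothetical, the coarse centroids come from a few plain k-means iterations, and neither IVF-PQ's product quantization nor CAGRA's graph is modeled.

```python
import heapq
import random


def sq_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def brute_force_knn(dataset, query, k):
    """Exact k-NN: compare the query against every vector, no index."""
    return heapq.nsmallest(k, range(len(dataset)), key=lambda i: sq_l2(dataset[i], query))


def assign_to_lists(dataset, centroids):
    """Put each vector's id into the inverted list of its nearest centroid."""
    lists = [[] for _ in centroids]
    for i, v in enumerate(dataset):
        nearest = min(range(len(centroids)), key=lambda j: sq_l2(v, centroids[j]))
        lists[nearest].append(i)
    return lists


def build_ivf_flat(dataset, n_lists, n_iters=10, seed=0):
    """Toy IVF-Flat (hypothetical): k-means centroids plus one list of ids per centroid.

    Vectors stay uncompressed ("flat"); the lists only record which ids live where.
    """
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(dataset, n_lists)]
    dim = len(dataset[0])
    for _ in range(n_iters):  # a few plain Lloyd (k-means) iterations
        lists = assign_to_lists(dataset, centroids)
        for j, members in enumerate(lists):
            if members:  # an empty list keeps its previous centroid
                centroids[j] = [sum(dataset[i][d] for i in members) / len(members)
                                for d in range(dim)]
    return centroids, assign_to_lists(dataset, centroids)


def search_ivf_flat(index, dataset, query, k, n_probes):
    """Scan only the n_probes lists whose centroids are closest to the query."""
    centroids, lists = index
    probed = heapq.nsmallest(n_probes, range(len(centroids)),
                             key=lambda j: sq_l2(query, centroids[j]))
    candidates = [i for j in probed for i in lists[j]]
    return heapq.nsmallest(k, candidates, key=lambda i: sq_l2(dataset[i], query))


# Usage: 500 random 8-dimensional vectors, querying with the first vector.
rng = random.Random(42)
data = [[rng.random() for _ in range(8)] for _ in range(500)]
exact = brute_force_knn(data, data[0], k=5)
index = build_ivf_flat(data, n_lists=10)
approx = search_ivf_flat(index, data, data[0], k=5, n_probes=2)  # may miss true neighbors
```

Probing every list (`n_probes == n_lists`) makes this toy IVF search exact; probing fewer lists scans fewer candidates at the cost of possibly missing some true neighbors, which is the speed/recall trade-off that makes IVF methods approximate.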

Projects that use the RAFT ANNS algorithms for accelerating vector search include Milvus, Redis, and Faiss.

Please see the example Jupyter notebook to get started using RAFT for vector search in Python.

RAFT contains a catalog of reusable primitives for composing algorithms that require fast neighborhood computations, such as

- Computing distances between vectors and computing kernel Gram matrices

- Performing ball radius queries for constructing epsilon neighborhoods

- Clustering points to partition a space for smaller and faster searches

- Constructing neighborhood "connectivities" graphs from dense vectors
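As a rough CPU reference for the first two items above, the sketch below computes pairwise Euclidean distances with the expanded identity `||x - y||^2 = ||x||^2 + ||y||^2 - 2 x·y` (which is what we take the `L2SqrtExpanded` metric name used in the C++ examples to refer to) and then answers a ball radius query as a boolean epsilon-neighborhood matrix. The function names are hypothetical; this is plain Python, not RAFT code.

```python
import math


def pairwise_l2_expanded(x, y):
    """Euclidean distances via ||x||^2 + ||y||^2 - 2 * x.y; one output row per vector in x."""
    x_norms = [sum(v * v for v in row) for row in x]  # squared norms, computed once
    y_norms = [sum(v * v for v in row) for row in y]
    dists = []
    for xi, xn in zip(x, x_norms):
        row = []
        for yj, yn in zip(y, y_norms):
            dot = sum(a * b for a, b in zip(xi, yj))
            sq = xn + yn - 2.0 * dot
            row.append(math.sqrt(max(sq, 0.0)))  # rounding can push sq slightly below 0
        dists.append(row)
    return dists


def epsilon_neighborhood(x, eps):
    """Ball radius query: adj[i][j] is True when x[j] lies within eps of x[i]."""
    return [[d <= eps for d in row] for row in pairwise_l2_expanded(x, x)]


points = [[0.0, 0.0], [3.0, 4.0], [0.5, 0.0]]
dists = pairwise_l2_expanded(points, points)
adj = epsilon_neighborhood(points, eps=1.0)
```

Computing the norms once and expressing the cross terms as dot products is what lets this form map onto a matrix multiply on GPUs; the price is floating-point cancellation for nearly identical points, which is why the sketch clamps negative values before taking the square root.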

RAFT's primitives are used in several RAPIDS libraries, including cuML, cuGraph, and cuOpt, to build many end-to-end machine learning algorithms that span a wide range of applications, including

- data generation

- model evaluation

- classification and regression

- clustering

- manifold learning

- dimensionality reduction.

RAFT is also used by the popular collaborative filtering library implicit for recommender systems.

RAFT contains low-level primitives for accelerating applications and workflows. Data source providers and application developers may find specific tools, such as the ANN algorithms, very useful. RAFT is not intended to be used directly by data scientists for discovery and experimentation. For data science tools, please see the RAPIDS website.

RAFT relies heavily on RMM, which eases the burden of configuring different allocation strategies globally across the libraries that use it.

The APIs in RAFT accept the `mdspan` multi-dimensional array view for representing data in higher dimensions, similar to the `ndarray` in the NumPy Python library. RAFT also contains the corresponding owning `mdarray` structure, which simplifies the allocation and management of multi-dimensional data in both host and device (GPU) memory.
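The split between non-owning views and owning arrays is not specific to C++. The Python sketch below uses a hypothetical `MatrixView` class (not part of RAFT or pylibraft) that, like a row-major `mdspan`, pairs a flat buffer it does not own with `(n_rows, n_cols)` extents and maps index `(i, j)` to offset `i * n_cols + j`. Real `mdspan` layouts can also be column-major or strided; only row-major is shown here.

```python
class MatrixView:
    """Hypothetical non-owning 2-D row-major view over a flat, indexable buffer."""

    def __init__(self, buffer, n_rows, n_cols):
        if len(buffer) < n_rows * n_cols:
            raise ValueError("buffer is too small for the requested extents")
        self.buffer = buffer  # shared, not copied: writes land in the caller's storage
        self.extents = (n_rows, n_cols)

    def _offset(self, i, j):
        n_rows, n_cols = self.extents
        if not (0 <= i < n_rows and 0 <= j < n_cols):
            raise IndexError((i, j))
        return i * n_cols + j  # row-major (C-order) layout

    def __getitem__(self, idx):
        i, j = idx
        return self.buffer[self._offset(i, j)]

    def __setitem__(self, idx, value):
        i, j = idx
        self.buffer[self._offset(i, j)] = value


# The owning side is plain storage; each view only describes how to index it.
storage = [0.0] * 6
view = MatrixView(storage, 2, 3)
view[1, 2] = 7.5                      # writes storage[1 * 3 + 2]
view_3x2 = MatrixView(storage, 3, 2)  # a second shape over the same memory
```

Because a view never copies, two views with different shapes over the same buffer see each other's writes; the owning side (here a plain Python list, in RAFT an `mdarray`) is what determines the memory's lifetime.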

The mdarray forms a convenience layer over RMM and can be constructed in RAFT using a number of different helper functions:

```cpp
#include <raft/core/device_mdarray.hpp>
#include <raft/core/device_resources.hpp>

raft::device_resources handle;  // manages CUDA streams and library handles

int n_rows = 10;
int n_cols = 10;

auto scalar = raft::make_device_scalar<float>(handle, 1.0);
auto vector = raft::make_device_vector<float>(handle, n_cols);
auto matrix = raft::make_device_matrix<float>(handle, n_rows, n_cols);
```

Most of the primitives in RAFT accept a `raft::device_resources` object for managing resources that are expensive to create, such as CUDA streams, stream pools, and handles to other CUDA libraries like cuBLAS and cuSOLVER.

The example below demonstrates creating a RAFT handle and using it with `device_matrix` and `device_vector` to allocate memory, generating random clusters, and computing pairwise Euclidean distances:

```cpp
#include <raft/core/device_resources.hpp>
#include <raft/core/device_mdarray.hpp>
#include <raft/random/make_blobs.cuh>
#include <raft/distance/distance.cuh>

raft::device_resources handle;

int n_samples = 5000;
int n_features = 50;

auto input = raft::make_device_matrix<float, int>(handle, n_samples, n_features);
auto labels = raft::make_device_vector<int, int>(handle, n_samples);
auto output = raft::make_device_matrix<float, int>(handle, n_samples, n_samples);

raft::random::make_blobs(handle, input.view(), labels.view());

auto metric = raft::distance::DistanceType::L2SqrtExpanded;
raft::distance::pairwise_distance(handle, input.view(), input.view(), output.view(), metric);
```

It's also possible to create `raft::device_mdspan` views to invoke the same API with raw pointers and shape information:

```cpp
#include <raft/core/device_resources.hpp>
#include <raft/core/device_mdspan.hpp>
#include <raft/random/make_blobs.cuh>
#include <raft/distance/distance.cuh>

raft::device_resources handle;

int n_samples = 5000;
int n_features = 50;

float *input;
int *labels;
float *output;

// ...
// Allocate input, labels, and output pointers
// ...

auto input_view = raft::make_device_matrix_view(input, n_samples, n_features);
auto labels_view = raft::make_device_vector_view(labels, n_samples);
auto output_view = raft::make_device_matrix_view(output, n_samples, n_samples);

raft::random::make_blobs(handle, input_view, labels_view);

auto metric = raft::distance::DistanceType::L2SqrtExpanded;
raft::distance::pairwise_distance(handle, input_view, input_view, output_view, metric);
```

The `pylibraft` package contains a Python API for RAFT algorithms and primitives. `pylibraft` integrates easily with other libraries because it is lightweight, has minimal dependencies, and accepts any object that supports the `__cuda_array_interface__`, such as CuPy's `ndarray`. The number of RAFT algorithms exposed in this package continues to grow from release to release.

The example below demonstrates computing the pairwise Euclidean distances between CuPy arrays. Note that CuPy is not a required dependency for pylibraft.

```python
import cupy as cp

from pylibraft.distance import pairwise_distance

n_samples = 5000
n_features = 50

in1 = cp.random.random_sample((n_samples, n_features), dtype=cp.float32)
in2 = cp.random.random_sample((n_samples, n_features), dtype=cp.float32)

output = pairwise_distance(in1, in2, metric="euclidean")
```

The `output` array in the above example is of type `pylibraft.common.device_ndarray`, which supports the `__cuda_array_interface__`, making it interoperable with other libraries that also support it, such as CuPy, Numba, PyTorch, and RAPIDS cuDF. CuPy supports DLPack, which also enables zero-copy conversion from `pylibraft.common.device_ndarray` to JAX and TensorFlow.

Below is an example of converting the output `pylibraft.common.device_ndarray` to a CuPy array:

```python
cupy_array = cp.asarray(output)
```

And converting to a PyTorch tensor:

```python
import torch

torch_tensor = torch.as_tensor(output, device='cuda')
```

Or converting to a RAPIDS cuDF dataframe:

```python
import cudf

cudf_dataframe = cudf.DataFrame(output)
```

When the corresponding library has been installed and is available in your environment, this conversion can also be done automatically by all RAFT compute APIs by setting a global configuration option:

```python
import pylibraft.config

pylibraft.config.set_output_as("cupy")  # All compute APIs will return cupy arrays
pylibraft.config.set_output_as("torch")  # All compute APIs will return torch tensors
```

You can also specify a callable that accepts a `pylibraft.common.device_ndarray` and performs a custom conversion. The following example converts all output to NumPy arrays:

```python
# A lambda body is a single expression, so no `return` keyword is used here
pylibraft.config.set_output_as(lambda device_ndarray: device_ndarray.copy_to_host())
```

`pylibraft` also supports writing to a pre-allocated output array, so any array that supports `__cuda_array_interface__` can be written to in place:

```python
import cupy as cp

from pylibraft.distance import pairwise_distance

n_samples = 5000
n_features = 50

in1 = cp.random.random_sample((n_samples, n_features), dtype=cp.float32)
in2 = cp.random.random_sample((n_samples, n_features), dtype=cp.float32)
output = cp.empty((n_samples, n_samples), dtype=cp.float32)

pairwise_distance(in1, in2, out=output, metric="euclidean")
```

RAFT itself can be installed through conda, CMake Package Manager (CPM), pip, or by building the repository from source. Please refer to the build instructions for a more comprehensive guide on installing and building RAFT and using it in downstream projects.

The easiest way to install RAFT is through conda, which provides several packages:

- `libraft-headers`: RAFT headers
- `libraft` (optional): shared library of pre-compiled template instantiations and runtime APIs
- `pylibraft` (optional): Python wrappers around RAFT algorithms and primitives
- `raft-dask` (optional): enables deployment of multi-node multi-GPU algorithms that use RAFT `raft::comms` in Dask clusters

Use the following command to install all of the RAFT packages with conda (replace `rapidsai` with `rapidsai-nightly` to install more up-to-date but less stable nightly packages). `mamba` is preferred over the `conda` command.

```bash
mamba install -c rapidsai -c conda-forge -c nvidia raft-dask pylibraft
```

You can also install the conda packages individually using the `mamba` command above.

After installing RAFT, `find_package(raft COMPONENTS compiled distributed)` can be used in your CUDA/C++ CMake build to compile and/or link against the needed dependencies in your `raft` target. `COMPONENTS` are optional and depend on the packages installed.

`pylibraft` and `raft-dask` both have experimental packages that can be installed through pip:

```bash
pip install pylibraft-cu11 --extra-index-url=https://pypi.nvidia.com
pip install raft-dask-cu11 --extra-index-url=https://pypi.nvidia.com
```

RAFT uses the RAPIDS-CMake library, which makes it easy to include in downstream CMake projects. RAPIDS-CMake provides a convenience layer around CPM. Please refer to these instructions to install and use rapids-cmake in your project.

You can find an example RAFT project template in the `cpp/template` directory, which demonstrates how to build a new application with RAFT or incorporate RAFT into an existing CMake project.

Additional CMake targets can be made available by adding components in the table below to the RAFT_COMPONENTS list above, separated by spaces. The raft::raft target will always be available. RAFT headers require, at a minimum, the CUDA toolkit libraries and RMM dependencies.

| Component | Target | Description | Base Dependencies |
|---|---|---|---|
| n/a | `raft::raft` | Full RAFT header library | CUDA toolkit, RMM, NVTX, CCCL, CUTLASS |
| compiled | `raft::compiled` | Pre-compiled template instantiations and runtime library | `raft::raft` |
| distributed | `raft::distributed` | Dependencies for `raft::comms` APIs | `raft::raft`, UCX, NCCL |

The easiest way to build RAFT from source is to use the `build.sh` script at the root of the repository:

- Create an environment with the needed dependencies:

```bash
mamba env create --name raft_dev_env -f conda/environments/all_cuda-118_arch-x86_64.yaml
mamba activate raft_dev_env
```

- Run the build script from the root of the repository:

```bash
./build.sh raft-dask pylibraft libraft tests bench --compile-lib
```

The build instructions contain more details on building RAFT from source and including it in downstream projects. You can also find a more comprehensive version of the above CPM code snippet in the Building RAFT C++ from source section of the build instructions.

The folder structure mirrors other RAPIDS repos, with the following folders:

- `bench/ann`: Python scripts for running ANN benchmarks
- `ci`: Scripts for running CI in PRs
- `conda`: Conda recipes and development conda environments
- `cpp`: Source code for C++ libraries.
  - `bench`: Benchmarks source code
  - `cmake`: CMake modules and templates
  - `doxygen`: Doxygen configuration
  - `include`: The C++ API headers are fully contained here (deprecated directories are excluded from the listing below)
    - `cluster`: Basic clustering primitives and algorithms.
    - `comms`: A multi-node multi-GPU communications abstraction layer for NCCL+UCX and MPI+NCCL, which can be deployed in Dask clusters using the `raft-dask` Python package.
    - `core`: Core API headers which require minimal dependencies aside from RMM and the CUDA Toolkit. These are safe to expose on public APIs and do not require `nvcc` to build. The same holds for any headers in RAFT that have the suffix `*_types.hpp`.
    - `distance`: Distance primitives
    - `linalg`: Dense linear algebra
    - `matrix`: Dense matrix operations
    - `neighbors`: Nearest neighbors and kNN graph construction
    - `random`: Random number generation, sampling, and data generation primitives
    - `solver`: Iterative and combinatorial solvers for optimization and approximation
    - `sparse`: Sparse matrix operations
      - `convert`: Sparse conversion functions
      - `distance`: Sparse distance computations
      - `linalg`: Sparse linear algebra
      - `neighbors`: Sparse nearest neighbors and kNN graph construction
      - `op`: Various sparse operations such as slicing and filtering (Note: this will soon be renamed to `sparse/matrix`)
      - `solver`: Sparse solvers for optimization and approximation
    - `stats`: Moments, summary statistics, model performance measures
    - `util`: Various reusable tools and utilities for accelerated algorithm development
  - `internal`: A private header-only component that hosts the code shared between benchmarks and tests.
  - `scripts`: Helpful scripts for development
  - `src`: Compiled APIs and template instantiations for the shared libraries
  - `template`: A skeleton template containing the bare-bones file structure and CMake configuration for writing applications with RAFT.
  - `test`: GoogleTest source code
- `docs`: Source code and scripts for building library documentation (uses Breathe, Doxygen, & pydocs)
- `notebooks`: IPython notebooks with usage examples and tutorials
- `python`: Source code for Python libraries.
  - `pylibraft`: Python build and source code for the pylibraft library
  - `raft-dask`: Python build and source code for the raft-dask library
- `thirdparty`: Third-party licenses

If you are interested in contributing to the RAFT project, please read our Contributing guidelines. Refer to the Developer Guide for details on the developer guidelines, workflows, and principles.

When citing RAFT generally, please consider referencing this GitHub project.

```bibtex
@misc{rapidsai,
  title={Rapidsai/raft: RAFT contains fundamental widely-used algorithms and primitives for data science, Graph and machine learning.},
  url={https://github.com/rapidsai/raft},
  journal={GitHub},
  publisher={Nvidia RAPIDS},
  author={Rapidsai},
  year={2022}
}
```

If citing the sparse pairwise distances API, please consider using the following BibTeX:

```bibtex
@article{nolet2021semiring,
  title={Semiring primitives for sparse neighborhood methods on the gpu},
  author={Nolet, Corey J and Gala, Divye and Raff, Edward and Eaton, Joe and Rees, Brad and Zedlewski, John and Oates, Tim},
  journal={arXiv preprint arXiv:2104.06357},
  year={2021}
}
```

If citing the single-linkage agglomerative clustering APIs, please consider the following BibTeX:

```bibtex
@misc{nolet2023cuslink,
  title={cuSLINK: Single-linkage Agglomerative Clustering on the GPU},
  author={Corey J. Nolet and Divye Gala and Alex Fender and Mahesh Doijade and Joe Eaton and Edward Raff and John Zedlewski and Brad Rees and Tim Oates},
  year={2023},
  eprint={2306.16354},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
```

If citing CAGRA, please consider the following BibTeX:

```bibtex
@misc{ootomo2023cagra,
  title={CAGRA: Highly Parallel Graph Construction and Approximate Nearest Neighbor Search for GPUs},
  author={Hiroyuki Ootomo and Akira Naruse and Corey Nolet and Ray Wang and Tamas Feher and Yong Wang},
  year={2023},
  eprint={2308.15136},
  archivePrefix={arXiv},
  primaryClass={cs.DS}
}
```