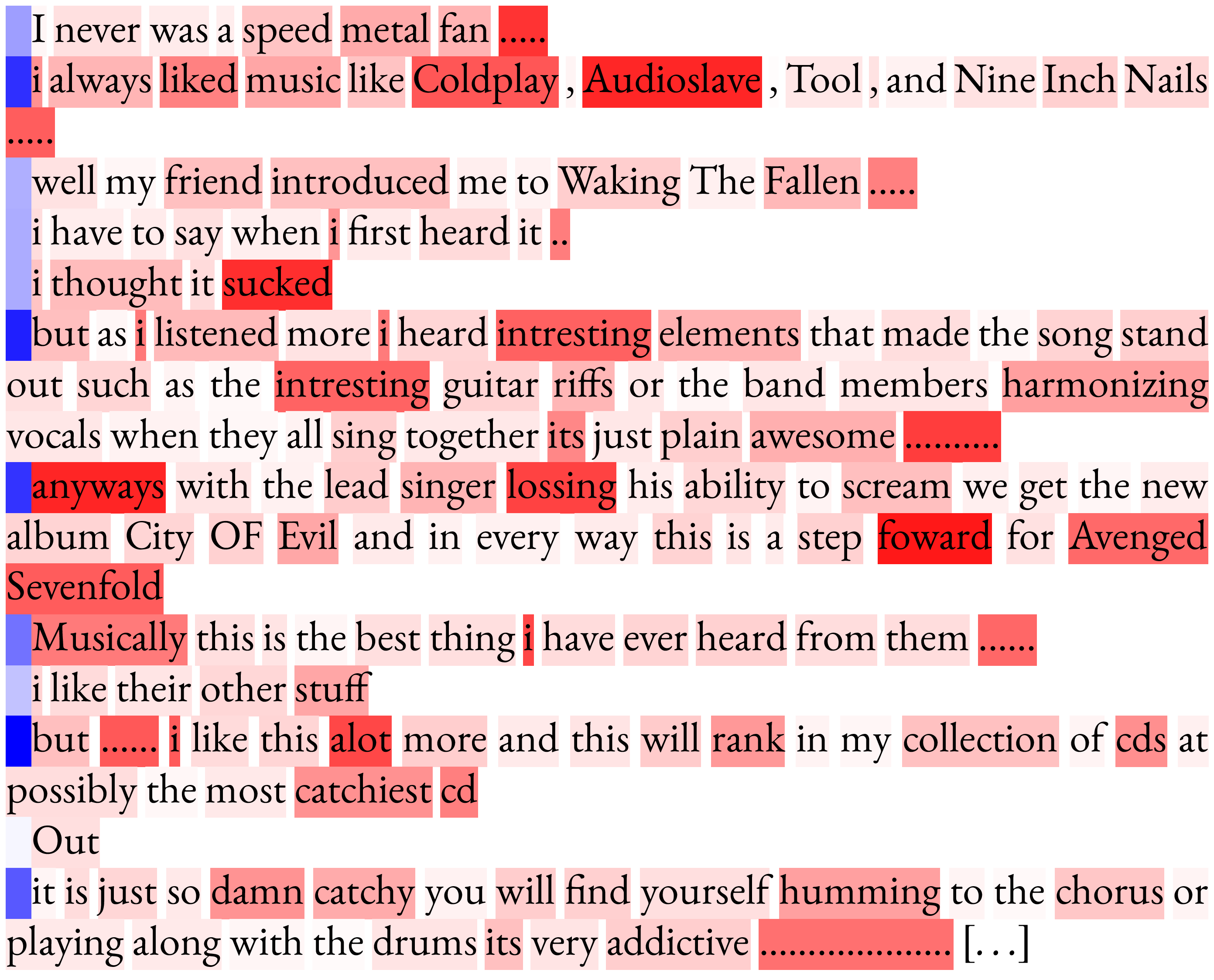

Authorship verification is the task of analyzing the linguistic patterns of two or more texts to determine whether they were written by the same author. The analysis is traditionally performed by experts who consider linguistic features such as spelling mistakes, grammatical inconsistencies, and stylistic choices. Machine learning algorithms can be trained to perform the same task, but have traditionally relied on so-called stylometric features. The disadvantage of such features is that their reliability is greatly diminished for short and topically varied social media texts. In this work, we propose an attention-based Siamese neural network approach that learns neural features and makes it possible to visualize the decision-making process.
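To make the idea of an attention-based Siamese comparison concrete, here is a minimal NumPy sketch. It is not the model trained by this repository: the random token vectors, the single attention vector `w`, and the function names are all assumptions chosen for illustration. It only shows the two ingredients named above, namely that both texts pass through the same parameters and that the attention weights can be inspected afterwards.

```python
import numpy as np

def attention_pool(token_vecs, w):
    """Collapse a (tokens, dim) matrix into one vector using softmax attention."""
    scores = token_vecs @ w                      # one relevance score per token
    weights = np.exp(scores - scores.max())      # subtract max for numerical stability
    weights /= weights.sum()                     # softmax: weights sum to 1
    return weights @ token_vecs, weights

def siamese_distance(doc_a, doc_b, w):
    """Encode both documents with the SAME parameters, then compare them."""
    vec_a, att_a = attention_pool(doc_a, w)
    vec_b, att_b = attention_pool(doc_b, w)
    return np.linalg.norm(vec_a - vec_b), att_a, att_b

# Hypothetical inputs: two "documents" of 5 and 7 tokens with 8-dim embeddings.
rng = np.random.default_rng(0)
w = rng.normal(size=8)
doc_a = rng.normal(size=(5, 8))
doc_b = rng.normal(size=(7, 8))

dist, att_a, att_b = siamese_distance(doc_a, doc_b, w)
print("distance:", dist)
print("attention over tokens of doc_a:", att_a)
```

A small distance would be read as "same author" once a threshold has been chosen, and the attention weights indicate which tokens contributed most to each document vector.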

This repository contains the source code used in our paper "Explainable Authorship Verification in Social Media via Attention-based Similarity Learning", published at the 2019 IEEE International Conference on Big Data (IEEE BigData 2019).

Please feel free to send any comments or suggestions! (benedikt.boenninghoff[at]rub.de)

We used Python 3.6 (Anaconda 3.6). The following libraries are required:

- TensorFlow 1.12 - 1.15

- spacy 2.3.2 (download tokenizer via `python -m spacy download en_core_web_lg`)

- textacy 0.8.0

- fasttext 0.9.2

- numpy 1.18.1

- scipy 1.4.1

- pandas 1.0.4

- scikit-learn 0.20.3

- bs4 0.0.1

This repository works with a small Amazon review dataset comprising 9,000 review pairs written by 300 distinct authors. Because 99% accuracy is achievable on it, this dataset provides a simple sanity check for new authorship verification methods.

mkdir data

cd data

wget https://github.com/marjanhs/prnn/raw/master/data/amazon.7z

sudo apt-get install p7zip-full

7z x amazon.7z

We used pretrained word embeddings. You may prepare them as follows:

cd data

wget https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.en.300.bin.gz

gunzip cc.en.300.bin.gz

python main_preprocess.py
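If you want to inspect the pretrained embeddings yourself, the following sketch may help. It assumes the unpacked model sits at `data/cc.en.300.bin` relative to the repository root; the helper name `load_word_vectors` is hypothetical, and the way `main_preprocess.py` actually consumes the embeddings may differ. Only `fasttext.load_model` and `get_word_vector` from the `fasttext` package are used.

```python
from pathlib import Path

def load_word_vectors(model_path, words):
    """Return {word: vector} for the given words from a fastText .bin model."""
    path = Path(model_path)
    if not path.is_file():
        raise FileNotFoundError(f"fastText model not found: {path}")
    # Imported lazily so that the path check above runs first.
    import fasttext
    model = fasttext.load_model(str(path))
    # fastText builds vectors from subword n-grams, so unseen words also get a vector.
    return {word: model.get_word_vector(word) for word in words}
```

Calling `load_word_vectors("data/cc.en.300.bin", ["author", "style"])` should return two 300-dimensional vectors. Note that the model file is several gigabytes in size, so loading it needs a corresponding amount of memory.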

You can train AdHominem as follows:

python main_adhominem.py

Using the evaluation script of the PAN 2020 AV challenge, the results may look like this:

| AUC | c@1 | f_0.5_u | F1 | overall |
|---|---|---|---|---|
| 0.997 | 0.993 | 0.991 | 0.993 | 0.994 |
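Of the metrics above, c@1 is probably the least familiar. It is a variant of accuracy that gives partial credit for leaving difficult pairs unanswered. The sketch below is not the official evaluation script; it assumes the usual definition c@1 = (n_c + n_u * n_c / n) / n, where n_c is the number of correct answers and n_u the number of unanswered pairs, and it assumes that a score of exactly 0.5 marks a non-answer. The function name and the example values are made up for illustration.

```python
def c_at_1(true_labels, scores, threshold=0.5):
    """c@1: accuracy that rewards leaving hard cases unanswered.

    A score equal to `threshold` is treated as "no answer" (assumption).
    """
    n = len(true_labels)
    n_correct = 0
    n_unanswered = 0
    for label, score in zip(true_labels, scores):
        if score == threshold:
            n_unanswered += 1
        elif (score > threshold) == bool(label):
            n_correct += 1
    # Unanswered pairs are credited at the rate of the answered-correctly share.
    return (n_correct + n_unanswered * n_correct / n) / n

# Hypothetical example: two correct answers, one non-answer, one wrong answer.
labels = [1, 0, 1, 0]
scores = [0.9, 0.1, 0.5, 0.8]
print(c_at_1(labels, scores))  # 0.625
```

With no unanswered pairs, the formula reduces to plain accuracy.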

If you use our code or data, please cite the paper using the following BibTeX entry:

@inproceedings{Boenninghoff2019b,

author={Benedikt Boenninghoff and Steffen Hessler and Dorothea Kolossa and Robert M. Nickel},

title={Explainable Authorship Verification in Social Media via Attention-based Similarity Learning},

booktitle={IEEE International Conference on Big Data (IEEE Big Data 2019), Los Angeles, CA, USA, December 9-12, 2019},

year={2019},

}