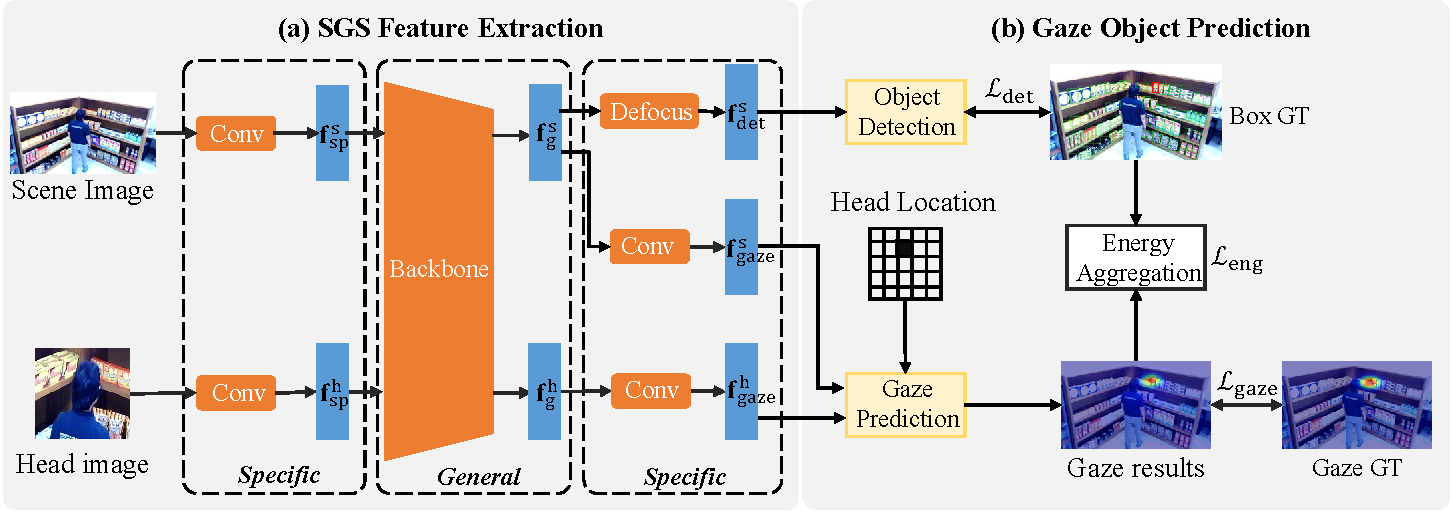

This repository is the official implementation of our CVPR 2022 paper "GaTector: A Unified Framework for Gaze Object Prediction", in which we propose a novel task named Gaze Object Prediction.

Note: we use GOO-Real to obtain the object detection results and GOO-Synth to obtain the gaze object prediction results.

The GOO dataset contains two subsets: GOO-Synth and GOO-Real.

You can download GOO-synth from OneDrive:

Train: part1, part2, part3, part4, part5, part6, part7, part8, part9, part10, part11

Test: GOOsynth-test_data

Annotation file:

GOOsynth-train_data_Annotation

You can download GOO-Real from OneDrive:

Train: GOOreal-train_data

Test: GOOreal-test_data

Annotation file:

Please make sure the data are organized as follows:

```
data
└── goo_dataset
    ├── goosynth
    │   ├── picklefile
    │   │   ├── goosynth_test_v2_no_segm.pkl
    │   │   └── goosynth_train_v2_no_segm.pkl
    │   ├── test
    │   │   └── images
    │   │       ├── 0.png
    │   │       ├── 1.png
    │   │       ├── ...
    │   │       └── 19199.png
    │   └── train
    │       └── images
    │           ├── 0.png
    │           ├── 1.png
    │           ├── ...
    │           └── 172799.png
    └── gooreal
        ├── train_data
        │   ├── 0.png
        │   ├── 1.png
        │   ├── ...
        │   └── 3608.png
        ├── test_data
        │   ├── 3609.png
        │   ├── 3610.png
        │   ├── ...
        │   └── 4512.png
        ├── train.pickle
        └── test.pickle
```
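As a quick sanity check before training, the sketch below lists which of the dataset paths used by the `main.py` commands in this README are missing. The path list is copied from those commands and the tree above; `EXPECTED` and `missing_paths` are hypothetical names, not part of the repository.

```python
from pathlib import Path

# Paths (relative to the repository root) referenced by the README's main.py commands.
EXPECTED = [
    "data/goo_dataset/goosynth/picklefile/goosynth_train_v2_no_segm.pkl",
    "data/goo_dataset/goosynth/picklefile/goosynth_test_v2_no_segm.pkl",
    "data/goo_dataset/goosynth/train/images",
    "data/goo_dataset/goosynth/test/images",
    "data/goo_dataset/gooreal/train_data",
    "data/goo_dataset/gooreal/test_data",
    "data/goo_dataset/gooreal/train.pickle",
    "data/goo_dataset/gooreal/test.pickle",
]


def missing_paths(root="."):
    """Return the expected dataset paths that do not exist under `root`."""
    base = Path(root)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing:")
        for rel in missing:
            print("  " + rel)
    else:
        print("Dataset layout looks complete.")
```

If you only use one subset, the entries for the other subset will simply be reported as missing.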

Requirements

```shell
conda env create -n Gatector -f environment.yaml
```

To run experiments on the GOO dataset, use the following commands:

Experiments on GOO-Synth:

```shell
python main.py --train_mode 0 \
    --train_dir './data/goo_dataset/goosynth/train/images/' \
    --train_annotation './data/goo_dataset/goosynth/picklefile/goosynth_train_v2_no_segm.pkl' \
    --test_dir './data/goo_dataset/goosynth/test/images/' \
    --test_annotation './data/goo_dataset/goosynth/picklefile/goosynth_test_v2_no_segm.pkl'
```

Experiments on GOO-Real:

```shell
python main.py --train_mode 1 \
    --train_dir './data/goo_dataset/gooreal/train_data/' \
    --train_annotation './data/goo_dataset/gooreal/train.pickle' \
    --test_dir './data/goo_dataset/gooreal/test_data/' \
    --test_annotation './data/goo_dataset/gooreal/test.pickle'
```
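If you launch these runs from a script, one option is to keep the two argument sets in a dictionary and build the argument list in Python. This is only a sketch: `CONFIGS` and `main_py_argv` are hypothetical names, and the flag values are taken from the two commands above (`--train_mode 0` for GOO-Synth, `--train_mode 1` for GOO-Real).

```python
import shlex

DATA = "./data/goo_dataset"

# Flag/value pairs for main.py; train_mode 0 = GOO-Synth, 1 = GOO-Real.
CONFIGS = {
    "goosynth": {
        "--train_mode": "0",
        "--train_dir": f"{DATA}/goosynth/train/images/",
        "--train_annotation": f"{DATA}/goosynth/picklefile/goosynth_train_v2_no_segm.pkl",
        "--test_dir": f"{DATA}/goosynth/test/images/",
        "--test_annotation": f"{DATA}/goosynth/picklefile/goosynth_test_v2_no_segm.pkl",
    },
    "gooreal": {
        "--train_mode": "1",
        "--train_dir": f"{DATA}/gooreal/train_data/",
        "--train_annotation": f"{DATA}/gooreal/train.pickle",
        "--test_dir": f"{DATA}/gooreal/test_data/",
        "--test_annotation": f"{DATA}/gooreal/test.pickle",
    },
}


def main_py_argv(subset):
    """Build the argv list for `python main.py` on the given subset."""
    argv = ["python", "main.py"]
    for flag, value in CONFIGS[subset].items():
        argv += [flag, value]
    return argv


if __name__ == "__main__":
    # Print shell-quoted commands; pass the list itself to subprocess.run to launch.
    for name in CONFIGS:
        print(shlex.join(main_py_argv(name)))
```

Building an argument list rather than a single string avoids shell-quoting problems when paths contain spaces.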

You can download the pre-trained models from Baidu Netdisk (Baiduyun):

Pre-trained Models (extraction code: xfyk).

Note: `get_map.py` is located at `./lib/get_map.py`.

Object detection results

Get mAP:

```shell
python get_map.py --train_mode 1 --dataset 1
```

Get wUoC:

```shell
python get_map.py --train_mode 1 --getwUOC True --dataset 1
```

Object detection + gaze estimation

During training, the gaze estimation metrics are saved to `gaze_performence.txt`.

Get mAP:

```shell
python get_map.py --train_mode 0 --dataset 0
```

Get wUoC:

```shell
python get_map.py --train_mode 0 --getwUOC True --dataset 0
```
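The four `get_map.py` invocations differ only in `--train_mode`, `--dataset`, and the optional `--getwUOC True`. The hypothetical helper below (not part of the repository) makes that explicit. We read `1`/`1` as the GOO-Real object detection setting and `0`/`0` as the GOO-Synth detection + gaze estimation setting, following the two headings above. Since the script lives in `./lib`, you may need to run it from that directory or pass `script="lib/get_map.py"`.

```python
import shlex

# (train_mode, dataset) values used in the README's get_map.py commands:
#   "gooreal"  -> object detection on GOO-Real
#   "goosynth" -> object detection + gaze estimation on GOO-Synth
SETTINGS = {"gooreal": ("1", "1"), "goosynth": ("0", "0")}


def get_map_argv(subset, wuoc=False, script="get_map.py"):
    """Return argv for one get_map.py run: mAP by default, wUoC if wuoc=True."""
    train_mode, dataset = SETTINGS[subset]
    argv = ["python", script, "--train_mode", train_mode]
    if wuoc:
        # Same flag and value as the README's wUoC commands.
        argv += ["--getwUOC", "True"]
    argv += ["--dataset", dataset]
    return argv


if __name__ == "__main__":
    for subset in SETTINGS:
        for wuoc in (False, True):
            print(shlex.join(get_map_argv(subset, wuoc)))
```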

Note: you can test on your own data by modifying the images and annotations in `./test_data/data_proc` or `./test_data/VOCdevkit/VOC2007`.
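If `./test_data/VOCdevkit/VOC2007` follows the standard Pascal VOC layout (an assumption; compare against the files already in that folder first), each image needs an XML annotation listing its size and object boxes. The sketch below builds a minimal annotation of that kind with Python's `xml.etree.ElementTree`. The function name `voc_annotation` and the class name in the example are hypothetical, and the repository may expect additional fields.

```python
import xml.etree.ElementTree as ET


def voc_annotation(filename, width, height, objects):
    """Build a minimal Pascal VOC-style annotation tree.

    objects: list of (class_name, xmin, ymin, xmax, ymax) in pixels.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", 3)):
        ET.SubElement(size, tag).text = str(value)
    for name, xmin, ymin, xmax, ymax in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        ET.SubElement(obj, "difficult").text = "0"
        box = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), (xmin, ymin, xmax, ymax)):
            ET.SubElement(box, tag).text = str(int(value))
    return ET.ElementTree(root)


if __name__ == "__main__":
    tree = voc_annotation("0.jpg", 640, 480, [("hypothetical_class", 10, 20, 110, 220)])
    print(ET.tostring(tree.getroot(), encoding="unicode"))
    # To save: tree.write("<path to VOC2007>/Annotations/0.xml")
```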

Our model achieves the following performance on the GOO-Synth dataset:

| AUC | Dist. | Ang. | AP | AP50 | AP75 | GOP wUoC (%) | GOP mSoC (%) |
|---|---|---|---|---|---|---|---|
| 0.957 | 0.073 | 14.91 | 56.8 | 95.3 | 62.5 | 81.10 | 67.94 |

GOO-Real (object detection): ep100-loss0.374-val_loss0.359

GOO-Synth (gaze estimation + object detection): ep098-loss15.975-val_loss42.955

Because the wUoC metric is invalid when the ground-truth box and the predicted box have equal areas, we redefine the weight of wUoC as min(p/a, g/a) and call the improved metric mSoC. We additionally report GOP performance under mSoC (the GOP mSoC column in the results table). Fig. (a) and (b) show the definition of mSoC and a failure case of wUoC, respectively.
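The redefined weight min(p/a, g/a) can be sketched as follows. This snippet assumes p and g are the areas of the predicted and ground-truth boxes and a is the area of the smallest axis-aligned box enclosing both; these symbols are not defined in this README, so that reading is an assumption. The snippet computes only the weight, not the full mSoC metric, and `msoc_weight` is a hypothetical name.

```python
# Boxes are (x1, y1, x2, y2) in pixels.

def area(box):
    """Area of an axis-aligned box (0 for degenerate boxes)."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def enclosing_box(p, g):
    """Smallest axis-aligned box containing both p and g (assumed meaning of `a`)."""
    return (min(p[0], g[0]), min(p[1], g[1]), max(p[2], g[2]), max(p[3], g[3]))


def msoc_weight(p, g):
    """Weight min(p/a, g/a), with p, g, a read as box areas (assumption)."""
    a = area(enclosing_box(p, g))
    if a == 0:
        return 0.0
    return min(area(p) / a, area(g) / a)


if __name__ == "__main__":
    same = ((0, 0, 10, 10), (0, 0, 10, 10))
    side_by_side = ((0, 0, 10, 10), (10, 0, 20, 10))
    print(msoc_weight(*same), msoc_weight(*side_by_side))
```

Under this reading, two equal-area boxes receive a weight of 1 only when they coincide; two equal-area boxes placed side by side receive 0.5.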

```bibtex
@inproceedings{wang2022gatector,
  title={GaTector: A Unified Framework for Gaze Object Prediction},
  author={Wang, Binglu and Hu, Tao and Li, Baoshan and Chen, Xiaojuan and Zhang, Zhijie},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={19588--19597},
  year={2022}
}
```