Neural network quantization is the process that compresses the weights in a neural network to use a smaller number representation. This makes its representation smaller, both on disk and in memory, and can make the computation less expensive for accelerators, typically by using small integer weights for the coefficients. At the same time, it reduces the precision of the computations, so that good algorithm design is necessary to maintain good quality.

This repository contains tools to research post-training neural networks quantization, with methods to improve over the current state-of-the-art. It is purely for analysis purpose: complete implementations will be made available on other repositories. Our main contributions are two simple improvements that are compatible with most quantization methods: an improved scaling method, and making better use of the bias during quantization.

Sleekit uses a very generic quantization method. The steps to quantize a layer are:

- gathering sample data: we run the network on some data samples to gather statistical informations for each layer;

- chosing of a codebook: a codebook gives a limited number of values that can be represented, and we round the weights to one of the values in the codebook;

- scaling the weights: we apply a scaling factor so that the weights are close to the chosen codebook;

- optimizing the weights: to maintain a good quality for the neural network, we use a specialized algorithm to tweak the weights after rounding.

We present deveral generic improvements that can be applied to any quantization method. They will target both the scaling step, to select better scaling factors, and the weight optimization step to reduce the layer error.

To develop our methods we analyze the effect of quantization decisions on a per-layer basis. Despite many previous works using network-level metrics, post-training quantization methods minimize the error at the layer level. Analyzing the error at the layer level is therefore the natural approach. Moreover, network-level metrics have a tendency to be noisy, can hide small quantization errors or on the contrary be over-sensitive to some layers.

Our baseline for comparison is the GPTQ algorithm with 3-bit and 1.5-bit weights. For the layer weights and metrics, we use layer statistics from a full accuracy run on several smaller networks (OPT-125M, OPT-350M, BLOOM-560M). We compare the error introduced by the quantization with and without our methods.

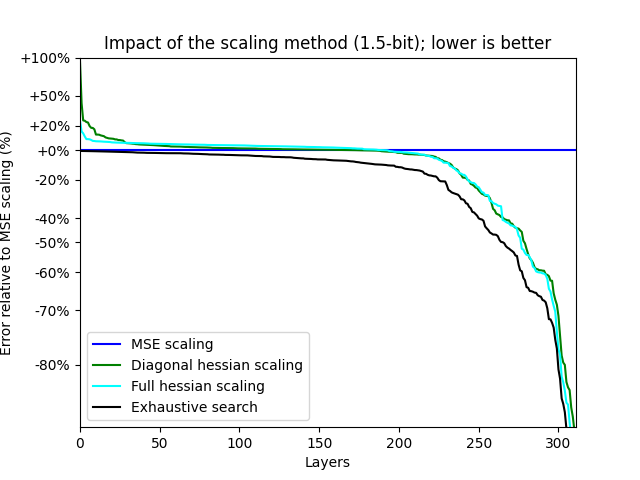

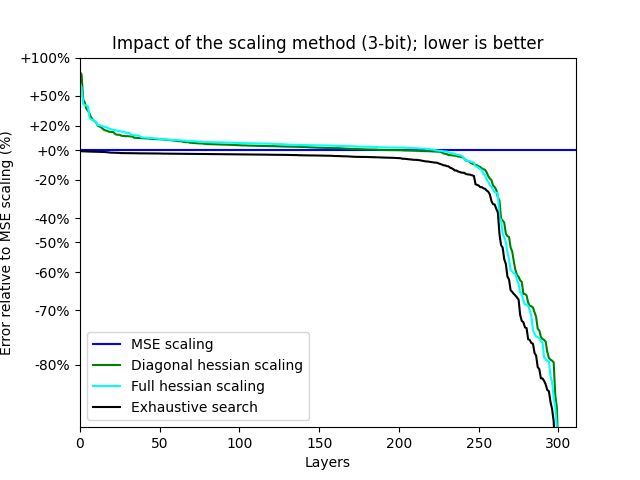

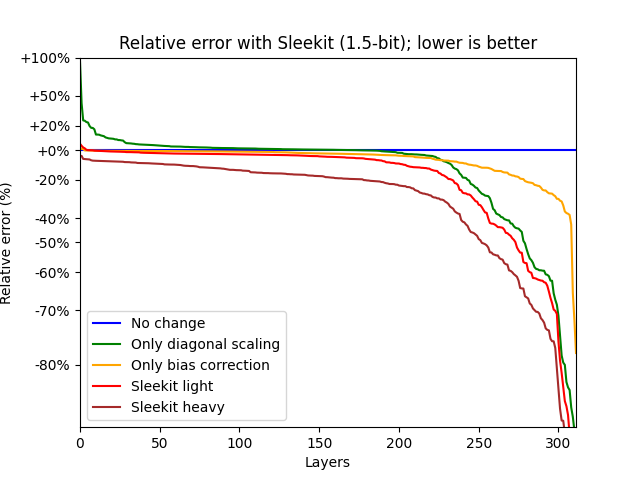

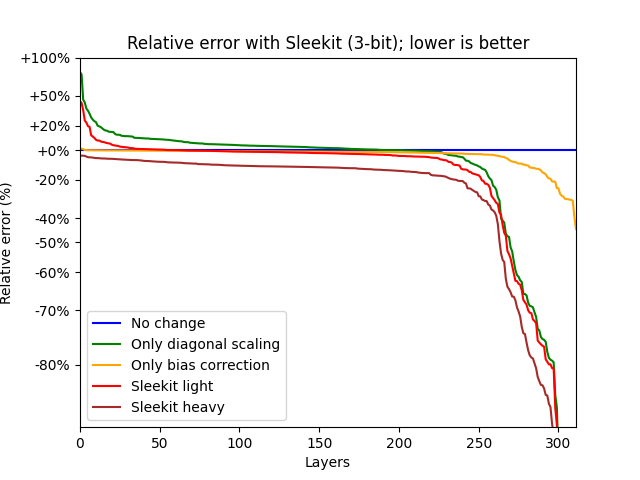

A good scaling factor minimizes the error introduced by quantization. The typical method is to chose a scaling factor that minimizes the mean squared error on the weights (MSE). We introduce a more precise approach, that optimizes the layer's result directly.

For weight optimization, we already have access to an accurate measure of the layer's error (the hessian matrix

- minimizing the mean squared error after rounding to the nearest;

- using the full hessian matrix to compute the error, which is computationally expensive;

- using the diagonal of the hessian matrix to compute the error, which has the same computational cost as the MSE;

- using the full weight optimization to compute the error for each scaling value, which is extremely expensive but is theoretically optimal.

The usual approach of minimizing the MSE yields results that are far from optimal. Using the full hessian matrix or its diagonal yields similar results that are on average much better than MSE alone, although they are still far from the theoretical optimum of integrating the weight optimization algorithm with the scaling method.

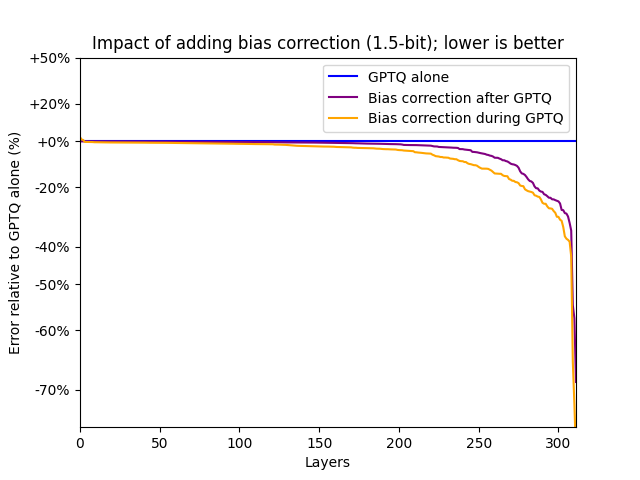

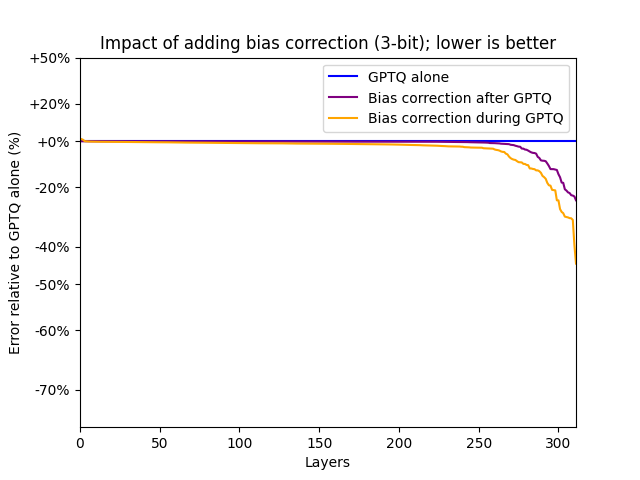

Bias correction is a method used to reduce the impact of quantization on a layer.

Newer quantization methods behave much better, and it is not used much anymore.

However, it is compatible and there is no reason not to use both.

The effect of bias correction can even be integrated in the cost function used for weight optimization, using

We test three different ways to update the bias:

- applying weight optimization alone (GPTQ) without bias correction;

- applying bias correction after weight optimization, yielding a slightly smaller layer error;

- taking the effect of bias correction into account during weight optimization.

Adding back bias correction greatly improves certain layers, in particular some attention layers in all networks. While it is always an improvement in theory, bias correction can play havoc with the optimization algorithm, and taking it into account during weight optimization is sometimes detrimental. Adding bias correction after optimization, or picking the best of the two results, is always better than the baseline. Unsurprisingly, it has more impact with a more agressive quantization.

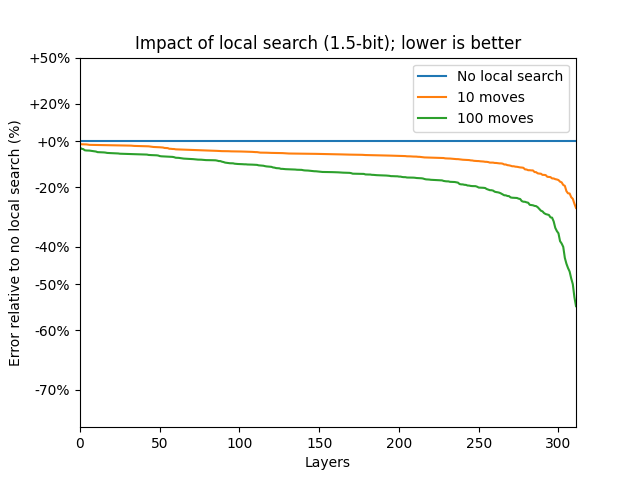

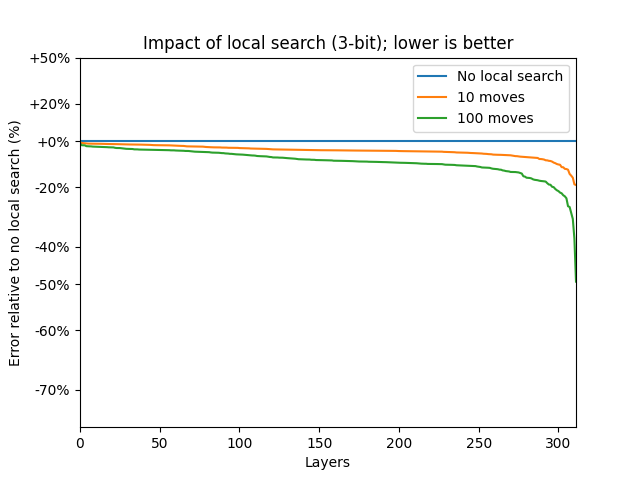

The weight optimization problem is NP-hard, and can only be solved at scale in an approximate manner. GPTQ provides a good heuristic for it, however the heuristic of choice to obtain good solutions to similar problems (QUBO) is a simple local search. For this reason, we test the effect of applying a few local search moves after GPTQ, in a best-first manner.

The effect of just a few local search moves is notable on many layers, and applying them after GPTQ can drastically reduce layer error.

Other tricks yield smaller but useful improvements:

- Using a different ordering for GPTQ. GPTQ makes rounding decisions for the weights in a greedy manner; they obtain a good ordering using the diagonal of the matrix in decreasing order. We improve this slightly, and multiply this value by the sum of squares of the quantization error (without correction); this takes better account of the effect of saturation.

- Using a different dampening for GPTQ. GPTQ does not behave well on ill-conditioned matrix, and adding a larger penalty term to the matrix paradoxically yields better results.

The following approaches did not yield promising results and were abandoned:

- Improved codebooks: the data is far from being gaussian-distributed, but training a codebook naively is not better than a NF4 codebook. A good codebook training would take the importance of individual weights in the layer into account.

- Entropy coding: it is tempting to combine codebook optimization with entropy coding to reduce storage needs. However, the gain in entropy is not huge compared to an error-optimized codebook, and does not seem worth the effort.

- GPTQ reordering: clever heuristic orderings for GPTQ based on the hessian matrix do not bring a reduction in layer error, compared to using its diagonal as the original paper does. We tested several variations using the diagonal of the inverse and pivoted Cholesky decompositions.

- More complex algorithms for weight optimization: it just doesn't scale, but if you want to go in this direction you probably want to use the MQLib as a solver.

Finally, we put these algorithms together in Sleekit:

- the hessian matrix is modified to represent the effect of bias correction;

- scaling is performed based on the hessian diagonal;

- weight optimization uses our slightly improved ordering.

The computational cost of the algorithm is not increased so far compared to GPTQ. At the cost of additional computations we add:

- local search is performed during weight optimization for 100 moves.

The various tricks interact well, and their benefits seem to stack, reducing the squared error by one third on average.

The algorithms in this repository build on the following works:

- Bias correction and GPTQ for the approach to weight quantization, as well as similar works such as AdaRound, AdaQuant, OBQ or GPTVQ;

- Lloyd and LBG for the choice of quantization grids;

- The GPTQ repository was used for data and testing.