You need to evaluate a few fine-tuned LLM checkpoints. None of the existing benchmark suites fit your domain task, and your content can't be reviewed by a third party (e.g. GPT-4). Human evaluation seems to be the most viable option... Well, maybe it's not that bad!

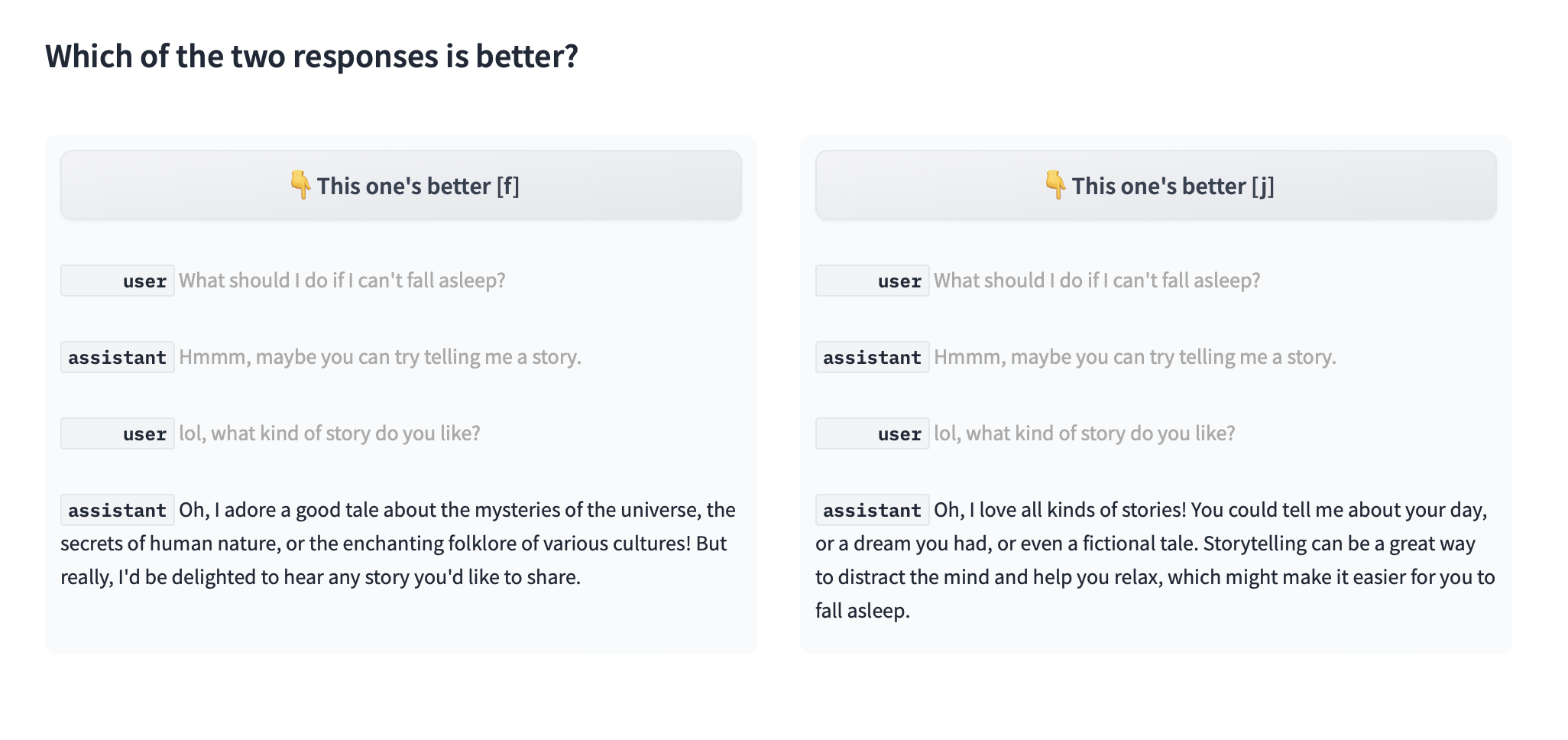

Let's strip down the evaluation process to just a single question:

Press `f` or `j` to choose the winner of each match. You make the decisions one match at a time.

Inspired by Chatbot Arena.

- In a Python (>= 3.12) environment, run `pip install -r requirements.txt`.
- Fill out `config.toml` with your model endpoint information and prompts. See `config-example.toml`.
- Run `python generate.py config.toml` to gather responses from models.
- Run `python evaluate.py config.toml` to host your competition!

In each match, two of the models/checkpoints are compared by anonymously evaluating their responses to the same prompt. The order of matches is shuffled.
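A minimal sketch of how such a session could run, assuming each match is a pair of `(model, response)` tuples and that `f` picks the left response and `j` the right one. The key mapping and the names `run_matches` and `ask` are hypothetical, not this project's API. Both the match order and the left/right placement are randomized, so neither position nor model name leaks to the evaluator.

```python
import random
from typing import Callable

Entry = tuple[str, str]  # (model name, response text)


def run_matches(
    matches: list[tuple[Entry, Entry]],
    ask: Callable[[str, str], str],
    rng: random.Random,
) -> list[tuple[str, str]]:
    """Present matches anonymously in random order; return (winner, loser) model names.

    `ask(left_text, right_text)` is a hypothetical UI hook that shows two responses
    and returns the key pressed: "f" for the left one, "j" for the right one (assumed).
    """
    order = list(matches)
    rng.shuffle(order)  # shuffle the order of matches
    results = []
    for pair in order:
        left, right = rng.sample(pair, 2)  # random sides, so position reveals nothing
        key = ask(left[1], right[1])  # only the texts are shown, never model names
        if key not in ("f", "j"):
            raise ValueError(f"expected 'f' or 'j', got {key!r}")
        winner, loser = (left, right) if key == "f" else (right, left)
        results.append((winner[0], loser[0]))
    return results
```

In a real UI, `ask` would block on a key press; passing it in as a callable keeps the matching logic testable without a terminal.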

`mode = "top3_1v1"` (default if there are 2 models)

A simple additive scoring system. For each prompt:

- For each model, generate m=8 sample responses. Run a single-elimination tournament to get the top 3 responses. (m matches × 2 models)

- Let the best responses of the two models compete, then the 2nd-best, then the 3rd-best. The winner of each match gets 4.8, 3.2, and 2.0 points, respectively. (3 matches)
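One way to rank a top 3 from m = 8 samples in exactly m matches is a single-elimination bracket (m − 1 = 7 matches) plus a third-place match between the two semifinal losers. The sketch below assumes that design and a power-of-two sample count; `top3` and `prefer_first` (a stand-in for the human pressing `f` or `j`) are hypothetical names.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def top3(samples: list[T], prefer_first: Callable[[T, T], bool], rng: random.Random) -> list[T]:
    """Rank the top 3 samples of one model with a single-elimination bracket.

    Uses len(samples) - 1 bracket matches plus one 3rd-place match, i.e. len(samples) in total.
    `prefer_first(a, b)` stands in for the human judgement: True if `a` wins.
    """
    n = len(samples)
    if n < 4 or n & (n - 1):
        raise ValueError("expected a power-of-two number of samples, at least 4")
    bracket = samples[:]
    rng.shuffle(bracket)  # random seeding of the bracket
    semifinal_losers: list[T] = []
    runner_up = None
    while len(bracket) > 1:
        size = len(bracket)
        next_round = []
        for a, b in zip(bracket[::2], bracket[1::2]):
            winner, loser = (a, b) if prefer_first(a, b) else (b, a)
            if size == 4:
                semifinal_losers.append(loser)
            elif size == 2:
                runner_up = loser
            next_round.append(winner)
        bracket = next_round
    # 3rd-place match between the two semifinal losers
    a, b = semifinal_losers
    third = a if prefer_first(a, b) else b
    return [bracket[0], runner_up, third]
```

The bracket trades precision for few matches: the true second-best sample may be knocked out by the winner in an early round, so "2nd" here means runner-up of the final.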

Number of samples, points, and prompt weights are configurable.

`mode = "mle_elo"` (default if there are 3+ models)

The maximum likelihood estimate (MLE) of Elo ratings is used to rank models. The Elo implementation is based on Chatbot Arena's analysis notebook. For each prompt:

- For each model, generate m=16 sample responses. Eliminate half of them by pairwise comparison. (m/2 matches x n models, n ≤ m/2+1)

- Randomly arrange matches among the surviving responses, with each one participating in exactly one match. (mn/4 matches)
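The two steps above could look roughly like this. It is a sketch with hypothetical names (`halve`, `arrange_matches`, `prefer_first`), and it assumes that the random pairing should never pit two responses of the same model against each other. Always drawing from the model with the most responses left keeps the pairing from getting stuck, since no model ever holds more than half of the remaining responses.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def halve(samples: list[T], prefer_first: Callable[[T, T], bool], rng: random.Random) -> list[T]:
    """Randomly pair one model's samples; the winner of each pair survives (len/2 matches)."""
    if len(samples) % 2:
        raise ValueError("need an even number of samples")
    pool = samples[:]
    rng.shuffle(pool)
    return [a if prefer_first(a, b) else b for a, b in zip(pool[::2], pool[1::2])]


def arrange_matches(
    survivors: dict[str, list[T]], rng: random.Random
) -> list[tuple[tuple[str, T], tuple[str, T]]]:
    """Pair every surviving response exactly once, never against its own model."""
    remaining = {m: rs[:] for m, rs in survivors.items()}
    total = sum(map(len, remaining.values()))
    if total % 2 or max(map(len, remaining.values()), default=0) > total // 2:
        raise ValueError("no valid pairing exists")
    for rs in remaining.values():
        rng.shuffle(rs)
    matches = []
    while any(remaining.values()):
        models = list(remaining)
        rng.shuffle(models)  # random tie-breaking for max() below
        # Draw from the model with the most responses left; this preserves the
        # invariant "no model holds more than half of what remains".
        a = max(models, key=lambda m: len(remaining[m]))
        b = rng.choice([m for m in models if m != a and remaining[m]])
        matches.append(((a, remaining[a].pop()), (b, remaining[b].pop())))
    rng.shuffle(matches)
    return matches
```

With m = 16 and n = 3, each model keeps 8 responses after halving, and `arrange_matches` produces mn/4 = 12 cross-model matches.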

Elo rating is fitted after all matches are completed. Number of samples and prompt weights are configurable.

For development, install the dev dependencies and the pre-commit hooks: run `pip install -r requirements-dev.txt`, then `pre-commit install`.