Authors: Donghao Zhou, Pengfei Chen, Qiong Wang, Guangyong Chen, Pheng-Ann Heng

Affiliations: SIAT-CAS, UCAS, Tencent, Zhejiang Lab, CUHK

[Paper] | [Poster] | [Slides] | [Video]

Abstract

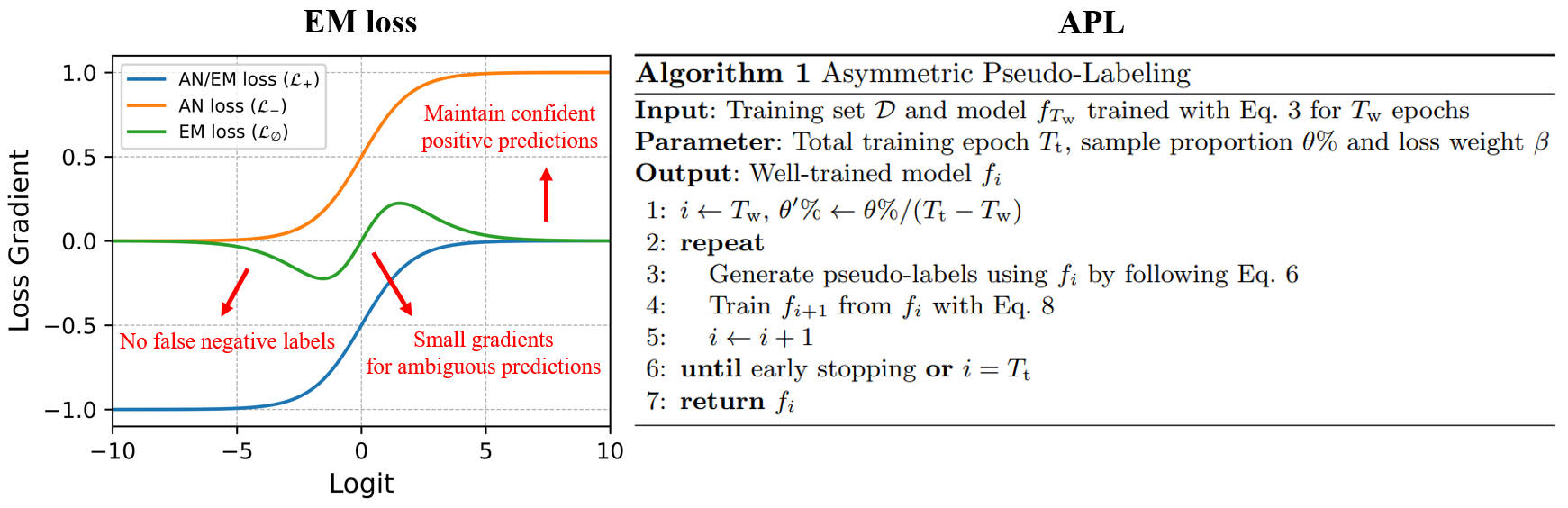

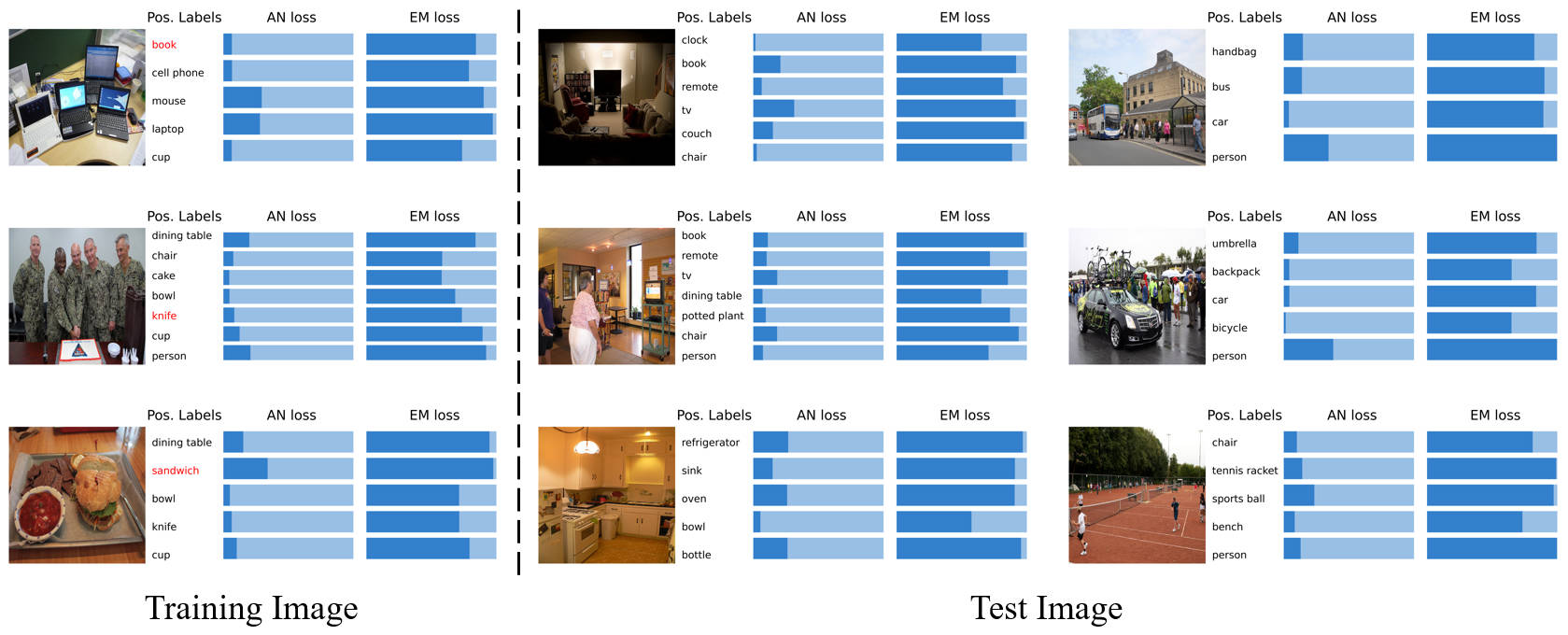

Due to the difficulty of collecting exhaustive multi-label annotations, multi-label datasets often contain partial labels. We consider an extreme of this weakly supervised learning problem, called single positive multi-label learning (SPML), where each multi-label training image has only one positive label. Traditionally, all unannotated labels are assumed to be negative in SPML, which introduces false negative labels and causes model training to be dominated by assumed negative labels. In this work, we choose to treat all unannotated labels from an alternative perspective, i.e., acknowledging that they are unknown. Hence, we propose an entropy-maximization (EM) loss to attain a special gradient regime for providing proper supervision signals. Moreover, we propose asymmetric pseudo-labeling (APL), which adopts asymmetric-tolerance strategies and a self-paced procedure, to cooperate with the EM loss and provide more precise supervision. Experiments show that our method significantly improves performance and achieves state-of-the-art results on all four benchmarks.
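To make the idea of "acknowledging the unknown" concrete, here is a minimal plain-Python sketch of an entropy-maximization loss for one image, under stated assumptions: the single annotated positive gets the usual BCE term -log p, and every unannotated label contributes -alpha * H(p), so minimizing the loss maximizes its entropy instead of pushing it to 0. The weight alpha, its default value, and averaging over classes are assumptions of this sketch; see the paper for the exact formulation. The helper unannotated_grad shows the analytic gradient of the unannotated term with respect to its logit z, alpha * z * p * (1 - p), which is zero at z = 0 and decays for large |z|.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_entropy(p: float, eps: float = 1e-7) -> float:
    """Entropy H(p) of a Bernoulli variable, in nats (clamped for stability)."""
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def em_loss(logits, observed_positive: int, alpha: float = 0.1) -> float:
    """Sketch of an entropy-maximization (EM) loss for a single image.

    logits: per-class logits for the image.
    observed_positive: index of the single annotated positive label.
    alpha: weight on the entropy term (hypothetical default for illustration).
    """
    total = 0.0
    for j, z in enumerate(logits):
        p = sigmoid(z)
        if j == observed_positive:
            # Annotated positive: standard BCE term, -log p.
            total += -math.log(max(p, 1e-7))
        else:
            # Unannotated label: treated as unknown, so we maximize its
            # entropy by minimizing -alpha * H(p) instead of pushing p to 0.
            total += -alpha * binary_entropy(p)
    # Averaging over classes is an assumption of this sketch.
    return total / len(logits)


def unannotated_grad(z: float, alpha: float = 0.1) -> float:
    """Analytic d/dz of -alpha * H(sigmoid(z)), i.e. alpha * z * p * (1 - p)."""
    p = sigmoid(z)
    return alpha * z * p * (1.0 - p)


if __name__ == "__main__":
    # One image, three classes; class 0 is the single observed positive.
    print(em_loss([2.0, 0.0, -3.0], observed_positive=0))
    # The gradient is zero at z = 0 (p = 0.5) and decays for large |z|.
    for z in (0.0, 1.0, 4.0, 10.0):
        print(z, unannotated_grad(z))
```

Under gradient descent, the unannotated term moves a logit toward 0 (p = 0.5), and labels the model is already very confident about receive only a small push from it.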

- 2022.11: We have collected relevant papers and code for SPML in Awesome-Single-Positive-Multi-Label-Learning!

- 2022.11: We have provided some usage tips for adopting our method in your research. We hope they help in your work!

- Create a Conda environment for the code:

conda create --name SPML python=3.8.8

- Activate the environment:

conda activate SPML

- Install the dependencies:

pip install -r requirements.txt

- Run the following commands to download PASCAL VOC 2012:

cd {PATH-TO-THIS-CODE}/data/pascal

curl http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar --output pascal_raw.tar

tar -xf pascal_raw.tar

rm pascal_raw.tar

- Run the following commands to download MS-COCO 2014:

cd {PATH-TO-THIS-CODE}/data/coco

curl http://images.cocodataset.org/annotations/annotations_trainval2014.zip --output coco_annotations.zip

curl http://images.cocodataset.org/zips/train2014.zip --output coco_train_raw.zip

curl http://images.cocodataset.org/zips/val2014.zip --output coco_val_raw.zip

unzip -q coco_annotations.zip

unzip -q coco_train_raw.zip

unzip -q coco_val_raw.zip

rm coco_annotations.zip

rm coco_train_raw.zip

rm coco_val_raw.zip

- Follow the instructions on this website to download the raw images of NUS-WIDE, named Flickr.zip.

- Run the following commands:

mv {PATH-TO-DOWNLOAD-FILES}/Flickr.zip {PATH-TO-THIS-CODE}/data/nuswide

cd {PATH-TO-THIS-CODE}/data/nuswide

unzip -q Flickr.zip

rm Flickr.zip

❗Instead of re-crawling the images of the NUS-WIDE dataset as done in this paper, we download and use the official version of the NUS-WIDE dataset in our experiments.

- Download CUB_200_2011.tgz from this website.

- Run the following commands:

mv {PATH-TO-DOWNLOAD-FILES}/CUB_200_2011.tgz {PATH-TO-THIS-CODE}/data/cub

cd {PATH-TO-THIS-CODE}/data/cub

tar -xf CUB_200_2011.tgz

rm CUB_200_2011.tgz
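After the downloads, a quick layout check can catch an archive that was extracted into the wrong directory. The sketch below is a hypothetical helper, not part of this repository: the EXPECTED_LAYOUT mapping reflects how these public archives usually unpack (VOCdevkit/VOC2012, annotations/train2014/val2014, CUB_200_2011) and is an assumption; NUS-WIDE is left out because the layout of Flickr.zip is not described here.

```python
from pathlib import Path

# Expected top-level entries after extracting each archive. This mapping is an
# assumption based on the archives' usual layout, not taken from this repo.
# NUS-WIDE is omitted because the layout of Flickr.zip is not given here.
EXPECTED_LAYOUT = {
    "pascal": ["VOCdevkit/VOC2012"],
    "coco": ["annotations", "train2014", "val2014"],
    "cub": ["CUB_200_2011"],
}


def missing_paths(code_root, dataset):
    """Return the expected entries that are missing under data/{dataset}."""
    data_dir = Path(code_root) / "data" / dataset
    return [rel for rel in EXPECTED_LAYOUT[dataset] if not (data_dir / rel).exists()]


if __name__ == "__main__":
    import sys

    # Pass {PATH-TO-THIS-CODE} as the first argument (defaults to the current directory).
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for name in EXPECTED_LAYOUT:
        missing = missing_paths(root, name)
        print(f"{name}: {'ok' if not missing else 'missing ' + ', '.join(missing)}")
```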

For PASCAL VOC, MS-COCO, and CUB, use the provided Python scripts to format the data:

cd {PATH-TO-THIS-CODE}

python preproc/format_pascal.py

python preproc/format_coco.py

python preproc/format_cub.py

For NUS-WIDE, please download the formatted files here and move them to the corresponding path:

mv {PATH-TO-DOWNLOAD-FILES}/{DOWNLOAD-FILES} {PATH-TO-THIS-CODE}/data/nuswide

{DOWNLOAD-FILES} should be replaced by each of formatted_train_images.npy, formatted_train_labels.npy, formatted_val_images.npy, and formatted_val_labels.npy.
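Once the formatted files are in place, a small sanity check can confirm that each split's image list and label matrix line up. This is a sketch under assumptions not stated in this README: that formatted_{split}_images.npy is a 1-D array with one entry per image and formatted_{split}_labels.npy is a binary (num_images, num_classes) matrix. The function name check_formatted_split is hypothetical.

```python
from pathlib import Path

import numpy as np


def check_formatted_split(data_dir, split, allow_pickle=False):
    """Sanity-check one split's formatted files (assumed layout, see lead-in).

    Returns the (num_images, num_classes) shape of the label matrix.
    """
    data_dir = Path(data_dir)
    # Only enable allow_pickle for files you trust: it can execute code on load.
    images = np.load(data_dir / f"formatted_{split}_images.npy", allow_pickle=allow_pickle)
    labels = np.load(data_dir / f"formatted_{split}_labels.npy", allow_pickle=allow_pickle)
    if labels.ndim != 2:
        raise ValueError(f"{split}: expected a 2-D label matrix, got shape {labels.shape}")
    if len(images) != labels.shape[0]:
        raise ValueError(f"{split}: {len(images)} images but {labels.shape[0]} label rows")
    if not np.isin(labels, [0, 1]).all():
        raise ValueError(f"{split}: labels are not binary")
    return labels.shape
```

For example, check_formatted_split("data/nuswide", "val") from {PATH-TO-THIS-CODE} should return a shape rather than raise. If the image array was saved with an object dtype, loading it needs allow_pickle=True.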

Finally, run generate_observed_labels.py to generate single positive annotations from the full annotations of each dataset:

python preproc/generate_observed_labels.py --dataset {DATASET}

{DATASET} should be replaced by pascal, coco, nuswide, or cub.
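Conceptually, this step keeps one positive per image and hides everything else. The sketch below illustrates that idea with NumPy; it is not the repository's generate_observed_labels.py, and the function name, the uniform choice among positives, and the use of numpy.random.default_rng are assumptions. In the output, 0 means "unannotated", not "negative".

```python
import numpy as np


def sample_single_positive(full_labels, seed=0):
    """Keep exactly one randomly chosen positive per image; all other entries become 0.

    In the returned matrix, 0 means "unannotated", not "negative".
    """
    rng = np.random.default_rng(seed)
    full_labels = np.asarray(full_labels)
    observed = np.zeros_like(full_labels)
    for i, row in enumerate(full_labels):
        positives = np.flatnonzero(row == 1)
        if positives.size == 0:
            raise ValueError(f"image {i} has no positive label to keep")
        # Uniformly pick one of the image's true positives to stay observed.
        observed[i, rng.choice(positives)] = 1
    return observed


if __name__ == "__main__":
    full = np.array([[1, 0, 1, 1], [0, 1, 0, 0], [1, 1, 0, 0]])
    print(sample_single_positive(full, seed=0))
```

Fixing the seed makes the observed labels reproducible, so methods compared under the same seed see the same single positive per image.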

Run main.py to train and evaluate a model:

python main.py -d {DATASET} -l {LOSS} -g {GPU} -s {PYTORCH-SEED}

Command-line arguments are as follows:

- {DATASET}: The adopted dataset. (default: pascal | available: pascal, coco, nuswide, or cub)
- {LOSS}: The method used for training. (default: EM_APL | available: bce, iun, an, EM, or EM_APL)
- {GPU}: The GPU index. (default: 0)
- {PYTORCH-SEED}: The seed of PyTorch. (default: 0)
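For readers wiring these options into their own scripts, the documented flags and defaults can be mirrored with argparse. This is a hypothetical sketch, not the actual parser in main.py: the long option names (--dataset, --loss, --gpu, --pytorch-seed) and the integer types are assumptions; only the short flags, defaults, and choices come from the list above.

```python
import argparse

DATASETS = ["pascal", "coco", "nuswide", "cub"]
LOSSES = ["bce", "iun", "an", "EM", "EM_APL"]


def build_parser():
    """Hypothetical parser mirroring the documented main.py flags and defaults."""
    parser = argparse.ArgumentParser(description="Train and evaluate an SPML model.")
    parser.add_argument("-d", "--dataset", default="pascal", choices=DATASETS,
                        help="adopted dataset")
    parser.add_argument("-l", "--loss", default="EM_APL", choices=LOSSES,
                        help="method used for training")
    parser.add_argument("-g", "--gpu", type=int, default=0, help="GPU index")
    parser.add_argument("-s", "--pytorch-seed", type=int, default=0,
                        help="seed of PyTorch")
    return parser
```

For example, build_parser().parse_args(["-d", "coco", "-l", "EM"]) yields dataset="coco", loss="EM", gpu=0, and pytorch_seed=0, while an unknown loss name makes argparse exit with a usage error.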

For example, to train and evaluate a model on the PASCAL VOC dataset using our EM loss+APL, please run:

python main.py -d pascal -l EM_APL

- If you want to use our EM loss in your work and evaluate its performance with threshold-based metrics (e.g., F1-score, IOU-accuracy), please set the threshold to 0.75 rather than 0.5 for a fair comparison, as we did in Appendix E of our paper.

- The hyperparameters of our method for different datasets are described here.
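To see why the threshold choice matters, here is a toy micro-F1 sketch. Our reading (an interpretation, not stated in the tip above) is that the EM loss pulls scores of unannotated labels toward 0.5, the maximum-entropy point, so a 0.5 threshold can flip many of them to positive. The function name micro_f1, the micro averaging over all (image, class) pairs, and the toy scores are illustrative assumptions; the paper's evaluation may average differently.

```python
def micro_f1(probs, targets, threshold=0.75):
    """Micro-averaged F1 over all (image, class) pairs at a given threshold."""
    tp = fp = fn = 0
    for p_row, t_row in zip(probs, targets):
        for p, t in zip(p_row, t_row):
            predicted = p >= threshold
            if predicted and t == 1:
                tp += 1
            elif predicted and t == 0:
                fp += 1
            elif not predicted and t == 1:
                fn += 1
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom


if __name__ == "__main__":
    # Toy scores: true negatives sit slightly above 0.5.
    probs = [[0.9, 0.6], [0.55, 0.8]]
    targets = [[1, 0], [0, 1]]
    print(micro_f1(probs, targets, threshold=0.5))   # 0.6666666666666666
    print(micro_f1(probs, targets, threshold=0.75))  # 1.0
```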

If you find our work insightful or our code helpful in your research, please consider citing our paper:

@inproceedings{zhou2022acknowledging,
  title={Acknowledging the unknown for multi-label learning with single positive labels},
  author={Zhou, Donghao and Chen, Pengfei and Wang, Qiong and Chen, Guangyong and Heng, Pheng-Ann},
  booktitle={European Conference on Computer Vision},
  pages={423--440},
  year={2022},
  organization={Springer}
}

Feel free to contact me (Donghao Zhou: dh.zhou@siat.ac.cn) if anything is unclear.

Our code is built upon the repository of single-positive-multi-label. We would like to thank its authors for their excellent work.