Since XAI methods cannot be trusted blindly, we need metrics that provide a quality index for each explanation method. However, the state of the art has not yet converged on a standard metric: the objective assessment of explanation quality is still an active field of research, and the metrics proposed so far are not general but depend on the specific task of the model to which the XAI methods are applied.

Many efforts have been made to define quality measures for heatmaps and saliency maps that explain individual predictions of an image classification model. One of the main metrics used to evaluate explanations in the form of saliency maps is faithfulness.

The faithfulness of an explanation refers to whether the relevance scores reflect the true importance of the input variables. The assumption behind this evaluation metric is that perturbing the input variables that are relevant according to the heatmap should lead to a steeper decline of the prediction score than perturbing the less important ones. Thus, the average decline of the prediction score over several rounds of perturbation (starting from the most relevant input variables) defines an objective measure of heatmap quality: if the explanation identifies the truly relevant input variables, the decline should be large.

There are several ways to compute faithfulness; one of them is the Area Over the Perturbation Curve (AOPC), described below.

The AOPC approach measures the change in classifier output as pixels are sequentially perturbed (flipped in binary images, or set to a different value in RGB images) in order of their relevance as estimated by the explanation method. It can be seen as a greedy iterative procedure that measures how quickly the class encoded in the image disappears as information is progressively removed from the image.

The classification output should decrease more rapidly for methods that provide more accurate estimates of pixel relevance. This approach can be done in two ways:

- Most Relevant First (MoRF): The pixels are perturbed starting from the most important according to the heatmap (rapid decrease in classification output).

- Least Relevant First (LeRF): The least relevant pixels are perturbed first. In this case, the classification output should change more slowly the more accurate the saliency method.
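The two orderings differ only in the direction of the sort. A minimal sketch, assuming the relevance scores are held in a NumPy array (the function name `perturbation_order` is hypothetical, not part of this project):

```python
import numpy as np


def perturbation_order(relevance: np.ndarray, mode: str = "MoRF") -> np.ndarray:
    """Return the flat indices of the regions in the order they are perturbed.

    relevance: array of relevance scores (one per pixel or per block).
    mode: "MoRF" (most relevant first) or "LeRF" (least relevant first).
    """
    flat = relevance.ravel()
    if mode == "MoRF":
        # Descending relevance; the stable sort keeps ties in index order.
        return np.argsort(-flat, kind="stable")
    if mode == "LeRF":
        # Ascending relevance; the stable sort keeps ties in index order.
        return np.argsort(flat, kind="stable")
    raise ValueError(f"unknown mode: {mode!r}")


relevance = np.array([[0.1, 0.9],
                      [0.5, 0.3]])
print(perturbation_order(relevance, "MoRF"))  # [1 2 3 0]
print(perturbation_order(relevance, "LeRF"))  # [0 3 2 1]
```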

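In this work, we use the MoRF version. It is computed as follows:

$$
\text{AOPC} = \frac{1}{L+1}\left\langle \sum_{k=0}^{L} f\big(x^{(0)}_{\text{MoRF}}\big) - f\big(x^{(k)}_{\text{MoRF}}\big) \right\rangle_{p(x)}
$$

where $L$ is the number of perturbation steps, $f\big(x^{(k)}_{\text{MoRF}}\big)$ is the classifier output for the original class after $k$ perturbation steps in MoRF order ($x^{(0)}_{\text{MoRF}}$ being the unperturbed image), and $\langle \cdot \rangle_{p(x)}$ denotes the mean over all images in the dataset.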
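As a worked illustration of the MoRF AOPC computation, the sketch below averages $f(x^{(0)}) - f(x^{(k)})$ over $k = 0, \dots, L$ (dividing by $L+1$) for each image, then takes the mean over the dataset. The helpers are hypothetical, not the code in `AOPC_MoRF.py`; they assume `scores[k]` holds the classifier output for the original class after $k$ perturbation steps, with `scores[0]` the unperturbed image.

```python
from typing import Sequence


def aopc_single(scores: Sequence[float]) -> float:
    """Per-image AOPC term: (1 / (L + 1)) * sum_{k=0..L} [f(x^(0)) - f(x^(k))].

    scores[k] is the classifier output for the original class after k MoRF
    perturbation steps, so scores[0] is the unperturbed image and
    len(scores) == L + 1.
    """
    if len(scores) == 0:
        raise ValueError("at least the unperturbed score is required")
    base = scores[0]
    drops = [base - s for s in scores]  # the k = 0 term is always 0
    return sum(drops) / len(scores)


def aopc_dataset(all_scores: Sequence[Sequence[float]]) -> float:
    """Average of the per-image AOPC terms over the dataset."""
    values = [aopc_single(s) for s in all_scores]
    return sum(values) / len(values)


per_image = [[0.9, 0.6, 0.3, 0.1],  # output drops quickly: large AOPC
             [0.8, 0.8, 0.7, 0.7]]  # output barely changes: small AOPC
print(aopc_single(per_image[0]))  # approximately 0.425
print(aopc_dataset(per_image))    # approximately 0.2375
```

Averaging the per-image terms gives the same value as the formula whenever every image uses the same $L$, since $1/(L+1)$ is then a common factor.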

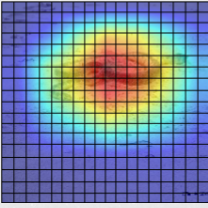

The algorithm starts from an image for which the AOPC needs to be computed (there is one AOPC value per image, which is then averaged across all the images in the dataset). The image is classified, and an explanation for this classification is generated with an explanation method. The explanation is then divided into a grid (according to the dimensions requested by the user), and each grid block is assigned an importance value based on the explanation values it contains: the more important the pixels within a block are for the classification, the more important the block itself is. An illustrative example is provided in the images below.
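The grid step can be sketched as follows, assuming the explanation is a 2-D NumPy array whose sides are multiples of the block size, and that a block's importance is the mean of its explanation values. The mean is an assumption; for equal-size blocks, ranking by the sum gives the same order. `block_importance` is a hypothetical name.

```python
import numpy as np


def block_importance(cam: np.ndarray, block_size: int) -> np.ndarray:
    """Average a 2-D explanation map over non-overlapping block_size x block_size tiles.

    Returns an array of shape (H // block_size, W // block_size) where entry
    (r, c) is the mean explanation value of the block in grid row r, column c.
    """
    h, w = cam.shape
    if h % block_size or w % block_size:
        raise ValueError("image sides must be multiples of block_size")
    rows, cols = h // block_size, w // block_size
    # Axes 0 and 2 index the block; axes 1 and 3 index the pixel inside it.
    tiles = cam.reshape(rows, block_size, cols, block_size)
    return tiles.mean(axis=(1, 3))


cam = np.arange(16, dtype=float).reshape(4, 4)
print(block_importance(cam, 2))  # block means: [[2.5, 4.5], [10.5, 12.5]]
```

With a 224x224 explanation and `block_size=8`, the result is a 28x28 grid of block importances.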

Original image.

Explanation of the original image.

Example of how the grid is created.

Starting from this point, the blocks are perturbed in descending order of importance, and the classifier output is recorded after each step to evaluate the formula above. Perturbation can be done in various ways (for example zeroing out pixels, adding noise, or using random values). Here we chose the mean: for each block to be perturbed, the average value of its pixels is computed and assigned to every pixel within the block. An illustrative example of how the perturbation evolves over successive iterations is provided in the images below.
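A sketch of the mean perturbation and the MoRF loop, assuming an `H x W` or `H x W x C` NumPy image, a per-channel block mean (an assumption), and a hypothetical `predict` callable that returns the classifier score of the original class. It is illustrative only, not the project's implementation; `perturb_block_with_mean` and `morf_scores` are hypothetical names.

```python
from typing import Callable, List

import numpy as np


def perturb_block_with_mean(img: np.ndarray, row: int, col: int, block_size: int) -> None:
    """Replace every pixel of block (row, col) with the block mean, in place.

    For an H x W x C image the mean is computed separately for each channel.
    """
    r0, c0 = row * block_size, col * block_size
    block = img[r0:r0 + block_size, c0:c0 + block_size]  # a view into img
    block[...] = block.mean(axis=(0, 1), keepdims=True)


def morf_scores(img: np.ndarray, block_scores: np.ndarray, block_size: int,
                predict: Callable[[np.ndarray], float], steps: int) -> List[float]:
    """Perturb the `steps` most important blocks one by one (MoRF order).

    Returns [f(x^(0)), f(x^(1)), ..., f(x^(steps))], where f is `predict`.
    """
    work = img.astype(np.float64)  # astype returns a copy; the input stays untouched
    cols = block_scores.shape[1]
    order = np.argsort(-block_scores.ravel(), kind="stable")  # most relevant first
    scores = [predict(work)]
    for idx in order[:steps]:
        r, c = divmod(int(idx), cols)
        perturb_block_with_mean(work, r, c, block_size)
        scores.append(predict(work))
    return scores


img = np.arange(16, dtype=float).reshape(4, 4)
block_scores = np.array([[0.0, 1.0],
                         [2.0, 3.0]])
# Toy stand-in for a classifier: the maximum pixel value.
print(morf_scores(img, block_scores, 2, lambda x: float(x.max()), steps=3))
# [15.0, 13.0, 12.5, 12.5]
```

The returned list holds the scores $f(x^{(0)}), \dots, f(x^{(L)})$ that the AOPC formula needs, with `steps` playing the role of $L$.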

As mentioned earlier, the faster the classification output decreases, the better the explanation method. Now observe the following example charts:

- In the left chart, the AOPC value is very high, indicating a favorable evaluation of the explanation method.

- In the right chart, the AOPC value is lower, indicating poorer performance.

The project consists of three Python scripts:

- `AOPC_MoRF.py` $\rightarrow$ the main file, which computes the AOPC across all the images of your dataset. It outputs a `.pkl` file with the results, saved in a dict with the following format: `{image_id: (original_class, AOPC, sum(AOPC)/(L+1))}`

- `generate_cams.py` $\rightarrow$ the file to run before the AOPC computation in order to generate all the CAMs (explanations) with the selected explanation method.

- `report_analysis.py` $\rightarrow$ an optional file that you can use to load the `.pkl` results file and plot the results (for example the AOPC chart).
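To inspect the results programmatically, a sketch like the following loads the `.pkl` file and averages the per-image AOPC values. It assumes the dict layout described above, with the third tuple element being the per-image AOPC; `load_results` and `mean_aopc` are hypothetical helpers, not functions of this project.

```python
import pickle
from statistics import mean
from typing import Any, Dict, Tuple

# Assumed layout: {image_id: (original_class, per_step_values, aopc_value)}
Results = Dict[Any, Tuple[Any, Any, float]]


def load_results(path: str) -> Results:
    """Load the results dict pickled by the AOPC script."""
    with open(path, "rb") as fh:
        return pickle.load(fh)


def mean_aopc(results: Results) -> float:
    """Mean of the per-image AOPC values (third element of each tuple)."""
    return mean(entry[2] for entry in results.values())


# Toy in-memory dict shaped like the documented format.
toy = {0: (1, [0.0, 0.3, 0.6], 0.3), 1: (2, [0.0, 0.1, 0.2], 0.1)}
print(mean_aopc(toy))  # approximately 0.2
```

On real output you would call `mean_aopc(load_results("results.pkl"))`.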

Below are described the parameters and other characteristics of each script.

Command line arguments of `AOPC_MoRF.py`:

- `imgs_dir`: path of the directory containing the images for AOPC.

- `cams_dir`: path of the directory containing the explanations for AOPC.

- `--block_size` (or `-size`): the block size for the AOPC evaluation, with a default value of `8`.

- `--block_row` (or `-row`): the number of blocks per row in the images, with a default value of `28`.

- `--percentile` (or `-pct`): the percentile up to which AOPC is computed, with a default value of `None`.

- `--results_file_name` (or `-file_name`): the name of the file in which the results are saved, with a default value of `results.pkl`.

- `--verbose` (or `-v`): enables verbose mode, with a default value of `False` (possible values `True` or `False`).

The `--block_size` and `--block_row` arguments must be consistent with each other: the block row value must equal the image side divided by the block size. For example, with 224x224 pixel images and 8x8 pixel blocks (`--block_size 8`), the block row value is 224 / 8 = 28, which matches the defaults.
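The documented arguments and the consistency rule can be mirrored with `argparse`. This is a hypothetical reconstruction for illustration only; the actual parser in `AOPC_MoRF.py` may differ, for instance in the type of `--percentile` (assumed `float` here) or in how `--verbose` parses `True`/`False`. `build_parser` and `check_grid` are hypothetical names.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the documented AOPC_MoRF.py arguments."""
    parser = argparse.ArgumentParser(description="AOPC (MoRF) evaluation")
    parser.add_argument("imgs_dir", help="directory containing the images")
    parser.add_argument("cams_dir", help="directory containing the explanations")
    parser.add_argument("--block_size", "-size", type=int, default=8)
    parser.add_argument("--block_row", "-row", type=int, default=28)
    parser.add_argument("--percentile", "-pct", type=float, default=None)
    parser.add_argument("--results_file_name", "-file_name", default="results.pkl")
    # type=bool would turn the string "False" into True, so compare the text instead.
    parser.add_argument("--verbose", "-v", type=lambda s: s == "True", default=False)
    return parser


def check_grid(image_side: int, block_size: int, block_row: int) -> None:
    """Raise if block_row blocks of block_size pixels do not tile the image side."""
    if image_side % block_size != 0 or image_side // block_size != block_row:
        raise ValueError(
            f"{image_side}-pixel side with {block_size}-pixel blocks "
            f"needs block_row = {image_side / block_size:g}, got {block_row}"
        )


args = build_parser().parse_args(["imgs/", "cams/", "-size", "8", "-row", "28", "-v", "True"])
check_grid(224, args.block_size, args.block_row)  # 224 / 8 = 28: passes
```

Checking the grid up front turns an inconsistent pair such as `--block_size 16 --block_row 28` into an immediate error instead of a silently wrong perturbation grid.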

For simplicity, `generate_cams.py` takes no command-line arguments; you only have to change a few things inside the code. For example, you can change the directory from which the images are taken (the images you want to classify and for which you want to produce explanations). You can also change the classification model and the explanation method used.

In the code, we use a ResNet50 model and Grad-CAM as the explanation method.

You only need this file if you do not already have the explanations. If you can produce them yourself, you do not need it (consider it just a utility file).

The analysis script also takes no command-line arguments; you only have to change the filename of the results `.pkl` file directly in the script.

This is also a utility file, which you can use as a baseline for further analysis of the computed results. As it is, the script outputs the mean AOPC across all the images in the dataset and plots the AOPC curve for each image, saving each plot in the `AOPC_plots` 📂 directory.

- The first step is to prepare the image dataset. For instance, place the images in a folder similar to the one already provided, named `imgs` 📂.

- Rename all the images following a specific pattern. For example, if you wish to use the provided regex, rename the images as `img_{X}.png`, where `X` is a number. We recommend starting from `0` and incrementing by `1`, like the three example images already provided.

- Next, generate the explanations using the method you want to test. The `generate_cams.py` file contains an example of how to do this with various methods taken from this library. To execute it, run the following command: `python3 generate_cams.py`

- As with the images, rename the generated CAMs accordingly and place them in a folder, such as the one already provided, named `cams` 📂. For example, you can name the CAMs `img_cam_{X}.png`, where `X` is a number.

- You now have everything you need to compute the AOPC across your image dataset. To do this, run the algorithm with the following command:

`python3 AOPC_MoRF.py imgs/ cams/ --block_size 8 --block_row 28 --results_file_name results.pkl -v True`

- The results will be saved in a file named `results.pkl`.

- To analyze the results, you can use the `results_analysis.py` script: `python3 results_analysis.py`. This script will generate charts similar to those found in the `AOPC_plots` 📂 folder and will output the mean AOPC across all the images.
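If you write your own loader, images and CAMs can be matched by the number `X` in their names. The sketch below assumes the `img_{X}.png` and `img_cam_{X}.png` naming described in the steps; the regular expressions and function names are hypothetical, not the regex shipped with the project.

```python
import re
from typing import Dict, Iterable, List, Tuple

IMG_PATTERN = re.compile(r"^img_(\d+)\.png$")      # e.g. img_0.png
CAM_PATTERN = re.compile(r"^img_cam_(\d+)\.png$")  # e.g. img_cam_0.png


def index_by_id(names: Iterable[str], pattern: re.Pattern) -> Dict[int, str]:
    """Map the numeric id X captured by `pattern` to the file name; skip non-matches."""
    found: Dict[int, str] = {}
    for name in names:
        match = pattern.match(name)
        if match:
            found[int(match.group(1))] = name
    return found


def pair_images_and_cams(img_names: Iterable[str],
                         cam_names: Iterable[str]) -> List[Tuple[int, str, str]]:
    """Return (id, image name, cam name) triples sorted by numeric id."""
    imgs = index_by_id(img_names, IMG_PATTERN)
    cams = index_by_id(cam_names, CAM_PATTERN)
    missing = sorted(set(imgs) - set(cams))
    if missing:
        raise FileNotFoundError(f"no CAM found for image ids {missing}")
    return [(i, imgs[i], cams[i]) for i in sorted(imgs)]


pairs = pair_images_and_cams(["img_0.png", "img_10.png", "img_2.png", "notes.txt"],
                             ["img_cam_2.png", "img_cam_0.png", "img_cam_10.png"])
print(pairs)
# [(0, 'img_0.png', 'img_cam_0.png'), (2, 'img_2.png', 'img_cam_2.png'), (10, 'img_10.png', 'img_cam_10.png')]
```

On the actual folders you would pass `os.listdir("imgs")` and `os.listdir("cams")`. Sorting the ids as integers keeps `img_10.png` after `img_2.png`, which a plain string sort would not.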

❗Now, you are ready to get started! ❗

Before running the project, prepare the system as follows:

- Install virtualenv if it is not already on your system: `pip install virtualenv`

- Create the virtual environment in the project directory and activate it: `virtualenv AOPC`, then `source AOPC/bin/activate`

- Install all the required dependencies: `pip install -r requirements.txt`

If you have any problem and need help, you can open an Issue.

The code is based on the following research papers:

- Samek, Wojciech et al. "Evaluating the visualization of what a deep neural network has learned." IEEE Transactions on Neural Networks and Learning Systems 28.11 (2016): 2660-2673.

- Tomsett, Richard et al. "Sanity checks for saliency metrics." Proceedings of the AAAI Conference on Artificial Intelligence 34.04 (2020): 6021-6029.