An Android 11+ application that detects and classifies the types of masks/respirators and the main ways people wear them, following the classes of the WWMR-DB dataset.

The detected ways of wearing masks/respirators are:

- mask/respirator correctly worn
- mask/respirator not worn
- mask/respirator hanging from an ear
- mask/respirator on the tip of the nose
- mask/respirator on the forehead
- mask/respirator under the nose
- mask/respirator over the chin
- mask/respirator under the chin

The detected types of masks/respirators are:

- non-medical mask
- surgical mask
- respirator with valve
- respirator without valve

The application offers two main features:

- live detection, available in the `MainActivity`
- advanced analysis, available in the `AnalyzeActivity`

The live detection feature uses the CameraX API to capture and analyze the frames acquired by the smartphone camera in real time.

The CameraX API also lets the user switch between the front and rear cameras, tap to focus, and pinch to zoom on the camera preview.

In this case, detection is performed by a custom-trained convolutional neural network based on the EfficientDet-Lite0 spec.

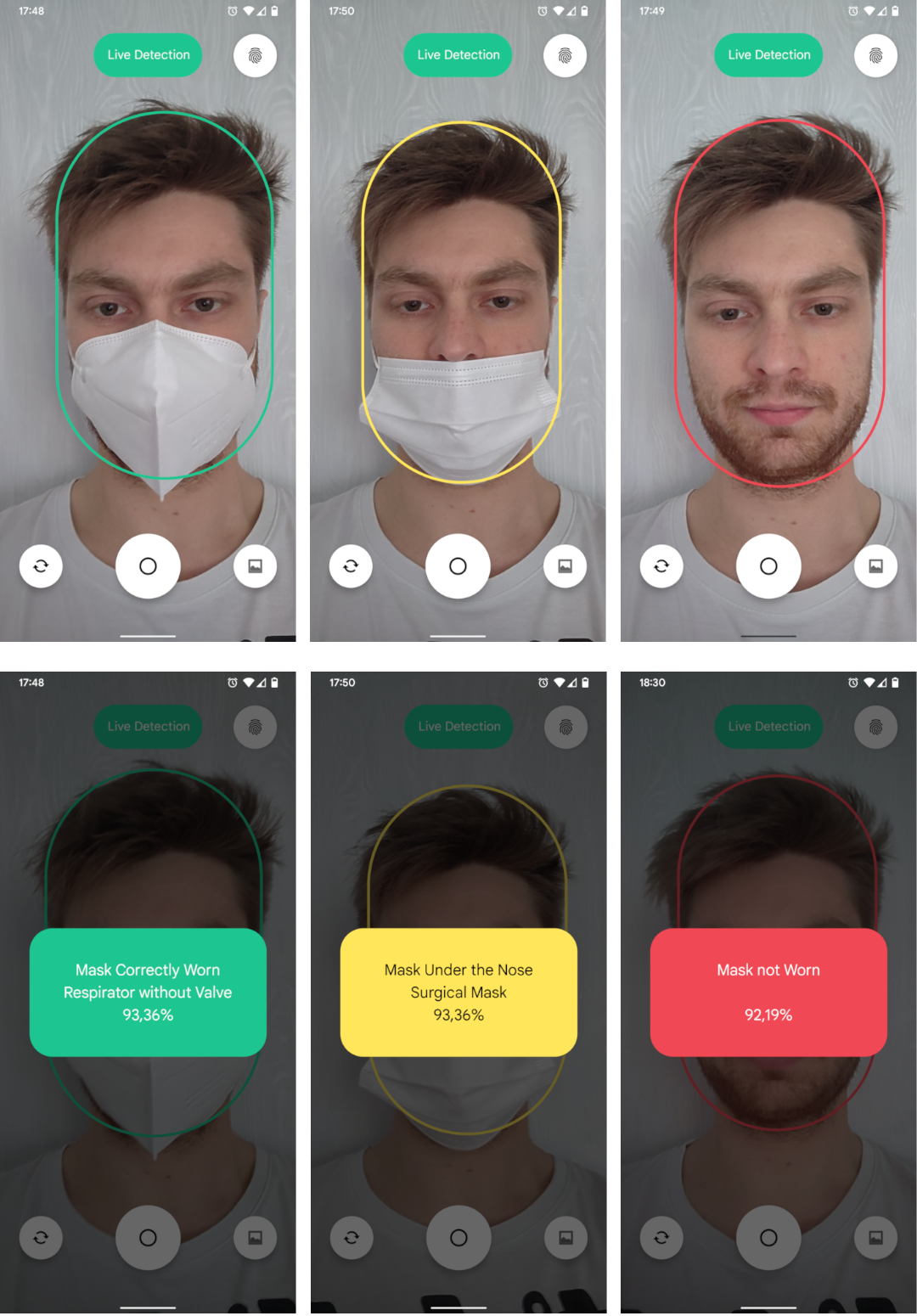

Once detected in the live view, each face is outlined with a rounded rectangle in one of 3 possible colors:

- green if the mask/respirator is correctly worn
- yellow if the mask/respirator is worn incorrectly
- red if the mask/respirator is not worn at all
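The color rule can be sketched as a small lookup. This is only an illustration, not the application's actual code: the class, enum, and method names are hypothetical, and the sketch assumes that every wearing way other than "correctly worn" and "not worn" counts as "worn incorrectly".

```java
/** Illustrative mapping from a detected wearing way to an overlay color. */
final class MaskOverlay {

    /** The eight wearing ways listed in this README (constant names are hypothetical). */
    enum WearingWay {
        CORRECTLY_WORN, NOT_WORN, HANGING_FROM_EAR, ON_NOSE_TIP,
        ON_FOREHEAD, UNDER_NOSE, OVER_CHIN, UNDER_CHIN
    }

    /** The three overlay colors described in this README. */
    enum OverlayColor { GREEN, YELLOW, RED }

    /** Assumption: all remaining wearing ways are treated as "worn incorrectly". */
    static OverlayColor colorFor(WearingWay way) {
        switch (way) {
            case CORRECTLY_WORN:
                return OverlayColor.GREEN;
            case NOT_WORN:
                return OverlayColor.RED;
            default:
                return OverlayColor.YELLOW;
        }
    }
}
```

For example, `MaskOverlay.colorFor(MaskOverlay.WearingWay.UNDER_NOSE)` yields `YELLOW` under this assumption.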

For instance:

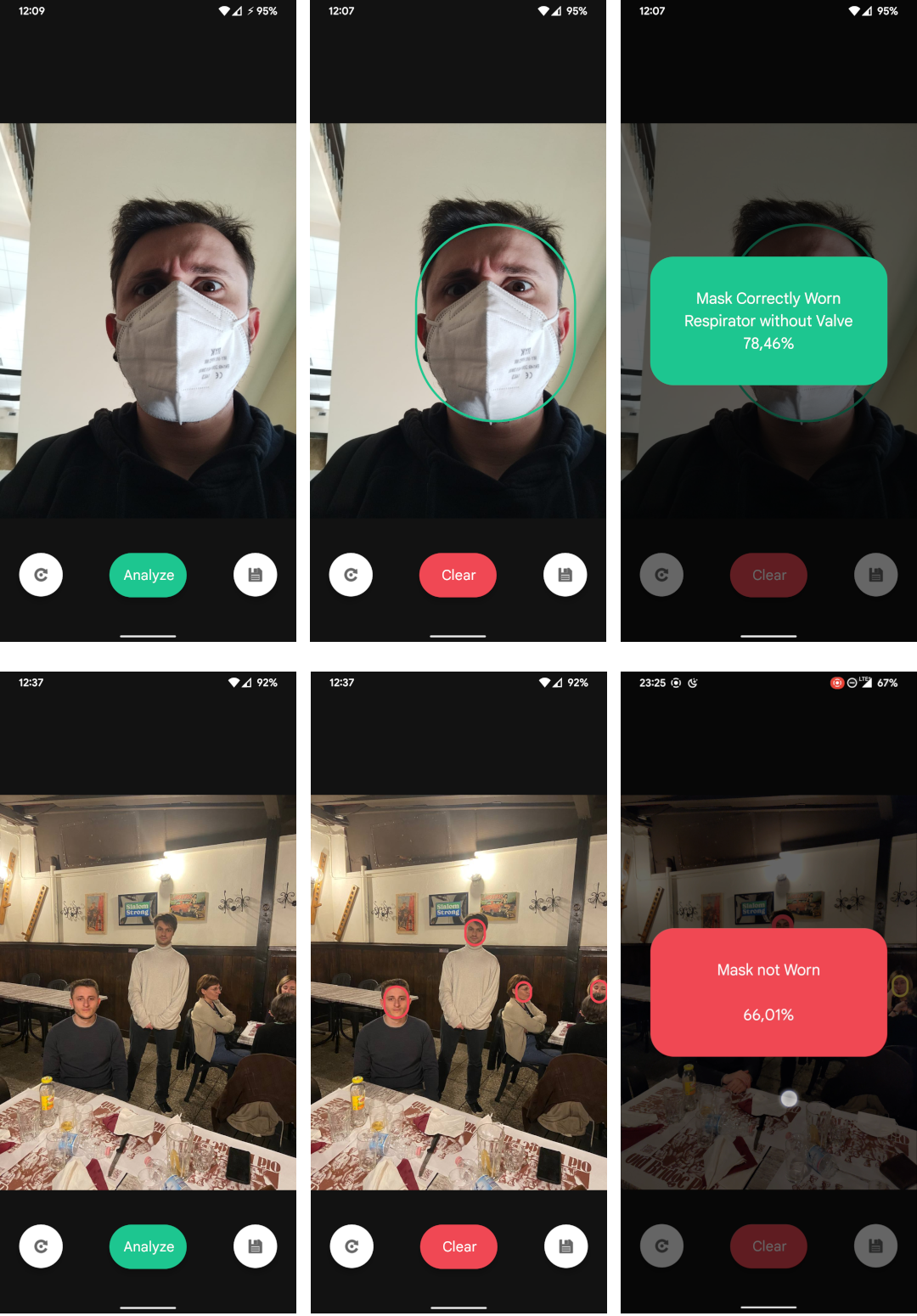

The advanced analysis feature relies on another custom-trained convolutional neural network. Instead of the fast and lightweight spec used by the live detection feature, it uses the EfficientDet-Lite4 spec as its underlying basis.

This choice allows for more accurate detections at the cost of a lower frame rate (30 fps for the live detection vs 4 fps for the advanced analysis).
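To put the two frame rates in perspective, they can be converted into a per-frame time budget. The snippet below is plain arithmetic on the figures quoted above; the class and method names are hypothetical and it does not measure anything on a device.

```java
/** Converts a frame rate into the time available to process one frame. */
final class FrameBudget {

    /** Returns the per-frame budget in milliseconds for a given frame rate. */
    static double millisPerFrame(double fps) {
        if (fps <= 0) {
            throw new IllegalArgumentException("fps must be positive");
        }
        return 1000.0 / fps;
    }

    public static void main(String[] args) {
        double live = millisPerFrame(30);     // live detection figure from this README
        double advanced = millisPerFrame(4);  // advanced analysis figure from this README
        System.out.printf("live detection: %.1f ms per frame%n", live);
        System.out.printf("advanced analysis: %.1f ms per frame%n", advanced);
        System.out.printf("ratio: %.1fx%n", advanced / live);
    }
}
```

At 30 fps each frame has roughly 33 ms of processing time, while at 4 fps each frame takes 250 ms, which is why the heavier model is reserved for the advanced analysis.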

An example of the advanced analysis:

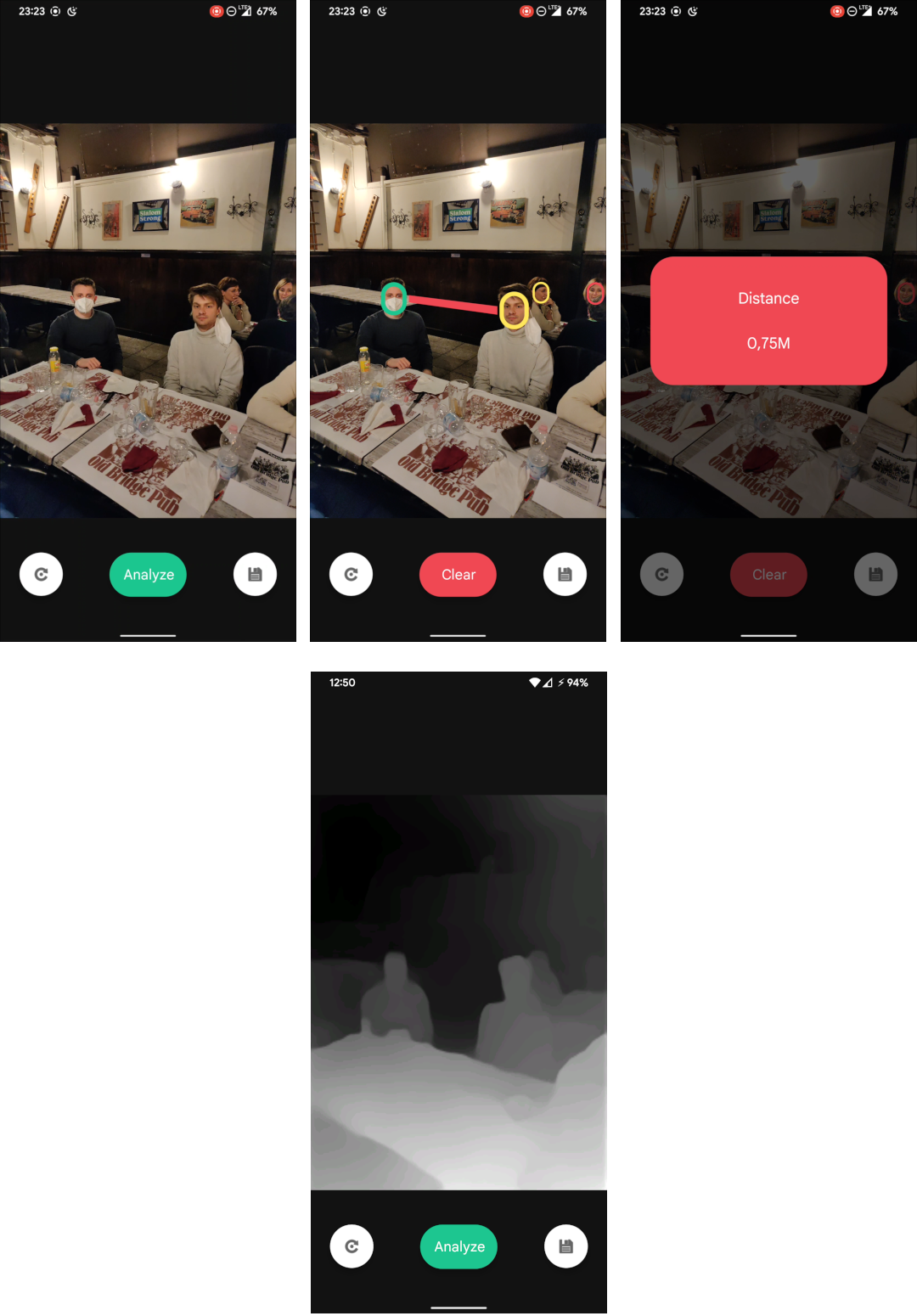

Building on the work done for the main application, this extension allows the application to determine whether two people are respecting the social distance of 1 m required by the COVID-19 restrictions.

The underlying method is based on 3 main steps:

- understanding which person is the furthest and which one is the closest by means of a depth map obtained with the MiDaS neural network
- using the proportions of the human face to estimate the distance of each person from the observer
- correcting the distortion of the measurements due to the distances between the people and the observer (smartphone)
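The face-proportion step can be illustrated with a simple pinhole-camera model. This is a sketch under stated assumptions, not the method implemented in this work: the average face width, the pinhole model, and all class and method names are hypothetical, and the MiDaS depth ordering and the distortion correction are not reproduced here.

```java
/** Pinhole-camera sketch of estimating the distance between two detected faces. */
final class SocialDistanceSketch {

    /** Assumed average real face width in meters (illustrative value only). */
    static final double ASSUMED_FACE_WIDTH_M = 0.15;

    /** Pinhole model: depth = focal length (px) * real width (m) / width in the image (px). */
    static double distanceFromCamera(double focalLengthPx, double faceWidthPx) {
        return focalLengthPx * ASSUMED_FACE_WIDTH_M / faceWidthPx;
    }

    /** Back-projects the pixel column of a face center to a lateral offset in meters. */
    static double lateralOffset(double u, double principalPointX,
                                double focalLengthPx, double depthM) {
        return (u - principalPointX) * depthM / focalLengthPx;
    }

    /** Distance in the horizontal plane between two faces, given center column and width of each. */
    static double distanceBetween(double focalLengthPx, double principalPointX,
                                  double u1, double faceWidthPx1,
                                  double u2, double faceWidthPx2) {
        double z1 = distanceFromCamera(focalLengthPx, faceWidthPx1);
        double z2 = distanceFromCamera(focalLengthPx, faceWidthPx2);
        double x1 = lateralOffset(u1, principalPointX, focalLengthPx, z1);
        double x2 = lateralOffset(u2, principalPointX, focalLengthPx, z2);
        return Math.hypot(x1 - x2, z1 - z2);
    }

    public static void main(String[] args) {
        // Hypothetical inputs: 1000 px focal length, image center at column 500.
        double d = distanceBetween(1000, 500, 0, 150, 1000, 150);
        System.out.println(d >= 1.0 ? "distance respected" : "too close");
    }
}
```

Because the estimated depth scales directly with the focal length, a sketch like this gives wrong distances whenever the assumed focal length does not match the actual camera.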

These are some of the obtained results:

This way of calculating the distance between two people is ineffective whenever the focal length of the smartphone camera differs from the ones used during testing.

This method also does not take into account that men and women have slightly different face proportions, so the measurement error for the distance between two women may differ from the one for the distance between two men. The same reasoning applies to young people.

In addition, the MiDaS neural network is not perfect and may yield inconsistent depth maps, which can interfere with the distance calculations.

The PDF paper of this work is available at: ./githubResources/Relazione Sistemi Digitali M.pdf

The PowerPoint presentation of this work is available at: ./githubResources/Presentazione Sistemi Digitali M.pptx

The PDF presentation of this work is available at: ./githubResources/Presentazione Sistemi Digitali M.pdf

The dataset used for training the neural networks of this work is available at: IEEE DataPort

The Google Colab training file of this work is available at: ./githubResources/TrainingAI-SisDig.ipynb

Click here to download the apk file of this application.

Cristian Davide Conte

Simone Morelli