Code of the paper:

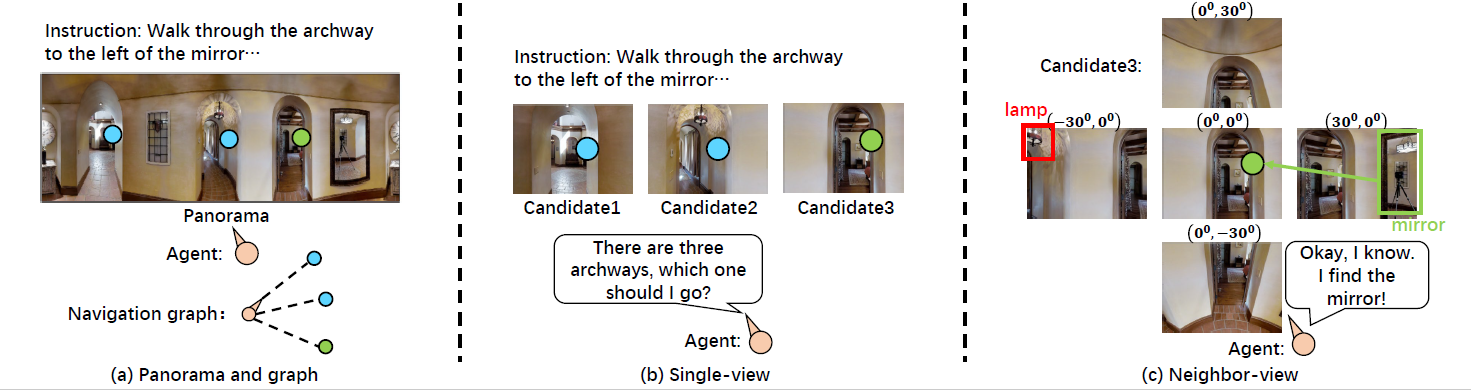

Neighbor-view Enhanced Model for Vision and Language Navigation (ACM MM2021 oral)

Dong An, Yuankai Qi, Yan Huang, Qi Wu, Liang Wang, Tieniu Tan

Install the Matterport3D Simulator. Please note that the code is based on Simulator-v2.

Please find the versions of packages in our environment in requirements.txt. In particular, we use:

- Python 3.6.9

- NumPy 1.19.1

- OpenCV 4.1.0.25

- PyTorch 0.4.0

- Torchvision 0.1.8
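To compare an existing environment against the versions above, a small check like the following may help. It is only a sketch: the helper name `check_versions` is ours and not part of this repository, and it assumes each package exposes `__version__` under the import names `numpy`, `cv2`, `torch`, and `torchvision`.

```python
import importlib

# Versions listed above. Keys are import names (assumed), not pip package names.
# Matching is by prefix, since the pip build suffix of OpenCV (".25") may not
# appear in cv2.__version__.
EXPECTED = {
    "numpy": "1.19.1",
    "cv2": "4.1.0",
    "torch": "0.4.0",
    "torchvision": "0.1.8",
}


def check_versions(expected=EXPECTED):
    """Return {module: (expected, found)} for modules that differ or are missing."""
    mismatches = {}
    for name, want in expected.items():
        try:
            module = importlib.import_module(name)
            found = getattr(module, "__version__", None)
        except ImportError:
            found = None  # module is not installed
        if found is None or not str(found).startswith(want):
            mismatches[name] = (want, found)
    return mismatches
```

Calling `check_versions()` returns an empty dict when every listed package matches; otherwise each entry shows the expected and the found version.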

Please follow the instructions below to prepare the data in directories:

- connectivity
    - Download the connectivity maps [23.8MB].
- data
    - Download the R2R data [5.8MB].
    - Download the vocabulary and the augmented data from EnvDrop [79.5MB].
- img_features
    - Download the Scene features [4.2GB] (ResNet-152-Places365).
    - Download the pre-processed Object features and vocabulary [1.3GB] (Caffe Faster-RCNN).
- GT for CLS score
    - Download the id_paths.json [1.4MB] and put it in tasks/R2R/data/.
- snap
    - Download the trained network weights [116.0MB].
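Before running anything, it can be useful to confirm that the downloads landed where expected. The sketch below assumes the directories named above sit directly under the repository root; the helper `missing_paths` is hypothetical and not part of this repository, and it checks only that the locations exist, not the files inside them.

```python
import os

# Locations named in the list above, taken relative to the repository root
# (an assumption; adjust if your checkout is organised differently).
EXPECTED_PATHS = [
    "connectivity",
    "data",
    "img_features",
    "snap",
    os.path.join("tasks", "R2R", "data", "id_paths.json"),
]


def missing_paths(root, expected=EXPECTED_PATHS):
    """Return the expected paths that do not exist under `root`."""
    return [p for p in expected if not os.path.exists(os.path.join(root, p))]
```

For example, `missing_paths(".")` run from the repository root returns an empty list once every location is present.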

Please read Peter Anderson's VLN paper for the R2R Navigation task.

Our code is based on the code structure of EnvDrop and Recurrent VLN-Bert.

To replicate the performance reported in our paper, load the trained network weights and run validation:

bash run/valid.bash 0

Here is the full log:

Loaded the listener model at iter 119600 from snap/NvEM_bt/state_dict/best_val_unseen

Env name: val_seen, nav_error: 3.4389, oracle_error: 2.1848, steps: 5.5749, lengths: 11.2468, success_rate: 0.6866, oracle_rate: 0.7640, spl: 0.6456

Env name: val_unseen, nav_error: 4.2603, oracle_error: 2.8130, steps: 6.3585, lengths: 12.4147, success_rate: 0.6011, oracle_rate: 0.6790, spl: 0.5497
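If you want to compare your own validation run against these numbers programmatically, the per-split lines can be parsed into a dictionary. This is a minimal sketch that assumes the log keeps exactly the `key: value, key: value` format shown above; the function name `parse_eval_line` is ours.

```python
def parse_eval_line(line):
    """Parse one 'Env name: ..., key: value, ...' log line into (env_name, metrics)."""
    fields = {}
    for part in line.strip().split(", "):
        key, _, value = part.partition(": ")
        fields[key] = value
    env_name = fields.pop("Env name")
    # Every remaining field in the log line is numeric.
    metrics = {key: float(value) for key, value in fields.items()}
    return env_name, metrics


line = ("Env name: val_unseen, nav_error: 4.2603, oracle_error: 2.8130, "
        "steps: 6.3585, lengths: 12.4147, success_rate: 0.6011, "
        "oracle_rate: 0.6790, spl: 0.5497")
env, metrics = parse_eval_line(line)
print(env, metrics["success_rate"], metrics["spl"])
# val_unseen 0.6011 0.5497
```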

To train the network from scratch, first train a Navigator on the R2R training split:

bash run/follower.bash 0

The trained Navigator will be saved under snap/.

You also need to train a Speaker for augmented training:

bash run/speaker.bash 0

The trained Speaker will be saved under snap/.

Finally, keep training the Navigator with the mixture of original data and augmented data:

bash run/follower_bt.bash 0

If you use or discuss our Neighbor-view Enhanced Model, please cite our paper:

@misc{an2021neighborview,
      title={Neighbor-view Enhanced Model for Vision and Language Navigation},
      author={Dong An and Yuankai Qi and Yan Huang and Qi Wu and Liang Wang and Tieniu Tan},
      year={2021},
      eprint={2107.07201},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}