To finish the MixPath code

Done:

- NSGA-II search (using pymoo)

- Plot the result

TODO:

- Lookup table for SNPE/OpenVINO

Train

python S1/train_search.py \
--exp_name experiment_name \
--m 4 \
--data_dir ~/.torch/datasets \
--seed 2020

Search

python S1/eval_search.py \

--exp_name search_cifar\

--m 4\

--data_dir ~/.torch/datasets \

--model_path ./super_train/experiment_name/super_train_states.pt.tar\

--batch_size 500\

--n_generations 40\

--pop_size 40\

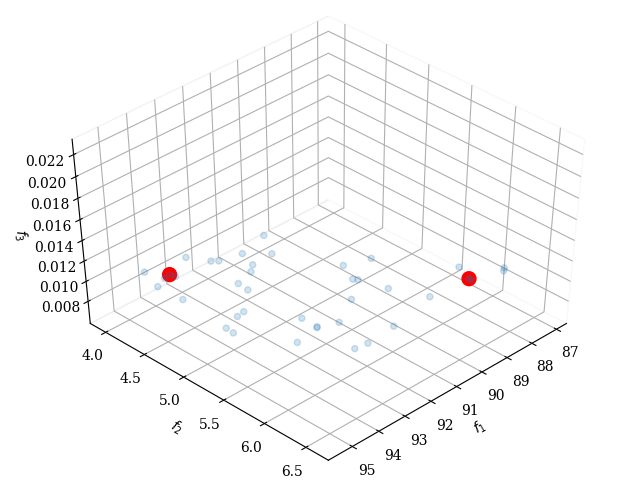

--n_offsprings 10result of search, f1: Accuracy, f2: parameter amount, f3: GPU latency

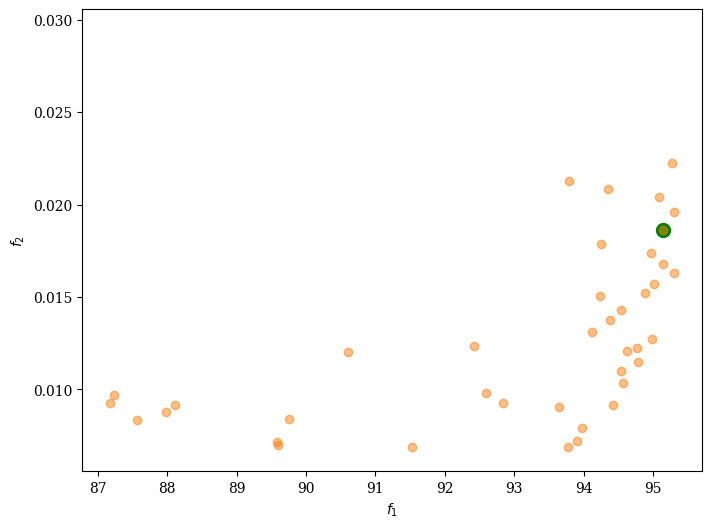

Result of search. f1: accuracy, f2: GPU latency.
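The search results above are trade-offs between objectives, so the points of interest are the non-dominated ones. As a rough illustration of that idea (not the code in this repo, which relies on pymoo), the sketch below filters a list of candidates down to its Pareto front. It assumes every objective is minimized, so accuracy is expressed as an error rate; the function names and the candidate values are hypothetical and made up for the example.

```python
def dominates(a, b):
    """Return True if `a` is no worse than `b` in every objective
    and strictly better in at least one (all objectives minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [
        p for p in points
        if not any(dominates(q, p) for q in points if q is not p)
    ]


# Hypothetical candidates: (error rate = 1 - accuracy, GPU latency in ms).
candidates = [
    (0.050, 12.0),
    (0.047, 15.0),
    (0.060, 9.0),
    (0.055, 14.0),  # dominated by (0.050, 12.0)
    (0.047, 18.0),  # dominated by (0.047, 15.0)
]

front = pareto_front(candidates)
print(front)
```

With three objectives (accuracy, parameter count, latency) the same functions apply unchanged, since `dominates` compares tuples of any length.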

Baseline accuracies are taken from https://github.com/kuangliu/pytorch-cifar; the last row is the MixPath_S1 result obtained with this repo.

| Model | Accuracy |
|---|---|
| VGG16 | 92.64% |
| ResNet18 | 93.02% |
| ResNet50 | 93.62% |
| ResNet101 | 93.75% |
| MobileNetV2 | 94.43% |
| ResNeXt29(32x4d) | 94.73% |
| ResNeXt29(2x64d) | 94.82% |
| DenseNet121 | 95.04% |
| PreActResNet18 | 95.11% |
| DPN92 | 95.16% |
| MixPath_S1 (mine) | 95.29% |

This repo provides the supernet of S1 and our confirmatory experiments on NAS-Bench-101.

Dear DL folks, we are opening several positions for both professionals and interns keen on AutoML/NAS. Please send your résumé/CV to zhangbo11@xiaomi.com. Both full-time and internship applications should be sent to this address.

Requirements: Python >= 3.6, PyTorch >= 1.0.0, torchvision >= 0.2.0

CIFAR-10 can be automatically downloaded by torchvision. It has 50,000 images for training and 10,000 images for validation.
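For reference, a minimal sketch of the automatic download using torchvision's `CIFAR10` dataset class. The helper name `load_cifar10`, the default directory and the bare `ToTensor` transform are assumptions made for illustration; the training scripts in this repo may set up their data pipeline differently.

```python
import os

# Split sizes stated above; "valid" is the train=False split in torchvision.
EXPECTED_SIZES = {"train": 50000, "valid": 10000}


def load_cifar10(data_dir="~/.torch/datasets"):
    """Download CIFAR-10 into `data_dir` if it is missing and return both splits.

    Hypothetical helper, not part of this repo.
    """
    # Imported lazily so this module loads even without torchvision installed.
    from torchvision import datasets, transforms

    root = os.path.expanduser(data_dir)
    to_tensor = transforms.ToTensor()
    train = datasets.CIFAR10(root=root, train=True, download=True, transform=to_tensor)
    valid = datasets.CIFAR10(root=root, train=False, download=True, transform=to_tensor)
    return train, valid


if __name__ == "__main__":
    # Needs network access the first time it runs.
    train_set, valid_set = load_cifar10()
    print(len(train_set), len(valid_set))
```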

python S1/train_search.py \
--exp_name experiment_name \
--m number_of_paths[1,2,3,4] \
--data_dir /path/to/dataset \
--seed 2020

python NasBench101/nas_train_search.py \
--exp_name experiment_name \
--m number_of_paths[1,2,3,4] \
--data_dir /path/to/dataset \
--seed 2020

@article{chu2020mixpath,
  title={MixPath: A Unified Approach for One-shot Neural Architecture Search},
  author={Chu, Xiangxiang and Li, Xudong and Lu, Yi and Zhang, Bo and Li, Jixiang},
  journal={arXiv preprint arXiv:2001.05887},
  url={https://arxiv.org/abs/2001.05887},
  year={2020}
}