This is the official repository for the paper "HyperLLaVA: Dynamic Visual and Language Expert Tuning for Multimodal Large Language Models".

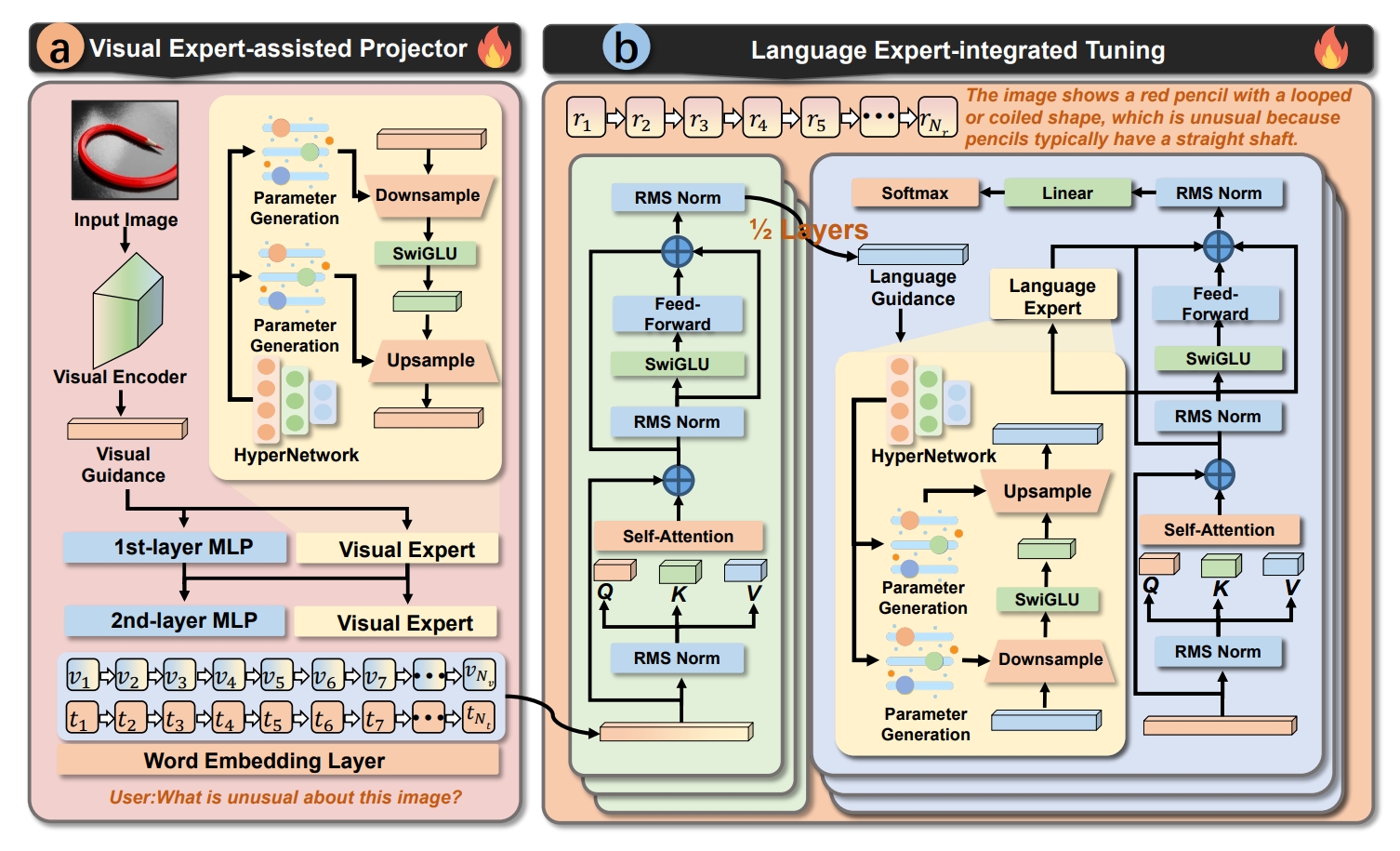

HyperLLaVA is a Multimodal Large Language Model (MLLM) designed to improve performance on downstream multimodal tasks. It consists of two components: a Visual Expert-Assisted Projector and a Language Expert-integrated Tuning module. The architecture of HyperLLaVA is shown in the figure above.

Code will be available soon.

If you find our work useful in your research, please consider giving this repository a star and citing our paper as follows:

    @misc{zhang2024hyperllava,
          title={HyperLLaVA: Dynamic Visual and Language Expert Tuning for Multimodal Large Language Models},
          author={Wenqiao Zhang and Tianwei Lin and Jiang Liu and Fangxun Shu and Haoyuan Li and Lei Zhang and He Wanggui and Hao Zhou and Zheqi Lv and Hao Jiang and Juncheng Li and Siliang Tang and Yueting Zhuang},
          year={2024},
          eprint={2403.13447},
          archivePrefix={arXiv},
          primaryClass={cs.AI}
    }