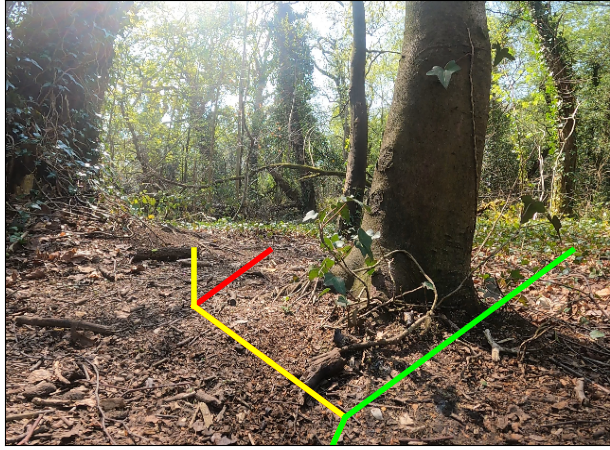

This work provides a method for real-time visual navigation of ground robots in unstructured environments. A convolutional network proposes a set of trajectories and their associated costs, enabling efficient, collision-free movement using input from a single webcam. The models in this project are trained specifically for forest environments. For more details, see the paper.

Figure: Top three trajectories (green, yellow, red) predicted from a forest scene.
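The paper defines how trajectories and costs are actually produced and used. Purely as an illustration, the sketch below assumes the network outputs one scalar cost per candidate trajectory and that a lower cost is better, and selects the three cheapest candidates, as in the figure. The function `top_k_trajectories` and the cost values are hypothetical.

```python
from typing import List, Sequence, Tuple


def top_k_trajectories(costs: Sequence[float], k: int = 3) -> List[Tuple[int, float]]:
    """Return (index, cost) pairs for the k lowest-cost candidate trajectories.

    Assumption: a lower predicted cost means a more desirable trajectory.
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    # Pair each cost with its trajectory index, then sort by cost ascending.
    ranked = sorted(enumerate(costs), key=lambda pair: pair[1])
    return ranked[:k]


# Hypothetical per-trajectory costs for a single frame.
predicted_costs = [0.42, 0.10, 0.77, 0.25, 0.31]
print(top_k_trajectories(predicted_costs))  # [(1, 0.1), (3, 0.25), (4, 0.31)]
```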

Setup: Clone the repository and follow the instructions in the header of each file listed below.

1. Generate an NDVI prediction model with `ndvi-model/model.py`.

2. Label datasets using `regional-cost-model/label.py`.

3. Train the model with `regional-cost-model/model.py`.

4. Convert the model to TFLite with `tflite-model/tflite.py`.

5. Run the TFLite model online with `tflite-model/tflite_standalone.py`.
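NDVI (normalized difference vegetation index) is conventionally computed per pixel from near-infrared and red reflectance as (NIR - Red) / (NIR + Red). How NDVI is actually used in step 1 is defined by the paper and `ndvi-model/model.py`; the snippet below only illustrates the standard formula in plain Python. The function name `ndvi` and the sample band values are hypothetical.

```python
from typing import List, Sequence

Band = Sequence[Sequence[float]]


def ndvi(nir: Band, red: Band) -> List[List[float]]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    For non-negative inputs the result lies in [-1, 1]. Pixels where
    NIR + Red == 0 are set to 0.0 to avoid division by zero.
    """
    if len(nir) != len(red) or any(len(a) != len(b) for a, b in zip(nir, red)):
        raise ValueError("NIR and red bands must have the same shape")
    return [
        [0.0 if n + r == 0 else (n - r) / (n + r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]


# Tiny hypothetical 2x2 bands (e.g. reflectance values or raw sensor counts).
nir_band = [[200, 100], [0, 30]]
red_band = [[50, 100], [0, 10]]
print(ndvi(nir_band, red_band))  # [[0.6, 0.0], [0.0, 0.5]]
```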

Notes: Before step 5, it may be necessary to resolve the order of the model's output heads; see Appendix D of the paper and `tflite-model/reorder.py`. For further information on any step, see the paper and the comments in the code.

Dependencies:

- TensorFlow 2.x

- OpenCV 4.x

- scikit-learn 1.x

- TFLite 2.x

- SciPy 1.x

- DroneKit 2.x

- pymavlink 2.x

Other miscellaneous libraries (e.g. matplotlib) may be needed to run some of the scripts but are not core dependencies.
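To confirm the major versions listed above are installed, a small check using the standard library's `importlib.metadata` could look like the following. The PyPI distribution names are assumptions: OpenCV may be installed under another name (e.g. `opencv-python-headless`), and TFLite may be provided by TensorFlow itself (`tf.lite`) rather than by `tflite-runtime`. The names `EXPECTED_MAJOR` and `check_dependencies` are hypothetical.

```python
import re
from importlib import metadata
from typing import Dict

# Assumed PyPI distribution names mapped to the expected major version.
EXPECTED_MAJOR: Dict[str, int] = {
    "tensorflow": 2,
    "opencv-python": 4,
    "scikit-learn": 1,
    "tflite-runtime": 2,
    "scipy": 1,
    "dronekit": 2,
    "pymavlink": 2,
}


def check_dependencies(expected: Dict[str, int] = EXPECTED_MAJOR) -> Dict[str, str]:
    """Map each distribution to 'ok', 'missing', or a version-mismatch note."""
    report: Dict[str, str] = {}
    for dist, major in expected.items():
        try:
            version = metadata.version(dist)
        except metadata.PackageNotFoundError:
            report[dist] = "missing"
            continue
        # Take the leading integer of the version string as the major version.
        match = re.match(r"\d+", version)
        found = int(match.group()) if match else None
        report[dist] = "ok" if found == major else f"found {version}, expected {major}.x"
    return report


if __name__ == "__main__":
    for name, status in check_dependencies().items():
        print(f"{name}: {status}")
```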

- Hardware: Raspberry Pi 4B (TBC GiB)

- Traversal Cost Labelling Parameters: (γ, α, β) = (0, 1, 0.1)

- Model Parameters:
  - Optimizer: Adam
  - Batch Size: 32
  - Loss: MAE
  - Epochs: 100
  - Train-Test Split: 80-20
  - Number of Instances: 9700
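The parameter values above fix a few derived quantities. As a quick worked check, assuming the 80-20 split is applied to all 9,700 instances and that a partial final batch is kept rather than dropped, the snippet below computes the split sizes and the number of optimizer steps, and spells out the MAE loss. The helper names `split_sizes` and `mae` are illustrative and are not taken from `regional-cost-model/model.py`.

```python
import math
from typing import Sequence, Tuple

# Values taken from the parameter list above.
NUM_INSTANCES = 9700
TRAIN_FRACTION = 0.8
BATCH_SIZE = 32
EPOCHS = 100


def split_sizes(n: int, train_fraction: float) -> Tuple[int, int]:
    """Return (train, test) instance counts for a given train fraction."""
    n_train = round(n * train_fraction)
    return n_train, n - n_train


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: the mean of |y_true - y_pred| over all elements."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


n_train, n_test = split_sizes(NUM_INSTANCES, TRAIN_FRACTION)
steps_per_epoch = math.ceil(n_train / BATCH_SIZE)  # partial last batch kept
total_steps = steps_per_epoch * EPOCHS

print(n_train, n_test)               # 7760 1940
print(steps_per_epoch, total_steps)  # 243 24300
```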

This project is the original work of the author and is distributed under the GNU General Public License v3.0. For more details, see the LICENSE file.