This project is based on Python 3.6 and PyTorch 1.7.0. See requirements.txt for all prerequisites; you can install them with the following command:

pip install -r requirements.txt| Size | Dimensionality | Clusters | Type | Link | |

|---|---|---|---|---|---|

| Animals | 10000 | 512 | 10 | image | Kaggle |

| Anuran calls | 7195 | 22 | 8 | tabular | UCI |

| Banknote | 1097 | 4 | 2 | text | UCI |

| Cifar10 | 10000 | 512 | 10 | image | Alex Krizhevsky |

| Cnae9 | 864 | 856 | 9 | text | UCI |

| Cats-vs-Dogs | 10000 | 512 | 2 | image | Kaggle |

| Fish | 9000 | 512 | 9 | image | Kaggle |

| Food | 3585 | 512 | 11 | image | Kaggle |

| Har | 8240 | 561 | 6 | tabular | UCI |

| Isolet | 1920 | 617 | 8 | text | UCI |

| ML binary | 1000 | 10 | 2 | tabular | Kaggle |

| MNIST | 10000 | 784 | 10 | image | Yann LeCun |

| Pendigits | 8794 | 16 | 10 | tabular | UCI |

| Retina | 10000 | 50 | 12 | tabular | Paper |

| Satimage | 5148 | 36 | 6 | image | UCI |

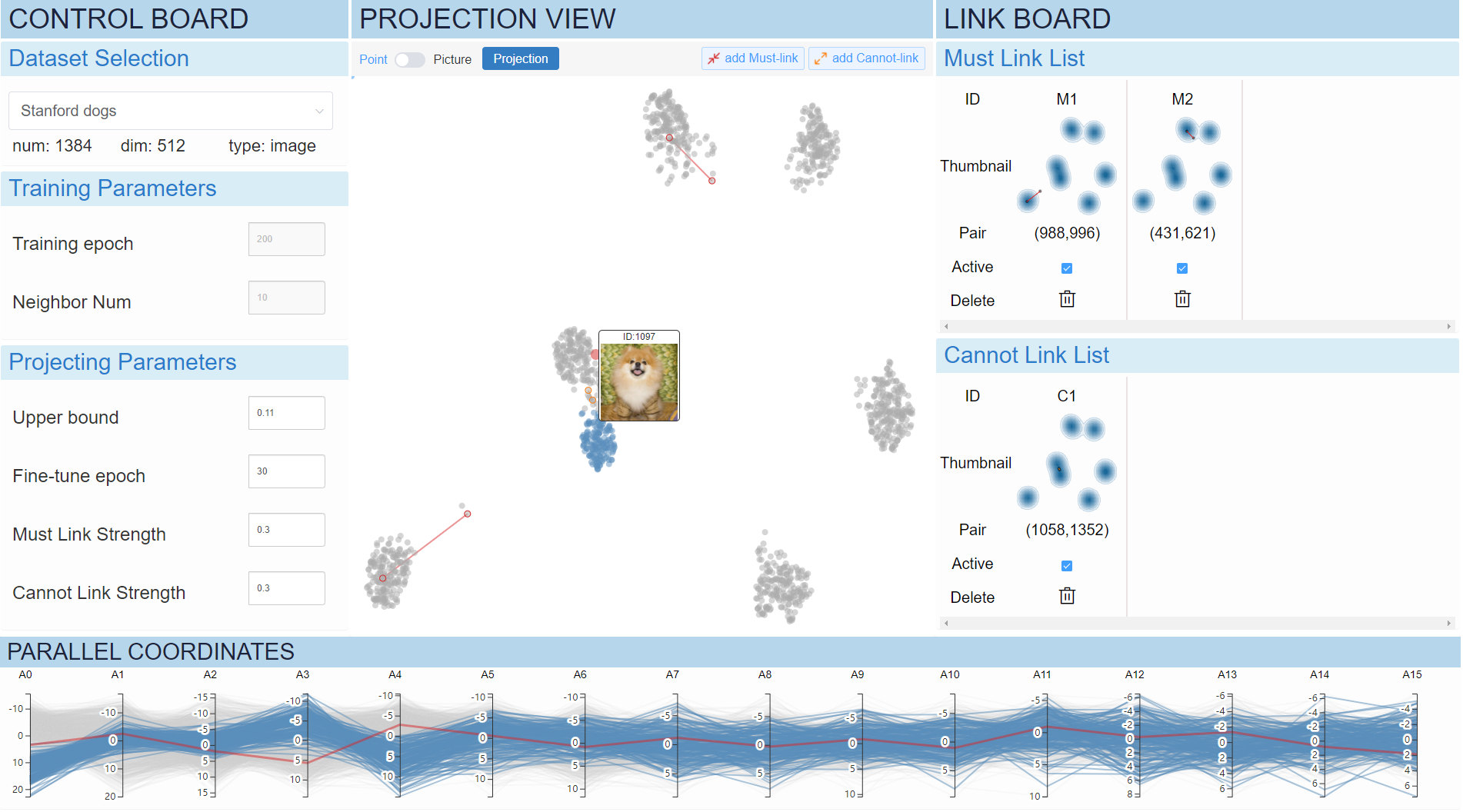

| Stanford Dogs | 1384 | 512 | 7 | image | Stanford University |

| Texture | 4400 | 40 | 11 | text | KEEL |

| USPS | 7440 | 256 | 10 | image | Kaggle |

| Weathers | 900 | 512 | 4 | image | Kaggle |

| WiFi | 1600 | 7 | 4 | tabular | UCI |

For the image datasets Animals, Cifar10, Cats-vs-Dogs, Fish, Food, Stanford Dogs and Weathers, we use SimCLR to extract 512-dimensional representations.

All datasets are provided in H5 format (e.g. usps.h5) and must be stored in `data/H5 Data`. For image datasets, name the images `0.jpg`, `1.jpg`, ..., `n-1.jpg` and place them in `static/images/(dataset name)` (e.g. `static/images/usps`).
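As a rough sketch of the image layout described above, the helper below copies a list of images into `static/images/<dataset name>/` under the names `0.jpg`, `1.jpg`, and so on. The function name `export_images` is hypothetical. It assumes the source files are already JPEGs and that the list is in the same order as the rows of the matching H5 file; neither assumption comes from this README.

```python
import shutil
from pathlib import Path


def export_images(src_paths, dataset_name, root="static/images"):
    """Copy images into <root>/<dataset_name>/ as 0.jpg, 1.jpg, ..., n-1.jpg.

    Hypothetical helper. Assumes every source file is already a JPEG and that
    src_paths follows the row order of the corresponding H5 file.
    """
    dest = Path(root) / dataset_name
    dest.mkdir(parents=True, exist_ok=True)
    for i, src in enumerate(src_paths):
        # Index i becomes the file name, matching the 0.jpg ... n-1.jpg convention
        shutil.copyfile(src, dest / f"{i}.jpg")
    return dest
```

For example, `export_images(sorted(Path("raw/usps").glob("*.jpg")), "usps")` would fill `static/images/usps`, where `raw/usps` is a placeholder directory. Sorting is only one way to fix the order; whatever order you choose must agree with the H5 data.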

Pre-trained model weights for all of the above datasets are available on Google Drive.

To train the model on USPS with a single GPU, check the configuration file configs/CDR.yaml, and try the following command:

```
python train.py --configs configs/CDR.yaml
```

The configuration files are in the `./configs` folder, which contains two YAML config files. Guidance on several key parameters is given below.

- n_neighbors(K): It determines the granularity of the local structure preserved in the low-dimensional space. A value that is too small may split a single high-dimensional cluster into two low-dimensional clusters, while a value that is too large aggravates cluster overlap. The default setting is K = 15.

- batch_size(B): It determines the number of negative samples. A larger value is generally better, but the appropriate value also depends on the data size. We recommend B = n/10, where n is the number of instances.

- temperature(t): It controls how strictly the model preserves neighborhoods. The smaller the value, the more strictly neighborhoods are preserved, but more erroneous neighbors are also kept. The default setting is t = 0.15.

- separate_upper(μ): It controls the intensity of cluster separation: the larger the value, the more strongly clusters are separated. The default setting is μ = 0.11.
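The sketch below illustrates two of these guidelines. `recommended_batch_size` applies B = n/10; the floor of 1 is our own assumption. `temperature_softmax` shows why a smaller t is stricter. It uses a generic temperature-scaled softmax over similarities, as in SimCLR-style contrastive losses, and is not the exact CDR loss. All function names and the `DEFAULTS` dictionary are hypothetical. The dictionary keys reuse the parameter names above, but whether they match the layout of the YAML files is an assumption.

```python
import math


def recommended_batch_size(n_instances: int) -> int:
    """B = n/10 as recommended above, rounded down (floor of 1 is an assumption)."""
    return max(1, n_instances // 10)


def temperature_softmax(similarities, t):
    """Softmax of similarity scores divided by temperature t.

    Generic contrastive-learning illustration, not the CDR loss: a smaller t
    sharpens the distribution, so the most similar (positive) neighbor
    receives a larger share of the probability mass.
    """
    scaled = [s / t for s in similarities]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Default settings quoted in this README (key names are hypothetical)
DEFAULTS = {"n_neighbors": 15, "temperature": 0.15, "separate_upper": 0.11}

if __name__ == "__main__":
    print(recommended_batch_size(7440))  # USPS has 7440 instances -> 744
    sims = [0.9, 0.5, 0.1]  # hypothetical similarities: positive first, then negatives
    for t in (0.15, 1.0):
        print(t, [round(p, 3) for p in temperature_softmax(sims, t)])
```

Running the script shows the positive neighbor's probability rising as t drops from 1.0 to the default 0.15. This matches the description of t above: a stricter pull toward neighbors, which also applies to any wrong neighbors.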

To use our pre-trained model, try the following command:

```
# python vis.py --configs 'configuration file path' --ckpt 'model weights path'
# Example on USPS dataset
python vis.py --configs configs/CDR.yaml --ckpt_path model_weights/usps.pth.tar
```

To use our prototype interface for interactive visual clustering analysis, run the following command:

```
python app.py --config configs/ICDR.yaml
```

After that, the prototype interface is available at http://127.0.0.1:5000.

@article{xia2022interactive,

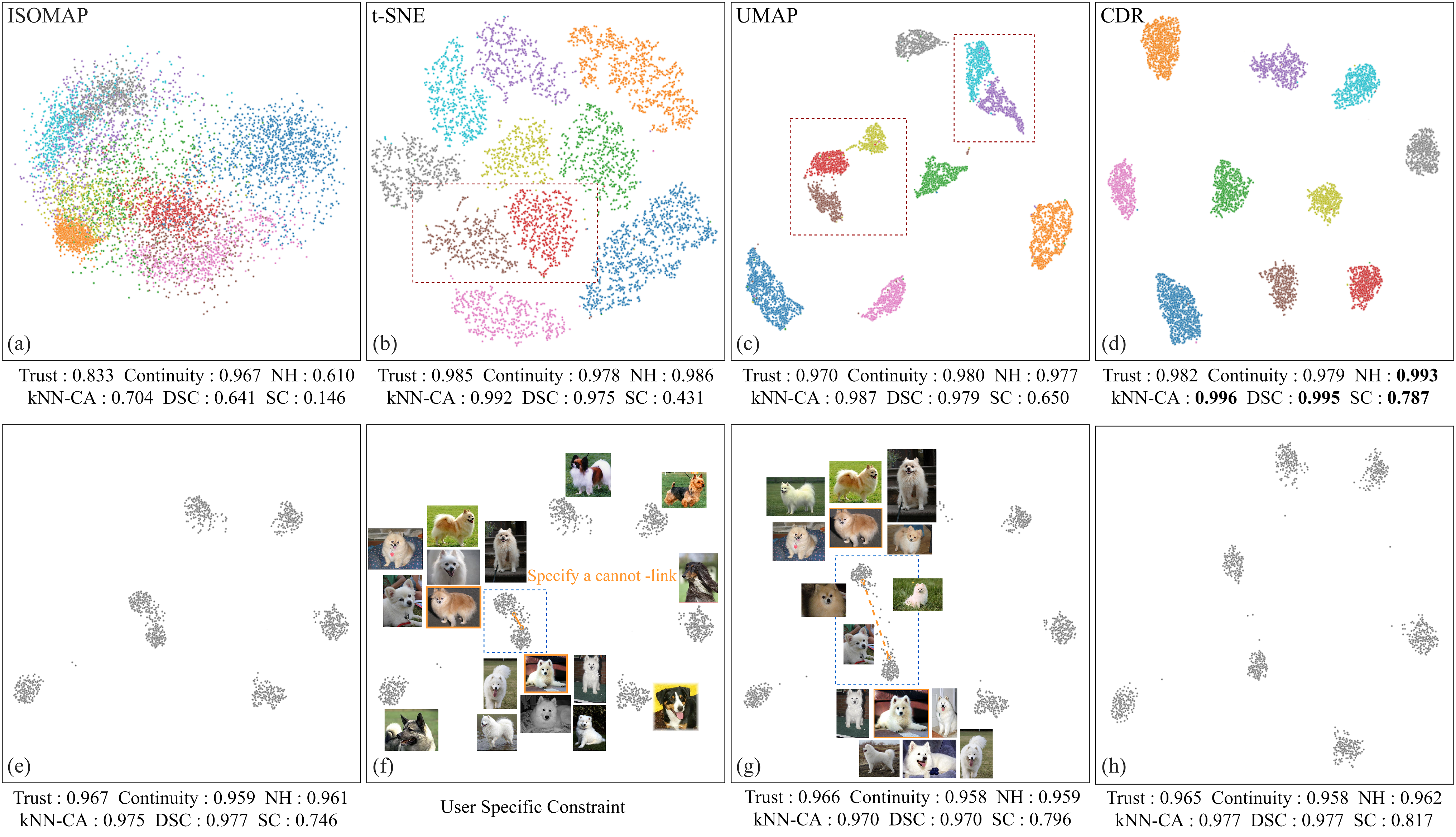

title={Interactive visual cluster analysis by contrastive dimensionality reduction},

author={Xia, Jiazhi and Huang, Linquan and Lin, Weixing and Zhao, Xin and Wu, Jing and Chen, Yang and Zhao, Ying and Chen, Wei},

journal={IEEE Transactions on Visualization and Computer Graphics},

volume={29},

number={1},

pages={734--744},

year={2022},

publisher={IEEE}

}