WordScape: a Pipeline to extract multilingual, visually rich Documents with Layout Annotations from Web Crawl Data

This repository contains code for the WordScape pipeline. The pipeline extracts Word documents from the web, renders document pages as images, extracts the text, and generates bounding box annotations for semantic entities.

The WordScape paper is available here.

You can download a list of 9.4M URLs, including the SHA-256 checksums of the associated documents, from here. Using this list, you can skip the step of parsing Common Crawl and directly download the documents and verify their integrity.

| CommonCrawl Snapshot | Number of URLs | Download Link |
|---|---|---|
| 2013-48 | 57,150 | Download |
| 2016-50 | 309,734 | Download |
| 2020-40 | 959,098 | Download |
| 2021-43 | 1,424,709 | Download |
| 2023-06 | 3,009,335 | Download |
| 2023-14 | 3,658,202 | Download |
| all | 9,418,228 | Download |
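As a minimal sketch of the integrity check mentioned above, the following Python snippet computes the SHA-256 digest of a downloaded document and compares it with a checksum taken from the URL list. The function names are hypothetical and chosen for illustration; how the checksum is stored in the list (column name, casing) is not specified here and is treated as an assumption.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large documents need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(data: bytes, expected_hex: str) -> bool:
    """Compare case-insensitively (assumption: the list may use either case)."""
    return sha256_hex(data) == expected_hex.strip().lower()
```

A document whose digest does not match the listed checksum has most likely changed since it was crawled and can be skipped.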

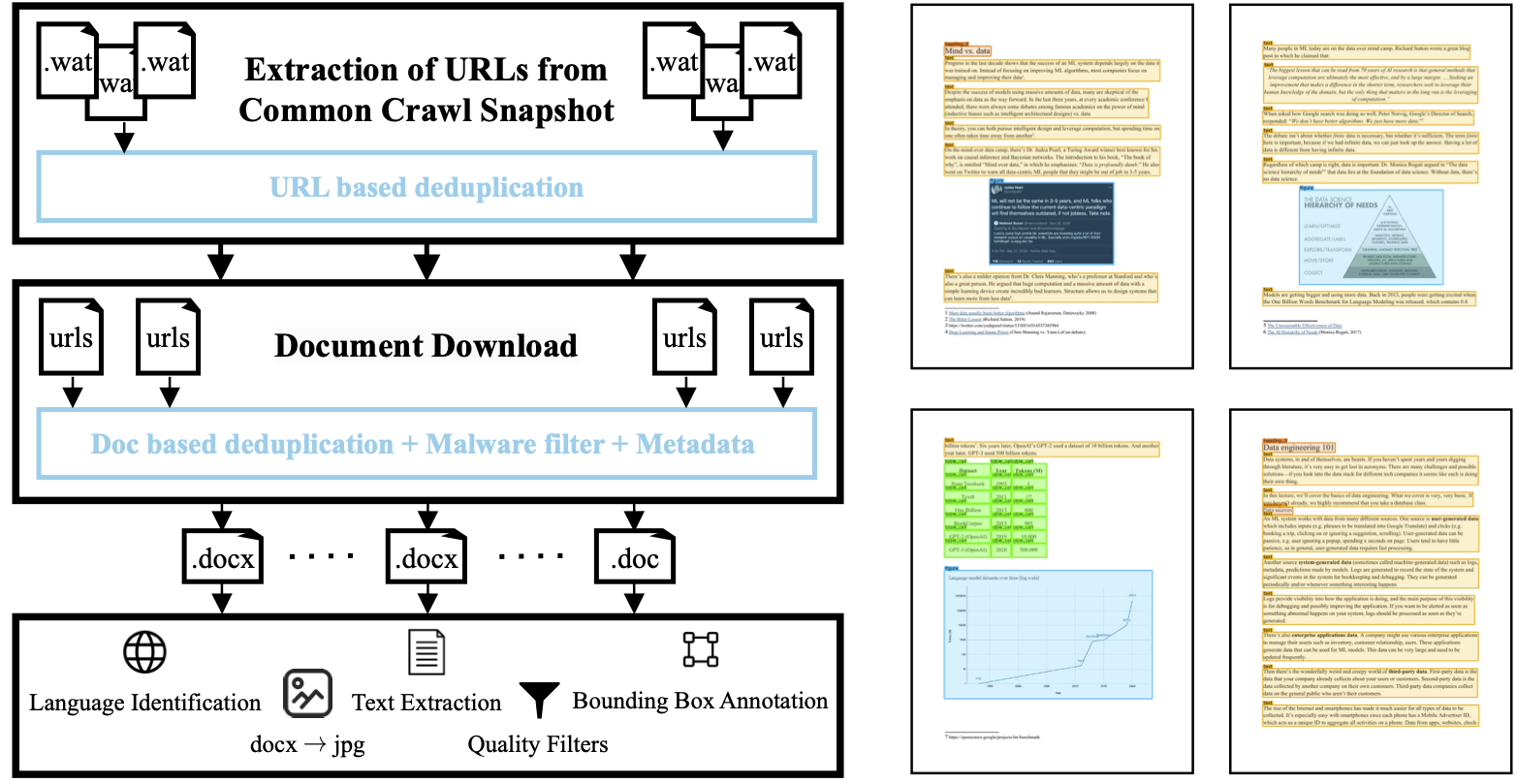

The WordScape pipeline consists of three core steps and makes extensive use of the Open XML structure of Word documents. The first step is to extract links to Word files from the Common Crawl web corpus. In the second step, the Word documents are downloaded through HTTP requests. In the third step, we process them to create a multimodal dataset with page images, text, and bounding box annotations for semantic entities like headings and tables. Here we briefly describe the three steps of the pipeline and refer to the instructions for each individual step for more details.

If you wish to directly run the pipeline on the preprocessed urls, you can do so using Docker. For instructions, see the section Running WordScape using Docker.

The initial step in the WordScape pipeline involves extracting URLs pointing to Word files from Common Crawl snapshots, utilizing metadata files to select HTTP URLs ending in .doc or .docx. The parsed URLs are subsequently merged, deduplicated on a per-snapshot basis, and globally deduplicated across all previously processed snapshots. The output of this step of the pipeline is a list of URLs stored as a parquet file.
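The selection and deduplication logic described above can be sketched as follows. This is an illustration under stated assumptions, not the pipeline's implementation: the helper names are hypothetical, the suffix check is applied case-insensitively to the URL path (so a query string is ignored), and URLs are compared as exact strings.

```python
from typing import Iterable, List, Set
from urllib.parse import urlparse

WORD_SUFFIXES = (".doc", ".docx")


def is_word_url(url: str) -> bool:
    """True for HTTP(S) URLs whose path ends in .doc or .docx."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        return False
    return parsed.path.lower().endswith(WORD_SUFFIXES)


def dedupe_snapshot(urls: Iterable[str], seen_globally: Set[str]) -> List[str]:
    """Keep Word URLs that are new within this snapshot and across earlier ones.

    `seen_globally` is updated in place so it can be reused for the next
    snapshot, which gives both per-snapshot and global deduplication.
    """
    kept: List[str] = []
    for url in urls:
        if is_word_url(url) and url not in seen_globally:
            seen_globally.add(url)
            kept.append(url)
    return kept
```

Processing snapshots one after another with the same `seen_globally` set means a URL is only emitted for the first snapshot in which it appears.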

You can find more details on how to run this step of the pipeline in the Common Crawl Parsing README.

In this stage of the WordScape pipeline, documents are downloaded from extracted URLs, with rejections based on various criteria such as HTTP errors, invalid formats, potentially malicious features, and excessive file sizes. Upon successful download, metadata fields including HTTP status, OLE information, and a SHA-256 hash of the response are saved to analyze and ensure content integrity.
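A minimal sketch of such acceptance checks is shown below. The function name, the rejection labels, and the size limit are hypothetical; only the two file signatures are fixed facts (legacy .doc files are OLE compound files, .docx files are ZIP archives). The check for potentially malicious features and the OLE metadata extraction are not covered by this sketch.

```python
import hashlib
from typing import Dict, Optional, Tuple

# Leading bytes of the two container formats used by Word files.
OLE_MAGIC = bytes.fromhex("d0cf11e0a1b11ae1")  # legacy .doc (OLE compound file)
ZIP_MAGIC = b"PK\x03\x04"                      # .docx (ZIP-based Open XML)

MAX_BYTES = 50 * 1024 * 1024  # hypothetical size limit, not the pipeline's value


def check_download(status: int, body: bytes,
                   max_bytes: int = MAX_BYTES) -> Tuple[Optional[str], Dict[str, object]]:
    """Return (rejection_reason, metadata); the reason is None if the file is kept."""
    metadata: Dict[str, object] = {"http_status": status, "size": len(body)}
    if status != 200:
        return "http_error", metadata
    if len(body) > max_bytes:
        return "too_large", metadata
    if not (body.startswith(OLE_MAGIC) or body.startswith(ZIP_MAGIC)):
        return "invalid_format", metadata
    # Hash the raw response so the content can be verified later.
    metadata["sha256"] = hashlib.sha256(body).hexdigest()
    return None, metadata
```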

You can find more details on how to run this step of the pipeline in the Document Download README.

In the processing of downloaded Word documents, we identify the language using FastText, render document pages as JPEG images using LibreOffice and PDF2Image, and generate bounding box annotations and extract text using Python-docx and PDFPlumber.
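To illustrate the Open XML structure that the annotation step builds on, the sketch below reads `word/document.xml` from a .docx archive using only the Python standard library and lists paragraphs that carry a heading style. It is not the pipeline's code (which uses Python-docx) and it does not compute bounding boxes. The function name is hypothetical, and treating any style id that starts with `Heading` as a heading is an assumption that will miss custom or localized style ids.

```python
import io
import zipfile
import xml.etree.ElementTree as ET
from typing import List, Tuple

# WordprocessingML main namespace used in word/document.xml.
W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"
NS = {"w": W_NS}


def heading_paragraphs(docx_bytes: bytes) -> List[Tuple[str, str]]:
    """Return (style_id, text) for paragraphs whose style id starts with 'Heading'."""
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))

    results: List[Tuple[str, str]] = []
    for paragraph in root.iter(f"{{{W_NS}}}p"):
        style = paragraph.find("w:pPr/w:pStyle", NS)
        if style is None:
            continue
        style_id = style.get(f"{{{W_NS}}}val", "")
        if style_id.startswith("Heading"):
            # Paragraph text is spread over one or more w:t run elements.
            text = "".join(t.text or "" for t in paragraph.iter(f"{{{W_NS}}}t"))
            results.append((style_id, text))
    return results
```

Because the semantic role of a paragraph is stored explicitly in the XML, entity labels can be read from the document itself rather than inferred from the rendered page.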

You can find more details on how to run this step of the pipeline in the Document Annotation README.

To further refine the dataset, we provide tools to filter the raw dataset with respect to which entity categories should be present in the dataset, the number of entities for each category, the language, as well as the language score and quality score. In addition, we also provide scripts to train a YOLOv5l model on the dataset.
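The filtering criteria listed above can be sketched as a simple predicate over per-page metadata. The record type, its field names, and the threshold parameters below are hypothetical and only illustrate the idea; the actual metadata schema and filtering tools are described in the Extensions README.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class PageRecord:
    """Hypothetical per-page metadata; field names are illustrative only."""
    language: str
    language_score: float
    quality_score: float
    entity_counts: Dict[str, int] = field(default_factory=dict)


def filter_pages(pages: Iterable[PageRecord], language: str,
                 min_language_score: float, min_quality_score: float,
                 min_entities: Dict[str, int]) -> List[PageRecord]:
    """Keep pages matching the language, score thresholds and entity minimums."""
    kept = []
    for page in pages:
        if page.language != language:
            continue
        if page.language_score < min_language_score:
            continue
        if page.quality_score < min_quality_score:
            continue
        # Every requested entity category must occur at least `count` times.
        if any(page.entity_counts.get(name, 0) < count
               for name, count in min_entities.items()):
            continue
        kept.append(page)
    return kept
```

For example, `filter_pages(pages, "en", 0.5, 0.5, {"heading": 1, "table": 1})` would keep only English pages above both score thresholds that contain at least one heading and one table.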

You can find more details related to the pipeline extensions and model training in the Extensions README.

The most straightforward way to run the pipeline is to run it in Docker. We provide a Dockerfile in app/ that contains all the dependencies required to run the pipeline. The Docker image can then be used to directly run the WordScape pipeline on the URLs provided. To build the image, run the following command from the root folder of this repository:

    cd app
    docker build -t wordscape .

Then, create or symlink a directory where the data will be stored. This directory will be mounted in the Docker container.

    DATA_ROOT=/path/to/your/data/folder
    mkdir -p "$DATA_ROOT"

Then, to run the pipeline, you can use the following command. This will download the URL lists, download the associated documents, annotate them, and create the dataset.

    MAX_DOCS=32
    docker run -v "$DATA_ROOT:/mnt/data" wordscape --dump_id "CC-MAIN-2023-06" --max_docs "$MAX_DOCS"

Omitting the --max_docs flag or setting it to -1 will run the pipeline on all urls in the list.

This pipeline has been successfully tested on MacOS and on Linux (Ubuntu and CentOS). We recommend working with a virtual environment to manage Python dependencies. Make sure you run the following steps from the root folder of this repository.

Note that this pipeline has only been tested with Python 3.8.

- Create or symlink the `data` folder. This folder will be used to store the output of the pipeline. If you want to symlink the folder, you can use the following command:

      ln -s /path/to/your/data/folder data

- Create a virtual environment and activate it:

      python -m venv .venv
      source .venv/bin/activate

- Install the Python dependencies:

      pip install -r requirements.txt

- Download the FastText language classifier:

      curl https://dl.fbaipublicfiles.com/fasttext/supervised-models/lid.176.bin -o resources/fasttext-models/lid.176.ftz

- Install LibreOffice and Unoserver. See the sections below for detailed instructions. These dependencies are required in the annotation step of the pipeline to 1) convert `.doc` to `.docx` and 2) convert `.docx` to PDF.
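The two conversions named above can be sketched with the LibreOffice command line driven from Python. This is an assumption-laden illustration: it calls `soffice` directly with the same flags as the test command in this README rather than going through unoserver, the helper names and the timeout are hypothetical, and `soffice` is assumed to be on your PATH.

```python
import subprocess
from pathlib import Path
from typing import List


def soffice_convert_cmd(source: str, target_format: str, outdir: str) -> List[str]:
    """Build a headless LibreOffice command, e.g. target_format='docx' or 'pdf'."""
    return ["soffice", "--headless", "--convert-to", target_format,
            source, "--outdir", outdir]


def convert(source: str, target_format: str, outdir: str, timeout: int = 120) -> Path:
    """Run the conversion and return the path where the output is expected."""
    subprocess.run(soffice_convert_cmd(source, target_format, outdir),
                   check=True, timeout=timeout)
    return Path(outdir) / (Path(source).stem + "." + target_format)
```

A legacy file would first be converted with `convert("report.doc", "docx", "out")` and the result then passed to `convert("out/report.docx", "pdf", "out")`.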

LibreOffice version 7.4.6 (LTS) is supported. Follow the installation instructions from https://www.libreoffice.org/download/download-libreoffice/. Pay attention to the README in the LibreOffice installation files for your distribution!

Double-check the correctness of your path by running:

    whereis soffice

Example output:

    soffice: /opt/libreoffice7.4/program/soffice

In your bash profile:

    export PATH=$PATH:/opt/libreoffice7.4/program

We provide a step-by-step guide to install unoserver on Ubuntu here. For more details, see the Unoserver GitHub repository.

    sudo apt-get install python3-uno

In your bash profile:

    export PYTHONPATH=${PYTHONPATH}:/usr/lib/python3/dist-packages/

Run:

    whereis uno.py

Example output:

    uno.py: /opt/libreoffice7.4/program/uno.py

In your bash profile (if not already completed in the LibreOffice installation step):

    export PATH=$PATH:/opt/libreoffice7.4/program

On MacOS, check the guides on https://www.libreoffice.org/get-help/install-howto/ for installation instructions.

Alternatively, you can also install LibreOffice via Homebrew, using the following command:

    brew install --cask libreoffice

To test that the installation was successful, you can convert a document from docx to PDF using the following command:

    soffice --headless --convert-to pdf /path/to/docs/document1.docx --outdir .

We provide a step-by-step guide to install unoserver on MacOS here (tested with MacOS Ventura). For more details, see the Unoserver GitHub repository.

Unoserver needs to run with the Python installation used by LibreOffice. You can install unoserver via:

    /Applications/LibreOffice.app/Contents/Resources/python -m pip install unoserver

You need the two executables unoserver and unoconvert to convert docx files to PDF. On Mac, they should be located in /Applications/LibreOffice.app/Contents/Frameworks/LibreOfficePython.framework/bin/. To check if it works, run:

    /Applications/LibreOffice.app/Contents/Frameworks/LibreOfficePython.framework/bin/unoserver

If this throws an error, it is possibly due to the shebang line pointing to a Python installation which does not have the uno libraries installed. If so, replace it with the Python installation used by LibreOffice:

    #!/Applications/LibreOffice.app/Contents/Resources/python

The same applies to the unoconvert executable.

On CentOS, you can install LibreOffice and unoserver without root access using the script install_libreoffice_centos.sh in the scripts folder:

    bash scripts/install_libreoffice_centos.sh

To check that unoserver is installed correctly, run `which unoserver` and `which unoconv`. Both should return a path to the binaries.
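The same kind of check can be done from Python before starting the annotation step. The sketch below uses `shutil.which` from the standard library; the helper name is hypothetical and the list of tool names is an assumption that you should adjust to the executables present in your installation.

```python
import shutil
from typing import Callable, Iterable, List, Optional


def missing_tools(names: Iterable[str],
                  which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    """Return the executables from `names` that cannot be found on PATH."""
    return [name for name in names if which(name) is None]


if __name__ == "__main__":
    # Tool names are examples; adjust them to your installation.
    missing = missing_tools(["soffice", "unoserver"])
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required executables were found.")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the default is sufficient.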

If you find the paper and the pipeline code useful, please consider citing us:

    @inproceedings{wordscape,
      author={Weber, Maurice and Siebenschuh, Carlo and Butler, Rory Marshall and Alexandrov, Anton and Thanner, Valdemar Ragnar and Tsolakis, Georgios and Jabbar, Haris and Foster, Ian and Li, Bo and Stevens, Rick and Zhang, Ce},
      booktitle={Advances in Neural Information Processing Systems},
      title={WordScape: a Pipeline to extract multilingual, visually rich Documents with Layout Annotations from Web Crawl Data},
      year={2023}
    }

By contributing to this repository, you agree to license your work under the license specified in the LICENSE file.