Semantic Connectivity-Driven Pseudo-labeling for Cross-domain Segmentation.

📔 For more information, please see our paper on arXiv (arXiv:2312.06331).

- Python 3.8.0
- PyTorch 1.10.1
- torchvision 0.11.2
- einops 0.3.2

Please see `requirements.txt` for all other requirements.

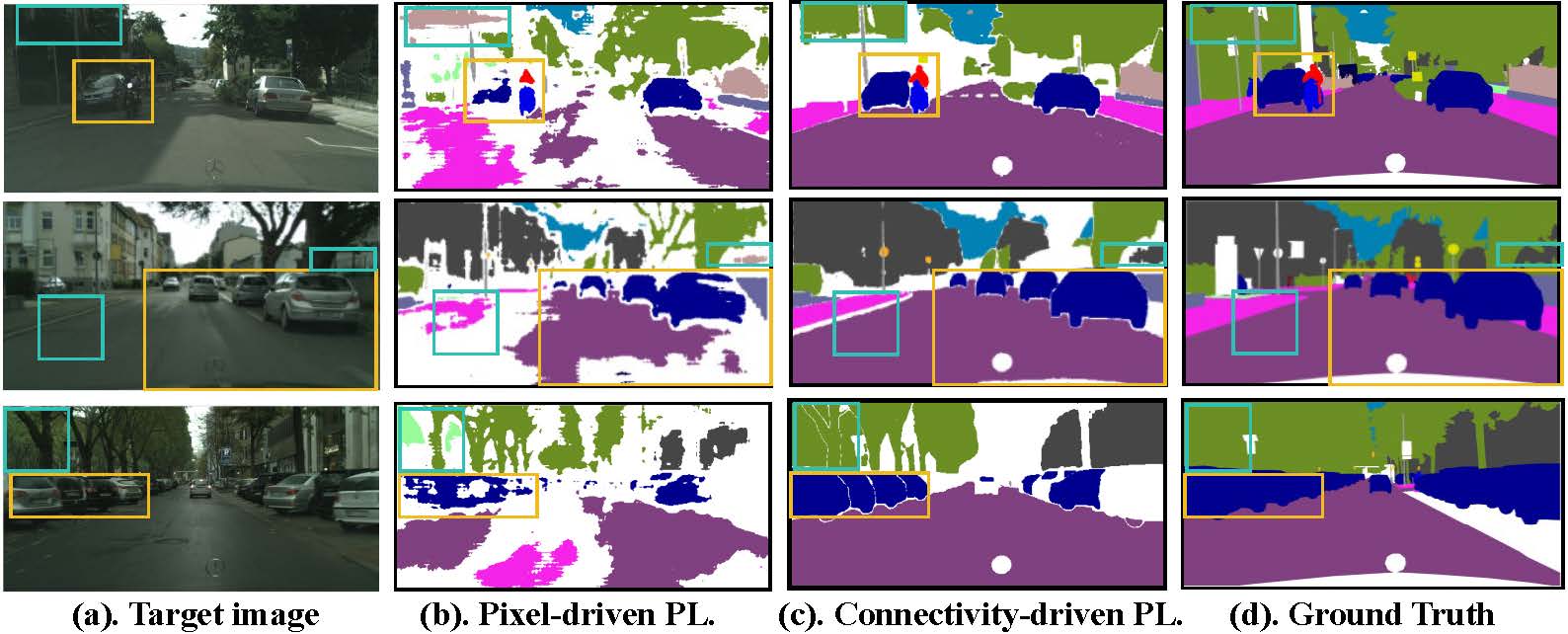

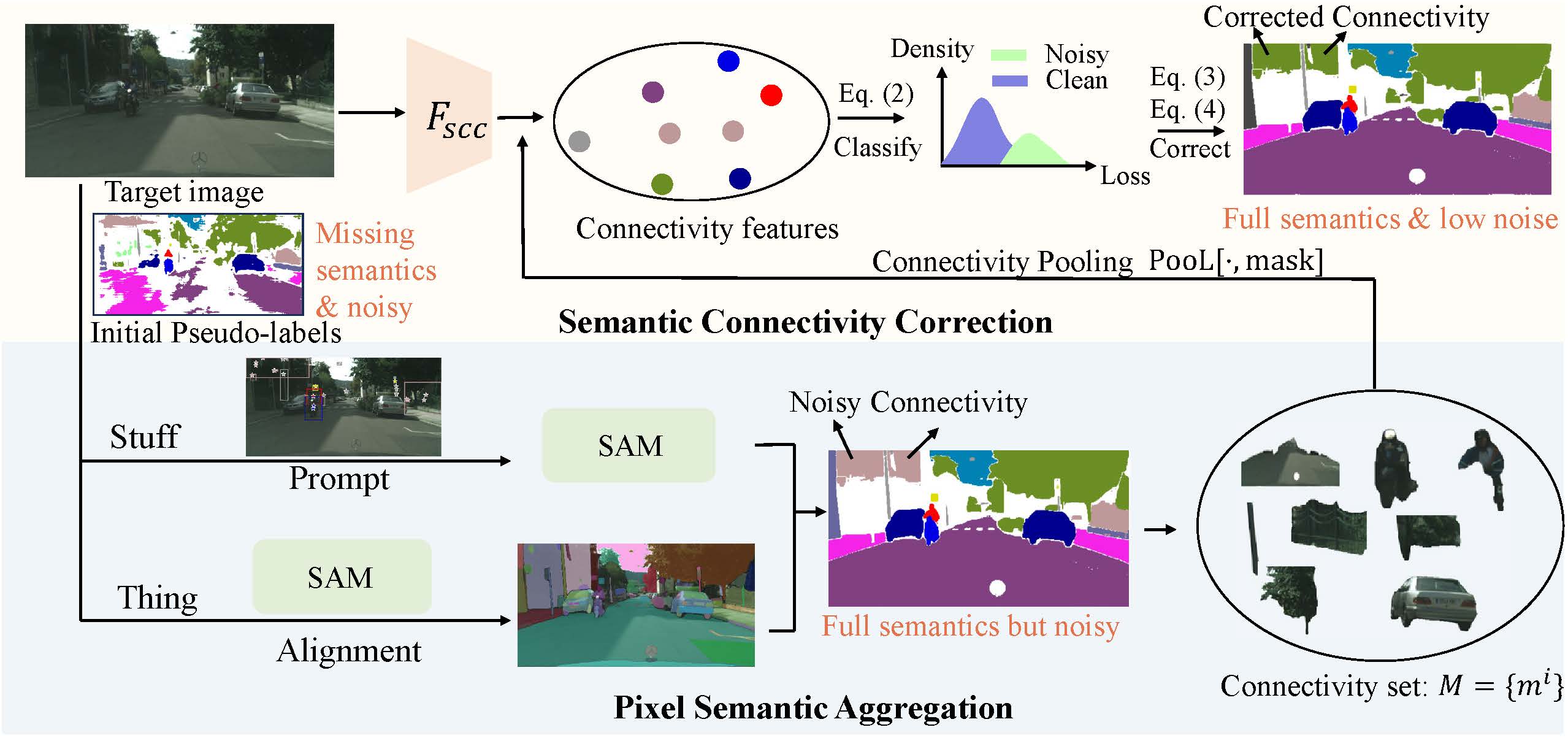

You can use Pixel Semantic Aggregation (PSA) and Semantic Connectivity Correction (SCC) to obtain high-purity, connectivity-based pseudo-labels. These pseudo-labels can then be embedded into existing unsupervised domain adaptive semantic segmentation methods.

First, obtain pixel-level pseudo-labels by pixel thresholding (e.g., CBST) from a UDA method (e.g., ProDA), a source-free UDA method (e.g., DTST), or a UDG method (e.g., SHADE).
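A CBST-style class-balanced thresholding step might look like the sketch below. It is a simplified illustration, not code from this repository: it assumes softmax probabilities of shape `(C, H, W)` saved from the source or adapted model, uses 255 as the ignore index, and computes the per-class thresholds on a single image, whereas CBST determines them over the whole target set. The function name `class_balanced_pseudo_label` and the `keep_ratio` parameter are hypothetical.

```python
import numpy as np

IGNORE_INDEX = 255  # assumed ignore label for rejected pixels


def class_balanced_pseudo_label(probs: np.ndarray, keep_ratio: float = 0.5) -> np.ndarray:
    """Turn per-pixel class probabilities (C, H, W) into a pseudo-label map (H, W).

    For each class, only the most confident `keep_ratio` fraction of the pixels
    predicted as that class is kept; all other pixels are set to IGNORE_INDEX.
    """
    pred = probs.argmax(axis=0)  # predicted class per pixel
    conf = probs.max(axis=0)     # confidence of that prediction
    label = np.full(pred.shape, IGNORE_INDEX, dtype=np.uint8)
    for c in range(probs.shape[0]):
        mask = pred == c
        if not mask.any():
            continue  # class not predicted anywhere in this image
        # Class-specific threshold: the (1 - keep_ratio) quantile of its confidences.
        thresh = np.quantile(conf[mask], 1.0 - keep_ratio)
        label[mask & (conf >= thresh)] = c
    return label
```

Using a per-class threshold instead of one global threshold keeps rare classes from being filtered out entirely, which is the motivation behind class-balanced selection.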

Then organize the images and pseudo-labels in the following structure:

```
├─image
├─pixel-level pseudo-label
└─list
```

The list file (`XXX.txt`) records the image names (`XXX.png`) and their corresponding pixel-level pseudo-labels.
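A list file can be generated with a few lines of Python. The exact line format that `seco_sam.py` expects is not documented here, so this sketch assumes one space-separated `<image> <pseudo-label>` pair per line, with relative paths and matching file names. The directory names `image` and `pseudo_label` and the helper `write_id_list` are hypothetical stand-ins for the layout above; adapt them to the format the script actually reads.

```python
from pathlib import Path
from typing import List


def write_id_list(root: str, list_name: str = "all.txt",
                  image_dir: str = "image",
                  label_dir: str = "pseudo_label") -> List[str]:
    """Pair every image in root/image_dir with the same-named pseudo-label in
    root/label_dir and write one "<image> <pseudo-label>" line per pair to
    root/list/list_name (hypothetical format)."""
    root_path = Path(root)
    lines = []
    for img in sorted((root_path / image_dir).glob("*.png")):
        if not (root_path / label_dir / img.name).exists():
            continue  # skip images that have no pseudo-label yet
        lines.append(f"{image_dir}/{img.name} {label_dir}/{img.name}")
    list_path = root_path / "list" / list_name
    list_path.parent.mkdir(parents=True, exist_ok=True)
    list_path.write_text("".join(line + "\n" for line in lines))
    return lines
```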

Then, run the PSA as follows:

```shell
exp_name="HRDA_seco"
class_num=19  # number of semantic classes, e.g. 19 for Cityscapes
mkdir -p logs
CUDA_VISIBLE_DEVICES="1" nohup python seco_sam.py --id-list-path ./splits/cityscapes/${exp_name}/all.txt --class-num ${class_num} > logs/${exp_name} 2>&1 &
```

Afterward, the aggregated pseudo-labels can be found in `root_path/${exp_name}_vit_B` or `root_path/${exp_name}_vit_H`, depending on the ViT backbone (B or H) used.
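Conceptually, aggregation spreads pixel-level labels over coherent regions. The following is a deliberately simplified sketch of that idea, not the repository's PSA: given a set of boolean region masks (for example, masks from a segmentation model such as SAM, which the script name `seco_sam.py` suggests), each region receives the majority class of the labeled pixels it covers. The mask format, the ignore index 255, the helper name `aggregate_by_regions`, and the `min_votes` parameter are assumptions.

```python
from typing import List

import numpy as np

IGNORE_INDEX = 255  # assumed ignore label


def aggregate_by_regions(pixel_label: np.ndarray, masks: List[np.ndarray],
                         min_votes: int = 1) -> np.ndarray:
    """Assign each region the majority class of the pseudo-labeled pixels it covers.

    pixel_label: (H, W) uint8 map with IGNORE_INDEX for unlabeled pixels.
    masks: list of (H, W) boolean region masks (hypothetical input format).
    Pixels outside every mask keep their original pixel-level label.
    """
    out = pixel_label.copy()
    for mask in masks:
        votes = pixel_label[mask]
        votes = votes[votes != IGNORE_INDEX]
        if votes.size < min_votes:
            continue  # too few labeled pixels to decide this region's class
        out[mask] = np.bincount(votes).argmax()  # majority vote inside the region
    return out
```

When masks overlap, later masks overwrite earlier ones here; a fuller implementation would need an explicit rule for overlaps. Spreading one label over a whole region also spreads any error in that label, which is why a denoising step follows.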

PSA also amplifies the noise in the pseudo-labels, so SCC is then used to denoise the connected regions. Refer to the SCC section for specific instructions.
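SCC works on connected regions of the pseudo-label map. As a starting point, the per-class connected regions can be enumerated with `scipy.ndimage.label`, as sketched below; this only extracts the regions and does not implement SCC's denoising. The helper name `connected_regions`, the ignore index 255, and the choice of 4-connectivity are assumptions.

```python
from typing import List, Tuple

import numpy as np
from scipy import ndimage

IGNORE_INDEX = 255  # assumed ignore label


def connected_regions(label_map: np.ndarray) -> List[Tuple[int, np.ndarray]]:
    """Split an (H, W) pseudo-label map into (class_id, boolean_mask) pairs,
    one per 4-connected component of each class; IGNORE_INDEX is skipped."""
    regions = []
    for cls in np.unique(label_map):
        if cls == IGNORE_INDEX:
            continue
        # ndimage.label's default 2D structure is cross-shaped, i.e. 4-connectivity.
        components, num = ndimage.label(label_map == cls)
        for idx in range(1, num + 1):
            regions.append((int(cls), components == idx))
    return regions
```

Each returned mask is one candidate region that a connectivity-level method can then keep, relabel, or discard as a whole.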

In the paper, we use the pseudo-labels generated by SeCo across multiple codebases. Because these codebases differ in structure, we provide a unified implementation for using SeCo's pseudo-labels: the unlabeled target data are partitioned into two subsets, one treated as a labeled subset and the other as an unlabeled subset, and a semi-supervised method (UniMatch) is then applied for further adaptation, as described in the paper.
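The partition step can be sketched as follows. The README does not specify how the two subsets are chosen, so this sketch simply shuffles the id list with a fixed seed and takes a fraction of it as the labeled subset. The split criterion, the `labeled_ratio` parameter, the helper name `split_id_list`, and the output file names `labeled.txt` / `unlabeled.txt` are assumptions to be replaced with whatever the UniMatch-based scripts expect.

```python
import random
from pathlib import Path
from typing import List, Tuple


def split_id_list(id_list: str, out_dir: str, labeled_ratio: float = 0.5,
                  seed: int = 0) -> Tuple[List[str], List[str]]:
    """Randomly split the lines of `id_list` into labeled/unlabeled subsets
    and write them to out_dir/labeled.txt and out_dir/unlabeled.txt."""
    lines = [line for line in Path(id_list).read_text().splitlines() if line.strip()]
    random.Random(seed).shuffle(lines)  # fixed seed for a reproducible split
    n_labeled = int(round(len(lines) * labeled_ratio))
    labeled, unlabeled = lines[:n_labeled], lines[n_labeled:]
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / "labeled.txt").write_text("".join(line + "\n" for line in labeled))
    (out / "unlabeled.txt").write_text("".join(line + "\n" for line in unlabeled))
    return labeled, unlabeled
```

A reliability-based split (for example, ranking images by pseudo-label confidence) would be a natural alternative to the random split used here.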

| Adaptation task | Model | Before adaptation (mIoU) | After adaptation (mIoU) | Training logs |
|---|---|---|---|---|
| GTA → Cityscapes | DeepLab-R101 | 55.1 (DTST) | 64.6 | `training_logs/seco_gta` |
| Synthia → Cityscapes | DeepLab-R101 | 52.3 (DTST) | 59.2 | `training_logs/seco_synthia` |
| GTA → BDD100K | DeepLab-R101 | 37.9 (SFOCDA) | 44.3 | `training_logs/seco_bdd` |

This table reports results in the source-free setting, which are higher than those reported in Tables 1 and 2 of the paper. More adaptation scripts will be released later.

Code is released for non-commercial and research purposes only. For commercial purposes, please contact the authors.

Many thanks to the authors of these excellent works for releasing their open-source code.

If you use this code for your research, please cite our paper:

```bibtex
@misc{zhao2023semantic,
  title={Semantic Connectivity-Driven Pseudo-labeling for Cross-domain Segmentation},
  author={Dong Zhao and Ruizhi Yang and Shuang Wang and Qi Zang and Yang Hu and Licheng Jiao and Nicu Sebe and Zhun Zhong},
  year={2023},
  eprint={2312.06331},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```