This repository contains PyTorch evaluation code, training code and pretrained models for the following project:

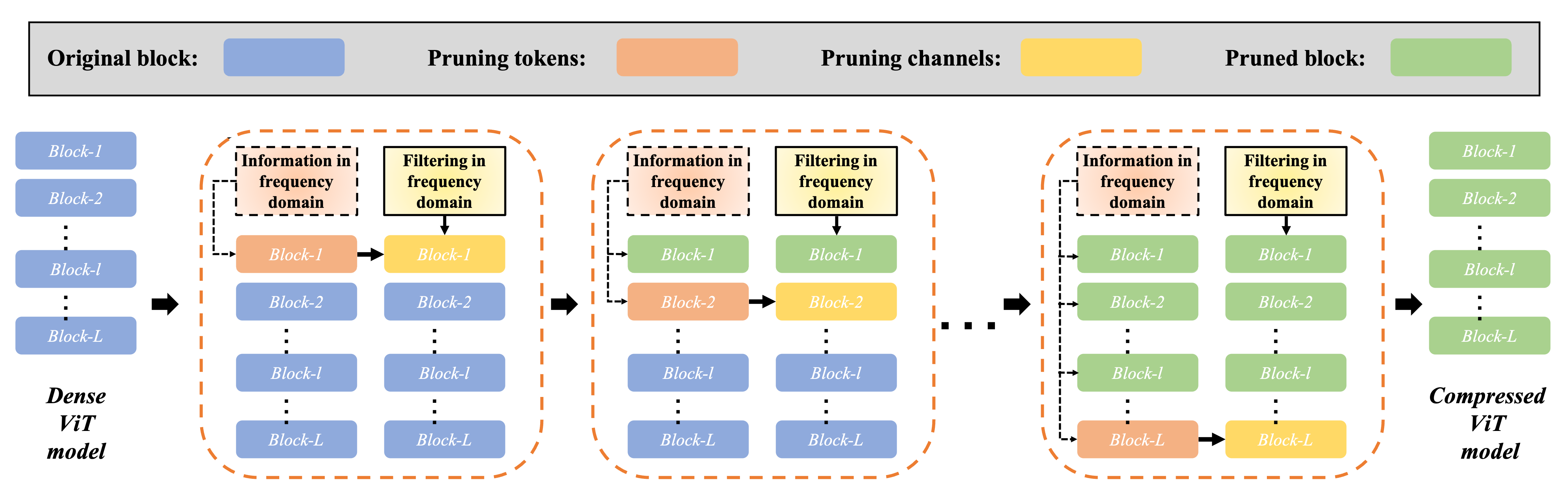

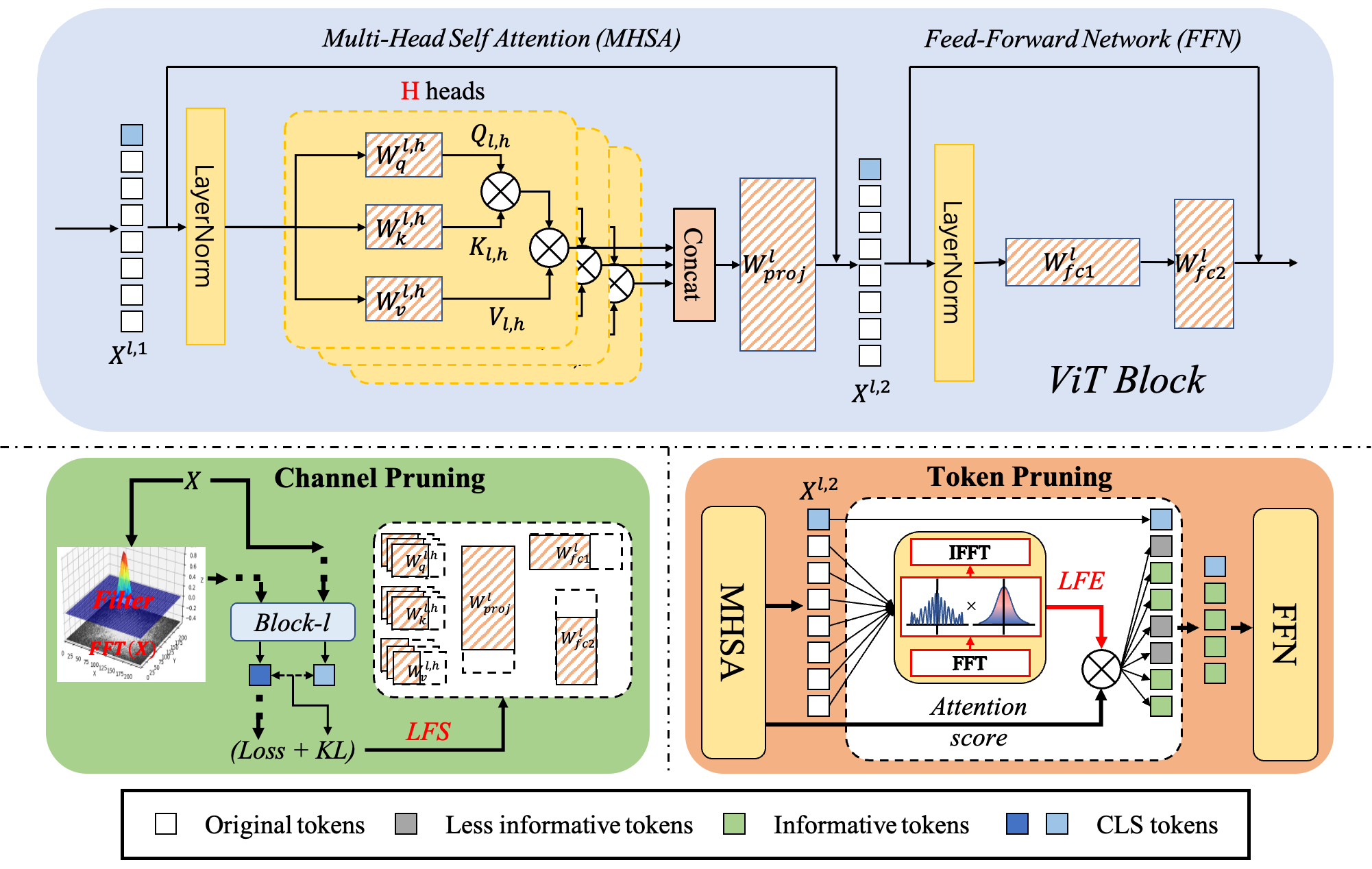

- VTC-LFC (Vision Transformer Compression with Low-Frequency Components), NeurIPS 2022

For details, see *VTC-LFC: Vision Transformer Compression with Low-Frequency Components* by Zhenyu Wang, Hao Luo, Pichao Wang, Feng Ding, Fan Wang, and Hao Li.

We provide compressed DeiT models fine-tuned on ImageNet 2012.

| name | acc@1 | acc@5 | #FLOPs | #params | url |
|---|---|---|---|---|---|
| DeiT-tiny | 71.6 | 90.7 | 0.7G | 5.1M | model |
| DeiT-small | 79.4 | 94.6 | 2.1G | 17.7M | model |
| DeiT-base | 81.3 | 95.3 | 7.5G | 63.5M | model |

We also provide the pruned models without fine-tuning.

| name | #FLOPs | #params | url |
|---|---|---|---|
| DeiT-tiny w/o fine-tuning | 0.7G | 5.1M | model |
| DeiT-small w/o fine-tuning | 2.1G | 17.7M | model |
| DeiT-base w/o fine-tuning | 7.5G | 63.5M | model |

First, clone the repository locally:

```bash
git clone
```

Then, install requirements:

```bash
pip install -r requirements.txt
```

We use torch 1.7.1, torchvision 0.8.2, timm 0.3.4, fvcore 0.1.5 and CUDA 11.0, on 16 GB or 32 GB V100 GPUs, for training and evaluation. Note that we use `torch.cuda.amp` to speed up training, which requires PyTorch >= 1.6.
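To compare a local environment with the versions listed above, a small standard-library check may help. This is a sketch, not part of the repository: `parse_version`, `at_least`, `installed_version` and `TESTED_WITH` are hypothetical names, the comparison only looks at the leading numeric components (so `1.7.1+cu110` counts as `1.7.1`), and `importlib.metadata` assumes Python >= 3.8.

```python
# Sketch: compare installed package versions with the ones listed above.
# Helper names are hypothetical; nothing here comes from this repository.
from importlib import metadata
from typing import Optional, Tuple

TESTED_WITH = {"torch": "1.7.1", "torchvision": "0.8.2", "timm": "0.3.4", "fvcore": "0.1.5"}


def parse_version(text: str) -> Tuple[int, ...]:
    """Keep the leading numeric components: '1.7.1+cu110' -> (1, 7, 1)."""
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def at_least(installed: str, minimum: str) -> bool:
    """Numeric comparison, padding the shorter version with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def installed_version(dist: str) -> Optional[str]:
    """Version string of an installed distribution, or None if it is absent."""
    try:
        return metadata.version(dist)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name, tested in TESTED_WITH.items():
        print(f"{name}: installed {installed_version(name)}, tested with {tested}")
    torch_version = installed_version("torch")
    if torch_version is None or not at_least(torch_version, "1.6"):
        print("torch.cuda.amp (used for mixed-precision training) needs PyTorch >= 1.6")
```

Newer versions than the tested ones may work, but only the listed combination is the one reported above.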

Download and extract ImageNet train and val images from http://image-net.org/.

The directory structure follows the standard layout for torchvision's `datasets.ImageFolder`, with the training and validation data in the `train/` and `val/` folders, respectively:

```
/path/to/imagenet/
  train/
    class1/
      img1.jpeg
    class2/
      img2.jpeg
  val/
    class1/
      img3.jpeg
    class2/
      img4.jpeg
```
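As a quick sanity check of this layout before launching a long job, the sketch below walks `train/` and `val/` with the standard library. It mirrors the rule torchvision's `ImageFolder` uses to assign labels (class sub-folders sorted by name, indexed from 0) and its default image extensions; `find_classes`, `summarize_split` and `check_imagenet_root` are hypothetical helpers, not part of this repository.

```python
# Sketch: sanity-check an ImageFolder-style ImageNet root with the stdlib only.
# Function names are hypothetical. Label assignment mirrors torchvision's
# ImageFolder: class sub-folders sorted by name, indexed from 0.
import os
from typing import Dict, Tuple

# torchvision's default IMG_EXTENSIONS for ImageFolder.
IMG_EXTENSIONS = (".jpg", ".jpeg", ".png", ".ppm", ".bmp", ".pgm", ".tif", ".tiff", ".webp")


def find_classes(split_dir: str) -> Dict[str, int]:
    """Map each class sub-folder name to an integer label (sorted order)."""
    classes = sorted(entry.name for entry in os.scandir(split_dir) if entry.is_dir())
    return {name: idx for idx, name in enumerate(classes)}


def summarize_split(split_dir: str) -> Tuple[Dict[str, int], int]:
    """Return the class-to-label map and the number of image files in a split."""
    class_to_idx = find_classes(split_dir)
    n_images = 0
    for name in class_to_idx:
        for _, _, files in os.walk(os.path.join(split_dir, name)):
            n_images += sum(f.lower().endswith(IMG_EXTENSIONS) for f in files)
    return class_to_idx, n_images


def check_imagenet_root(root: str) -> Tuple[int, int, int]:
    """Check that train/ and val/ share the same classes; return (classes, train, val) counts."""
    train_map, n_train = summarize_split(os.path.join(root, "train"))
    val_map, n_val = summarize_split(os.path.join(root, "val"))
    if train_map != val_map:
        raise ValueError("train/ and val/ must contain the same class folders")
    return len(train_map), n_train, n_val
```

For example, `check_imagenet_root("/path/to/imagenet")` should report 1000 classes for ImageNet-1k, matching `--num-classes 1000` in the commands below, with 1,281,167 training and 50,000 validation images for the standard ILSVRC-2012 split.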

You can download the official pretrained models from here.

| name | acc@1 | acc@5 | #params | url |
|---|---|---|---|---|
| DeiT-tiny | 72.2 | 91.1 | 5M | model |
| DeiT-small | 79.9 | 95.0 | 22M | model |
| DeiT-base | 81.8 | 95.6 | 86M | model |
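Comparing this table with the compressed, fine-tuned models at the top of this README gives the accuracy cost of compression. The numbers below are copied from the two tables; the snippet only does the subtraction, and `results` and `accuracy_drop` are illustrative names.

```python
# Top-1 / top-5 accuracy (%) copied from the two tables in this README,
# stored as (compressed & fine-tuned, official pretrained).
results = {
    "DeiT-tiny": {"acc@1": (71.6, 72.2), "acc@5": (90.7, 91.1)},
    "DeiT-small": {"acc@1": (79.4, 79.9), "acc@5": (94.6, 95.0)},
    "DeiT-base": {"acc@1": (81.3, 81.8), "acc@5": (95.3, 95.6)},
}


def accuracy_drop(compressed: float, original: float) -> float:
    """Percentage-point drop, rounded to the tables' one-decimal precision."""
    return round(original - compressed, 1)


for name, metrics in results.items():
    drops = {metric: accuracy_drop(*pair) for metric, pair in metrics.items()}
    print(name, drops)
```

Top-1 accuracy drops by 0.6 points for DeiT-tiny and by 0.5 points for DeiT-small and DeiT-base; top-5 drops by 0.3 to 0.4 points.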

To prune a pre-trained DeiT model on ImageNet:

```bash
python -m torch.distributed.launch --master_port 29500 --nproc_per_node=2 --use_env ./pruning/pruning_py/pruning_deit.py \
    --device cuda \
    --data-set IMNET \
    --batch-size 128 \
    --num-classes 1000 \
    --dist-eval \
    --model deit_tiny_cfged_patch16_224 \
    --data-path /path/to/imagenet \
    --resume /path/to/pretrained_model \
    --prune-part qkv,fc1 \
    --prune-criterion lfs \
    --prune-block-id 0,1,2,3,4,5,6,7,8,9,10,11 \
    --cutoff-channel 0.1 \
    --cutoff-token 0.85 \
    --lfs-lambda 0.1 \
    --num-samples 2000 \
    --keep-qk-scale \
    --prune-mode bcp \
    --allowable-drop 9.5 \
    --drop-for-token 0.56 \
    --prune --prune-only \
    --output-dir /path/to/save
```
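When the pruning step is scripted (for example, to rerun it with a different output directory), it can be convenient to build the same command as an argument list. The sketch below only reproduces the flags and values shown above; `build_command` and `PRUNE_ARGS` are hypothetical names, and what each flag means is defined by `pruning_deit.py`, not by this snippet.

```python
# Sketch: rebuild the pruning command above as an argv list.
# Flags and values are copied from the command; build_command and
# PRUNE_ARGS are hypothetical names, not part of this repository.
import shlex
from typing import Dict, List, Union

FlagValue = Union[str, int, float, bool]

PRUNE_ARGS: Dict[str, FlagValue] = {
    "device": "cuda",
    "data-set": "IMNET",
    "batch-size": 128,
    "num-classes": 1000,
    "dist-eval": True,
    "model": "deit_tiny_cfged_patch16_224",
    "data-path": "/path/to/imagenet",
    "resume": "/path/to/pretrained_model",
    "prune-part": "qkv,fc1",
    "prune-criterion": "lfs",
    "prune-block-id": ",".join(str(i) for i in range(12)),  # all 12 DeiT blocks: 0..11
    "cutoff-channel": 0.1,
    "cutoff-token": 0.85,
    "lfs-lambda": 0.1,
    "num-samples": 2000,
    "keep-qk-scale": True,
    "prune-mode": "bcp",
    "allowable-drop": 9.5,
    "drop-for-token": 0.56,
    "prune": True,
    "prune-only": True,
    "output-dir": "/path/to/save",
}


def build_command(script: str, nproc: int, args: Dict[str, FlagValue],
                  master_port: int = 29500) -> List[str]:
    """Prefix the distributed launcher, then append '--flag value' pairs."""
    cmd = ["python", "-m", "torch.distributed.launch",
           "--master_port", str(master_port), f"--nproc_per_node={nproc}",
           "--use_env", script]
    for key, value in args.items():
        if value is True:          # switch flags such as --prune-only take no value
            cmd.append(f"--{key}")
        elif value is not False:   # False means "leave the switch out"
            cmd.extend([f"--{key}", str(value)])
    return cmd


if __name__ == "__main__":
    cmd = build_command("./pruning/pruning_py/pruning_deit.py", 2, PRUNE_ARGS)
    print(shlex.join(cmd))  # shlex.join requires Python >= 3.8
```

Passing `cmd` to `subprocess.run(cmd, check=True)` would launch the job from Python instead of the shell.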

To fine-tune a pruned DeiT model on ImageNet:

```bash
python -m torch.distributed.launch --master_port 29500 --nproc_per_node=8 --use_env ./pruning/pruning_py/pruning_deit.py \
    --device cuda \
    --epochs 300 \
    --lr 0.0001 \
    --data-set IMNET \
    --num-classes 1000 \
    --dist-eval \
    --model deit_tiny_cfged_patch16_224 \
    --batch-size 512 \
    --teacher-model deit_tiny_cfged_patch16_224 \
    --distillation-alpha 0.1 \
    --distillation-type hard \
    --warmup-epochs 0 \
    --keep-qk-scale \
    --finetune-only \
    --output-dir /path/to/save \
    --data-path /path/to/imagenet \
    --teacher-path /path/to/original_model \
    --resume /path/to/pruned_model
```
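`--distillation-type hard` and `--distillation-alpha` follow the naming of DeiT's training script. Assuming this repository keeps the semantics of DeiT's `DistillationLoss`, the hard variant mixes cross-entropy on the ground-truth label with cross-entropy against the teacher's top-1 prediction, weighted by `1 - alpha` and `alpha`. The stdlib sketch below computes that for a single example; it is an illustration under that assumption, not code from this repository, and it uses a plain integer label where DeiT may use soft targets (e.g. with mixup). Whether the pruned student exposes a separate distillation head (`dist_logits`) is not stated here.

```python
# Sketch of a DeiT-style hard-distillation loss for one example, assuming
# this repository keeps DeiT's DistillationLoss semantics. Pure stdlib.
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


def hard_distillation_loss(cls_logits: Sequence[float], dist_logits: Sequence[float],
                           teacher_logits: Sequence[float], label: int,
                           alpha: float = 0.1) -> float:
    """(1 - alpha) * CE(cls_logits, label) + alpha * CE(dist_logits, teacher top-1)."""
    base = cross_entropy(cls_logits, label)
    teacher_label = argmax(teacher_logits)  # "hard": only the teacher's predicted class is used
    distill = cross_entropy(dist_logits, teacher_label)
    return (1 - alpha) * base + alpha * distill


if __name__ == "__main__":
    student = [2.0, 0.5, -1.0]
    teacher = [0.1, 3.0, 0.2]  # teacher disagrees with the ground-truth label 0
    print(hard_distillation_loss(student, student, teacher, label=0, alpha=0.1))
```

With `alpha = 0.1`, 90% of the loss weight goes to the labels and 10% to the teacher, which here is the original, unpruned model given by `--teacher-path`.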

If you use this code for a paper, please cite:

```bibtex
@inproceedings{wang2022vtclfc,
  title={{VTC}-{LFC}: Vision Transformer Compression with Low-Frequency Components},
  author={Zhenyu Wang and Hao Luo and Pichao WANG and Feng Ding and Fan Wang and Hao Li},
  booktitle={Thirty-Sixth Conference on Neural Information Processing Systems},
  year={2022},
  url={https://openreview.net/forum?id=HuiLIB6EaOk}
}
```

This repository is released under the Apache 2.0 license as found in the LICENSE file.

We actively welcome your pull requests!