This project is the twelfth task of the Udacity Self-Driving Car Nanodegree program. The main goal of the project is to train an artificial neural network (ANN) in TensorFlow for semantic segmentation of video from a front-facing car camera, marking the road pixels.

KITTI Road segmentation (main task of the project):

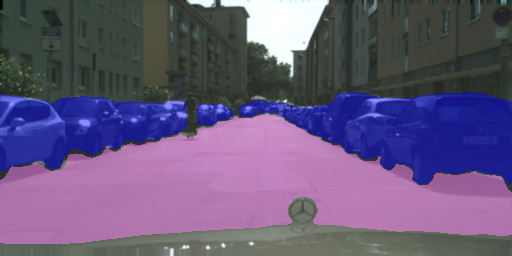

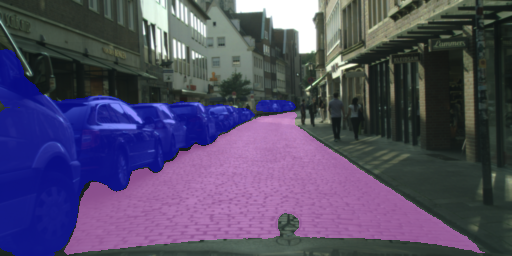

Cityscapes multiclass segmentation (optional task):

- `Segmentation.ipynb` - Jupyter notebook with the main code for the project.
- `helper.py` - Python program for image pre- and post-processing.
- `runs` - directory with processed images.
- `cityscapes.ipynb` - Jupyter notebook with some visualization and preprocessing of the Cityscapes dataset. Please see the notebook for the correct placement of the dataset directories.
- `Segmentation_cityscapes.ipynb` - Jupyter notebook with the main code for the Cityscapes dataset.
- `helper_cityscapes.py` - Python program for image pre- and post-processing for the Cityscapes dataset.

Note: The repository does not contain any training images. You have to download the image datasets and place them in the appropriate directories yourself.

A Fully Convolutional Network (the FCN-8 architecture developed at Berkeley, see the paper) was applied for the project. It uses VGG16, pretrained on ImageNet, as an encoder. A decoder upsamples the features extracted by the VGG16 model back to the original image size; the decoder is based on transposed convolution layers.
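The sketch below traces feature-map sizes through an FCN-8 style decoder to show how the skip connections line up. It is plain Python rather than the project's TensorFlow code, and it assumes a hypothetical 160×576 input, the usual VGG16 strides of 8, 16 and 32 for `pool3`, `pool4` and `conv7`, and transposed convolutions with 'same' padding (so each one multiplies the spatial size by its stride).

```python
def fcn8_shapes(height, width):
    """Trace spatial sizes through an FCN-8 decoder (sketch, assumes 'same' padding)."""
    # VGG16 has five 2x poolings, so the input must be divisible by 2**5 = 32
    if height % 32 or width % 32:
        raise ValueError("input size must be divisible by 32")
    # Encoder outputs used by FCN-8 sit at strides 8, 16 and 32
    pool3 = (height // 8, width // 8)
    pool4 = (height // 16, width // 16)
    conv7 = (height // 32, width // 32)

    def upsample(size, stride):
        # A transposed convolution with 'same' padding scales each side by its stride
        return (size[0] * stride, size[1] * stride)

    up1 = upsample(conv7, 2)   # x2, then added to pool4 (first skip connection)
    assert up1 == pool4, "skip connection shapes must match"
    up2 = upsample(up1, 2)     # x2, then added to pool3 (second skip connection)
    assert up2 == pool3, "skip connection shapes must match"
    out = upsample(up2, 8)     # x8 back to the input resolution
    return {"pool3": pool3, "pool4": pool4, "conv7": conv7, "output": out}


print(fcn8_shapes(160, 576))
```

In a full FCN-8, 1×1 convolutions first map each of these feature maps to the number of classes, so the element-wise additions also match in depth.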

The goal is to assign each pixel of the input image to the appropriate class (road, background, etc.). This is a per-pixel classification problem, which is why cross-entropy loss was applied.
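Per-pixel cross-entropy amounts to flattening the prediction map so that every pixel becomes one classification sample. A minimal NumPy sketch, assuming logits of shape (H, W, C) and integer class labels of shape (H, W); it illustrates the loss and is not the notebook's TensorFlow implementation:

```python
import numpy as np


def pixelwise_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over all pixels.

    logits: float array of shape (H, W, C); labels: int class ids of shape (H, W).
    """
    num_classes = logits.shape[-1]
    # Flatten so that every pixel becomes one classification sample
    flat_logits = logits.reshape(-1, num_classes)
    flat_labels = labels.reshape(-1)
    # Numerically stable log-softmax
    shifted = flat_logits - flat_logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Log-probability of the true class for each pixel
    picked = log_probs[np.arange(flat_labels.size), flat_labels]
    return -picked.mean()


logits = np.zeros((2, 3, 2))  # two classes, uninformative predictions
labels = np.array([[0, 1, 1], [0, 0, 1]])
print(pixelwise_cross_entropy(logits, labels))  # log(2), about 0.693
```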

Hyperparameters were chosen by trial and error. The Adam optimizer was used as a well-established choice. Weights were initialized with a random normal initializer. L2 weight regularization showed some benefit in reducing grainy mask edges, so it was applied.
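As a rough illustration of how an L2 penalty enters the objective, the NumPy sketch below adds `scale * sum(w**2) / 2` over all kernels to the data loss (the same convention as TensorFlow's `tf.nn.l2_loss`). The kernel shapes, the initializer's standard deviation, the `scale` value and the data-loss value are all hypothetical placeholders, not the tuned hyperparameters of the project.

```python
import numpy as np


def l2_penalty(weights, scale):
    """L2 weight penalty: scale * sum(w**2) / 2, summed over all kernels."""
    return scale * sum(np.sum(w ** 2) / 2.0 for w in weights)


rng = np.random.default_rng(0)
# Hypothetical decoder kernels drawn from a random normal initializer (stddev is an assumption)
kernels = [
    rng.normal(0.0, 0.01, size=(4, 4, 2, 2)),    # e.g. a stride-2 transposed conv
    rng.normal(0.0, 0.01, size=(16, 16, 2, 2)),  # e.g. a stride-8 transposed conv
]
data_loss = 0.35  # placeholder cross-entropy value
total_loss = data_loss + l2_penalty(kernels, scale=1e-3)
print(total_loss)
```

Larger kernels are pushed toward smaller values, which tends to smooth the decoder output and is one plausible reason for the less grainy mask edges described above.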

Resized input images were also augmented with random contrast and brightness changes (a linear function of the input image). This helps the network produce reasonable predictions in difficult lighting conditions.

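```python
import numpy as np


def bc_img(img, s=1.0, m=0.0):
    """Linear brightness/contrast change: scale by s (contrast), shift by m (brightness)."""
    # Use a float type so intermediate values can leave the 0..255 range
    img = img.astype(np.float32)
    img = img * s + m
    # Saturate to the valid 8-bit range
    img[img > 255] = 255
    img[img < 0] = 0
    return img.astype(np.uint8)
```

Deep shadows and contrast variations are not a problem thanks to the rich augmentation at the training stage.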
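A minimal sketch of how such a linear transform could be applied with random parameters during training. The sampling ranges for `s` and `m` and the function name `random_bc` are assumptions for illustration; the function re-implements the same clip-and-convert logic so that it runs on its own.

```python
import numpy as np


def random_bc(img, rng, s_range=(0.8, 1.2), m_range=(-30.0, 30.0)):
    """Randomly rescale contrast (s) and shift brightness (m) of a uint8 image."""
    s = rng.uniform(*s_range)  # contrast factor (range is a hypothetical choice)
    m = rng.uniform(*m_range)  # brightness offset (range is a hypothetical choice)
    out = img.astype(np.float32) * s + m
    # Clip to the valid 8-bit range and convert back
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(42)
image = rng.integers(0, 256, size=(160, 576, 3)).astype(np.uint8)
augmented = random_bc(image, rng)
print(augmented.shape, augmented.dtype)  # (160, 576, 3) uint8
```

Only the camera image is modified; the label mask stays unchanged, since a brightness or contrast change does not move any pixels.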

Two classes (roads and cars) were chosen from the Cityscapes dataset for the optional task. The classes are unbalanced (roads are prevalent), so a weighted loss function was used (see `Segmentation_cityscapes.ipynb` for details). Interestingly, the RMSProp optimizer performed better on this dataset.
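The notebook's exact weighting is not reproduced here. As a hedged sketch, a weighted cross-entropy scales each pixel's loss by a weight for its true class; inverse class frequency is one common (here hypothetical) way to choose those weights. The class ids 0 = background, 1 = road, 2 = car are also assumptions.

```python
import numpy as np


def weighted_pixel_cross_entropy(logits, labels, class_weights):
    """Softmax cross-entropy where each pixel's loss is scaled by its true class weight."""
    num_classes = logits.shape[-1]
    flat_logits = logits.reshape(-1, num_classes)
    flat_labels = labels.reshape(-1)
    # Numerically stable log-softmax
    shifted = flat_logits - flat_logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    per_pixel = -log_probs[np.arange(flat_labels.size), flat_labels]
    weights = np.asarray(class_weights, dtype=np.float64)[flat_labels]
    # Dividing by the weight sum keeps the loss scale comparable to the unweighted mean
    return np.sum(weights * per_pixel) / np.sum(weights)


def inverse_frequency_weights(labels, num_classes):
    """Hypothetical weighting scheme: rarer classes get proportionally larger weights."""
    counts = np.bincount(labels.reshape(-1), minlength=num_classes).astype(np.float64)
    return counts.sum() / (num_classes * np.maximum(counts, 1.0))


labels = np.array([[1, 1, 1, 1], [1, 1, 2, 0]])  # mostly road, one car, one background pixel
weights = inverse_frequency_weights(labels, num_classes=3)
logits = np.zeros((2, 4, 3))
print(weights)
print(weighted_pixel_cross_entropy(logits, labels, weights))
```

With these weights, a mistake on the rare car pixel costs several times more than a mistake on a road pixel, which counteracts the dominance of road pixels in the average.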

Unfortunately, according to the Cityscapes dataset licence, I cannot publish all of the produced images; however, here are some of them.

It correctly does not label a cyclist as a car, but it does mark small, partly occluded cars.

It has no problem recognizing a cobbled street as a road.

The ANN is also able to mark cars seen from different angles.

References:

- KITTI dataset

- Cityscapes dataset

- FCN ANN

_____________________ Udacity Readme.md ____________________

Make sure you have the following installed:

Download the KITTI Road dataset from here. Extract the dataset into the `data` folder. This will create the folder `data_road` with all the training and test images.
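If you prefer to script the extraction step, the sketch below uses Python's standard `zipfile` module. The function name `extract_kitti_road` and the archive name in the example comment are hypothetical; the check only confirms that a `data_road` folder appeared under `data`.

```python
import zipfile
from pathlib import Path


def extract_kitti_road(archive_path, data_dir="data"):
    """Extract the KITTI Road archive into data_dir and return the data_road folder."""
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive_path) as zf:
        zf.extractall(data_dir)
    road_dir = data_dir / "data_road"
    # The archive is expected to contain a top-level data_road/ directory
    if not road_dir.is_dir():
        raise FileNotFoundError(f"expected {road_dir} after extraction")
    return road_dir


# Example (hypothetical archive name): extract_kitti_road("data_road.zip")
```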

Implement the code in the `main.py` module where indicated by the "TODO" comments.

Comments marked with the "OPTIONAL" tag are not required to complete.

Run the following command to run the project:

```
python main.py
```

Note: If running this in a Jupyter Notebook, system messages, such as those regarding test status, may appear in the terminal rather than the notebook.

- Ensure you've passed all the unit tests.

- Ensure you pass all points on the rubric.

- Submit the following in a zip file:
  - `helper.py`
  - `main.py`
  - `project_tests.py`
  - Newest inference images from the `runs` folder

A well-written README file can enhance your project and portfolio. Develop your abilities to create professional README files by completing this free course.