How can you start talking to your model in the same concepts?

Concept Bottleneck Models (CBMs) assume that training examples (e.g., x-ray images) are annotated with high-level concepts (e.g., types of abnormalities), and perform classification by first predicting the concepts, followed by predicting the label relying on these concepts. The main difficulty in using CBMs comes from having to choose concepts that are predictive of the label and then having to label training examples with these concepts. In our approach, we adopt a more moderate assumption and instead use text descriptions (e.g., radiology reports), accompanying the images in training, to guide the induction of concepts.
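The two-stage prediction described above can be sketched as a toy forward pass. This is only an illustration of the general CBM idea, not the code in this repository: the function names, the sigmoid concept activation, and the hand-picked weights are all assumptions made for the example.

```python
import math


def sigmoid(z):
    """Squash a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def linear(weights, bias, inputs):
    """Dense layer: one output per row of `weights`."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]


def cbm_forward(features, concept_w, concept_b, label_w, label_b):
    """Toy concept bottleneck: features -> concepts -> label."""
    # Stage 1: predict concept activations from the input features.
    concepts = [sigmoid(z) for z in linear(concept_w, concept_b, features)]
    # Stage 2: predict the label from the concepts only (the bottleneck).
    logits = linear(label_w, label_b, concepts)
    label = max(range(len(logits)), key=logits.__getitem__)
    return concepts, label


# Hypothetical weights, chosen by hand for illustration.
features = [1.0, 0.0, 2.0]
concept_w = [[2.0, 0.0, 0.0], [0.0, 0.0, -1.0]]
concept_b = [0.0, 0.0]
label_w = [[1.0, -1.0], [-1.0, 1.0]]
label_b = [0.0, 0.0]

concepts, label = cbm_forward(features, concept_w, concept_b, label_w, label_b)
print("concept activations:", concepts)
print("predicted label:", label)
```

Because the label head sees only the concept activations, inspecting `concepts` shows exactly what information the classifier had access to.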

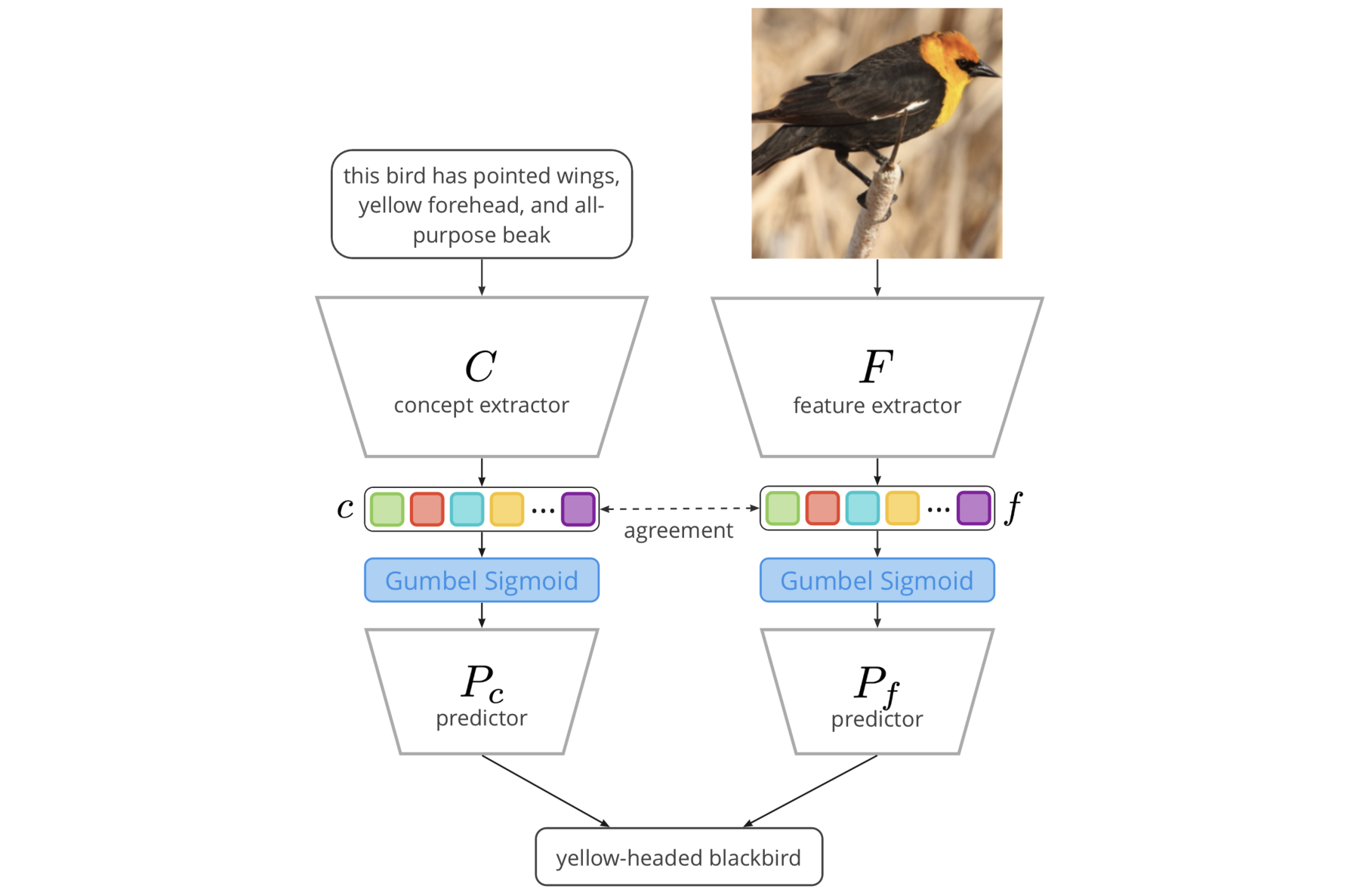

Our cross-modal approach (see above) treats concepts as discrete latent variables and promotes concepts that (1) are predictive of the label, and (2) can be predicted reliably from both the image and text. Through experiments conducted on datasets ranging from synthetic datasets (e.g., synthetic images with generated descriptions) to realistic medical imaging datasets, we demonstrate that cross-modal learning encourages the induction of interpretable concepts while also facilitating disentanglement. Our results also suggest that this guidance leads to increased robustness by suppressing the reliance on shortcut features.

- Set up the conda environment: `make set-up-env`
- Create a `.env` file from `.env.example` (optional, only if you're using Telegram to check pipelines)
- Set up the experiment registry in ClearML: `clearml-init`
- Download data for Shapes, CUB-200, MSCOCO, MIMIC-CXR: `make download_data`
- Pre-process CUB-200: `make preprocess_cub`
- Pre-process MIMIC-CXR: `make preprocess_mimic`
- Now you're ready to run your first experiment! Checkpoints and explanations will appear in the hydra `outputs/` directory (already in `.gitignore`): `python main.py dataset.batch_size=64 seed=42 +experiment={XXX}`
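If you want to repeat a run over several seeds, a small launcher can assemble the same command line. The sketch below is a convenience wrapper written for this README, not part of the repository; `build_command`, `run_seeds`, and the experiment name `E39-SHP` are assumptions (in particular, it is assumed that experiment config names match the IDs in the navigation table). By default it only prints the commands.

```python
import subprocess
import sys


def build_command(experiment, seed, batch_size=64):
    """Assemble the argv for one run, mirroring the command shown above."""
    return [
        sys.executable,
        "main.py",
        f"dataset.batch_size={batch_size}",
        f"seed={seed}",
        f"+experiment={experiment}",
    ]


def run_seeds(experiment, seeds, dry_run=True):
    """Print (and optionally execute) one run per seed."""
    commands = [build_command(experiment, seed) for seed in seeds]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the sweep as soon as one run fails.
            subprocess.run(cmd, check=True)
    return commands


# "E39-SHP" is a hypothetical experiment name used for illustration.
planned = run_seeds("E39-SHP", seeds=[42, 43, 44], dry_run=True)
```

Pass `dry_run=False` from the repository root to actually launch the runs one after another.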

- How can I retrieve explanations?

  Our pipeline saves explanations in `results.json`, which can be found in the experiment folder. For visualization, you can use the `inspect.ipynb` notebook.

- How can I assess my model in terms of DCI?

  Measuring disentanglement, completeness, and informativeness (DCI) has been moved outside the default pipeline and can be performed via the `metrics.ipynb` notebook.
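For a quick look at the saved explanations without opening a notebook, something like the following may help. It is a minimal sketch that assumes `results.json` sits somewhere under the hydra `outputs/` directory; the helper names are hypothetical, and because the schema of `results.json` is not documented here, the script only reports the top-level structure.

```python
import json
from pathlib import Path


def find_latest_results(outputs_dir="outputs"):
    """Return the most recently modified results.json under outputs_dir, or None."""
    root = Path(outputs_dir)
    if not root.is_dir():
        return None
    candidates = sorted(root.rglob("results.json"), key=lambda p: p.stat().st_mtime)
    return candidates[-1] if candidates else None


def load_results(path):
    """Parse a results.json file into Python objects."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


latest = find_latest_results("outputs")
if latest is None:
    print("No results.json found under outputs/")
else:
    results = load_results(latest)
    print(f"Loaded {latest} ({type(results).__name__})")
    if isinstance(results, dict):
        # The schema is not assumed; list the top-level keys only.
        print("Top-level keys:", sorted(results))
```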

The following table helps you navigate all experimental setups. The configuration files are located in this directory. However, some of them are outdated and kept only so that we can revisit our hypotheses later (for clarity, we omit them from the navigation table below). Feel free to reuse our setups and add new ones here.

| Model | EID-DATASET | pretrained | act_fn | norm_fn | slot_norm | dummy_concept | dummy_tokens | reg_dist | tie_loss* |
|---|---|---|---|---|---|---|---|---|---|
| Standard | E35-SHP | ✓ | relu | - | - | - | - | - | - |
| Standard | E36-SHP | ✓ | sigmoid | - | - | - | - | - | - |
| Standard | E36-CUB | ✓ | sigmoid | - | - | - | - | - | - |
| Standard | E36-MIM | ✓ | sigmoid | - | - | - | - | - | - |
| Standard | E36-SHP-NOROBUST | ✓ | sigmoid | - | - | - | - | - | - |
| Standard | E37-SHP | ✓ | gumbel | - | - | - | - | - | - |
| XCBs | E38-SHP | ✓ | sigmoid | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E39-SHP | ✓ | gumbel | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E39-CUB | ✓ | gumbel | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E39-MIM | ✓ | gumbel | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E39-SHP-NOROBUST | ✓ | gumbel | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E40-SHP | ✓ | gumbel | softmax | ✗ | - | - | ✗ | KL( |
| XCBs | E41-SHP | ✓ | gumbel | softmax | ✗ | - | - | ✗ | KL( |
| XCBs | E42-SHP | ✓ | gumbel | entmax | ✗ | - | - | ✗ | JS( |
| XCBs | E43-SHP | ✓ | gumbel | softmax | ✓ | ✓ | ✗ | ✗ | JS( |
| XCBs | E44-SHP | ✓ | gumbel | softmax | ✓ | ✓ | ✓ | ✗ | JS( |
| XCBs | E45-SHP | ✓ | gumbel | entmax | ✓ | ✓ | ✗ | ✗ | JS( |
| XCBs | E46-SHP | ✓ | gumbel | entmax | ✓ | ✓ | ✓ | ✗ | JS( |
| XCBs | E47-SHP | ✓ | gumbel | softmax | ✗ | - | - | ✓ | JS( |
| XCBs | E47-MIM | ✓ | gumbel | softmax | ✗ | - | - | ✓ | JS( |
| XCBs | E47-SHP-NOISE | ✓ | gumbel | softmax | ✗ | - | - | ✓ | JS( |
| XCBs | E47-SHP-REDUNDANCY | ✓ | gumbel | softmax | ✗ | - | - | ✓ | JS( |
| XCBs | E48-SHP | ✓ | gumbel | entmax | ✗ | - | - | ✓ | JS( |
| Standard | E49-SHP | ✗ | relu | - | - | - | - | - | - |
| Standard | E50-SHP | ✗ | sigmoid | - | - | - | - | - | - |
| Standard | E51-SHP | ✗ | gumbel | - | - | - | - | - | - |
| XCBs | E52-SHP | ✗ | sigmoid | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E53-SHP | ✗ | gumbel | softmax | ✗ | - | - | ✗ | JS( |
| XCBs | E54-SHP | ✗ | gumbel | softmax | ✗ | - | - | ✗ | KL( |
| XCBs | E55-SHP | ✗ | gumbel | softmax | ✗ | - | - | ✗ | KL( |
| XCBs | E56-SHP | ✗ | gumbel | entmax | ✗ | - | - | ✗ | JS( |
| XCBs | E57-SHP | ✗ | gumbel | softmax | ✓ | ✓ | ✗ | ✗ | JS( |
| XCBs | E58-SHP | ✗ | gumbel | softmax | ✓ | ✓ | ✓ | ✗ | JS( |
| XCBs | E59-SHP | ✗ | gumbel | entmax | ✓ | ✓ | ✗ | ✗ | JS( |
| XCBs | E60-SHP | ✗ | gumbel | entmax | ✓ | ✓ | ✓ | ✗ | JS( |
| XCBs | E61-SHP | ✗ | gumbel | softmax | ✗ | - | - | ✓ | JS( |
| XCBs | E62-SHP | ✗ | gumbel | entmax | ✗ | - | - | ✓ | JS( |

* KL stands for Kullback–Leibler divergence, JS for Jensen–Shannon divergence.
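For reference, the two divergences named in the `tie_loss` column can be computed for discrete distributions as follows. This is a plain-Python sketch of the textbook definitions, not the loss implementation used in this repository; the idea that the loss compares image-side and text-side concept distributions, and the example values, are assumptions for illustration.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; requires q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: the mean KL of p and q to their mixture m."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical concept distributions predicted from an image and its text.
image_concepts = [0.7, 0.2, 0.1]
text_concepts = [0.5, 0.3, 0.2]

print("KL(image || text):", kl_divergence(image_concepts, text_concepts))
print("KL(text || image):", kl_divergence(text_concepts, image_concepts))
print("JS(image, text):  ", js_divergence(image_concepts, text_concepts))
```

KL is asymmetric, so the order of its arguments matters, whereas JS is symmetric and bounded above by `log 2` when natural logarithms are used.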

Our research paper "Cross-Modal Conceptualization in Bottleneck Models" was published in the proceedings of EMNLP 2023. To cite it, please use the following BibTeX entry:

    @inproceedings{alukaev-etal-2023-cross,
        title = "Cross-Modal Conceptualization in Bottleneck Models",
        author = "Alukaev, Danis and
          Kiselev, Semen and
          Pershin, Ilya and
          Ibragimov, Bulat and
          Ivanov, Vladimir and
          Kornaev, Alexey and
          Titov, Ivan",
        editor = "Bouamor, Houda and
          Pino, Juan and
          Bali, Kalika",
        booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",
        month = dec,
        year = "2023",
        address = "Singapore",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2023.emnlp-main.318",
        pages = "5241--5253",
        abstract = "Concept Bottleneck Models (CBMs) assume that training examples (e.g., x-ray images) are annotated with high-level concepts (e.g., types of abnormalities), and perform classification by first predicting the concepts, followed by predicting the label relying on these concepts. However, the primary challenge in employing CBMs lies in the requirement of defining concepts predictive of the label and annotating training examples with these concepts. In our approach, we adopt a more moderate assumption and instead use text descriptions (e.g., radiology reports), accompanying the images, to guide the induction of concepts. Our crossmodal approach treats concepts as discrete latent variables and promotes concepts that (1) are predictive of the label, and (2) can be predicted reliably from both the image and text. Through experiments conducted on datasets ranging from synthetic datasets (e.g., synthetic images with generated descriptions) to realistic medical imaging datasets, we demonstrate that crossmodal learning encourages the induction of interpretable concepts while also facilitating disentanglement.",
    }