Place and animate 3D content that follows the user's face and matches facial expressions, using the TrueDepth camera on iPhone X.

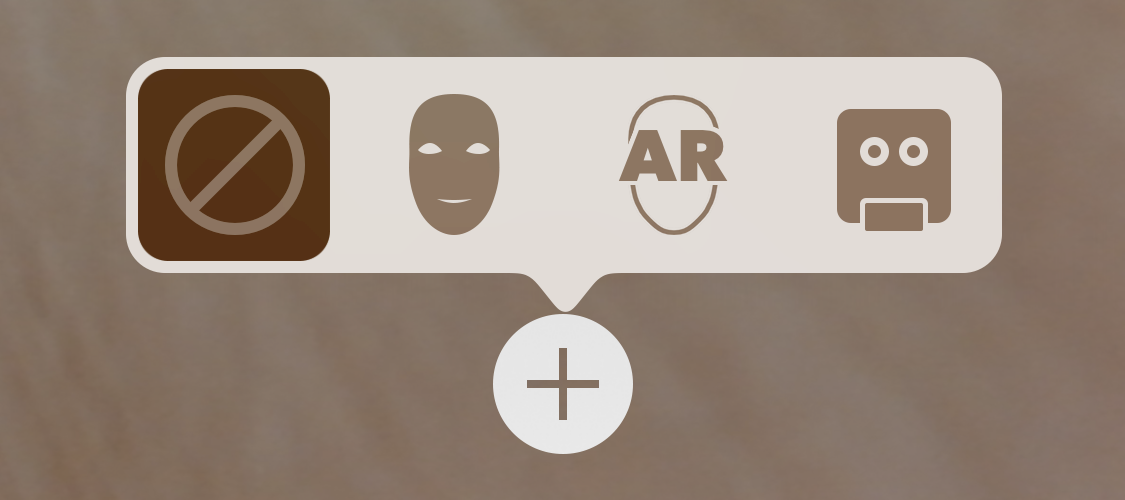

This sample app presents a simple interface allowing you to choose between four augmented reality (AR) visualizations on devices with a TrueDepth front-facing camera (see iOS Device Compatibility Reference).

- The camera view alone, without any AR content.

- The face mesh provided by ARKit, with automatic estimation of the real-world directional lighting environment.

- Virtual 3D content that appears to attach to (and be obscured by parts of) the user's real face.

- A simple robot character whose facial expression is animated to match that of the user.

Use the "+" button in the sample app to switch between these modes, as shown below.

ARKit face tracking requires iOS 11 and an iOS device with a TrueDepth front-facing camera (see iOS Device Compatibility Reference). ARKit is not available in iOS Simulator.

Like other uses of ARKit, face tracking requires configuring and running a session (an ARSession object) and rendering the camera image together with virtual content in a view. For more detailed explanations of session and view setup, see About Augmented Reality and ARKit and Building Your First AR Experience. This sample uses SceneKit to display an AR experience, but you can also use SpriteKit or build your own renderer using Metal (see ARSKView and Displaying an AR Experience with Metal).

Face tracking differs from other uses of ARKit in the class you use to configure the session. To enable face tracking, create an instance of ARFaceTrackingConfiguration, configure its properties, and pass it to the run(_:options:) method of the AR session associated with your view, as shown below.

guard ARFaceTrackingConfiguration.isSupported else { return }

let configuration = ARFaceTrackingConfiguration()

configuration.isLightEstimationEnabled = true

session.run(configuration, options: [.resetTracking, .removeExistingAnchors])

Before offering your user features that require a face tracking AR session, check the ARFaceTrackingConfiguration.isSupported property to determine whether the current device supports ARKit face tracking.
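One way to apply this check is to gate the entry point in your UI. The following is a minimal sketch and not part of the sample: `configureFaceFeatureButton` and its `button` parameter are hypothetical names, and a real app might prefer to explain why the feature is unavailable rather than hide the control.

```swift
import ARKit
import UIKit

/// Hypothetical helper (not in the sample): shows the control that launches a
/// face tracking feature only on devices where ARKit face tracking is supported.
func configureFaceFeatureButton(_ button: UIButton) {
    let isSupported = ARFaceTrackingConfiguration.isSupported
    button.isHidden = !isSupported
    button.isEnabled = isSupported
}
```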

When face tracking is active, ARKit automatically adds ARFaceAnchor objects to the running AR session, containing information about the user's face, including its position and orientation.

- Note: ARKit detects and provides information about only one user's face. If multiple faces are present in the camera image, ARKit chooses the largest or most clearly recognizable face.
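A face anchor exposes its position and orientation through the `transform` property it inherits from `ARAnchor`, a 4x4 matrix whose fourth column holds the translation. As a small sketch (the `position(of:)` helper is a hypothetical name, not part of ARKit or the sample), you could extract the position like this:

```swift
import simd

/// Hypothetical helper: returns the translation component of an anchor
/// transform such as `ARFaceAnchor.transform`.
func position(of transform: simd_float4x4) -> SIMD3<Float> {
    let translation = transform.columns.3
    return SIMD3<Float>(translation.x, translation.y, translation.z)
}
```

For example, `position(of: faceAnchor.transform)` would give the anchor's position in the session's world coordinate space, in meters.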

In a SceneKit-based AR experience, you can add 3D content corresponding to a face anchor in the renderer(_:didAdd:for:) method (from the ARSCNViewDelegate protocol). ARKit adds a SceneKit node for the anchor, and updates that node's position and orientation on each frame, so any SceneKit content you add to that node automatically follows the position and orientation of the user's face.

func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {

// Hold onto the `faceNode` so that the session does not need to be restarted when switching masks.

faceNode = node

serialQueue.async {

self.setupFaceNodeContent()

}

}

In this example, the renderer(_:didAdd:for:) method calls the setupFaceNodeContent method to add SceneKit content to the faceNode. For example, if you change the showsCoordinateOrigin variable in the sample code, the app adds a visualization of the x/y/z axes to the node, indicating the origin of the face anchor's coordinate system.
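To illustrate the kind of content such a setup method might add, here is a rough sketch of an axes visualization built from SceneKit primitives. It is not the sample's implementation: `makeAxesNode`, its parameters, and the color choices are assumptions made for this example.

```swift
import SceneKit
import UIKit

/// Hypothetical helper (not the sample's implementation): builds a node with
/// three thin boxes centered on the local origin, colored red, green, and blue
/// for the x, y, and z axes.
func makeAxesNode(length: CGFloat = 0.1, thickness: CGFloat = 0.002) -> SCNNode {
    let axesNode = SCNNode()
    let axes: [(width: CGFloat, height: CGFloat, depth: CGFloat, color: UIColor)] = [
        (length, thickness, thickness, .red),    // x axis
        (thickness, length, thickness, .green),  // y axis
        (thickness, thickness, length, .blue)    // z axis
    ]
    for axis in axes {
        // SCNBox measures width along x, height along y, and length along z.
        let box = SCNBox(width: axis.width, height: axis.height,
                         length: axis.depth, chamferRadius: 0)
        box.firstMaterial?.diffuse.contents = axis.color
        axesNode.addChildNode(SCNNode(geometry: box))
    }
    return axesNode
}
```

Inside `renderer(_:didAdd:for:)`, calling `node.addChildNode(makeAxesNode())` would attach the axes to the face anchor's node, so they move with the face.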

ARKit provides a coarse 3D mesh geometry matching the size, shape, topology, and current facial expression of the user's face. ARKit also provides the ARSCNFaceGeometry class, offering an easy way to visualize this mesh in SceneKit.

Your AR experience can use this mesh to place or draw content that appears to attach to the face. For example, by applying a semitransparent texture to this geometry you could paint virtual tattoos or makeup onto the user's skin.

To create a SceneKit face geometry, initialize an ARSCNFaceGeometry object with the Metal device your SceneKit view uses for rendering:

// This relies on the earlier check of `ARFaceTrackingConfiguration.isSupported`.

let device = sceneView.device!

let maskGeometry = ARSCNFaceGeometry(device: device)!

The sample code's setupFaceNodeContent method (mentioned above) adds a node containing the face geometry to the scene. By making that node a child of the node provided by the face anchor, the face model automatically tracks the position and orientation of the user's face.
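The parent-child relationship described above could be sketched as follows. The `attachFaceMesh(to:device:)` helper is a hypothetical name, and the wireframe fill mode is an illustrative choice rather than what the sample necessarily does. Because `ARSCNFaceGeometry(device:)` is a failable initializer, this version returns `nil` instead of force-unwrapping.

```swift
import ARKit
import Metal
import SceneKit

/// Hypothetical helper: creates a wireframe face mesh node and attaches it to
/// the node ARKit provides for the face anchor. Returns nil when the face
/// geometry cannot be created.
func attachFaceMesh(to anchorNode: SCNNode, device: MTLDevice) -> SCNNode? {
    guard let faceGeometry = ARSCNFaceGeometry(device: device) else { return nil }
    // Draw the mesh as lines so the camera image remains visible through it.
    faceGeometry.firstMaterial?.fillMode = .lines
    let meshNode = SCNNode(geometry: faceGeometry)
    // As a child of the anchor's node, the mesh inherits the face's transform.
    anchorNode.addChildNode(meshNode)
    return meshNode
}
```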

To also make the face model onscreen conform to the shape of the user's face, even as the user blinks, talks, and makes various facial expressions, you need to retrieve an updated face mesh in the renderer(_:didUpdate:for:) delegate callback.

func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {

guard let faceAnchor = anchor as? ARFaceAnchor else { return }

virtualFaceNode?.update(withFaceAnchor: faceAnchor)

}

Then, update the ARSCNFaceGeometry object in your scene to match by passing the new face mesh to its update(from:) method:

func update(withFaceAnchor anchor: ARFaceAnchor) {

let faceGeometry = geometry as! ARSCNFaceGeometry

faceGeometry.update(from: anchor.geometry)

}

Another use of the face mesh that ARKit provides is to create occlusion geometry in your scene. An occlusion geometry is a 3D model that doesn't render any visible content (allowing the camera image to show through), but obstructs the camera's view of other virtual content in the scene.

This technique creates the illusion that the real face interacts with virtual objects, even though the face is a 2D camera image and the virtual content is a rendered 3D object. For example, if you place an occlusion geometry and virtual glasses on the user's face, the face can obscure the frame of the glasses.

To create an occlusion geometry for the face, start by creating an ARSCNFaceGeometry object as in the previous example. However, instead of configuring that object's SceneKit material with a visible appearance, set the material to render depth but not color during rendering:

geometry.firstMaterial!.colorBufferWriteMask = []

occlusionNode = SCNNode(geometry: geometry)

occlusionNode.renderingOrder = -1

Because the material renders depth, other objects rendered by SceneKit correctly appear in front of it or behind it. But because the material doesn't render color, the camera image appears in its place. The sample app combines this technique with a SceneKit object positioned in front of the user's eyes, creating an effect where the object is realistically obscured by the user's nose.
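Packaged as a standalone function, the occlusion setup might look like the sketch below. `makeOcclusionNode(device:)` is a hypothetical name, and this version uses optional chaining in place of the force unwraps shown above.

```swift
import ARKit
import Metal
import SceneKit

/// Hypothetical helper: builds a node that writes depth but no color, so the
/// camera image shows through while virtual content behind it is hidden.
func makeOcclusionNode(device: MTLDevice) -> SCNNode? {
    guard let geometry = ARSCNFaceGeometry(device: device) else { return nil }
    // An empty write mask disables color output for this material.
    geometry.firstMaterial?.colorBufferWriteMask = []
    let node = SCNNode(geometry: geometry)
    // Render before other content so its depth values are in place first.
    node.renderingOrder = -1
    return node
}
```

For the occlusion to follow changing expressions, you would likely also pass each updated face mesh to this geometry's `update(from:)` method, as in the earlier face mesh example.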

In addition to the face mesh shown in the above examples, ARKit also provides a more abstract model of the user's facial expressions in the form of a blendShapes dictionary. You can use the named coefficient values in this dictionary to control the animation parameters of your own 2D or 3D assets, creating a character (such as an avatar or puppet) that follows the user's real facial movements and expressions.

As a basic demonstration of blend shape animation, this sample includes a simple model of a robot character's head, created using SceneKit primitive shapes. (See the robotHead.scn file in the source code.)

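To get the user's current facial expression, read the blendShapes dictionary from the face anchor in the renderer(_:didUpdate:for:) delegate callback. In this sample, the callback shown earlier forwards the anchor to an update(withFaceAnchor:) method, which stores the dictionary: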

func update(withFaceAnchor faceAnchor: ARFaceAnchor) {

blendShapes = faceAnchor.blendShapes

}
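ARKit declares the `blendShapes` dictionary with `NSNumber` values, each a coefficient from 0.0 (neutral) to 1.0 (maximum movement). As a small sketch of reading coefficients directly, the hypothetical `smileAmount(in:)` helper below (not part of the sample) averages the two smile coefficients and treats missing entries as neutral.

```swift
import ARKit

/// Hypothetical helper: averages the left and right smile coefficients,
/// treating a missing entry as 0 (neutral).
func smileAmount(in blendShapes: [ARFaceAnchor.BlendShapeLocation: NSNumber]) -> Float {
    let left = blendShapes[.mouthSmileLeft]?.floatValue ?? 0
    let right = blendShapes[.mouthSmileRight]?.floatValue ?? 0
    return (left + right) / 2
}
```

You could call it as `smileAmount(in: faceAnchor.blendShapes)` from the update method.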

Then, examine the key-value pairs in that dictionary to calculate animation parameters for your model. There are 52 unique ARFaceAnchor.BlendShapeLocation coefficients. Your app can use as few or as many of them as necessary to create the artistic effect you want. In this sample, the RobotHead class performs this calculation, mapping the eyeBlinkLeft and eyeBlinkRight parameters to one axis of the scale factor of the robot's eyes, and the jawOpen parameter to offset the position of the robot's jaw.

var blendShapes: [ARFaceAnchor.BlendShapeLocation: Any] = [:] {

didSet {

guard let eyeBlinkLeft = blendShapes[.eyeBlinkLeft] as? Float,

let eyeBlinkRight = blendShapes[.eyeBlinkRight] as? Float,

let jawOpen = blendShapes[.jawOpen] as? Float

else { return }

eyeLeftNode.scale.z = 1 - eyeBlinkLeft

eyeRightNode.scale.z = 1 - eyeBlinkRight

jawNode.position.y = originalJawY - jawHeight * jawOpen

}

}
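The same mapping can be separated from SceneKit so that it is easy to test in isolation. The sketch below is an assumption-laden variant, not the sample's code: `RobotPose` and `robotPose(eyeBlinkLeft:eyeBlinkRight:jawOpen:jawHeight:)` are hypothetical names, and the clamping to 0...1 is an added safeguard.

```swift
/// Hypothetical value type describing the robot's animated parameters.
struct RobotPose: Equatable {
    var leftEyeScaleZ: Float
    var rightEyeScaleZ: Float
    var jawOffsetY: Float
}

/// Maps blend shape coefficients to pose parameters, clamping each
/// coefficient to 0...1 so out-of-range input cannot invert the eyes.
func robotPose(eyeBlinkLeft: Float, eyeBlinkRight: Float,
               jawOpen: Float, jawHeight: Float) -> RobotPose {
    func clamped(_ value: Float) -> Float {
        return min(max(value, 0), 1)
    }
    return RobotPose(
        leftEyeScaleZ: 1 - clamped(eyeBlinkLeft),    // fully closed eye scales to 0
        rightEyeScaleZ: 1 - clamped(eyeBlinkRight),
        jawOffsetY: -jawHeight * clamped(jawOpen)    // open jaw moves downward
    )
}
```

The result could then be applied to the nodes, for example `eyeLeftNode.scale.z = pose.leftEyeScaleZ` and `jawNode.position.y = originalJawY + pose.jawOffsetY`.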