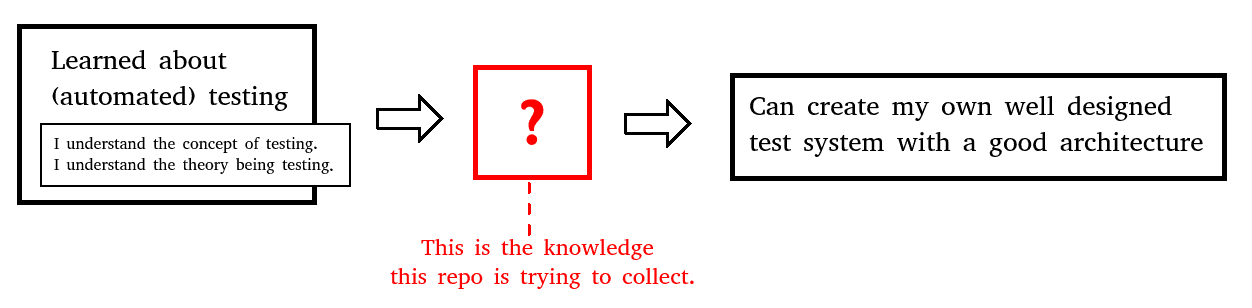

This page / repo is meant to be a collection of practical, advanced topics on automated testing: something like a follow-up guide for readers who are already familiar with the basic concepts (much like having read a programming book and now needing to learn about software architecture). See also this question on Stackexchange.

I have been searching for a couple of years now, but could not find much consolidated, useful information that bridges the gap between "I understand the concept of automated testing" and "I can confidently write a well-designed test system". This collection should help me (and hopefully others) get there.

I don't mean to infringe anyone's copyright with the offline versions. They are there to guard against dead links in the future. If you feel your rights are infringed, please contact me.

The following are categories / properties a test should express. The big question is: How?

- Functional dependency

- Test level:

- non-ui / ui tests

- unit test / integration test / system test

- Test runtime

- Test environment dependency (stand alone test vs. "I need a DB")

- Test case naming: short version vs. step-by-step

There are various kinds of tests, each with its own width and depth. For example, you have unit tests, integration tests, system tests, acceptance tests and so on. How would you manage them? How would you make them visible? How would you keep track of everything? How would you keep track of what you've already tested and what you haven't?

And +α: How do you go about that topic when some of the tests are automated tests and some are manual tests?

For example, what tools would you use? How would you structure the project and/or tests?

Background: In commercial development, you don't necessarily care whether your tests succeed or fail. You care about whether the requirements are met.

Why not BDD?

BDD uses concrete use cases, but a real spec is mostly abstract. So you end up with the same problem: You need to map the concrete use cases to the abstract spec.

I.e. test2 makes use of functionality that test1 tests exhaustively. How do I express that in a test? Background: If test1 and test2 both fail, it should be clear that test1 is the one to check for an error first.

E.g. there are test_show_error_message and test_software_starts_up. test_software_starts_up is the test with higher priority and, if anything fails, should be looked at first. How do I express that?
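One possible way to make this explicit is to keep a dependency map next to the tests and use it when reading a failure report. The following is only a sketch under assumptions: the `DEPENDS_ON` map and the `root_failures` helper are hypothetical names, not part of any test framework, and only direct dependencies are considered.

```python
# Hypothetical dependency map: test name -> tests whose functionality it relies on.
DEPENDS_ON = {
    "test_show_error_message": ["test_software_starts_up"],
    "test_software_starts_up": [],
}


def root_failures(failed, depends_on):
    """Return the failed tests that do not directly depend on another failed test.

    These are the failures worth looking at first; the remaining failures
    may just be consequences of them.
    """
    failed = set(failed)
    roots = []
    for name in sorted(failed):
        deps = depends_on.get(name, [])
        if not any(dep in failed for dep in deps):
            roots.append(name)
    return roots
```

If both tests fail, `root_failures` reports only `test_software_starts_up`; if only `test_show_error_message` fails, it reports that test itself.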

- How do I express that tests take only a short time or a long time to run?

- How do I express that tests have external dependencies?
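One way to express both properties, sketched here with Python's standard `unittest` module, is to tag tests with skip decorators that are driven by the environment. The environment variable names `RUN_SLOW_TESTS` and `TEST_DB_URL`, as well as the `slow` and `needs_db` decorators, are assumptions made up for this example.

```python
import os
import unittest

# Assumed conventions for this sketch; neither variable is a standard.
RUN_SLOW = os.environ.get("RUN_SLOW_TESTS") == "1"
DB_URL = os.environ.get("TEST_DB_URL")

# Reusable markers built from unittest's own skip decorator.
slow = unittest.skipUnless(RUN_SLOW, "slow test; set RUN_SLOW_TESTS=1 to run")
needs_db = unittest.skipUnless(DB_URL, "needs a database; set TEST_DB_URL")


class ExampleTests(unittest.TestCase):
    def test_fast_and_standalone(self):
        self.assertEqual(1 + 1, 2)

    @slow
    def test_long_running(self):
        self.assertEqual(sum(range(1_000_000)), 499999500000)

    @needs_db
    def test_requires_database(self):
        # Only runs when a database URL was provided.
        self.assertTrue(DB_URL)


def run_examples():
    """Run the example tests and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ExampleTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

The markers make the property visible at the test definition, and skipped tests still show up in the report with their reason, so it is harder to forget that they exist.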

Unit tests, integration tests, system tests, ... Do I have a completely separate test system for each of these? If yes, how do I make sure not to forget to run each of them after a change?

Example:

Show error on button press

Pros:

- Easy to find; easy to talk about; most test systems are designed for this naming convention.

Cons:

- Setup is not described: What happened before the button press?

- What should happen after? If tests are essentially the spec, then it's important to ask what should happen after the error was shown? Expressing it with this short style is very difficult.

Example:

Open main screen, enter wrong login information, click login button => error shown

Pros:

- The test case becomes very clear

- New bugs are easily analyzable, because they also describe a list of steps taken

Cons:

- Difficult to talk about the test

- Test identifiers become long

- branching becomes cumbersome; related tests might end up far away.

Notes: This naming pattern goes well with hierarchical tests, where edges contain actions and leaves contain checks.

- Open main screen

  - => main screen is shown

  - => login information enterable

  - enter wrong login information

    - => login information is entered

    - click login button

      - => error shown

      - => opacity of other components is lower
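The hierarchy above could be written down as a small tree that a runner walks, applying actions on the way down and evaluating checks at each node. This is a minimal sketch: the application is faked with a plain dictionary, and all function names, state keys and the tree layout are assumptions for illustration only.

```python
def open_main_screen(state):
    state["screen"] = "main"
    state["login_enabled"] = True
    return state


def enter_wrong_login(state):
    state["login"] = ("user", "wrong-password")
    return state


def click_login(state):
    # The fake app accepts only one hard-coded credential pair.
    state["error_shown"] = state.get("login") != ("user", "secret")
    state["others_opacity"] = 0.5 if state["error_shown"] else 1.0
    return state


# Each node holds checks (leaves) and actions (edges leading to child nodes).
TREE = {"actions": {"open main screen": (open_main_screen, {
    "checks": {
        "main screen is shown": lambda s: s["screen"] == "main",
        "login information enterable": lambda s: s["login_enabled"],
    },
    "actions": {"enter wrong login information": (enter_wrong_login, {
        "checks": {"login information is entered": lambda s: "login" in s},
        "actions": {"click login button": (click_login, {
            "checks": {
                "error shown": lambda s: s["error_shown"],
                "opacity of other components is lower":
                    lambda s: s["others_opacity"] < 1.0,
            },
        })},
    })},
})}}


def walk(node, state, path=()):
    """Yield (path, check_name, passed) for every check in the tree."""
    for name, check in node.get("checks", {}).items():
        yield path, name, bool(check(state))
    for action_name, (action, child) in node.get("actions", {}).items():
        # Copy the state so that sibling branches stay independent.
        yield from walk(child, action(dict(state)), path + (action_name,))


results = list(walk(TREE, {}))
```

Each result carries the full list of steps that led to the check, so a failing check comes with its reproduction path, while related checks stay close together in the tree.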

E.g. I want to test an add(a,b) function: do I write many separate tests with rather silly names like test_test_lower_bound, which makes it hard to keep an overview, or do I use some kind of CSV table to feed in data? Pros? Cons?
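As a sketch of the table-driven variant, Python's standard `unittest` offers `subTest`, which reports every row of a data table separately while keeping a single test method. The `add` function and the `CASES` table below are placeholders; the rows could just as well be read from a CSV file.

```python
import unittest


def add(a, b):
    """Placeholder for the function under test."""
    return a + b


# (label, a, b, expected): one row per case instead of one test per case.
CASES = [
    ("zeros", 0, 0, 0),
    ("positive", 2, 3, 5),
    ("negative", -2, -3, -5),
    ("mixed signs", -2, 3, 1),
]


class AddTests(unittest.TestCase):
    def test_add_table(self):
        for label, a, b, expected in CASES:
            # subTest keeps going after a failing row and names it in the report.
            with self.subTest(case=label, a=a, b=b):
                self.assertEqual(add(a, b), expected)


def run_add_tests():
    """Run the table-driven test and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

A possible trade-off: the table is easy to scan and extend, but individual cases no longer have their own test identifier, which makes them harder to refer to in conversation or to run in isolation.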

Question

Do I build up and destroy a database server every time a test that needs the database starts? Do I use one huge test database containing all test data for all tests, and use transactions and rollbacks? What if I test code that commits a transaction? What if I'm working with a MySQL database?

Answer

Regarding DB construction:

- You can build and destroy the whole database every time (this will probably be very slow, but it does the job)

- If the DB supports it, you might use transactions or "sessions" that you roll back after each test. However, this is highly DBMS-dependent, and you might run into problems in specific edge cases (e.g. not many DBMSs support transactions inside transactions inside transactions ...)
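The rollback approach can be sketched with Python's built-in `sqlite3` module and an in-memory database. This is only an illustration under assumptions: the `users` table is made up, and the example relies on `sqlite3` opening a transaction implicitly before an `INSERT`, which is the module's default behaviour. Other DBMSs and drivers may behave differently.

```python
import sqlite3
import unittest


class UserTableTests(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # One shared in-memory database for the whole test class.
        cls.conn = sqlite3.connect(":memory:")
        cls.conn.execute("CREATE TABLE users (name TEXT NOT NULL)")
        cls.conn.commit()

    @classmethod
    def tearDownClass(cls):
        cls.conn.close()

    def tearDown(self):
        # Undo whatever the test wrote, so the next test sees a clean table.
        self.conn.rollback()

    def count(self):
        return self.conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]

    def test_insert_one(self):
        self.conn.execute("INSERT INTO users VALUES ('alice')")
        self.assertEqual(self.count(), 1)

    def test_table_starts_empty(self):
        # Runs after test_insert_one (alphabetical order) and still sees no rows.
        self.assertEqual(self.count(), 0)


def run_db_tests():
    """Run the database tests and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(UserTableTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Note that this only works as long as the code under test does not commit on its own; once it does, the rollback in `tearDown` has nothing left to undo.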

As for the data to set up, one possibility is to prepare a set of convenience functions that create a certain test environment. But caution: don't overdo it. Otherwise you might end up with a convenience function that you can no longer change, because too many tests depend on it.

- Testing static values (most basic form: `assert_equal(foo(5), output=25)`)

- Fuzz Testing: Basically brute force testing offline | online
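To contrast the two styles, here is a minimal fuzzing sketch using only the standard `random` module. It assumes that `foo` squares its input (suggested by `foo(5)` giving 25, but not stated anywhere), and the checked properties are chosen for illustration only.

```python
import random


def foo(x):
    """Assumed implementation: squares its input, so foo(5) == 25."""
    return x * x


def fuzz_foo(runs=1000, seed=0):
    """Feed random integers into foo and check general properties of the result."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    for _ in range(runs):
        x = rng.randint(-10**6, 10**6)
        result = foo(x)
        assert result >= 0, f"foo({x}) returned a negative value"
        assert result == foo(-x), f"foo({x}) differs from foo({-x})"
    return runs
```

Unlike a static-value test, the fuzz loop does not know the expected output for each input; it can only check properties that must hold for every input.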

The following list links to various topics.

- Robert Nystrom - Game Programming Patterns: How a test suite should look (don't remember concrete chapters) online

- Justin Searls - How to stop hating your test suite youtube

- About What kind of tests to write/ to not write: offline | online

- More scientific approach on what tests to automate youtube

- Where to start in a system without any tests (SE question)

- About overlapping tests:

- Martin Fowler - Testing asynchronous Javascript online

- Martin Fowler - In Memory Test Database online

- Martin Fowler - GUI Tests: The Page Object online

- Martin Fowler - Self Testing Code online

- Anti Patterns in Test Automation online

- Unit testing anti-patterns catalog online

- Second Class Citizen (= no good architecture for test code)

- The Free Ride (= add test cases to existing tests)

- Happy Path (= Don't test critical behavior)

- Local Hero (= Test depends on something specific of the development environment)

- Hidden Dependency (= Test depends on something undocumented)

- Chain Gang (= Tests that must run in a certain order)

- The Mockery (= Too many mocks; Test mock object instead of domain code)

- The Silent Catcher (= Test if an exception is thrown; but the thrown exception was an unexpected one)

- The Inspector (= A unit test that violates encapsulation in an effort to achieve 100% code coverage)

- Excessive Setup (= Test that requires a huge setup)

- Anal Probe (= A test which has to use insane, illegal or otherwise unhealthy ways to perform its task)

- The Test With No Name (= E.g. `testForBUG123`)

- Butterfly (= Test with data that changes all the time (like current date/time))

- Flickering Test (= Test which occasionally fails, not at specific times; is generally due to race conditions within the test)

- Wait and See (= Setup, `sleep` a specific amount of time, check)

- Inappropriately Shared Fixture (= Several test cases in the test fixture do not even use or need the setup / teardown)

- The Giant (= Test that, although it is validly testing the object under test, can span thousands of lines and contain many many test cases)

- I'll believe it when I see some flashing GUIs (= fixation/obsession with testing the app via its GUI 'just like a real user')

- Wet Floor (= Don't clean up persisted data after test)

- Cuckoo (= A unit test which sits in a test case with several others, and enjoys the same (potentially lengthy) setup process as the other tests in the test case, but then discards some or all of the artifacts from the setup and creates its own.)

- The Secret Catcher (= Test that appears to be doing no testing, due to absence of assertions. The test is really relying on an exception to be thrown and expecting the testing framework to capture the exception and report it to the user as a failure)

- The Conjoined Twins (= Integration tests that are called unit tests)

- Martin Fowler on testing online

- Specification By Example online

- Testing Strategies in a Microservice Architecture online

- The Practical Test Pyramid online

- Is TDD dead online

- Eradicating Non-Determinism in Tests online

- Mocks Aren't Stubs online

- Continuous Delivery online

- QA in Production online

- Test Impact analysis online

- Database And Build Time online

- Software that isn't testable online

- Exploratory Testing online

- Humble Objects online

- Self Initializing Fake online

- Testing strategy: Synthetic Monitoring online

- Martin Fowler on Test Categories online

- Different perspective of developer and tester Stackexchange link

- Wikipedia: List of GUI testing tools