"Brain" games in rust

A simple tic tac toe bot. The program that trains two "dumb" bots over 1,000,000 games, one playing Xs and one playing Os. What you should observe is that the bots will begin to tie the further along they get until neither bot can gain an advantage.

The training portion of the program is written in rust and compiled with wasm-pack. Those output files are loading via a Web Worker to keep the training loop off of the main thread.

Running in browser

./build_wasm.shThen point a local server to www and play right away or train first. The wasm specific code is in "lib" and the standard rust program starts in "main" (will be updated soon to play and train).

Running native

Will run one single game (for now)

cargo run --releaseNOTE

currently only works in chrome or chromium since I am using the "module" type of web worker. Will work fine in others with some bundling.

Explanation:

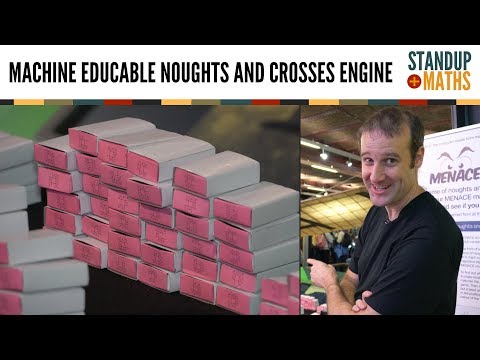

The implementation here isn't machine learning as we would think about it with neural networks and the like. It is very brute force and isn't something that would scale much past something like "tic tac toe" since it requires the bot to be aware of all potential game states. This bot is an emulation of the match box "computer" described in the video below. A fun exercise and nothing more.

Observations

When training the bot the first time (1000000) games. You will generally end up with ~65000 wins for Xs and ~35000 for Os with the rest being ties. If you train them a seecond time those numbers drop to just a couple hundred each and eventually trailing off with neither X or O being able to win any games. Since the decisions are "random" there is still a chance that either bot could loose a game but it is very unlikely.

The bot CAN be tricked by playing odd or obviously bad moves. At the moment the strategy for NOT LOOSING to the bot. When it one move away from winning, dont' block. It won't be as used to seeing that sort of play and kinda panics and there is a chance it will place elsewhere.

By tweaking a few parameters and updating a bit of the bot's "learn" function we can change how we teach the bot the game. For example, in tic tac toe, if two players play optimal games, the game will always end in a tie. If ending in a tie is ultimately the end result, what would happen if you told the bot that a tie was as good as a win? Is there a measureably difference between a bot that is taught to win vs a bot that is taught not to loose?

The starting value for each potential move impacts the numbers above significantly and affects how well the bot will play against a person. If the starting number is to low (3) then it is possible for a player to throw non obvious moves at the bot and it will fail to play optimally. If that number is to high (100) then it will take significantly MORE games then 1000000 to teach the bot to play well. The current values sits at 10.

The bots don't care how many moves it takes to win. They will sometimes block instead of going for the win but if and only if they will win at the end. A win is a win.

Ex. (Bot is O)

Given this game, the bot is just as likely to take index 1 or 7 as index 0. The end result is the same but the bot "plays with" it's oponent. Could be improved by boosting winning moves or even clearing out other options if a winning move is available. A potential downside of vastly upping winning moves is that the oponent bot would see variations less often and therefore be open to being tripped up by suboptimal moves.

| X | ||

| X | O | X |

| O |

this move:

| O | X | |

| X | O | X |

| O |

is seen to be just as good as this one:

| O | X | |

| X | O | X |

| O |

To the bot both moves are equally good since they both lead to a winning result.