BrowserClient

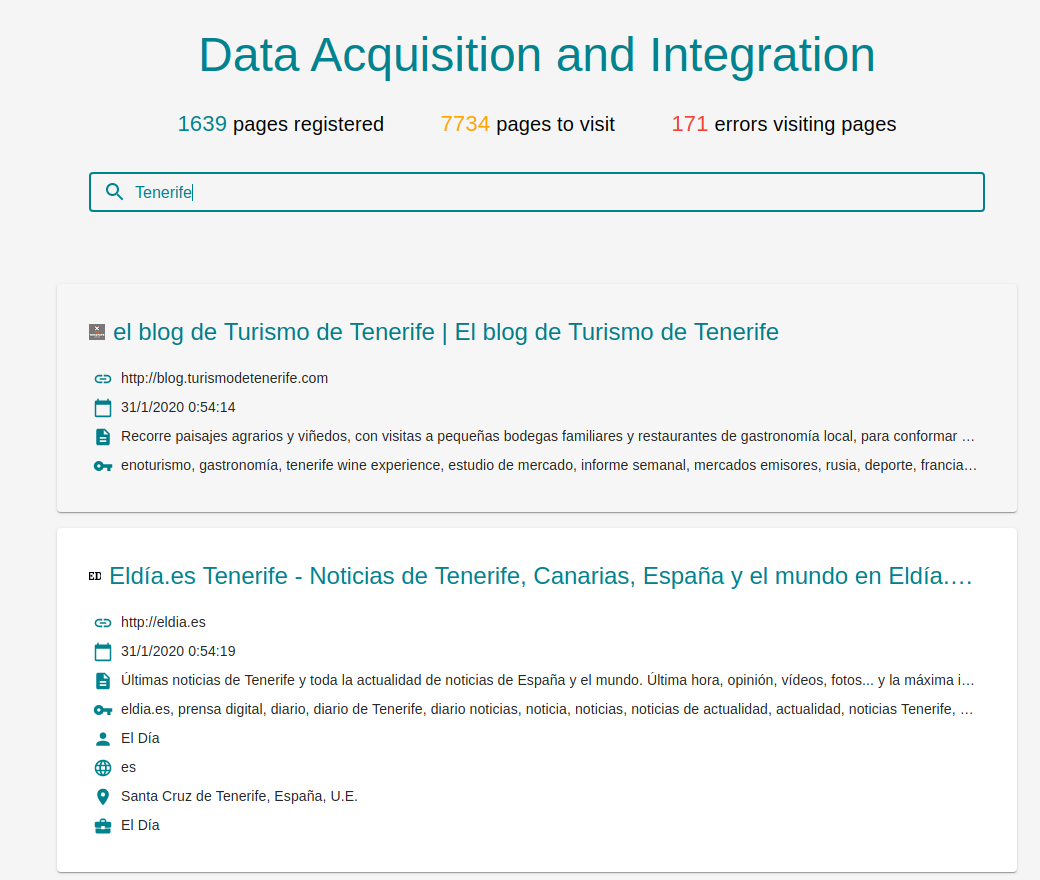

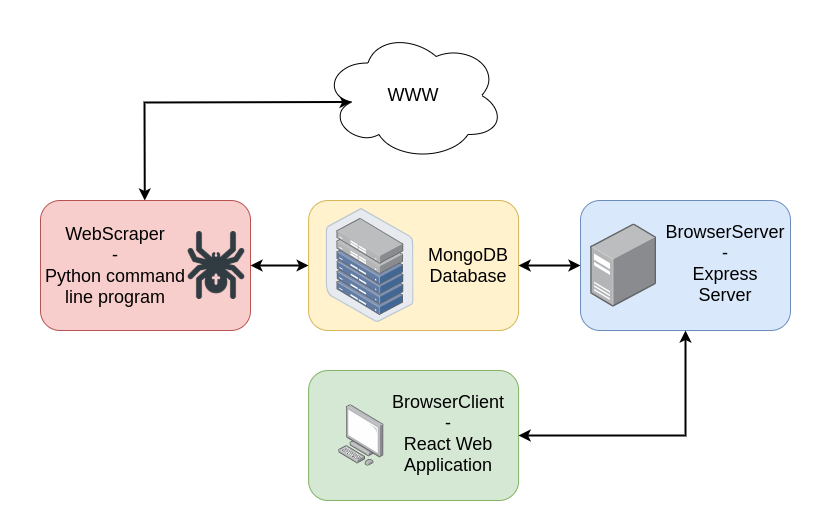

BrowserClient is a React application that lets users search information about webpages. Each time the user modifies the value in the search bar, the search is sent to BrowserServer's /search endpoint. BrowserClient also sends periodic GET requests to BrowserServer's /ping endpoint to obtain the number of documents stored in each database collection. The information about the webpages is retrieved using WebScraper. BrowserClient was created as part of the final project for the Data Acquisition and Integration subject.
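The request pattern described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the server address `http://localhost:8080`, the `q` query parameter, and the helper names `buildSearchUrl` and `startPing` are assumptions. Only the `/search` and `/ping` paths come from the description.

```javascript
// Hypothetical base address of BrowserServer; adjust to the real deployment.
const SERVER_URL = "http://localhost:8080";

// Build the URL for a search request. The "q" parameter name is an assumption.
function buildSearchUrl(query, baseUrl = SERVER_URL) {
  const url = new URL("/search", baseUrl);
  url.searchParams.set("q", query);
  return url.toString();
}

// Poll /ping at a fixed interval and pass each JSON response to onCounts.
// Returns a function that stops the polling.
function startPing(
  onCounts,
  intervalMs = 5000,
  baseUrl = SERVER_URL,
  fetchFn = globalThis.fetch
) {
  const pingUrl = new URL("/ping", baseUrl).toString();
  const tick = async () => {
    try {
      const response = await fetchFn(pingUrl);
      onCounts(await response.json());
    } catch (err) {
      console.error("ping failed:", err);
    }
  };
  tick(); // first request right away, then one per interval
  const timer = setInterval(tick, intervalMs);
  return () => clearInterval(timer);
}
```

In a React component, `startPing` would typically be called inside an effect, with the returned stop function used as the effect's cleanup, while `buildSearchUrl` would be called from the search bar's change handler.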

When a search is performed, the web application displays one card for each registered page returned. Each card shows the information acquired when the webpage was registered with WebScraper:

- Title

- URL

- Registry timestamp

- Description

- Keywords

- Author

- Language

- Locality

- Organization
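As an illustration of how a stored document might map onto a card, the sketch below flattens a `visited` document into the fields listed above. The field names follow the collection layout described later in this README. The `toCard` helper, the use of `lastVisited` as the registry timestamp, the fallback to empty strings, and the sample values are all assumptions.

```javascript
// Flatten a document from the "visited" collection into the card fields.
// Missing meta values fall back to an empty string (an assumption).
function toCard(doc) {
  const meta = doc.meta || {};
  return {
    title: doc.title || "",
    url: doc.url,
    registeredAt: doc.lastVisited, // assumed to be the registry timestamp
    description: meta.description || "",
    keywords: meta.keywords || "",
    author: meta.author || "",
    language: meta.lang || "",
    locality: meta.locality || "",
    organization: meta.organization || "",
  };
}

// Sample document with placeholder values, for illustration only.
const card = toCard({
  title: "Example page",
  url: "https://example.com/",
  lastVisited: "2020-01-01T00:00:00Z",
  meta: { description: "An example", lang: "en" },
});
console.log(card.language); // "en"
```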

By default, BrowserServer limits the results to 100 webpages. Searches use the MongoDB $text query operator, and the results are sorted by textScore. This requires a text index on several fields of the visited collection. Each indexed field has a weight assigned:

- title: 10

- meta

  - keywords: 5

- headers:

  - h1: 3

  - h2: 2

- content: 1
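A text index with these weights, and the corresponding query, could look like the sketch below. It is written against a collection object exposing the Node.js MongoDB driver methods `createIndex` and `find`. How the project actually creates the index is not stated here. The dotted paths `meta.keywords`, `headers.h1`, and `headers.h2`, as well as the helper names, are assumptions.

```javascript
// Fields covered by the text index. Dotted paths are assumed from the list above.
const TEXT_INDEX_KEYS = {
  title: "text",
  "meta.keywords": "text",
  "headers.h1": "text",
  "headers.h2": "text",
  content: "text",
};

// Relative importance of each field when MongoDB computes textScore.
const TEXT_INDEX_WEIGHTS = {
  title: 10,
  "meta.keywords": 5,
  "headers.h1": 3,
  "headers.h2": 2,
  content: 1,
};

// Create the weighted text index on the "visited" collection.
function ensureTextIndex(visited) {
  return visited.createIndex(TEXT_INDEX_KEYS, { weights: TEXT_INDEX_WEIGHTS });
}

// Run a $text search, best matches first, capped at `limit` results.
function searchVisited(visited, query, limit = 100) {
  const score = { $meta: "textScore" };
  return visited
    .find({ $text: { $search: query } }, { projection: { score } })
    .sort({ score })
    .limit(limit)
    .toArray();
}
```

With these weights, a term matched in the title contributes ten times as much to the score as the same term matched in the content, so pages whose titles match tend to appear first.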

Usage

To start the application, run the commands below:

$ yarn

$ yarn start

or

$ npm i

$ npm start

After running the previous commands, the web application can be tested by accessing localhost:3000. Note that BrowserServer must be running for the application to work correctly.

About the project

With BrowserClient, users can search information about registered webpages. The requests made by BrowserClient are resolved by BrowserServer. Webpage information is stored in a MongoDB database using WebScraper. The database has 3 collections:

- visited: Stores information about visited webpages

  - title: Webpage title

  - meta: Information extracted from webpages meta tags

    - keywords

    - description

    - author

    - lang

    - locality

    - organization

  - content: Filtered webpage content

  - headers: Webpage headers

  - lastVisited: Timestamp

  - url: Webpage url

  - baseDomain: Flag indicating if the url corresponds to a base domain

- notVisited: Stores information about the webpages to be visited

  - url: Webpage url

  - depth: Recursivity depth, obtained during the document registry process

  - baseDomain: Flag indicating if the url corresponds to a base domain

- error: Stores information about webpages that were not stored due to an error

  - lastVisited: Timestamp

  - url: Webpage url

  - baseDomain: Flag indicating if the url corresponds to a base domain

  - errorType: Error type

  - message: Error message
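The collection layout above can be written down as a small lookup table, which is handy for sanity-checking documents while debugging. This is only a sketch: the `missingFields` helper and the sample document are hypothetical, and the field types are not specified in this README.

```javascript
// Top-level fields of each collection, as listed above.
const COLLECTION_FIELDS = {
  visited: ["title", "meta", "content", "headers", "lastVisited", "url", "baseDomain"],
  notVisited: ["url", "depth", "baseDomain"],
  error: ["lastVisited", "url", "baseDomain", "errorType", "message"],
};

// Return the expected fields that a document lacks (hypothetical helper).
function missingFields(collectionName, doc) {
  const expected = COLLECTION_FIELDS[collectionName];
  if (!expected) {
    throw new Error(`unknown collection: ${collectionName}`);
  }
  return expected.filter((field) => !(field in doc));
}

// Placeholder document shaped like a notVisited entry, for illustration only.
const pending = { url: "https://example.com/about", depth: 1, baseDomain: false };
console.log(missingFields("notVisited", pending)); // no fields missing
console.log(missingFields("error", pending)); // lastVisited, errorType, message
```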

Setup

To initialize the database, run WebScraper with the -i or --initialize option:

$ python3 src/main.py --initialize

Once the database is initialized, WebScraper can be used to retrieve webpage information. For more details, see the README.md file in the WebScraper repository.

After obtaining and storing information, it's time to start BrowserServer. For more information, visit the BrowserServer repository and check its README.md file.

To perform searches, start the BrowserClient web application as described in the Usage section.