TSIT: A Simple and Versatile Framework for Image-to-Image Translation

This repository will provide the official code for the following paper:

TSIT: A Simple and Versatile Framework for Image-to-Image Translation

Liming Jiang, Changxu Zhang, Mingyang Huang, Chunxiao Liu, Jianping Shi and Chen Change Loy

In ECCV 2020 (Spotlight).

Paper

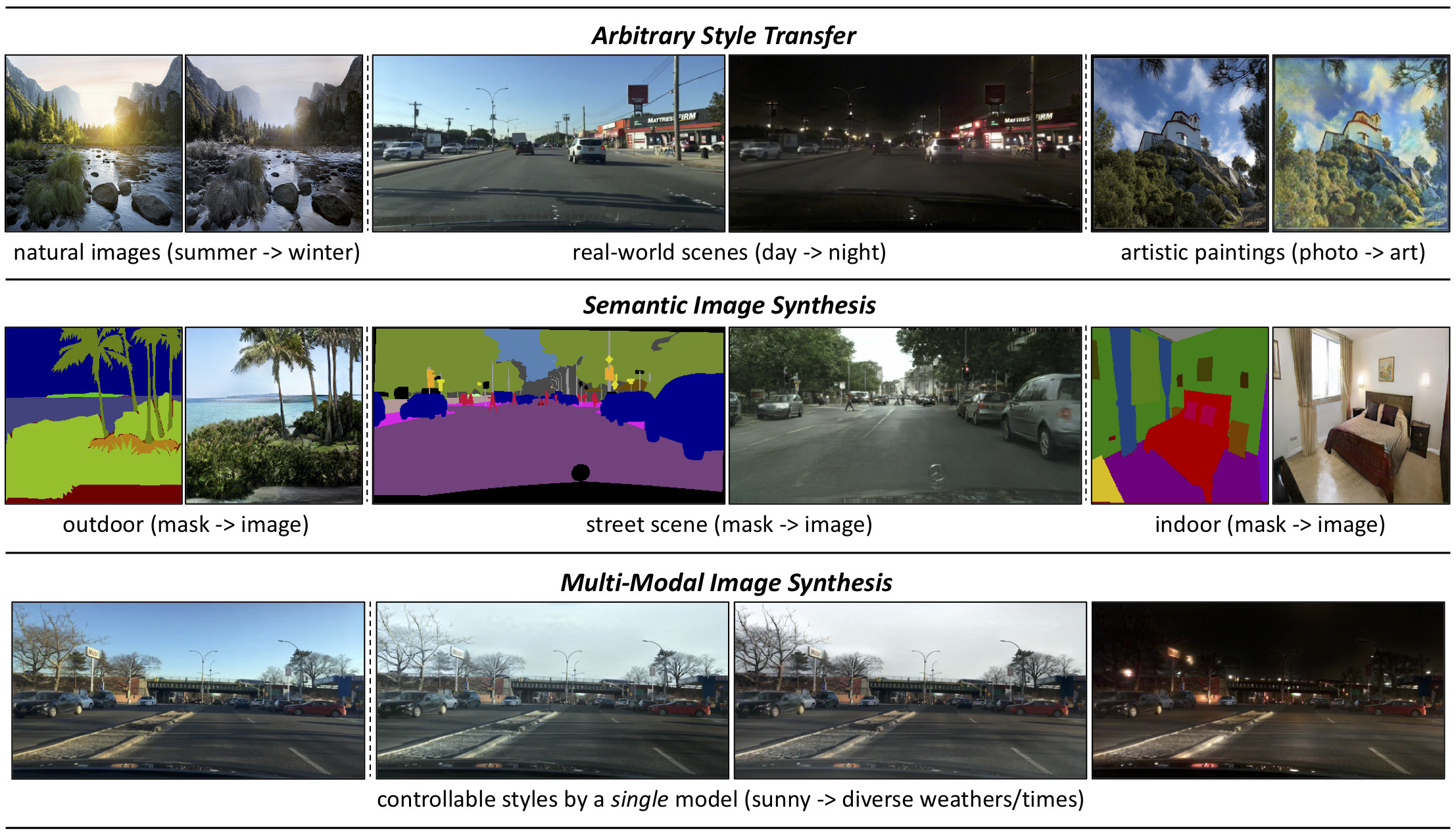

Abstract: We introduce a simple and versatile framework for image-to-image translation. We unearth the importance of normalization layers, and provide a carefully designed two-stream generative model with newly proposed feature transformations in a coarse-to-fine fashion. This allows multi-scale semantic structure information and style representation to be effectively captured and fused by the network, permitting our method to scale to various tasks in both unsupervised and supervised settings. No additional constraints (e.g., cycle consistency) are needed, contributing to a very clean and simple method. Multi-modal image synthesis with arbitrary style control is made possible. A systematic study compares the proposed method with several state-of-the-art task-specific baselines, verifying its effectiveness in both perceptual quality and quantitative evaluations.
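The abstract does not spell out the proposed feature transformations. As a rough illustration only, the sketch below uses standard adaptive instance normalization (AdaIN) in their place. In AdaIN, each channel of a content feature map is normalized and then re-scaled and re-shifted with the per-channel statistics of a style feature map. The sketch applies this at several scales, from coarse to fine. This is not the TSIT implementation. The helpers `adain_fuse` and `downsample` and the random feature shapes are hypothetical.

```python
import numpy as np

# Illustrative only: plain AdaIN stands in for TSIT's own feature
# transformations, which the abstract does not specify.

def adain_fuse(content: np.ndarray, style: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Fuse two (C, H, W) feature maps with AdaIN.

    Each channel of `content` is normalized to zero mean and unit std over
    its spatial dimensions, then re-scaled by the per-channel std of `style`
    and shifted by the per-channel mean of `style`.
    """
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = content.std(axis=(1, 2), keepdims=True) + eps
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = style.std(axis=(1, 2), keepdims=True) + eps
    return s_std * (content - c_mean) / c_std + s_mean


def downsample(x: np.ndarray, factor: int) -> np.ndarray:
    """Average-pool a (C, H, W) map by an integer factor (H, W divisible by factor)."""
    c, h, w = x.shape
    return x.reshape(c, h // factor, factor, w // factor, factor).mean(axis=(2, 4))


# Hypothetical features standing in for the content and style streams.
rng = np.random.default_rng(0)
content = rng.normal(0.0, 1.0, size=(8, 32, 32))
style = rng.normal(3.0, 2.0, size=(8, 32, 32))

# Fuse at coarse-to-fine scales: 4x, 2x, then 1x downsampling.
fused = [adain_fuse(downsample(content, f), downsample(style, f)) for f in (4, 2, 1)]
```

After fusion, each scale keeps the spatial layout of the content features but takes on the per-channel mean and std of the style features at that scale. This matches the general idea of combining structure from one stream with style from another.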

Updates

- [07/2020] The TSIT paper has been accepted to ECCV 2020 (Spotlight).

Code

The code for this work will be made publicly available. Please stay tuned.

Citation

If you find this work useful for your research, please cite our paper:

@inproceedings{jiang2020tsit,
  title={TSIT: A Simple and Versatile Framework for Image-to-Image Translation},
  author={Jiang, Liming and Zhang, Changxu and Huang, Mingyang and Liu, Chunxiao and Shi, Jianping and Loy, Chen Change},
  booktitle={ECCV},
  year={2020}
}

License

Copyright (c) 2020