BBS-Net: RGB-D Salient Object Detection with a Bifurcated Backbone Strategy Network

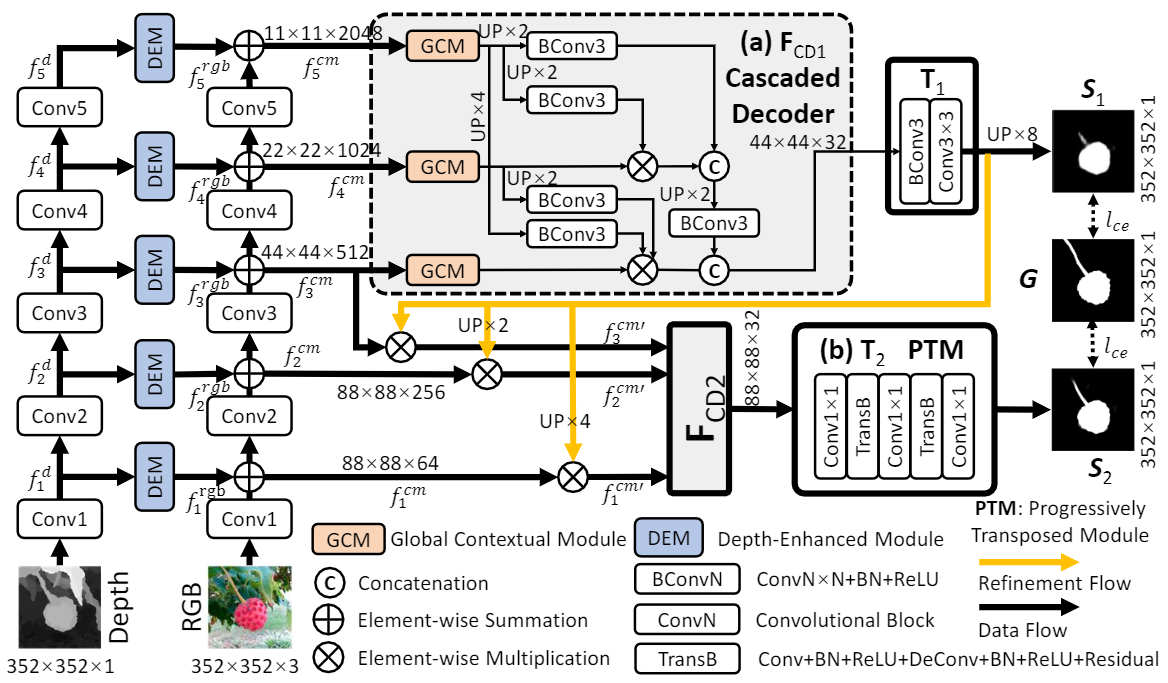

Figure 1: Pipeline of the BBS-Net.

Requirements: Python 3.7, PyTorch 0.4.0+, CUDA 10.0, TensorboardX 2.0, opencv-python

-

Download the raw data from Baidu Pan [code: yiy1] or Google Drive, and the trained model (BBSNet.pth) from here [code: dwcp]. Then place them in the following directory structure:

- BBS_dataset\
  - RGBD_for_train\
  - RGBD_for_test\
  - test_in_train\
- BBSNet
  - models\
  - model_pths\
    - BBSNet.pth
  - ...
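Before training, it can help to confirm that every file landed in the right place. The sketch below is an illustration, not part of the repository: it assumes BBS_dataset and BBSNet sit side by side under one common root (as in the listing) and checks only the entries named there. The helper `missing_paths` and the constant `EXPECTED` are hypothetical names.

```python
from pathlib import Path

# Entries taken from the directory listing above.
# Assumption: BBS_dataset and BBSNet are siblings under one root folder.
EXPECTED = [
    "BBS_dataset/RGBD_for_train",
    "BBS_dataset/RGBD_for_test",
    "BBS_dataset/test_in_train",
    "BBSNet/models",
    "BBSNet/model_pths/BBSNet.pth",
]


def missing_paths(root):
    """Return the expected entries that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths(".")
    if missing:
        print("Missing:", *missing, sep="\n  ")
    else:
        print("Layout looks complete.")
```

Run it from the folder that contains both BBS_dataset and BBSNet; any path it prints still needs to be downloaded or moved.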

Note that the depth maps in the raw data above are not normalized. Training and testing with normalized depth maps will improve performance.
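The note above does not specify which normalization is meant. A minimal sketch, assuming per-map min-max scaling to [0, 1], which is one common choice and not confirmed as the authors' scheme. The function names `minmax_normalize_depth` and `to_uint8` are hypothetical.

```python
import numpy as np


def minmax_normalize_depth(depth, eps=1e-8):
    """Scale one depth map to [0, 1] with per-map min-max normalization.

    Assumption: min-max scaling is used for illustration only; the README
    does not state the exact normalization the authors have in mind.
    """
    depth = np.asarray(depth, dtype=np.float64)
    d_min, d_max = depth.min(), depth.max()
    # eps guards against division by zero for a constant depth map.
    return (depth - d_min) / (d_max - d_min + eps)


def to_uint8(depth01):
    """Convert a [0, 1] map to an 8-bit image, e.g. for saving with cv2.imwrite."""
    return np.clip(np.round(depth01 * 255.0), 0, 255).astype(np.uint8)
```

When loading 16-bit depth PNGs with OpenCV, pass cv2.IMREAD_UNCHANGED to cv2.imread so the raw 16-bit values are not converted to 8 bits before normalization.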

-

Train the BBSNet:

python BBSNet_train.py --batchsize 10 --gpu_id 0

Test the BBSNet:

python BBSNet_test.py --gpu_id 0

The test maps will be saved to './test_maps/'.

-

Evaluate the result maps:

You can evaluate the result maps using either the Python_GPU version or the Matlab version of the evaluation tool.
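For a quick sanity check without the full toolbox, MAE (one of the metrics reported in Table 1) is the mean absolute pixel difference between a predicted map and its ground truth, both in [0, 1]. The sketch below is a simplified stand-in for the official tools, assuming 8-bit maps of equal size and a ground truth binarized at 0.5; the official implementations may differ in details such as resizing or normalization. The names `mae` and `dataset_mae` are hypothetical.

```python
import numpy as np


def mae(pred_u8, gt_u8):
    """Mean absolute error between one saliency map and its ground truth.

    Assumes both are 8-bit (0-255) arrays of the same shape. The
    prediction is scaled to [0, 1]; the ground truth is binarized at 0.5.
    """
    pred = np.asarray(pred_u8, dtype=np.float64) / 255.0
    gt = (np.asarray(gt_u8, dtype=np.float64) / 255.0 > 0.5).astype(np.float64)
    if pred.shape != gt.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {gt.shape}")
    return float(np.mean(np.abs(pred - gt)))


def dataset_mae(pairs):
    """Average the per-image MAE over (prediction, ground truth) pairs."""
    return float(np.mean([mae(p, g) for p, g in pairs]))
```

The pairs could be built by reading each map in './test_maps/' and its ground truth with cv2.imread(path, cv2.IMREAD_GRAYSCALE).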

-

If you need the code for the VGG16 and VGG19 backbones, please send an email to zhaiyingjier@163.com with your name and institution. Note that the code may only be used for research purposes.

Figure 2: Qualitative visual comparison of the proposed model versus 8 state-of-the-art (SOTA) models.

Table 1: Quantitative comparison of models using S-measure, max F-measure, max E-measure, and MAE scores on 7 datasets.
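The max F-measure in Table 1 uses the standard weighted harmonic mean of precision P and recall R, F = (1 + β²)·P·R / (β²·P + R), with β² = 0.3 as is customary in salient object detection. A minimal sketch of one common variant, assuming 8-bit maps: binarize the prediction at every threshold 0..255, average the per-image F curves over the dataset, then take the maximum. This is not the official evaluation code, and toolboxes differ in threshold handling; the function names are hypothetical.

```python
import numpy as np

BETA2 = 0.3  # beta^2 = 0.3, the weighting customary in SOD evaluation


def f_measure_curve(pred_u8, gt_u8, beta2=BETA2, eps=1e-8):
    """F-measure of one 8-bit saliency map at each threshold 0..255.

    Pixels with value >= t count as salient at threshold t; the ground
    truth is binarized at 127 (assumption).
    """
    pred = np.asarray(pred_u8)
    gt = np.asarray(gt_u8) > 127
    f = np.empty(256)
    for t in range(256):
        binary = pred >= t
        tp = np.logical_and(binary, gt).sum()
        precision = tp / (binary.sum() + eps)
        recall = tp / (gt.sum() + eps)
        f[t] = (1 + beta2) * precision * recall / (beta2 * precision + recall + eps)
    return f


def max_f_measure(pairs):
    """Average the per-image F curves over a dataset and take the maximum."""
    curves = [f_measure_curve(p, g) for p, g in pairs]
    return float(np.mean(curves, axis=0).max())
```

Averaging the curves before taking the maximum means a single threshold is chosen for the whole dataset, rather than a separate best threshold per image.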

Table 2: Performance comparison using different backbones.

- Test maps of the above datasets (ResNet50 backbone) can be downloaded from here [code: qgai].

- Test maps of our model with the VGG16 and VGG19 backbones can be downloaded from here [code: zuds].

- Test maps of DUT-RGBD dataset (using the proposed training-test splits of DMRA) can be downloaded from here [code: 3nme ].

Please cite the following paper if you use this repository in your research.

@inproceedings{fan2020bbsnet,
  title={BBS-Net: RGB-D Salient Object Detection with a Bifurcated Backbone Strategy Network},
  author={Fan, Deng-Ping and Zhai, Yingjie and Borji, Ali and Yang, Jufeng and Shao, Ling},
  booktitle={ECCV},
  year={2020}
}

- For more information about BBS-Net, please read the manuscript (PDF) (Chinese version [code: 0r4a]).

- Note that there is an error in Fig. 3(c) of the ECCV version: the second and third BConv3 in the first column of the figure should be BConv5 and BConv7, respectively.

The complete RGB-D SOD benchmark can be found on this page:

http://dpfan.net/d3netbenchmark/

This project is implemented based on the code of 'Cascaded Partial Decoder for Fast and Accurate Salient Object Detection' (CVPR 2019) by Wu et al.