Hopfield Networks are a type of recurrent neural network (RNN) that falls under unsupervised learning. They were first described in detail by Hopfield in his 1982 paper "Neural networks and physical systems with emergent collective computational abilities". Hopfield Networks provide a model of how human memory stores information, which has proven useful in both biology and computer science.

We aim to uncover the mathematics behind Hopfield Networks and present working code, which can be found in this GitHub repository.

- numpy

- matplotlib

- PIL

- pandas (for Modified Hopfield Networks)

- tqdm (for Modified Hopfield Networks)

In this section, we focus on the main use of Hopfield Networks: image restoration. (Solving optimization problems is another major application of Hopfield Networks.) We train the Hopfield Network by feeding it correct images (the ground truth), then attempt to recover those images from the network using only corrupted versions of them as input.

The images used for both training and testing have to be processed before they are valid inputs to a Hopfield Network. To convert an image into a state vector, where image is a PIL.Image.Image object:

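```python
import numpy as np

states = np.asarray(image.convert("1"))  # convert to a black-and-white (boolean) image
states = states * 2 - 1                  # map {False, True} to polar values {-1, 1}
states = states.flatten()                # flatten the image to a single axis
```

Training of the Hopfield Network follows the Hebbian learning rule. The weights of the network are obtained by summing, over every stored pattern $x^{\mu}$, the products of the states of each pair of nodes:

$$w_{ij} = \sum_{\mu} x_i^{\mu} x_j^{\mu} \quad (i \neq j), \qquad w_{ii} = 0$$

The outer product of the state vector with its own transpose is used, as the weights of the edges between two nodes in the Hopfield Network are symmetric, that is, $w_{ij} = w_{ji}$.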

Training can be done simply as such:

```python
weights = np.outer(states, states)  # Hebbian outer product for one pattern
np.fill_diagonal(weights, 0)        # remove self-connections
previous_weights += weights         # accumulate onto the weights learned so far
```

np.fill_diagonal() is a handy and efficient way to zero out the diagonal of the weight matrix, instead of subtracting the identity matrix from it. Under the Hebbian learning rule, old weights should not be forgotten when a new set of weights is learned, so we add the new weights to the old ones to obtain the updated weights. Because every pattern is summed into the same matrix, similar patterns are likely to be confused with one another when we try to retrieve them later.
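As a minimal sketch of the accumulation described above, the snippet below wraps the update in a function that learns several patterns in turn. The function name train_hebbian and the two 4-node patterns are hypothetical, chosen only for illustration; they are not taken from the repository.

```python
import numpy as np

def train_hebbian(patterns):
    """Accumulate Hebbian weights over a list of flattened {-1, 1} patterns."""
    n = len(patterns[0])
    weights = np.zeros((n, n))
    for states in patterns:
        update = np.outer(states, states).astype(float)  # pairwise products s_i * s_j
        np.fill_diagonal(update, 0)                      # no self-connections
        weights += update                                # keep old weights, add new ones
    return weights

# Two hypothetical 4-node patterns, for illustration only
patterns = [np.array([1, -1, 1, -1]), np.array([1, 1, -1, -1])]
weights = train_hebbian(patterns)
print(weights)
```

With these two patterns, the only non-zero weights are the -2 entries on the anti-diagonal, and the matrix is symmetric with a zero diagonal.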

There are two ways in which we can recover the states from Classical Hopfield Networks: synchronous and asynchronous.

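The synchronous update rule is the more straightforward of the two, as all values in the state vector are updated at the same time. Letting $\theta_i$ be the threshold of node $i$, every state $s_i$ is updated as:

$$s_i \leftarrow \begin{cases} +1 & \text{if } \sum_j w_{ij} s_j \geq \theta_i \\ -1 & \text{otherwise} \end{cases}$$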

In code it looks like this, where the values of the states have to be converted back to polar values:

```python
predicted_states = (np.matmul(weights, states) >= threshold) * 2 - 1
```

The asynchronous update rule, on the other hand, takes longer to converge, as it updates values in the state vector one at a time and at random. While it is less efficient, it is often more accurate than its synchronous counterpart when retrieving stored information from the Hopfield Network. Applying the same update formula to one node at a time, the code becomes:

```python
predicted_states = states.copy()
for _ in range(number_of_iterations):
    index = np.random.randint(0, len(weights))  # pick one node at random
    # update only that node from the current states
    predicted_states[index] = (np.matmul(weights[index], predicted_states) >= threshold[index]) * 2 - 1
```

This section can be found in the Classical Hopfield Network.ipynb file.
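To see both update rules side by side, here is a small self-contained sketch. The function names recall_sync and recall_async, the scalar threshold of 0, the fixed random seed, and the 6-node pattern are all assumptions made for this illustration rather than details of the notebook.

```python
import numpy as np

def recall_sync(weights, states, threshold=0):
    """Update every node at once from the current states."""
    return (weights @ states >= threshold) * 2 - 1

def recall_async(weights, states, threshold=0, number_of_iterations=100, seed=0):
    """Update one randomly chosen node per iteration."""
    rng = np.random.default_rng(seed)
    predicted_states = states.copy()
    for _ in range(number_of_iterations):
        index = rng.integers(0, len(weights))
        field = weights[index] @ predicted_states
        predicted_states[index] = 1 if field >= threshold else -1
    return predicted_states

# Store one hypothetical 6-node pattern, then flip its first node
pattern = np.array([1, -1, 1, 1, -1, -1])
weights = np.outer(pattern, pattern)
np.fill_diagonal(weights, 0)

corrupted = pattern.copy()
corrupted[0] = -corrupted[0]

restored_sync = recall_sync(weights, corrupted)
restored_async = recall_async(weights, corrupted)
print(np.array_equal(restored_sync, pattern), np.array_equal(restored_async, pattern))
```

With a single stored pattern and one flipped node, both rules should recover the original; the asynchronous version only needs the flipped node to be drawn at least once during its iterations.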

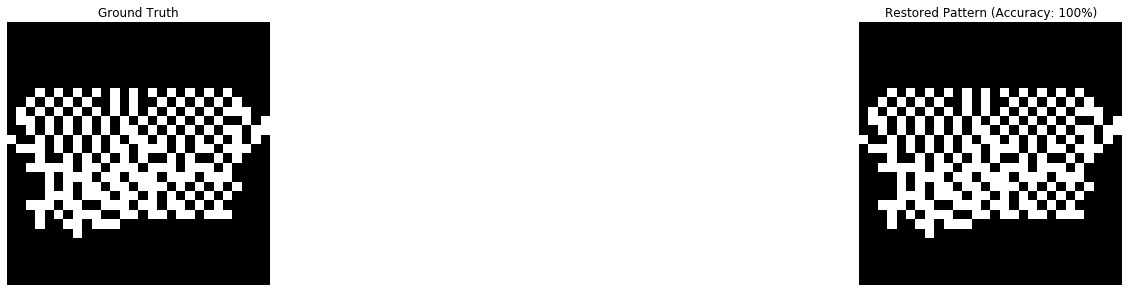

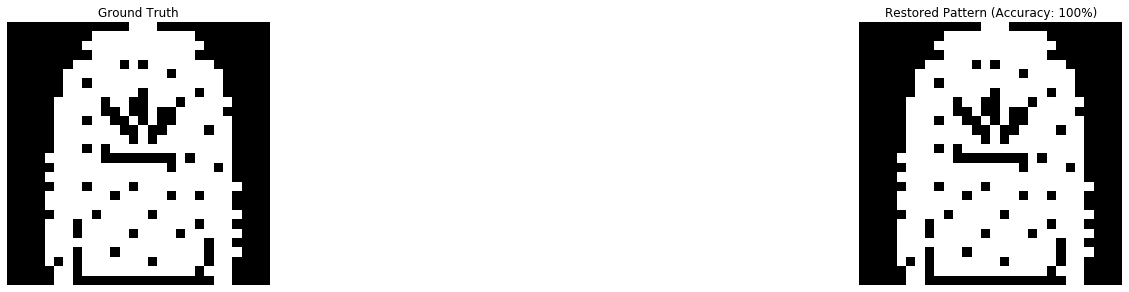

To give an example, we use the 5 images below from the Fashion-MNIST dataset to train our Hopfield Network.

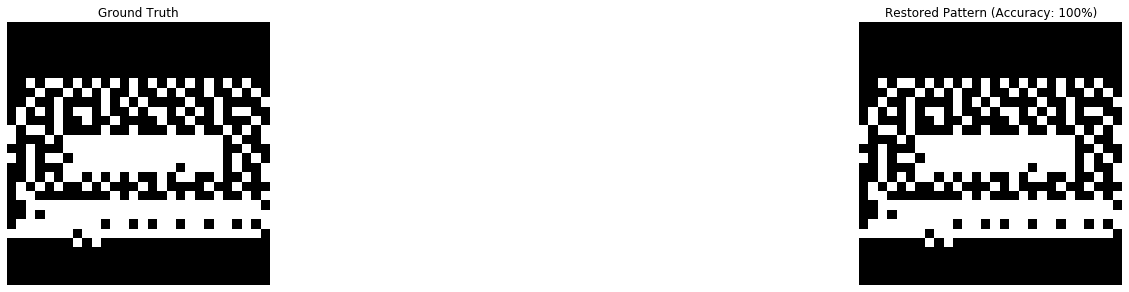

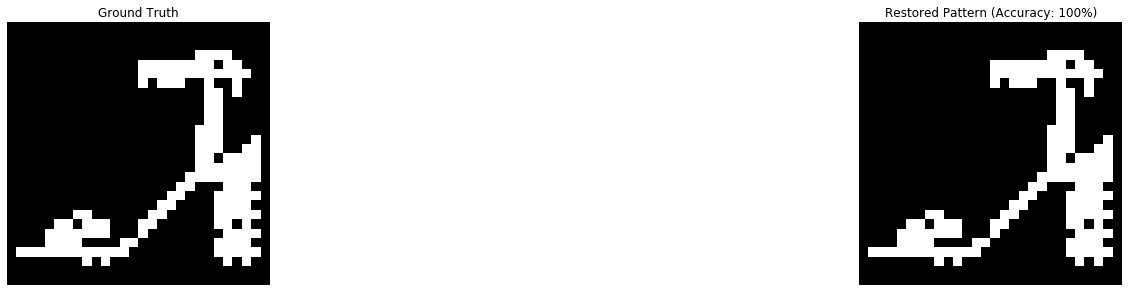

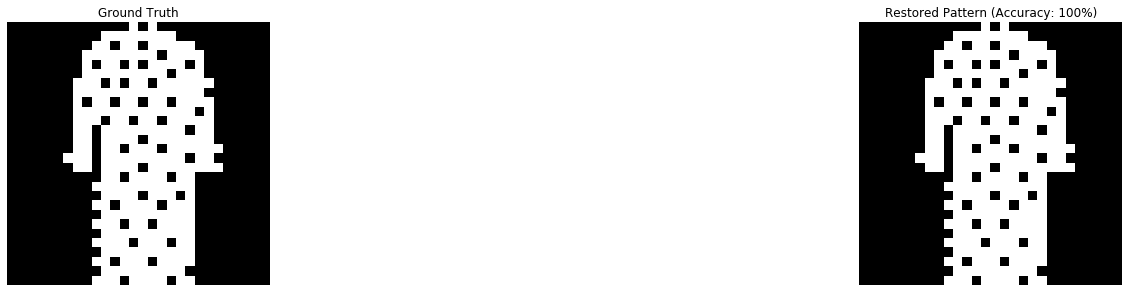

We then attempt to restore all of the images by retrieving them from the Hopfield Network, using the uncorrupted original images as input. On this particular set of data, we managed to restore every image with 100% accuracy using the synchronous update rule, which means that the network was still able to distinguish between the 5 images.

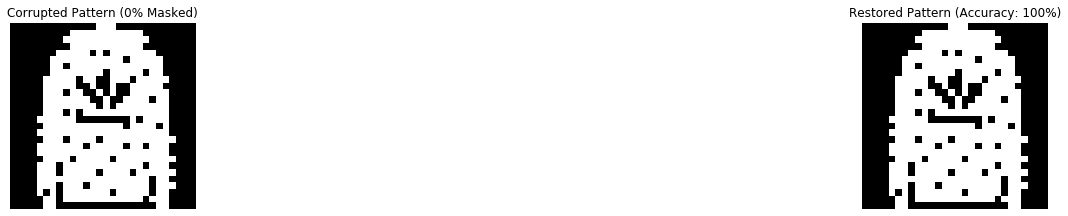

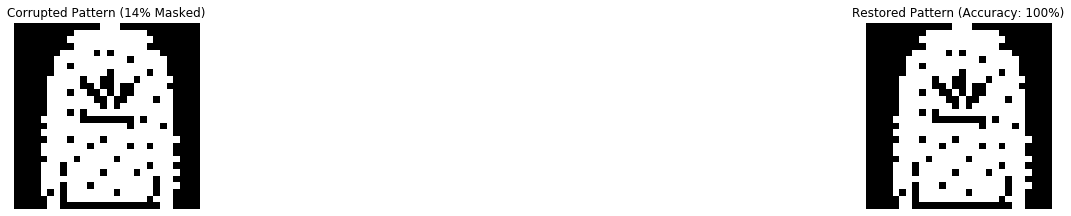

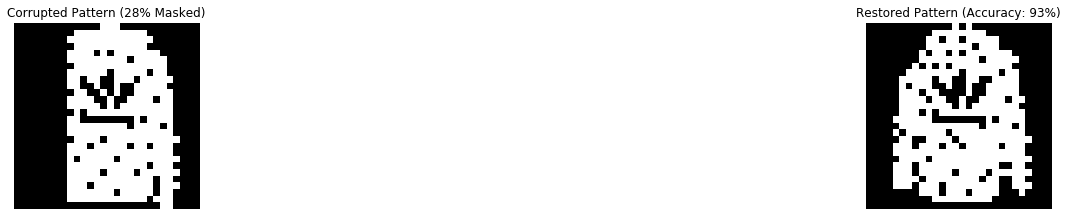

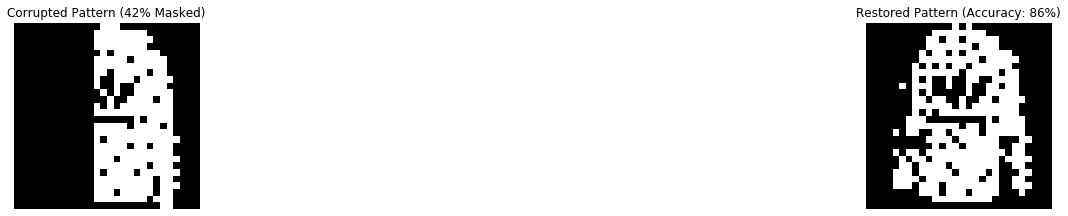

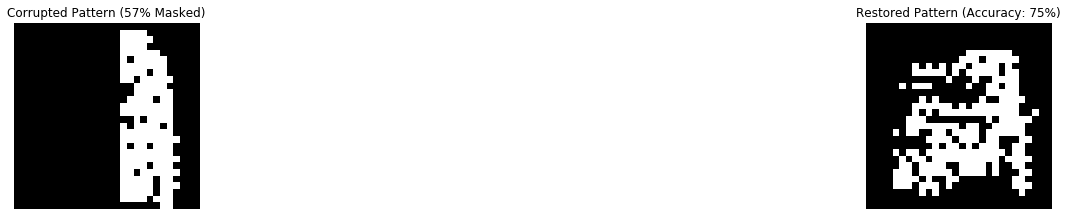

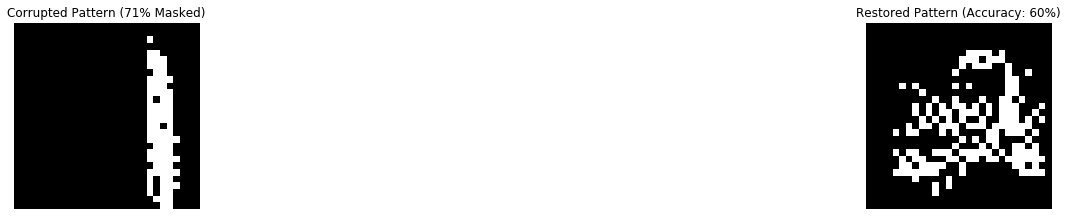

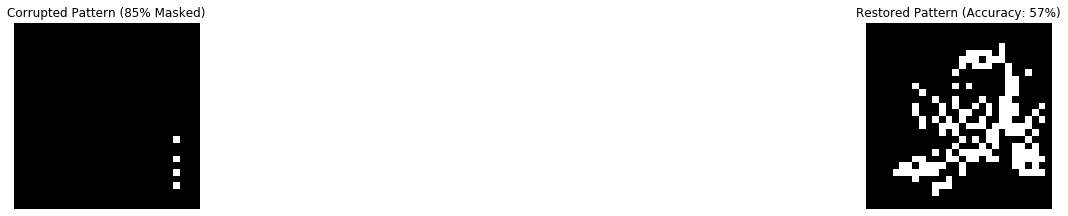

However, what happens if we corrupt the images by masking them? As seen below, the accuracy of the restored image gets worse as we corrupt it further.
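One way to run such an experiment is sketched here. The masking scheme (setting the leading fraction of nodes to -1), the helper names mask_states and accuracy, and the random 64-node patterns are assumptions for illustration; the notebook may mask and score its images differently.

```python
import numpy as np

def mask_states(states, fraction):
    """Set the leading `fraction` of nodes to -1 (a hypothetical masking scheme)."""
    masked = states.copy()
    masked[: int(len(states) * fraction)] = -1
    return masked

def accuracy(predicted_states, true_states):
    """Fraction of nodes that match the ground truth."""
    return np.mean(predicted_states == true_states)

rng = np.random.default_rng(0)
patterns = rng.choice([-1, 1], size=(3, 64))  # three random 64-node patterns

# Hebbian training: sum of outer products with the diagonal zeroed
weights = np.zeros((64, 64))
for states in patterns:
    weights += np.outer(states, states)
np.fill_diagonal(weights, 0)

# Restore the first pattern from increasingly masked versions (synchronous rule)
for fraction in (0.0, 0.25, 0.5, 0.75):
    masked = mask_states(patterns[0], fraction)
    restored = (weights @ masked >= 0) * 2 - 1
    print(fraction, accuracy(restored, patterns[0]))
```

Printing the accuracy for each masking fraction gives a quick numerical counterpart to comparing the restored images by eye.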

Imperfect retrieval of images could be due to nearing or exceeding the storage capacity. To ensure that the retrieval of patterns is free of errors, the number of images stored in the Hopfield Network should not exceed the storage capacity of:

$$C \cong \frac{d}{2\log(d)}$$

where $d$ is the number of nodes in the network. On the other hand, for the retrieval of patterns with a small percentage of errors, the storage capacity can be said to be:

$$C \cong 0.14\,d$$
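To put numbers on these limits, the sketch below evaluates the commonly quoted estimates of d / (2 ln d) for error-free retrieval and 0.14 d for retrieval with a small percentage of errors. It assumes a natural logarithm and that the images keep Fashion-MNIST's native 28 x 28 resolution, so d = 784; the function names are hypothetical.

```python
import math

def capacity_error_free(d):
    """Approximate number of patterns retrievable without errors."""
    return d / (2 * math.log(d))

def capacity_small_errors(d):
    """Approximate number of patterns retrievable with a small error rate."""
    return 0.14 * d

d = 28 * 28  # one node per pixel, assuming 28 x 28 images
print(round(capacity_error_free(d), 1))
print(round(capacity_small_errors(d), 1))
```

Under these assumptions the estimates come to roughly 59 and 110 patterns, so storing 5 images sits far below either limit.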

If the number of stored patterns is far below the storage capacity, then the errors are not caused by a lack of storage capacity. Instead, they are most likely caused by images in the training dataset being strongly correlated with each other. In this regard, Modern Hopfield Networks handle correlated patterns better.
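A quick way to check for such correlation is to compute the normalized overlap between every pair of stored patterns, where values near 1 or -1 indicate strongly correlated patterns and values near 0 indicate nearly orthogonal ones. The helper name pattern_overlaps and the three 8-node patterns are hypothetical, used only to illustrate the idea.

```python
import numpy as np

def pattern_overlaps(patterns):
    """Normalized dot products between all pairs of {-1, 1} patterns."""
    patterns = np.asarray(patterns, dtype=float)
    return patterns @ patterns.T / patterns.shape[1]

patterns = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, 1, 1, 1, -1, -1, -1, 1],   # differs from the first pattern in one node
    [1, -1, 1, -1, 1, -1, 1, -1],  # orthogonal to the first pattern
])
overlaps = pattern_overlaps(patterns)
print(overlaps)
```

Here the first two patterns have an overlap of 0.75, so a network storing both is more likely to confuse them than it is to confuse the first and third, whose overlap is 0.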