This is a PyTorch implementation of our paper.

@inproceedings{wang2023mosaic,

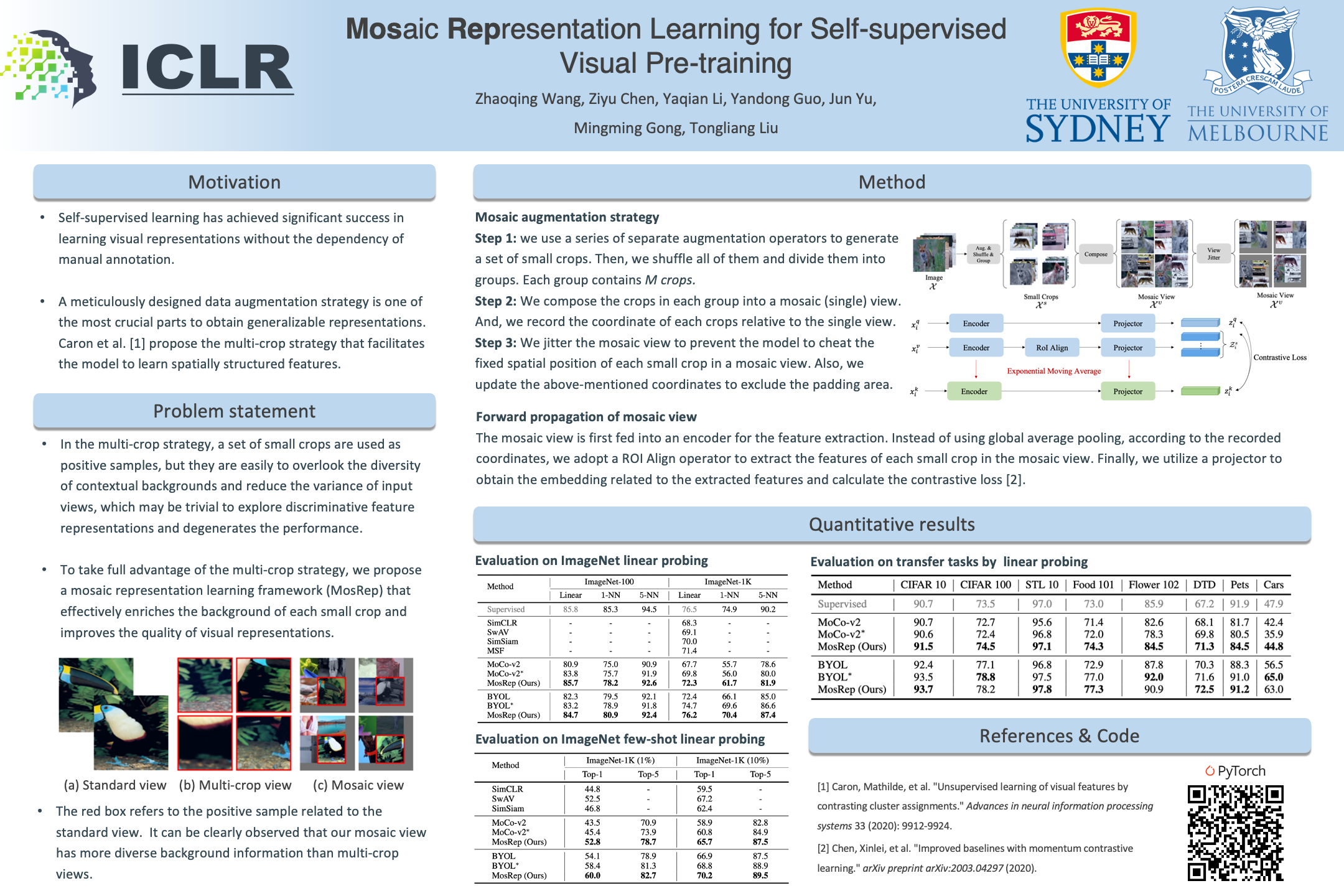

title={Mosaic Representation Learning for Self-supervised Visual Pre-training},

author={Wang, Zhaoqing and Chen, Ziyu and Li, Yaqian and Guo, Yandong and Yu, Jun and Gong, Mingming and Liu, Tongliang},

booktitle={The Eleventh International Conference on Learning Representations},

year={2023}

}

The code requires:

- Python >= 3.7.12
- PyTorch >= 1.10.2
- torchvision >= 0.11.3
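To check an environment against the minimum versions listed above, a small helper like the following could be used. It is a hypothetical sketch, not part of the repository: `parse_version`, `meets_minimum` and `check_environment` are names introduced here, the version parsing is a simplification of PEP 440 (it keeps only the leading numeric release segments and drops local tags such as `+cu113`), and it relies on `importlib.metadata`, which needs Python 3.8 or newer.

```python
import platform
import re
from importlib import metadata  # Python 3.8+

# Minimum versions from the requirements list above.
MINIMUMS = {"python": "3.7.12", "torch": "1.10.2", "torchvision": "0.11.3"}


def parse_version(text):
    """'1.10.2+cu113' -> (1, 10, 2). Simplified, not full PEP 440: drops the
    local tag after '+', keeps the leading digits of each segment, and stops
    at the first segment that does not start with a digit."""
    release = text.split("+", 1)[0]
    parts = []
    for piece in release.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    return parse_version(installed) >= parse_version(minimum)


def check_environment():
    """Map each requirement to (installed version or None, meets minimum?)."""
    report = {}
    for name, minimum in MINIMUMS.items():
        if name == "python":
            installed = platform.python_version()
        else:
            try:
                installed = metadata.version(name)
            except metadata.PackageNotFoundError:
                installed = None
        report[name] = (installed, installed is not None and meets_minimum(installed, minimum))
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_environment().items():
        status = "OK" if ok else "missing or too old"
        print(f"{name:<12} {installed or '-':<16} {status}")
```

Comparing tuples means an installed `1.10` is treated as older than the required `1.10.2`, which errs on the side of flagging the environment.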

Install PyTorch and prepare the ImageNet dataset following the official PyTorch ImageNet training code.

For other dependencies, please run:

```bash
pip install -r requirements.txt
```

We use webdataset to speed up data loading during training. To convert the ImageNet dataset into the webdataset format, please run:

```bash
python imagenet2wds.py -i $IMAGENET_DIR -o $OUTPUT_FOLDER
```
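WebDataset stores a dataset as plain `.tar` shards in which consecutive files sharing a basename (for example `key.jpg` and `key.cls`) form one sample, so shards can be read sequentially instead of opening one small file per image. The sketch below illustrates that layout with the standard-library `tarfile` module only; it is not the `webdataset` library's API, and the `.jpg`/`.cls` extensions and ImageNet-style keys are assumptions, since the exact fields written by `imagenet2wds.py` are not documented here.

```python
import io
import tarfile


def write_toy_shard(samples):
    """Pack [(key, {extension: bytes}), ...] into an in-memory tar shard."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as tar:
        for key, fields in samples:
            for ext, payload in fields.items():
                info = tarfile.TarInfo(name=f"{key}.{ext}")
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


def iter_samples(shard_bytes):
    """Yield (key, {extension: bytes}), grouping consecutive members that
    share a key: the path up to the first '.' of the basename."""
    current_key, current = None, {}
    with tarfile.open(fileobj=io.BytesIO(shard_bytes), mode="r") as tar:
        for member in tar:
            if not member.isfile():
                continue
            directory, _, basename = member.name.rpartition("/")
            stem, _, ext = basename.partition(".")
            key = f"{directory}/{stem}" if directory else stem
            if current_key is not None and key != current_key:
                yield current_key, current
                current = {}
            current_key = key
            current[ext] = tar.extractfile(member).read()
    if current_key is not None:
        yield current_key, current


# Hypothetical keys and fields; imagenet2wds.py may use different ones.
shard = write_toy_shard([
    ("n01440764_10026", {"jpg": b"<jpeg bytes>", "cls": b"0"}),
    ("n01443537_10007", {"jpg": b"<jpeg bytes>", "cls": b"1"}),
])
for key, sample in iter_samples(shard):
    print(key, sorted(sample), int(sample["cls"]))
# n01440764_10026 ['cls', 'jpg'] 0
# n01443537_10007 ['cls', 'jpg'] 1
```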

This implementation only supports multi-GPU DistributedDataParallel training, which is faster and simpler; single-GPU and DataParallel training are not supported.
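Under DistributedDataParallel, one process is launched per GPU, each process reads its own slice of the data, and gradients are averaged across processes after every backward pass. As a generic illustration of the data split, the function below mirrors how `torch.utils.data.DistributedSampler` assigns indices when shuffling is off and `drop_last=False`. `shard_indices` is a hypothetical name, and because this repository streams webdataset shards, its actual pipeline may split data by shard rather than by index.

```python
import math


def shard_indices(dataset_len, num_replicas, rank):
    """Indices read by one DDP process. Mirrors DistributedSampler with
    shuffle=False and drop_last=False: pad the index list by wrapping around
    so its length divides evenly, then take every num_replicas-th index
    starting at rank."""
    if not 0 <= rank < num_replicas:
        raise ValueError("rank must satisfy 0 <= rank < num_replicas")
    indices = list(range(dataset_len))
    total_size = math.ceil(dataset_len / num_replicas) * num_replicas
    padding = total_size - dataset_len
    if padding:
        repeats = math.ceil(padding / dataset_len)
        indices += (indices * repeats)[:padding]
    return indices[rank:total_size:num_replicas]


# 10 samples split over 4 processes: every rank gets 3 indices (samples 0 and 1
# are read twice), so all ranks run the same number of iterations.
for rank in range(4):
    print(rank, shard_indices(10, 4, rank))
# 0 [0, 4, 8]
# 1 [1, 5, 9]
# 2 [2, 6, 0]
# 3 [3, 7, 1]
```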

To perform unsupervised pre-training of a ResNet-50 model on ImageNet on an 8-GPU machine, please run:

```bash
# MoCo-v2
bash pretrain_moco.sh

# MosRep
bash pretrain_mosrep.sh
```
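Assuming, as the method's name suggests, that MosRep composes small crops into a mosaic view, the sketch below shows only that assumed tiling step: four equally sized crops (taken to come from different images) are placed in a 2×2 grid to form one 224×224 view, and `tile_boxes` records where each crop lands so that its features could later be pooled back out of the mosaic's feature map. The function names, the 2×2 layout, and the use of nested lists of single-channel pixel values are illustrative assumptions; the actual augmentation is defined by the code that `pretrain_mosrep.sh` runs.

```python
def make_mosaic(crops, grid=2):
    """Tile grid*grid equally sized crops (images as lists of pixel rows),
    row-major, into one image of grid*H rows by grid*W columns."""
    if len(crops) != grid * grid:
        raise ValueError(f"expected {grid * grid} crops, got {len(crops)}")
    height, width = len(crops[0]), len(crops[0][0])
    for crop in crops:
        if len(crop) != height or any(len(row) != width for row in crop):
            raise ValueError("all crops must have the same size")
    mosaic = []
    for tile_row in range(grid):
        for y in range(height):
            row = []
            for tile_col in range(grid):
                row.extend(crops[tile_row * grid + tile_col][y])
            mosaic.append(row)
    return mosaic


def tile_boxes(crop_size, grid=2):
    """(x0, y0, x1, y1) of each crop inside the mosaic, in the same row-major
    order as make_mosaic; such boxes could be used to pool each crop's
    features back out of the mosaic's feature map."""
    return [
        (col * crop_size, row * crop_size, (col + 1) * crop_size, (row + 1) * crop_size)
        for row in range(grid)
        for col in range(grid)
    ]


# Four 112x112 "crops", each filled with the id of a hypothetical source image.
crops = [[[image_id] * 112 for _ in range(112)] for image_id in range(4)]
mosaic = make_mosaic(crops)
print(len(mosaic), len(mosaic[0]))  # 224 224
print(mosaic[0][0], mosaic[0][112], mosaic[112][0], mosaic[223][223])  # 0 1 2 3
print(tile_boxes(112)[3])  # (112, 112, 224, 224)
```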

To train a supervised linear classifier on top of the frozen features of a pre-trained model, using an 8-GPU machine, please run:

```bash
# MoCo-v2
bash linear_moco.sh

# MosRep
bash linear_mosrep.sh
```
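Linear probing trains only a linear classifier while the pre-trained backbone stays fixed, so accuracy reflects how linearly separable the learned features are. The pure-Python sketch below shows the idea with softmax regression trained by SGD on toy 2-D "frozen features" standing in for the 2048-D pooled outputs of a ResNet-50. It is an illustration only, not the pipeline behind `linear_*.sh`; `train_linear_probe`, `predict`, the toy data and the hyperparameters are all hypothetical.

```python
import math
import random


def train_linear_probe(features, labels, num_classes, epochs=200, lr=0.1, seed=0):
    """Train only a linear (softmax) classifier on fixed features with SGD.
    The features play the role of a frozen backbone's outputs: they are read,
    never updated."""
    rng = random.Random(seed)
    dim = len(features[0])
    weights = [[0.0] * dim for _ in range(num_classes)]
    bias = [0.0] * num_classes
    order = list(range(len(features)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = features[i], labels[i]
            logits = [sum(w * v for w, v in zip(weights[k], x)) + bias[k] for k in range(num_classes)]
            top = max(logits)  # subtract the max for a numerically stable softmax
            exps = [math.exp(z - top) for z in logits]
            total = sum(exps)
            for k in range(num_classes):
                # d(cross-entropy)/d(logit_k) = softmax_k - [k == y]
                grad = exps[k] / total - (1.0 if k == y else 0.0)
                bias[k] -= lr * grad
                for j in range(dim):
                    weights[k][j] -= lr * grad * x[j]
    return weights, bias


def predict(weights, bias, x):
    scores = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return max(range(len(scores)), key=scores.__getitem__)


# Toy 2-D "frozen features" for 3 classes (a ResNet-50 would give 2048-D vectors).
features = [[1.0, 0.1], [0.9, -0.2], [-1.0, 0.2], [-0.8, -0.1], [0.1, 1.0], [-0.2, 0.9]]
labels = [0, 0, 1, 1, 2, 2]
weights, bias = train_linear_probe(features, labels, num_classes=3)
accuracy = sum(predict(weights, bias, x) == y for x, y in zip(features, labels)) / len(labels)
print(f"train accuracy: {accuracy:.2f}")
```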

Our pre-trained ResNet-50 models can be downloaded as follows:

| Method | Input views | Epochs | Linear probing top-1 acc. (%) | Model |
|---|---|---|---|---|
| MoCo v2 | 2x224 | 200 | 67.7 | download |
| MoCo v2 | 2x224 + 4x112 | 200 | 69.8 | download |
| MosRep | 2x224 + 4x112 | 200 | 72.3 | download |
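Reading the "Input views" column as the usual multi-crop notation (two 224×224 views, optionally plus four 112×112 views per image), a quick pixel count puts the settings in proportion. Four 112×112 crops cover exactly one 224×224 area, so `2x224 + 4x112` feeds 1.5× the pixels of `2x224`, and the second MoCo v2 row and MosRep share the same view budget. `pixels_per_image` is a hypothetical helper, and pixel count is only a rough proxy for compute.

```python
def pixels_per_image(views):
    """views: list of (count, side) pairs for square crops, e.g. [(2, 224), (4, 112)]."""
    return sum(count * side * side for count, side in views)


baseline = pixels_per_image([(2, 224)])
multi_crop = pixels_per_image([(2, 224), (4, 112)])
print(baseline, multi_crop, multi_crop / baseline)  # 100352 150528 1.5
```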

This project is under the MIT license. See the LICENSE file for more details.