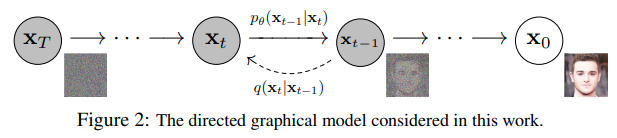

An implementation of Denoising Diffusion Probabilistic Models (DDPM) in PyTorch. DDPM is a newer approach to generative modeling that may have the potential to rival GANs. It uses denoising score matching to estimate the gradient of the data distribution, then Langevin sampling to draw samples from the true distribution.
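To make the Langevin sampling idea concrete, here is a minimal toy sketch in plain Python. It is not this library's sampler: the score of a 1-D Gaussian is written analytically as a stand-in for a score learned by a network, and the names `gaussian_score` and `langevin_sample`, the step size, and the step count are all illustrative assumptions.

```python
import math
import random


def gaussian_score(x, mean=2.0, std=1.0):
    """Score (gradient of the log-density) of a 1-D Gaussian N(mean, std^2).

    Assumption: an analytic score stands in for a learned score network.
    """
    return -(x - mean) / (std ** 2)


def langevin_sample(score_fn, steps=200, step_size=0.1, rng=random):
    """Run one unadjusted Langevin chain and return its final position."""
    x = rng.gauss(0.0, 1.0)  # arbitrary starting point
    for _ in range(steps):
        noise = rng.gauss(0.0, 1.0)
        # Drift along the score, then add Gaussian noise scaled by sqrt(step_size).
        x = x + 0.5 * step_size * score_fn(x) + math.sqrt(step_size) * noise
    return x


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [langevin_sample(gaussian_score, rng=rng) for _ in range(1000)]
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
print(f"mean ~ {sample_mean:.2f}, variance ~ {sample_var:.2f}")
```

With enough steps the chain forgets its starting point, so the sample mean and variance should land near those of the target Gaussian. Because the chain is unadjusted, a small bias that depends on the step size remains.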

This implementation was transcribed from the official TensorFlow version.

YouTube AI Educators - Yannic Kilcher | AI Coffeebreak with Letitia | Outlier

Annotated code by Research Scientists / Engineers from 🤗 Hugging Face

Update: Turns out none of the technicalities really matters at all | "Cold Diffusion" paper

```bash
$ pip install denoising_diffusion_pytorch
```

```python
import torch
from denoising_diffusion_pytorch import Unet, GaussianDiffusion

model = Unet(
    dim = 64,
    dim_mults = (1, 2, 4, 8)
)

diffusion = GaussianDiffusion(
    model,
    image_size = 128,
    timesteps = 1000,   # number of steps
    loss_type = 'l1'    # L1 or L2
)

# torch.rand draws from [0, 1), matching the expected normalization
training_images = torch.rand(8, 3, 128, 128) # images are normalized from 0 to 1
loss = diffusion(training_images)
loss.backward()
# after a lot of training

sampled_images = diffusion.sample(batch_size = 4)
sampled_images.shape # (4, 3, 128, 128)
```

Or, if you simply want to pass in a folder name and the desired image dimensions, you can use the `Trainer` class to easily train a model.

```python
from denoising_diffusion_pytorch import Unet, GaussianDiffusion
from denoising_diffusion_pytorch.utils import Trainer

model = Unet(
    dim=64,
    dim_mults=(1, 2, 4, 8)
).cuda()

diffusion = GaussianDiffusion(
    model,
    image_size=128,
    timesteps=1000,           # number of steps
    sampling_timesteps=250,   # number of sampling timesteps (using ddim for faster inference [see citation for ddim paper])
    loss_type='l1'            # L1 or L2
).cuda()

trainer = Trainer(
    diffusion,
    'path/to/your/images',
    train_batch_size=32,
    train_lr=8e-5,
    train_num_steps=700000,         # total training steps
    gradient_accumulate_every=2,    # gradient accumulation steps
    ema_decay=0.995,                # exponential moving average decay
    amp=True                        # turn on mixed precision
)

trainer.train()
```

Samples and model checkpoints will be logged to `./results` periodically.

The `Trainer` class is now equipped with 🤗 Accelerator. You can easily do multi-GPU training in two steps using their `accelerate` CLI.

At the project root directory, where the training script is, run

```bash
$ accelerate config
```

Then, in the same directory

```bash
$ accelerate launch train.py
```

```bibtex
@inproceedings{NEURIPS2020_4c5bcfec,
    author    = {Ho, Jonathan and Jain, Ajay and Abbeel, Pieter},
    booktitle = {Advances in Neural Information Processing Systems},
    editor    = {H. Larochelle and M. Ranzato and R. Hadsell and M.F. Balcan and H. Lin},
    pages     = {6840--6851},
    publisher = {Curran Associates, Inc.},
    title     = {Denoising Diffusion Probabilistic Models},
    url       = {https://proceedings.neurips.cc/paper/2020/file/4c5bcfec8584af0d967f1ab10179ca4b-Paper.pdf},
    volume    = {33},
    year      = {2020}
}
```

```bibtex
@InProceedings{pmlr-v139-nichol21a,
    title     = {Improved Denoising Diffusion Probabilistic Models},
    author    = {Nichol, Alexander Quinn and Dhariwal, Prafulla},
    booktitle = {Proceedings of the 38th International Conference on Machine Learning},
    pages     = {8162--8171},
    year      = {2021},
    editor    = {Meila, Marina and Zhang, Tong},
    volume    = {139},
    series    = {Proceedings of Machine Learning Research},
    month     = {18--24 Jul},
    publisher = {PMLR},
    pdf       = {http://proceedings.mlr.press/v139/nichol21a/nichol21a.pdf},
    url       = {https://proceedings.mlr.press/v139/nichol21a.html},
}
```

```bibtex
@inproceedings{kingma2021on,
    title     = {On Density Estimation with Diffusion Models},
    author    = {Diederik P Kingma and Tim Salimans and Ben Poole and Jonathan Ho},
    booktitle = {Advances in Neural Information Processing Systems},
    editor    = {A. Beygelzimer and Y. Dauphin and P. Liang and J. Wortman Vaughan},
    year      = {2021},
    url       = {https://openreview.net/forum?id=2LdBqxc1Yv}
}
```

```bibtex
@article{Choi2022PerceptionPT,
    title   = {Perception Prioritized Training of Diffusion Models},
    author  = {Jooyoung Choi and Jungbeom Lee and Chaehun Shin and Sungwon Kim and Hyunwoo J. Kim and Sung-Hoon Yoon},
    journal = {ArXiv},
    year    = {2022},
    volume  = {abs/2204.00227}
}
```

```bibtex
@article{Karras2022ElucidatingTD,
    title   = {Elucidating the Design Space of Diffusion-Based Generative Models},
    author  = {Tero Karras and Miika Aittala and Timo Aila and Samuli Laine},
    journal = {ArXiv},
    year    = {2022},
    volume  = {abs/2206.00364}
}
```

```bibtex
@article{Song2021DenoisingDI,
    title   = {Denoising Diffusion Implicit Models},
    author  = {Jiaming Song and Chenlin Meng and Stefano Ermon},
    journal = {ArXiv},
    year    = {2021},
    volume  = {abs/2010.02502}
}
```

```bibtex
@misc{chen2022analog,
    title         = {Analog Bits: Generating Discrete Data using Diffusion Models with Self-Conditioning},
    author        = {Ting Chen and Ruixiang Zhang and Geoffrey Hinton},
    year          = {2022},
    eprint        = {2208.04202},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}
```

```bibtex
@article{Qiao2019WeightS,
    title   = {Weight Standardization},
    author  = {Siyuan Qiao and Huiyu Wang and Chenxi Liu and Wei Shen and Alan Loddon Yuille},
    journal = {ArXiv},
    year    = {2019},
    volume  = {abs/1903.10520}
}
```