Artificial Intelligence and Machine Learning have already stirred up excitement across human society. AI is maturing fast and may approach human-level performance sooner than expected. The key to artificial intelligence has always been representation. Every major player is working on artificial intelligence; for now it is only the beginning... But the day is not far off when artificial intelligence will become a necessity and a dependency for every human being.

If you are also fascinated by the buzz around ML/AI in the fourth industrial revolution, then congratulations: this site will surely help you!

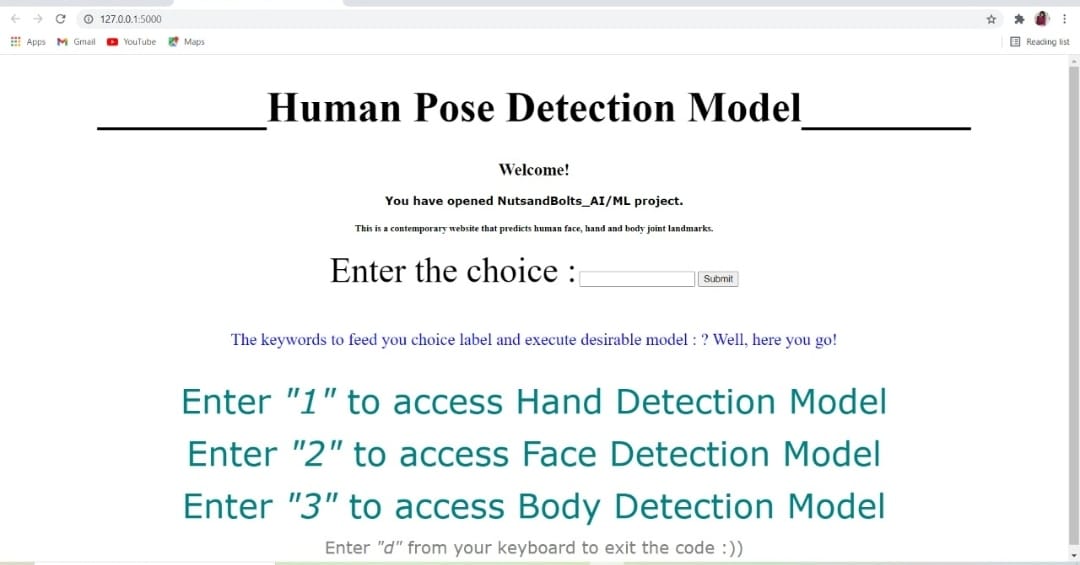

NutsandBolts_AI/ML is a deep learning project, based on artificial neural networks, designed to detect and track human body joint landmarks with a confidence threshold of 0.5. The project was developed in the PyCharm IDE, and the model is served as a REST app using Flask. The complete code is written in Python and HTML, using the third-party packages opencv-python and mediapipe.

Objective: to understand how a deep learning model works, by analogy with the biological nervous system.

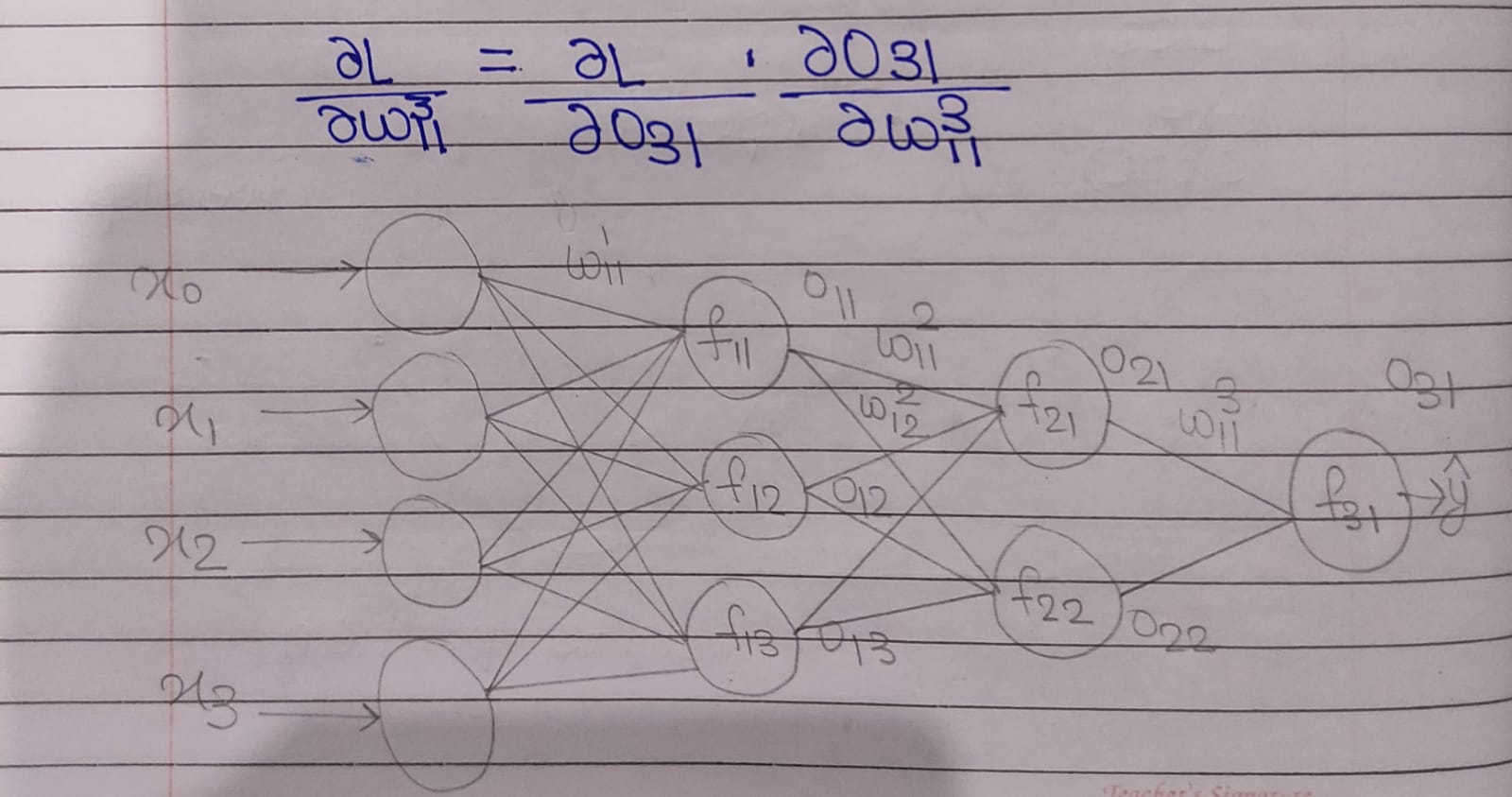

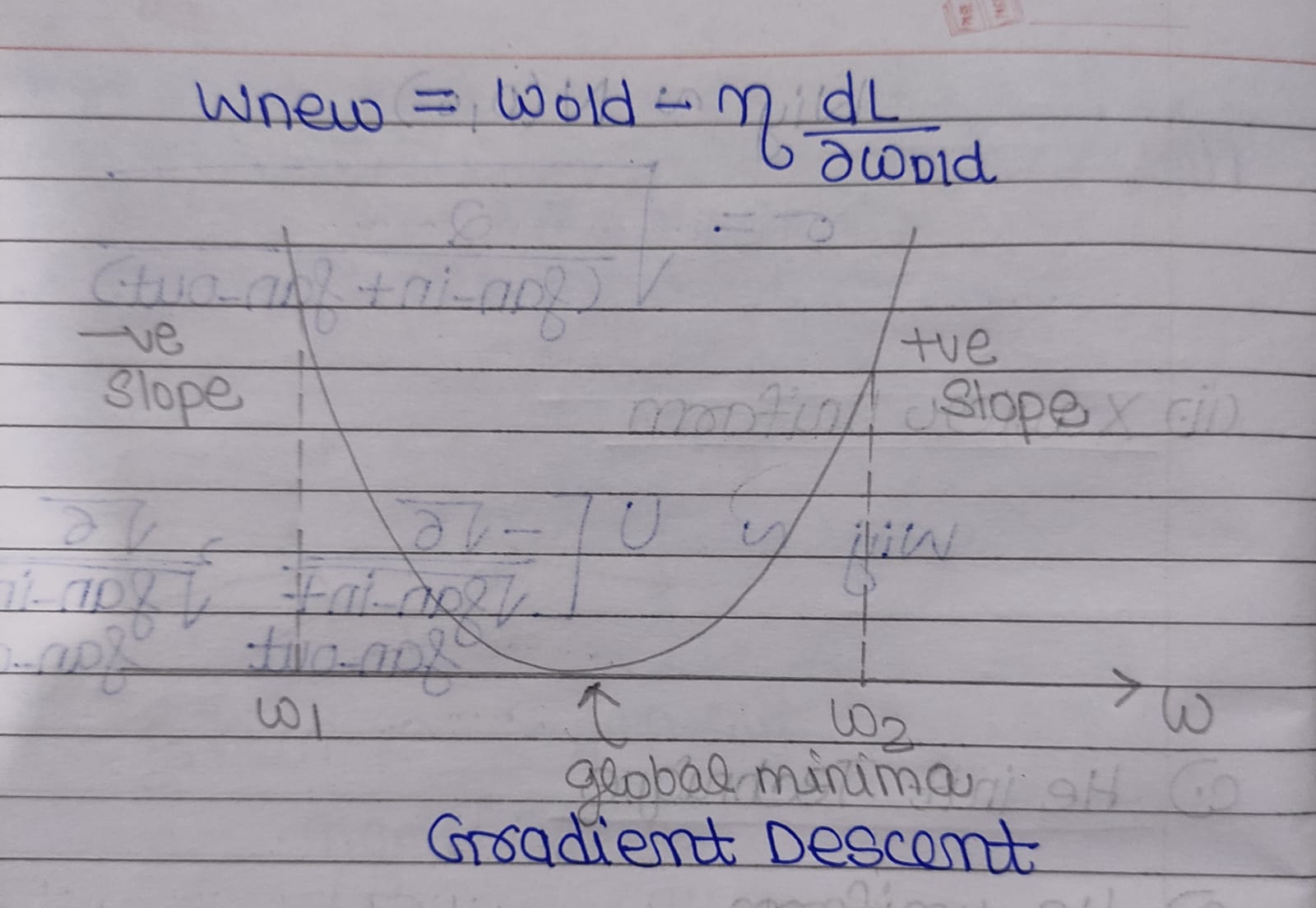

ARTIFICIAL_NEURAL_NETWORKS: Artificial neural networks (ANNs) are often called the heart of deep learning algorithms. An ANN is a stack of node layers. The first layer is called the input layer, the final layer is called the output layer, and all the layers in between are called hidden layers. The number of hidden layers determines the depth of the network. Each layer is connected to the previous one through parameters called weights. Several techniques exist for initializing these weights, and they typically depend on the number of inputs to the respective layer. Each value fed to a node is multiplied by its weight, and the products are summed. An activation function is then applied to the sum to map it into a given range. Common activation functions include ReLU, leaky ReLU and sigmoid (Adam and Adagrad, by contrast, are optimizers used to adjust the weights during training). The value produced by the output layer is called "y hat" and is used to calculate the loss. This complete pass is called forward propagation.
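As a rough illustration of the forward pass described above, here is a minimal pure-Python sketch. The layer sizes, the weight values and the helper names `sigmoid`, `dense` and `forward` are assumptions made for this example; they are not taken from the project code. Biases are included but set to zero.

```python
import math

def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One layer: for each node, weighted sum of inputs plus bias, then activation."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def forward(x, layers):
    """Pass x through every (weights, biases, activation) layer in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical network: 2 inputs, one hidden layer with 2 nodes, 1 output node.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0], sigmoid),  # hidden layer
    ([[1.0, -1.0]], [0.0], sigmoid),                      # output layer
]

y_hat = forward([1.0, 1.0], layers)[0]
print(y_hat)  # a value between 0 and 1
```

Stacking more entries in `layers` makes the network deeper without changing `forward`.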

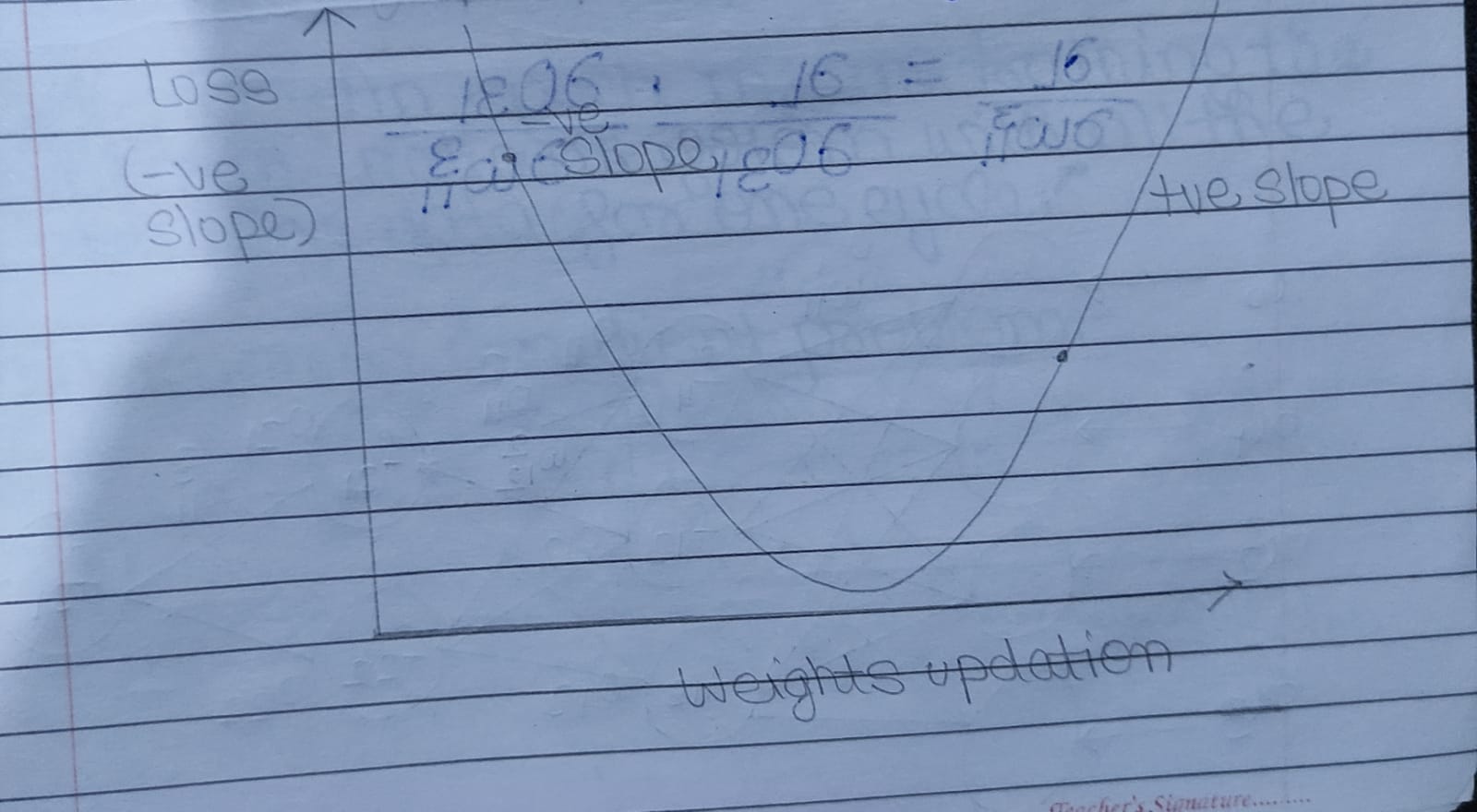

Once the loss has been computed, an optimizer (for example Adam or Adagrad) adjusts the weights to reduce the value of the loss function. This process of adjusting the weights so that the predicted value y hat moves closer to the actual value y is called backward propagation. One complete forward and backward pass over the entire training set is called an epoch. The network runs several epochs to find the best possible values for the weights.
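The loop below is a minimal sketch of that training cycle for a single weight, assuming a mean squared error loss and plain gradient descent as the optimizer. The toy data, the learning rate and the function name `train` are illustrative assumptions only.

```python
# Toy data generated from y = 2 * x (assumed for illustration only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def train(xs, ys, epochs=200, learning_rate=0.01):
    """Fit y_hat = w * x with gradient descent on the mean squared error."""
    w = 0.0  # initial weight
    losses = []
    for _ in range(epochs):
        # Forward propagation: predictions and loss over the whole training set.
        y_hats = [w * x for x in xs]
        loss = sum((y_hat - y) ** 2 for y_hat, y in zip(y_hats, ys)) / len(xs)
        losses.append(loss)
        # Backward propagation: gradient of the loss with respect to w.
        grad = sum(2 * (y_hat - y) * x for y_hat, y, x in zip(y_hats, ys, xs)) / len(xs)
        # Optimizer step: move the weight against the gradient.
        w -= learning_rate * grad
    return w, losses

w, losses = train(xs, ys)
print(w, losses[0], losses[-1])
```

Each pass through the `for` loop is one epoch, because the whole training set is used for every update. The loss shrinks as `w` approaches 2.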

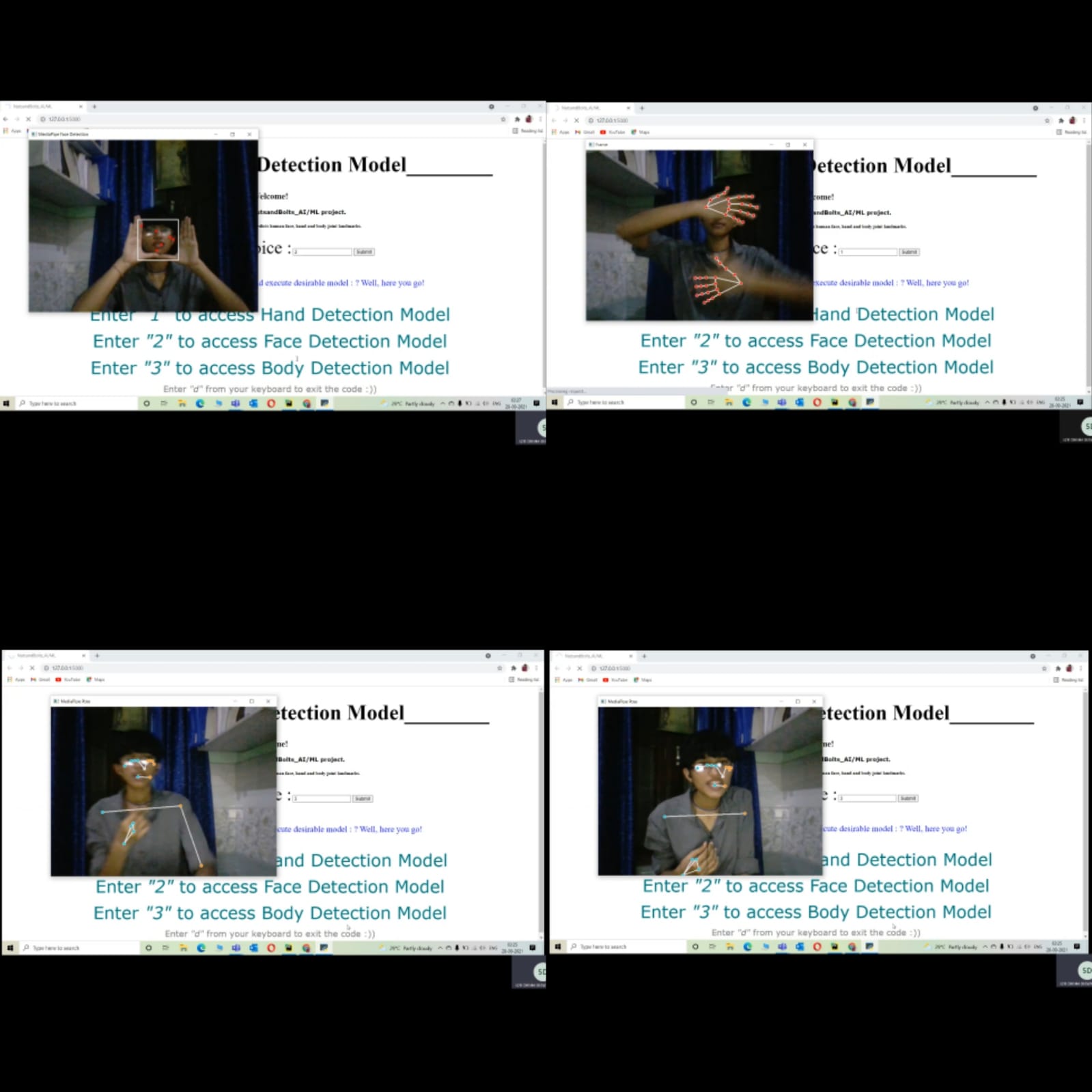

The third-party Python packages mediapipe and opencv-python are used to write the code for this AI and neural-network-based model. MediaPipe is a cross-platform framework for building multimodal applied ML pipelines. MediaPipe solutions are ready-made, pre-trained ANNs that can be deployed and run in a few lines of code. OpenCV is a library that provides real-time computer vision and supports model execution for ML- and AI-based projects.

The model is a menu-driven function that takes user input to decide which landmarks to detect. The input is accepted from a localhost website, via the web page pass.html, and saved in the form of a Python dictionary. There are only three valid user inputs; any other input makes the model return an error. Enter "1" for hand prediction; by default the model can track two hands at a time. To predict the face box and landmarks, enter "2" and press Submit. Similarly, "3" runs pose prediction. The project takes live input from the webcam through OpenCV and generates live output. To stop the code, press the "d" key; you are then redirected to "/results" with a successful execution response.
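The flow described above could be sketched roughly as follows. This is not the project's actual code. The names `MENU`, `resolve_choice`, `is_quit_key` and `run_hands` are hypothetical, the Flask routes are left out, and the webcam loop assumes MediaPipe's legacy `mp.solutions` API together with OpenCV.

```python
# Hypothetical mapping from the submitted menu value to a detection mode.
MENU = {"1": "hands", "2": "face", "3": "pose"}

def resolve_choice(choice):
    """Return the detection mode for a menu input, or raise ValueError."""
    mode = MENU.get(str(choice).strip())
    if mode is None:
        raise ValueError(f"invalid input {choice!r}: expected 1, 2 or 3")
    return mode

def is_quit_key(key_code):
    """True when the key code returned by cv2.waitKey corresponds to 'd'."""
    return key_code & 0xFF == ord("d")

def run_hands(camera_index=0):
    """Track up to two hands on the webcam feed until 'd' is pressed."""
    # Imported lazily so the helpers above work without these packages.
    import cv2
    import mediapipe as mp

    mp_hands = mp.solutions.hands
    mp_draw = mp.solutions.drawing_utils
    capture = cv2.VideoCapture(camera_index)
    try:
        with mp_hands.Hands(max_num_hands=2, min_detection_confidence=0.5) as hands:
            while capture.isOpened():
                ok, frame = capture.read()
                if not ok:
                    break
                # MediaPipe expects RGB frames; OpenCV delivers BGR.
                results = hands.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
                for landmarks in results.multi_hand_landmarks or []:
                    mp_draw.draw_landmarks(frame, landmarks, mp_hands.HAND_CONNECTIONS)
                cv2.imshow("landmarks", frame)
                if is_quit_key(cv2.waitKey(1)):
                    break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

In this sketch a web handler would call `resolve_choice` on the submitted value and start `run_hands()` when the result is `"hands"`. Face and pose modes would follow the same pattern with the corresponding MediaPipe solutions.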

We hope this project helps you develop a better understanding of the analogy between biological neural networks and artificial neural networks.

Q1.) What is Deep Learning?

Deep learning is a specialised field of machine learning that relies on training deep ANNs on large data sets such as images or text. ANNs are information processing models inspired by the human brain. Artificial neural networks, also called simulated neural networks, are the heart of deep learning algorithms. They are used to solve problems in image processing, speech recognition and natural language processing. An ANN works by mathematically mimicking the human brain, connecting multiple artificial neurons in a multi-layered fashion. The more hidden layers are added to the network, the deeper it gets.

Q2.) What differentiates DL from ML?

ML process: a.) Select the model to train. b.) Perform feature extraction manually.

DL process: a.) Select the architecture of the network. b.) Features are extracted automatically by feeding in the training data along with the target class.

Q3.) How do neural networks work?

Each layer of a neural network is assigned weights, and the input to the next layer depends directly on those weights.

$$y = \sum_i W_i X_i$$

Each hidden layer then applies an activation function Z that maps this value into the required range.

Q4.) What are the activation functions?

An activation function decides whether a neuron should be activated, based on the weighted sum of its inputs plus a bias. The purpose of the activation function is to introduce non-linearity into the output of the neuron.

Q5.) What is a bias? A bias vector is an additional set of weights in a neural network that requires no input; it therefore corresponds to the output of a layer, before activation, when all of its inputs are zero.
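A small worked example may make the role of the bias concrete. The weights, inputs and the helper name `neuron` below are made up for illustration.

```python
def neuron(inputs, weights, bias):
    """Pre-activation value of one neuron: weighted sum of inputs plus bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

weights = [0.5, -1.0, 2.0]
bias = 0.25

with_input = neuron([1.0, 2.0, 3.0], weights, bias)   # 0.5 - 2.0 + 6.0 + 0.25
zero_input = neuron([0.0, 0.0, 0.0], weights, bias)   # only the bias remains

print(with_input, zero_input)
```

With all inputs at zero the weighted sum vanishes, so the neuron's pre-activation value equals the bias.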

Q6.) What is forward propagation? It is the pass from the input layer to the output layer of the neural network, in which each layer computes its output from the output of the previous layer.

Q7.) What is backward propagation? It is the pass from right to left, that is from the output layer back to the input layer, in which the loss is propagated backwards to adjust the weights.

Q8.) What is an epoch? An epoch is one complete training cycle of the neural network over all the training data.
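When the training data is split into mini-batches, one epoch consists of several weight updates (iterations). The sketch below assumes 10 samples and a batch size of 4; the helper names `iterations_per_epoch` and `batches` are hypothetical.

```python
import math

def iterations_per_epoch(num_samples, batch_size):
    """Number of mini-batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)

def batches(data, batch_size):
    """Yield consecutive mini-batches that together cover the data once."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]

data = list(range(10))  # 10 hypothetical training samples

for epoch in range(2):  # 2 epochs = 2 full passes over the data
    seen = [x for batch in batches(data, batch_size=4) for x in batch]
    print(epoch, len(seen), iterations_per_epoch(len(data), 4))
```

Every sample is visited exactly once per epoch, regardless of how the data is batched.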

# Types of Activation Functions

1.) Sigmoid Function

The sigmoid function maps any real value into the range 0 to 1, and it is used in logistic regression to predict probabilities.

Advantages of the sigmoid function: a.) Output is normalized between 0 and 1. b.) Its smooth curve prevents jumps in output values. c.) It is differentiable everywhere, with a simple derivative.
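A minimal sketch of the sigmoid and its derivative in plain Python. It is not hardened against overflow for very large negative inputs.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: maps any real x into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x):
    """Derivative of the sigmoid, expressed through the sigmoid itself."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (-4.0, 0.0, 4.0):
    print(x, sigmoid(x), sigmoid_derivative(x))
```

The derivative peaks at `x = 0` and shrinks towards zero for large positive or negative inputs.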

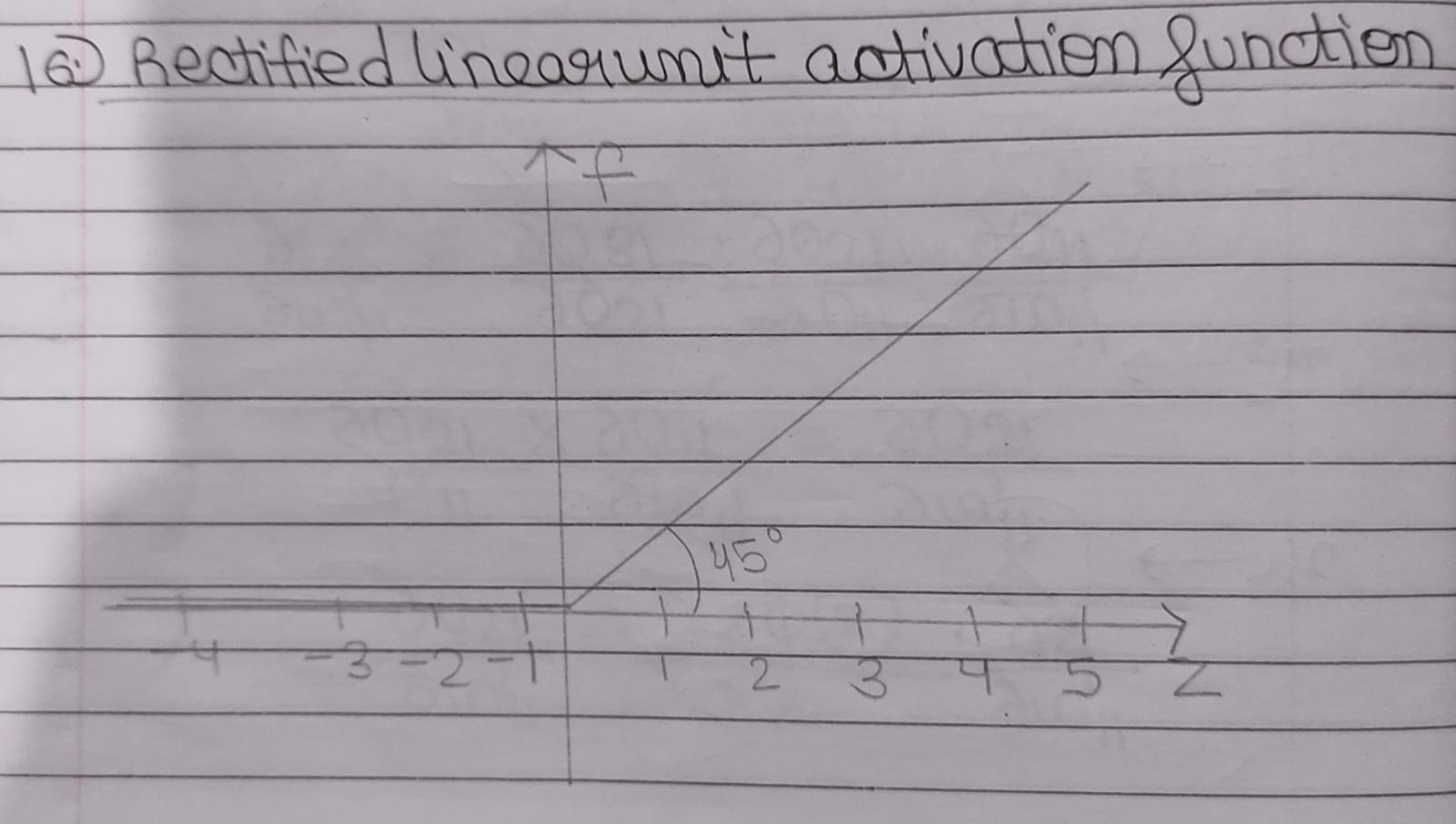

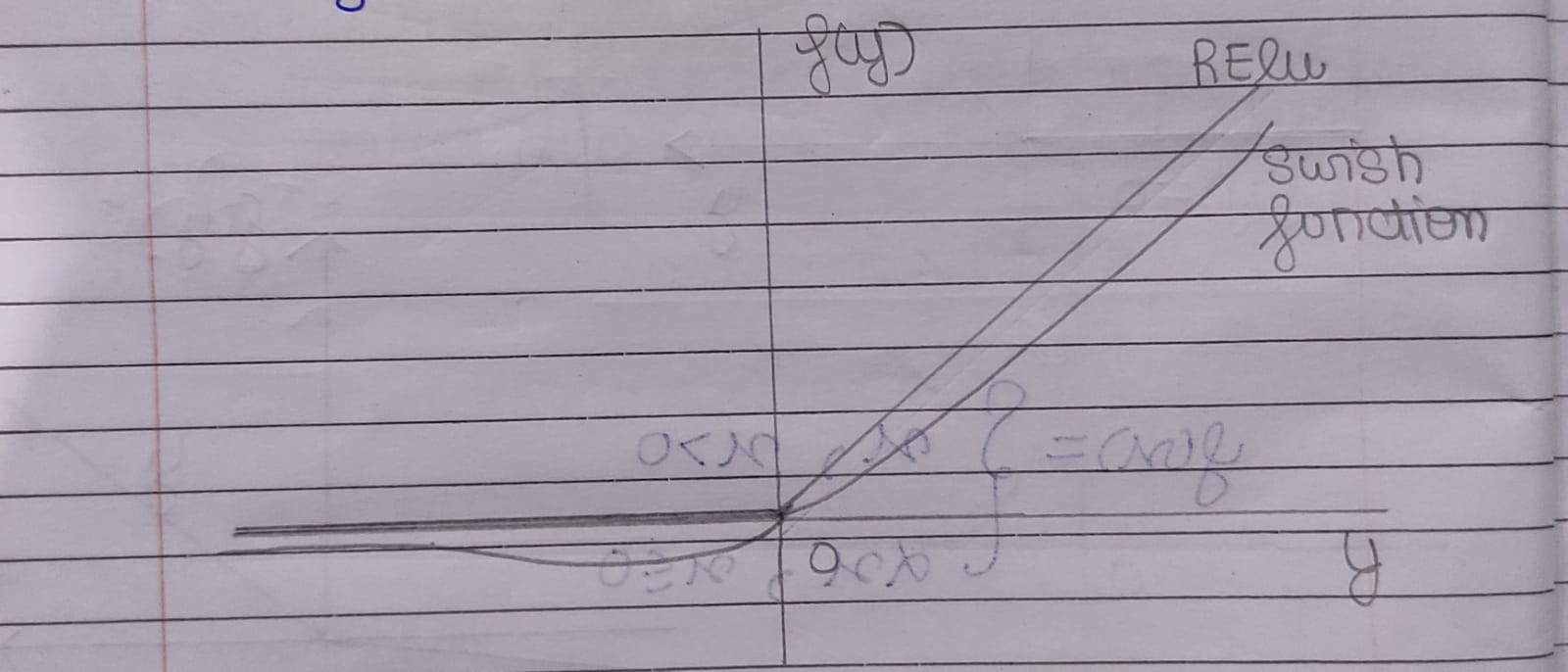

2.) ReLU Activation Function

ReLU (rectified linear unit) returns the input unchanged when it is positive and 0 otherwise, so for positive inputs its graph lies in the first quadrant.
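In code, ReLU reduces to a single comparison. The function names below are chosen for this sketch.

```python
def relu(x):
    """Rectified linear unit: x for positive inputs, 0 otherwise."""
    return max(0.0, x)

def relu_derivative(x):
    """Slope of ReLU: 1 for positive inputs, 0 otherwise (0 chosen at x == 0)."""
    return 1.0 if x > 0 else 0.0

print([relu(x) for x in (-3.0, 0.0, 2.5)])
```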

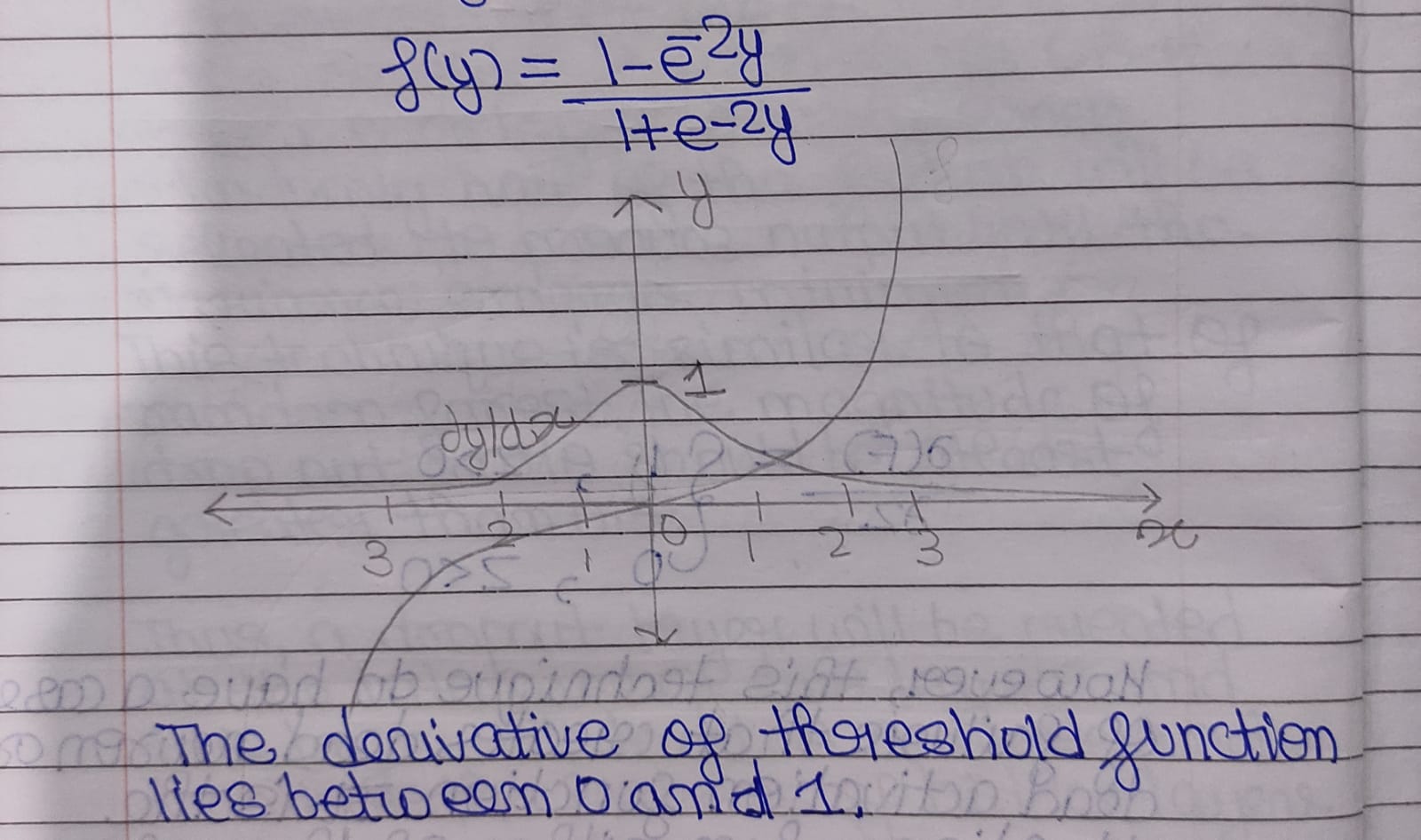

3.) Threshold Functions

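The threshold (step) function is also an activation function. Its output is either 0 or 1, depending on whether the input reaches the threshold; its derivative is 0 everywhere except at the threshold, where it is undefined.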
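A minimal sketch of a threshold function, assuming a threshold of 0 by default. The parameter name `theta` is an assumption of this example.

```python
def threshold(x, theta=0.0):
    """Binary step: 1 when x reaches the threshold theta, else 0."""
    return 1 if x >= theta else 0

print([threshold(x) for x in (-2.0, 0.0, 3.0)])
```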

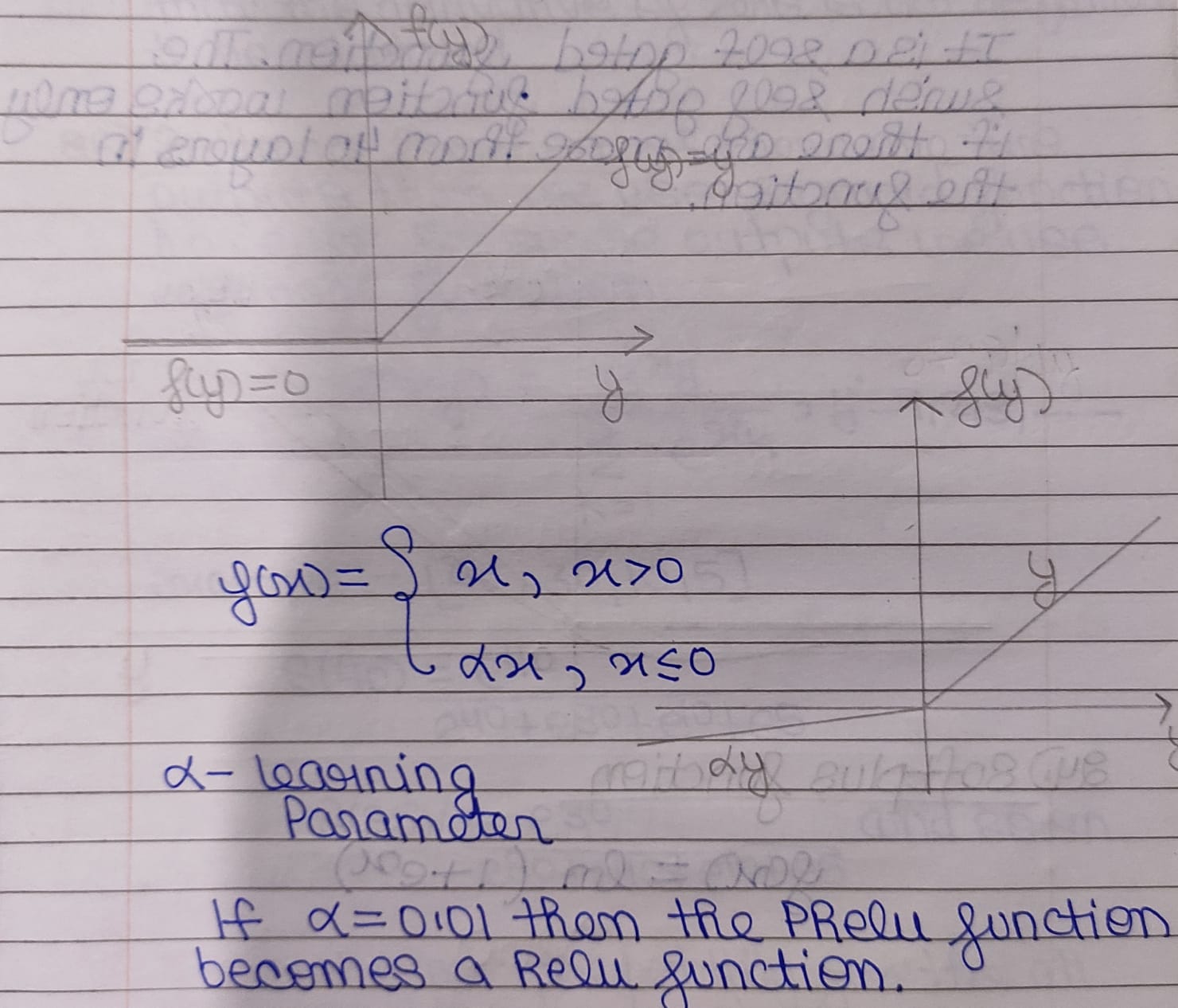

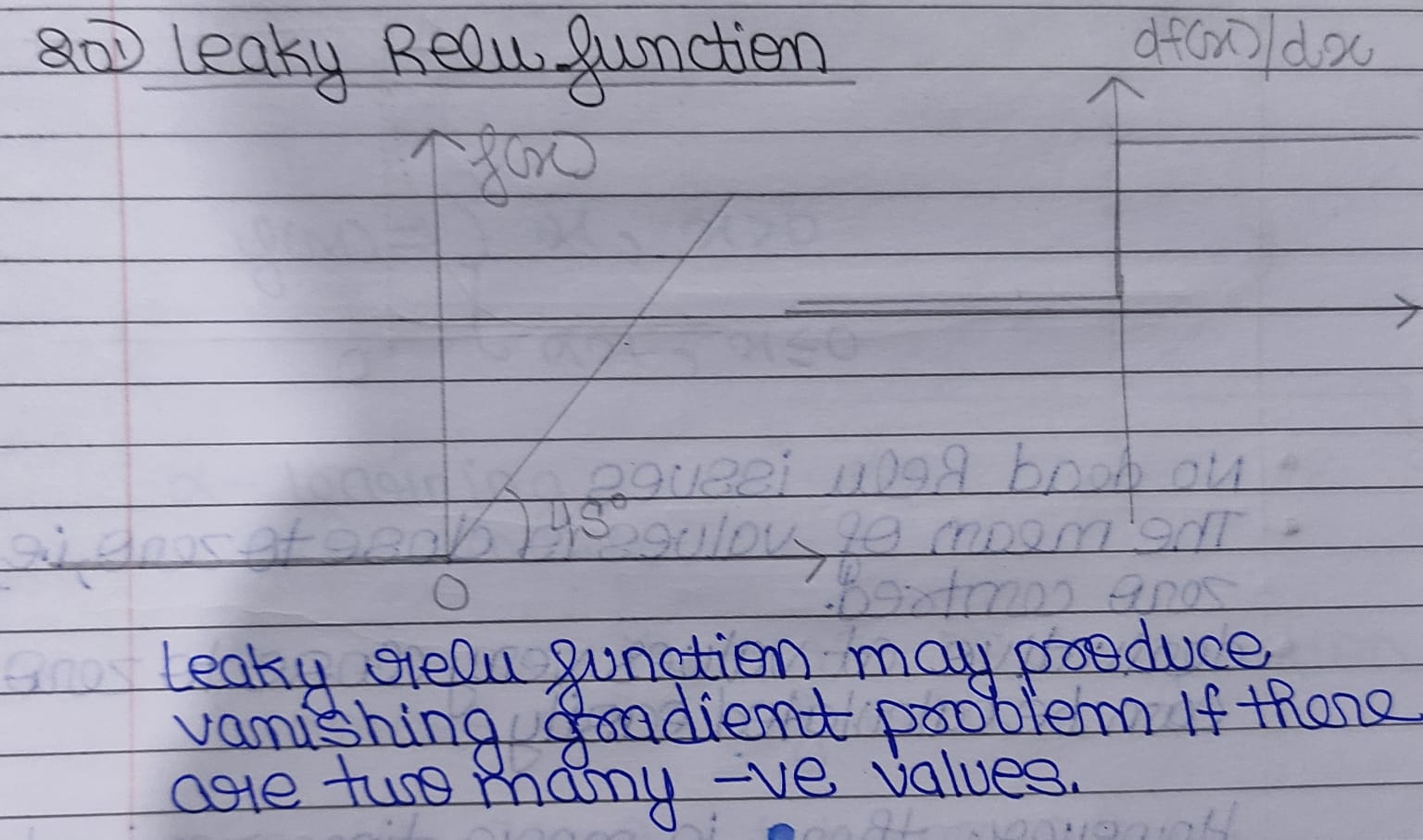

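4.) Leaky ReLU Function

Leaky ReLU keeps a small non-zero slope for negative inputs instead of outputting 0. Because that slope is small, the network may still run into vanishing gradient problems if there are too many negative values.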
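A minimal sketch of leaky ReLU, assuming the commonly used negative slope of 0.01. The parameter name `alpha` is an assumption of this example.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for positive inputs, alpha * x otherwise."""
    return x if x > 0 else alpha * x

def leaky_relu_derivative(x, alpha=0.01):
    """Slope of leaky ReLU: 1 for positive inputs, alpha otherwise."""
    return 1.0 if x > 0 else alpha

print([leaky_relu(x) for x in (-10.0, 0.0, 5.0)])
```

For negative inputs the gradient is `alpha` rather than 0, so the weights still receive a small update.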

5.) Exponential Linear Unit (ELU) Function
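For reference, ELU returns the input unchanged for positive values and `alpha * (exp(x) - 1)` otherwise. The sketch below assumes `alpha = 1.0`.

```python
import math

def elu(x, alpha=1.0):
    """Exponential linear unit: x for positive inputs, alpha * (e^x - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

print([elu(x) for x in (-5.0, 0.0, 2.0)])
```

Negative inputs saturate smoothly towards `-alpha` instead of being cut off at 0.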

6.) Swish Function

Swish is a self-gated function: the input is multiplied by its own sigmoid, f(x) = x · sigmoid(x).
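A minimal sketch of Swish. The optional scaling parameter `beta` is an assumption of this example and defaults to 1, which gives x · sigmoid(x).

```python
import math

def swish(x, beta=1.0):
    """Swish: the input gated by its own sigmoid, x * sigmoid(beta * x)."""
    return x / (1.0 + math.exp(-beta * x))

print([swish(x) for x in (-1.0, 0.0, 1.0)])
```

"Self-gated" means the same value `x` acts both as the signal and, through the sigmoid, as the gate that scales it.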

Here is the front end of our REST app!

Some visual results to help readers relate to the output of the model!

Output of the website once code execution has stopped!

Thank you very much! :)

Team: NUTSandBOLTS MEMBERS: Diksha Deswal, Prabhash Kumar and Himanshu Singh

X____________________________________X________________________________________X