This is the official repo of our work "Pensieve", a training-free method to mitigate visual hallucination in multimodal LLMs (MLLMs).

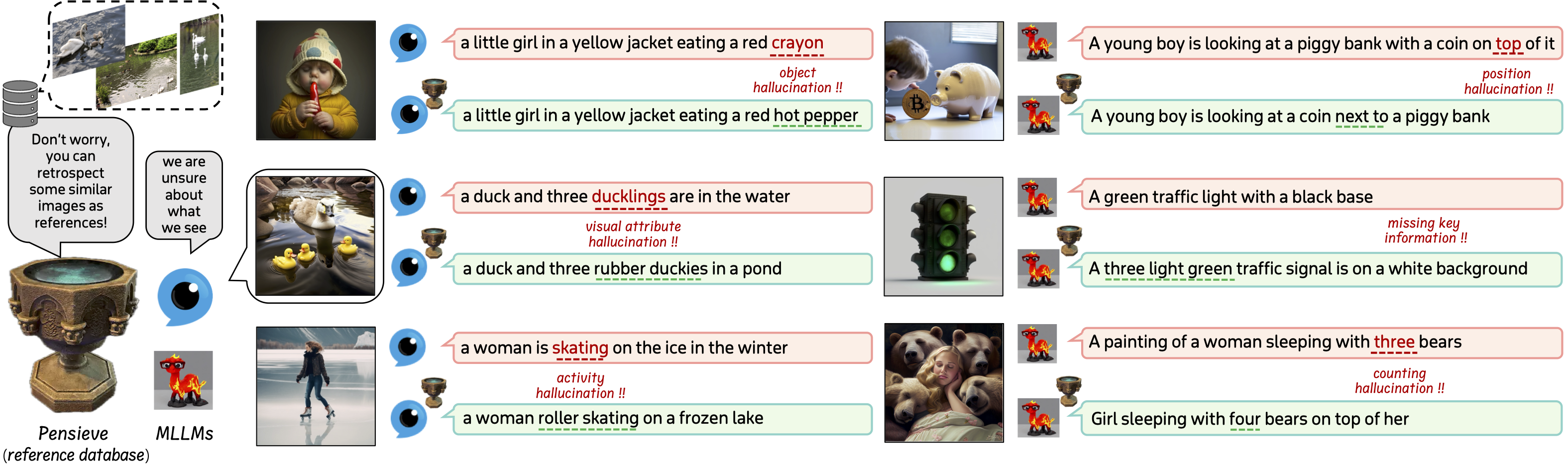

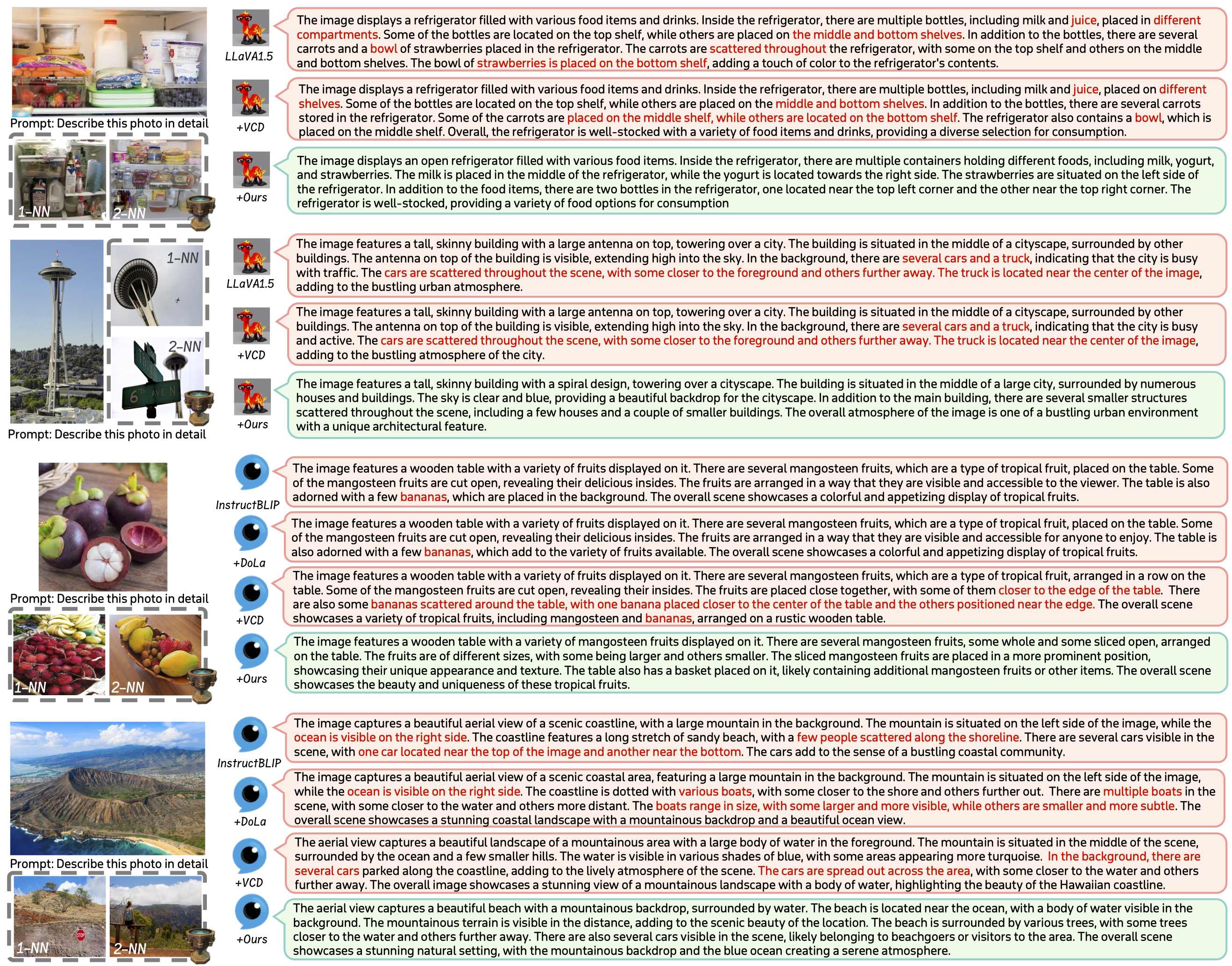

Visual hallucination refers to MLLMs inaccurately describing their visual input: they generate content that conflicts with or is fabricated beyond the provided image, or they neglect crucial visual details (see the examples above). This issue hinders the deployment of MLLMs in safety-critical applications such as autonomous driving and clinical settings.

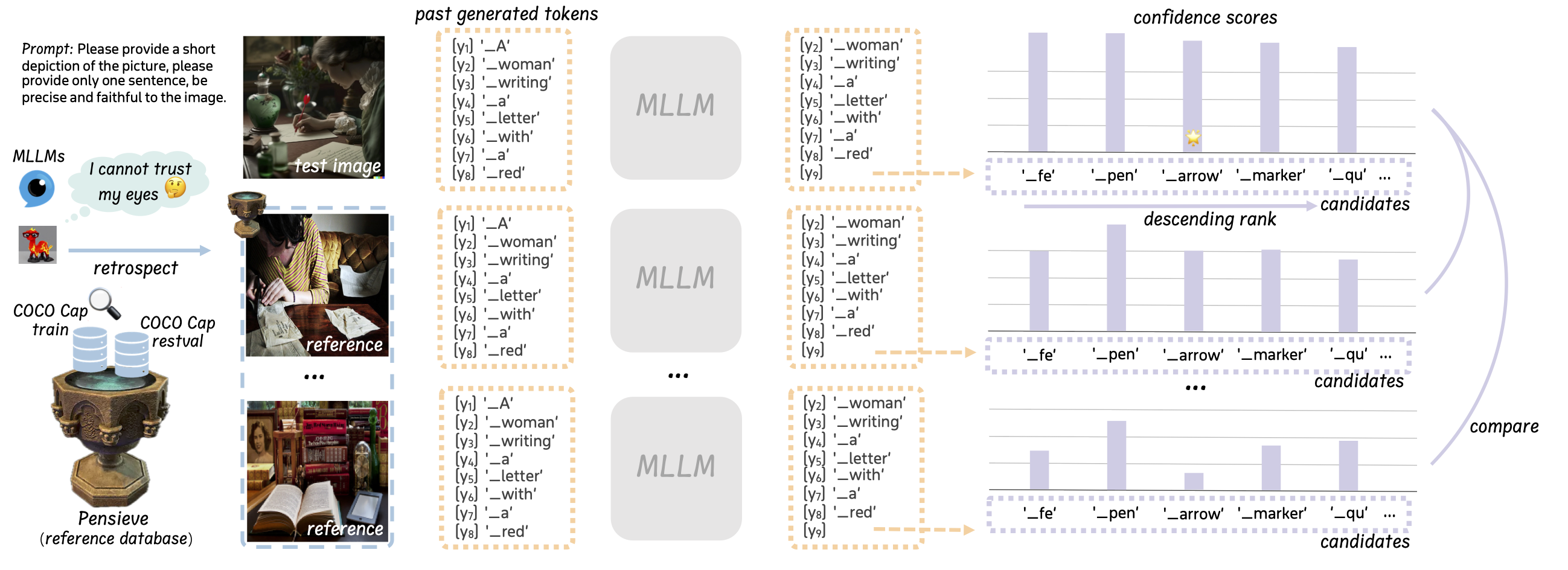

Our investigation into visual hallucination suggests a different perspective: MLLMs may not be entirely oblivious to accurate visual cues when they hallucinate. Inspired by this, we aim to elicit accurate image descriptions from a non-blind MLLM, a crucial first step toward mitigating visual hallucination. This repo provides our source code for the visual hallucination analysis, the reference database construction, and our proposed decoding strategy.

- [2024-05-04]: 📝📝 We release the retrieved image filenames corresponding to each test sample in our experiments.

- [2024-05-04]: 🌈🌈 We release code for integrating Pensieve with beam search.

- [2024-04-27]: 🧑🏻💻👩🏼💻 Our code is released.

- [2024-03-21]: 🎉🎉 Our paper is available on arXiv.

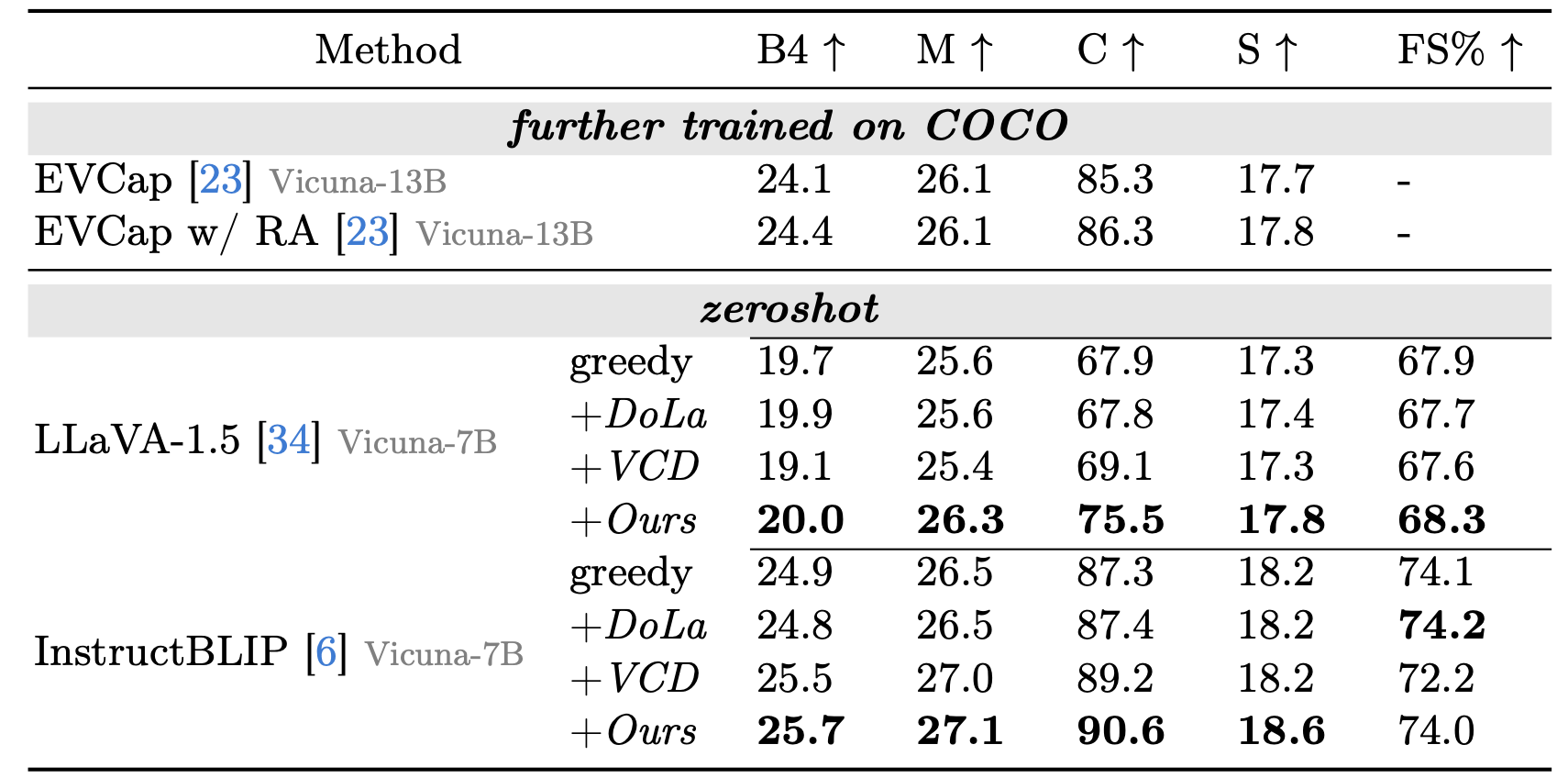

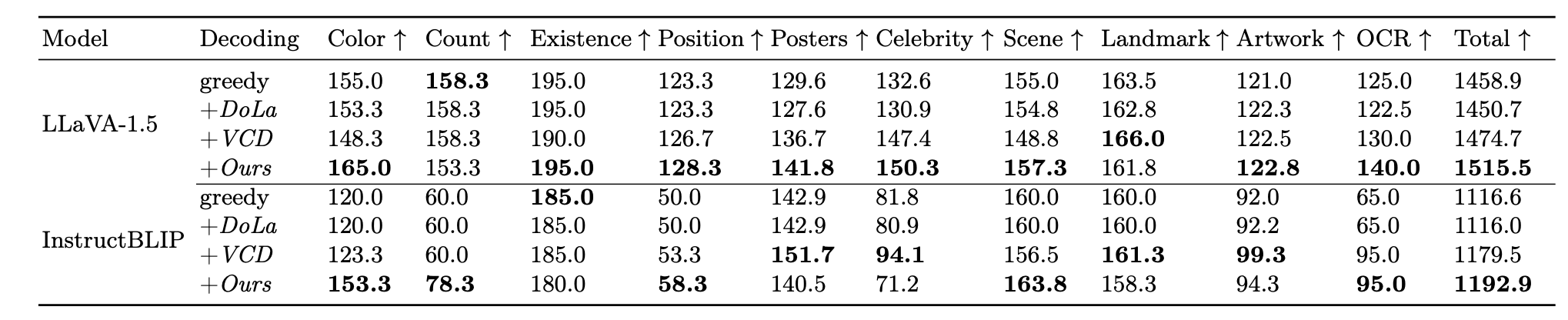

- We introduce Pensieve, a plug-and-play and training-free method to mitigate visual hallucination and enhance the specificity of image descriptions.

- During inference, MLLMs retrospect: they retrieve relevant images from a pre-built, non-parametric vector database to serve as references.

- Comparing against these visual references, much like playing "spot the difference", helps MLLMs identify accurate visual cues.
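The retrospect-then-compare idea can be illustrated with a minimal, pure-Python sketch of one contrastive decoding step. It follows the VCD-style formulation this project builds upon (amplify the logits obtained with the test image, subtract those obtained with a contrasting input, then apply VCD's adaptive plausibility cutoff), and it assumes the logits obtained with the retrieved reference images are simply averaged to form the contrasting input. This is an illustration under those assumptions, not the exact Pensieve update rule; the function names `softmax` and `contrast_with_references` and the parameters `alpha` and `beta` are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax; entries equal to -inf receive probability 0."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


def contrast_with_references(
    logits_test: Sequence[float],
    logits_refs: Sequence[Sequence[float]],
    alpha: float = 1.0,
    beta: float = 0.1,
) -> List[float]:
    """One VCD-style contrastive step against retrieved reference images (sketch).

    logits_test: next-token logits conditioned on the test image.
    logits_refs: next-token logits conditioned on each reference image
                 (same prompt, same decoded prefix, same vocabulary order).
    """
    # Assumption (hypothetical): references are combined by averaging their logits.
    n_refs = len(logits_refs)
    ref_mean = [sum(col) / n_refs for col in zip(*logits_refs)]

    # Boost evidence the test image provides beyond its visually similar references.
    contrasted = [(1 + alpha) * t - alpha * r for t, r in zip(logits_test, ref_mean)]

    # Adaptive plausibility constraint (as in VCD): keep only tokens whose probability
    # under the test image is at least beta times that of the most likely token.
    cutoff = math.log(beta) + max(logits_test)
    return [c if t >= cutoff else float("-inf") for c, t in zip(contrasted, logits_test)]
```

For example, with test-image logits `[2.0, 1.9, 0.0, -1.0]` and reference logits `[[2.0, 0.5, 0.0, -1.0], [2.2, 0.7, 0.1, -1.0]]`, greedy decoding on the raw logits would pick token 0, which the references support just as strongly. The contrasted logits instead favor token 1, the cue specific to the test image, while token 3 falls below the plausibility cutoff and is masked to `-inf`.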

We use PyTorch and the Hugging Face 🤗 Transformers library. We acknowledge their great contributions!

conda create -yn pensieve python=3.9

conda activate pensieve

git clone https://github.com/DingchenYang99/Pensieve.git

cd Pensieve

pip install -r requirements.txt

We use the COCO Caption dataset to build our reference dataset. You can also build your own reference dataset from your in-house data.

- Download the Karpathy split caption annotation `dataset_coco.json` from here, and set `/path/to/your/dataset_coco.json` in `/Pensieve/source/rag/build_index.py`.

- Download the COCO 2017 images (train, val and test) from here. Unzip the images and set `/path/to/your/coco/images/` in the inference scripts.

- Download the Whoops dataset from here, and set `/path/to/your/whoops/` in the inference scripts.

- Download the MME dataset from here, and set `/path/to/your/mme/` in the inference scripts.

- POPE question files are in `/Pensieve/source/data/POPE/`.

- Download LLaVA-Bench (In-the-Wild) from here, and set `/path/to/your/llava-bench/` in the inference scripts.

- Prepare LLaVA-1.5 from here, and set `/path/to/your/llava-v1.5-7b` in the inference scripts.

- Prepare InstructBLIP following their instructions. We use Vicuna-7B v1.1; set `/path/to/your/vicuna-7b-v1.1` in `Pensieve/source/lavis/configs/models/blip2/blip2_instruct_vicuna7b.yaml`.

- Prepare BERT from here, and set `/path/to/your/bert-base-uncased/` in `/Pensieve/source/lavis/models/blip2_models/blip2.py`.

- OFA models are downloaded automatically from ModelScope when running `/Pensieve/source/faithscore/calculate_whoops_faithscore.py`.

- Prepare CLIP-ViT from here and DINOv2-ViT from here, and set `/path/to/your/clip/dir/` and `/path/to/your/.cache/torch/hub/facebookresearch/dinov2/` in `/Pensieve/source/rag/build_index.py` and `/Pensieve/source/rag/retrieve_neighbours.py`.
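As a starting point for a custom reference dataset, the sketch below collects image filenames and captions from the Karpathy-split `dataset_coco.json`. It assumes the usual Karpathy layout (a top-level `images` list whose entries carry `split`, `cocoid` and `sentences[*].raw`) and the COCO 2017 file naming scheme (zero-padded 12-digit image ids). The function name `load_reference_entries` and the default choice of splits are hypothetical and need not match what `build_index.py` actually does.

```python
import json
from typing import Dict, Iterable, List


def load_reference_entries(
    karpathy_json_path: str,
    splits: Iterable[str] = ("train", "restval"),  # hypothetical default
) -> List[Dict[str, object]]:
    """Collect (image filename, captions) pairs from a Karpathy-split annotation file.

    Assumption: the file has a top-level "images" list whose items carry
    "split", "cocoid" and "sentences"[*]["raw"].
    """
    wanted = set(splits)
    with open(karpathy_json_path, "r", encoding="utf-8") as f:
        data = json.load(f)

    entries = []
    for img in data["images"]:
        if img["split"] not in wanted:
            continue  # skip images outside the chosen reference splits
        entries.append({
            # COCO 2017 images are named by their zero-padded 12-digit id.
            "filename": f"{img['cocoid']:012d}.jpg",
            "captions": [s["raw"].strip() for s in img["sentences"]],
        })
    return entries
```

Keeping the split filter explicit makes it easy to leave evaluation images out of the reference pool; the same entry format could hold in-house images and captions instead.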

We retrieve visual references in advance, before the MLLM inference stage.

- Run `/Pensieve/source/rag/build_index.py` to build the reference database.

- Run `/Pensieve/source/rag/retrieve_neighbours.py` to retrieve references for each sample in all test datasets.

- The file paths of the retrieved visual references are written to a JSON file in `/Pensieve/source/rag/q_nn_files/`, which is loaded before inference. We also provide our pre-retrieved results in this folder for a quick start.
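Conceptually, the retrieval step is a nearest-neighbour search in an embedding space. The following minimal sketch assumes every image has already been embedded (for example with the CLIP or DINOv2 encoders listed above) and ranks references by cosine similarity in pure Python; a real index would typically rely on a dedicated vector search library. The function names, the `exclude_self` safeguard and the JSON layout (query filename mapped to a list of reference filenames) are hypothetical and may not match the files in `q_nn_files/`.

```python
import json
import math
from typing import Dict, List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def retrieve_neighbours(
    query_embs: Dict[str, Sequence[float]],
    ref_embs: Dict[str, Sequence[float]],
    k: int = 4,
    exclude_self: bool = True,
) -> Dict[str, List[str]]:
    """For each query image, return the k reference filenames with highest cosine similarity."""
    refs = {name: l2_normalize(emb) for name, emb in ref_embs.items()}
    result: Dict[str, List[str]] = {}
    for q_name, q_emb in query_embs.items():
        q = l2_normalize(q_emb)
        # Dot product of unit vectors == cosine similarity.
        scored = [
            (sum(a * b for a, b in zip(q, r)), r_name)
            for r_name, r in refs.items()
            if not (exclude_self and r_name == q_name)
        ]
        scored.sort(key=lambda s: s[0], reverse=True)
        result[q_name] = [name for _, name in scored[:k]]
    return result


def save_neighbours(neighbours: Dict[str, List[str]], out_path: str) -> None:
    """Write the query -> references mapping to JSON so inference can load it later."""
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(neighbours, f, indent=2)
```

The `exclude_self` flag guards against a query retrieving itself when test images may also appear in the reference pool (both can come from COCO). Precomputing and caching neighbours this way keeps the encoders and the index out of the MLLM inference loop.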

- Prepare the COCO Caption evaluation suite from here for image captioning metrics.

- Prepare FaithScore for visual hallucination evaluation. Note: we do not use LLaVA-1.5 as the evaluator, because we are assessing the visual hallucination of LLaVA-1.5-7B itself.

- Inference scripts can be found in `/Pensieve/source/evaluation`.

- To reproduce our visual hallucination analysis, set `save_logits` to `True` in `/Pensieve/source/evaluation/whoops_caption_llava_rancd.py`. This saves the predicted logit distribution for every decoded token. Then run `/Pensieve/source/evaluation/eval_whoops_visual_influence.py` and `/Pensieve/source/evaluation/eval_whoops_nns_influence.py` to examine whether MLLMs are blind amid visual hallucination, and whether similar images induce analogous visual hallucinations. To visualize the results, you can use `/Pensieve/source/util/plot_per_token.ipynb`.

- You can compute quantitative results on POPE with `/Pensieve/source/evaluation/calculate_pope.py`, and on MME with `/Pensieve/source/evaluation/calculate_mme.py`.
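For reference, the standard metric definitions behind the POPE and MME numbers can be sketched as follows. POPE reports accuracy, precision, recall, F1 and the ratio of "yes" answers, treating "yes" as the positive class. Each MME subtask score is 100 × (accuracy + accuracy+), where accuracy is computed over questions and accuracy+ over images whose two questions are both answered correctly. The answer-parsing rule in the hypothetical helper `to_yes_no` (the first word must be "yes") is an assumption, and this sketch is not a copy of `calculate_pope.py` or `calculate_mme.py`.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def to_yes_no(answer: str) -> str:
    """Map a free-form answer to "yes"/"no" (assumed rule: first word must be "yes")."""
    words = answer.strip().lower().split()
    first = words[0].strip(".,!") if words else ""
    return "yes" if first == "yes" else "no"


def pope_metrics(answers: Sequence[str], labels: Sequence[str]) -> Dict[str, float]:
    """POPE metrics with "yes" as the positive class."""
    preds = [to_yes_no(a) for a in answers]
    gold = [label.strip().lower() for label in labels]
    pairs = list(zip(preds, gold))
    tp = pairs.count(("yes", "yes"))
    fp = pairs.count(("yes", "no"))
    tn = pairs.count(("no", "no"))
    fn = pairs.count(("no", "yes"))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "yes_ratio": (tp + fp) / len(pairs),
    }


def mme_subtask_score(records: Sequence[Tuple[str, str, str]]) -> float:
    """Score of one MME subtask from (image_id, answer, label) triples.

    score = 100 * (acc + acc_plus): acc is computed over questions, acc_plus over
    images whose questions (two per image in MME) are all answered correctly.
    """
    per_image: Dict[str, List[bool]] = defaultdict(list)
    for image_id, answer, label in records:
        per_image[image_id].append(to_yes_no(answer) == label.strip().lower())
    n_questions = sum(len(v) for v in per_image.values())
    acc = sum(sum(v) for v in per_image.values()) / n_questions
    acc_plus = sum(all(v) for v in per_image.values()) / len(per_image)
    return 100.0 * (acc + acc_plus)
```

The MME perception and cognition totals are sums of these subtask scores. A high `yes_ratio` on POPE is a useful warning sign of a model that tends to affirm nonexistent objects.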

Our project is built upon VCD. We sincerely acknowledge the great contributions of the following works:

- VCD: Mitigating Object Hallucinations in Large Vision-Language Models through Visual Contrastive Decoding

- DOLA: Decoding by Contrasting Layers Improves Factuality in Large Language Models

- FaithScore: Evaluating Hallucinations in Large Vision-Language Models

- LLaVA-1.5: Improved Baselines with Visual Instruction Tuning

- InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning

If you find our project useful, please consider citing our paper:

@article{yang2024pensieve,
  title={Pensieve: Retrospect-then-Compare Mitigates Visual Hallucination},
  author={Yang, Dingchen and Cao, Bowen and Chen, Guang and Jiang, Changjun},
  journal={arXiv preprint arXiv:2403.14401},
  year={2024}
}