This repo contains the official implementation of our paper:

Mining Cross-Person Cues for Body-Part Interactiveness Learning in HOI Detection (ECCV 2022)

Xiaoqian Wu*, Yong-Lu Li*, Xinpeng Liu, Junyi Zhang, Yuzhe Wu, and Cewu Lu [Paper] [Supplementary Material] [arXiv] (links upcoming)

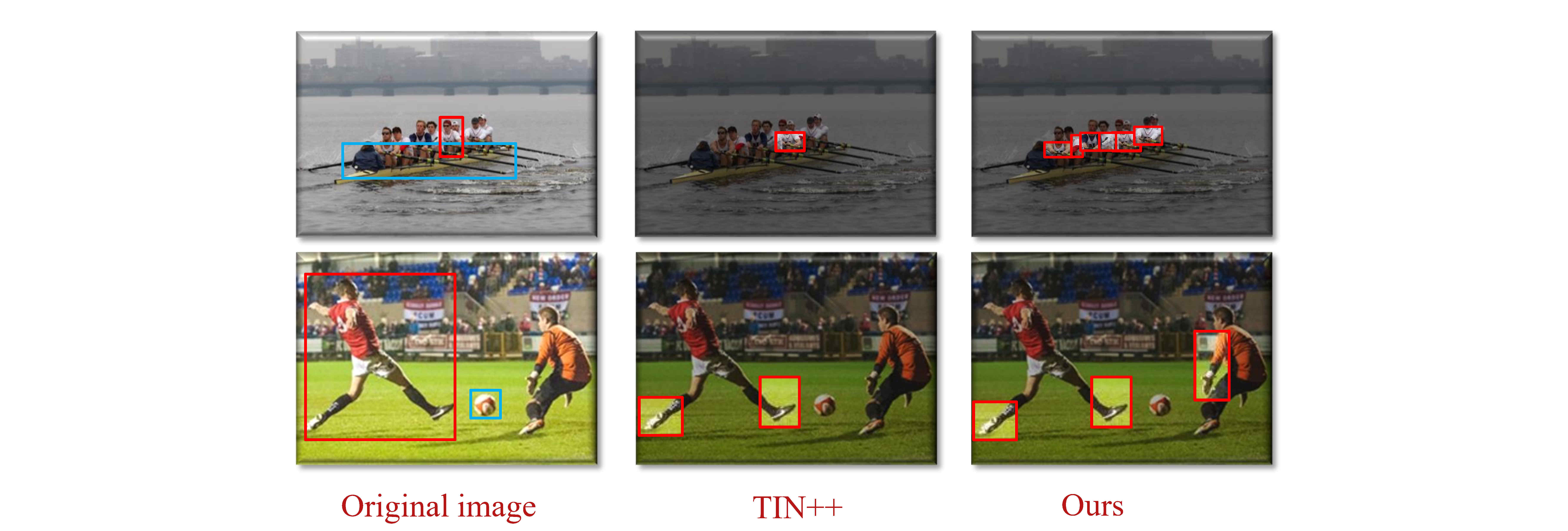

In this paper, we focus on learning human body-part interactiveness from a previously overlooked global perspective. We construct body-part saliency maps to mine informative cues not only from the target person but also from the other persons in the image.

python==3.9

pytorch==1.9

torchvision==0.10.1
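As a quick sanity check before training, the pinned versions above can be compared with what is installed. The following is a minimal sketch, not part of the repo: the helper names `matches_pin` and `check_environment` are hypothetical, and it assumes PyTorch is installed under its usual PyPI name `torch`.

```python
import sys
from importlib import metadata

# Pins copied from the versions listed above; "torch" is the PyPI name for PyTorch.
PINNED = {"torch": "1.9", "torchvision": "0.10.1"}


def matches_pin(installed: str, pinned: str) -> bool:
    """True if `installed` has the same leading dotted components as `pinned`."""
    core = installed.split("+")[0]  # drop local build tags such as "+cu111"
    want = pinned.split(".")
    return core.split(".")[: len(want)] == want


def check_environment(pins=PINNED, get_version=metadata.version):
    """Return {package: problem} for each package that is missing or mismatched."""
    problems = {}
    for name, pinned in pins.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if not matches_pin(installed, pinned):
            problems[name] = f"found {installed}, expected {pinned}"
    return problems


if __name__ == "__main__":
    if sys.version_info[:2] != (3, 9):
        print(f"python: found {sys.version_info.major}.{sys.version_info.minor}, expected 3.9")
    for name, problem in check_environment().items():
        print(f"{name}: {problem}")
```

An empty result from `check_environment()` means both packages match their pins; a pin such as `1.9` accepts any `1.9.x` build.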

For HICO-DET, download the pre-calculated pose keypoint files here and put them into the data folder. They are used to compute the body-part saliency maps.

The HICO-DET dataset can be downloaded here. After downloading, unpack hico_20160224_det.tar.gz into the data folder. We use the annotation files provided by the PPDM authors, which can be downloaded from here.

For training, download the COCO pre-trained DETR here and put it into the params folder.
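Once the downloads are in place, a small script can confirm the folder layout before launching a run. This is a hedged sketch only: `missing_paths` is a hypothetical helper, and the entry `data/hico_20160224_det` assumes the archive unpacks into a directory named after itself. The pose keypoint, annotation, and DETR checkpoint file names are not stated above, so add the names of the files you actually downloaded.

```python
from pathlib import Path

# Only the `data` and `params` folders and the archive name come from the
# instructions above. The unpacked directory name is an assumption; extend
# this list with your pose keypoint, annotation, and DETR checkpoint files.
EXPECTED = [
    "data",
    "data/hico_20160224_det",
    "params",
]


def missing_paths(root, expected=EXPECTED):
    """Return the entries of `expected` that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # Run from the repository root.
    for rel in missing_paths("."):
        print(f"missing: {rel}")
```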

To train with 4 processes on one node (typically one per GPU):

python -m torch.distributed.launch --nproc_per_node=4 main.py --config_path configs/interactiveness_train.yml

To evaluate:

python -m torch.distributed.launch --nproc_per_node=4 main.py --config_path configs/interactiveness_eval.yml
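The two commands above differ only in the config file, and `--nproc_per_node` sets how many worker processes are started on the machine. On a machine with a different number of GPUs, the command can be assembled programmatically. The sketch below assumes nothing beyond the flags shown above; `build_launch_command` is a hypothetical helper, not part of the repo.

```python
import shlex
import sys


def build_launch_command(config_path, nproc_per_node=4, python=sys.executable):
    """Build the argument list for the distributed launch command shown above."""
    return [
        python,
        "-m",
        "torch.distributed.launch",
        f"--nproc_per_node={nproc_per_node}",
        "main.py",
        "--config_path",
        config_path,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, e.g. for a 2-GPU machine.
    cmd = build_launch_command("configs/interactiveness_train.yml", nproc_per_node=2)
    print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)` from the repository root.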

- The pretrained model and result file

- Auxiliary benchmark code

Upcoming