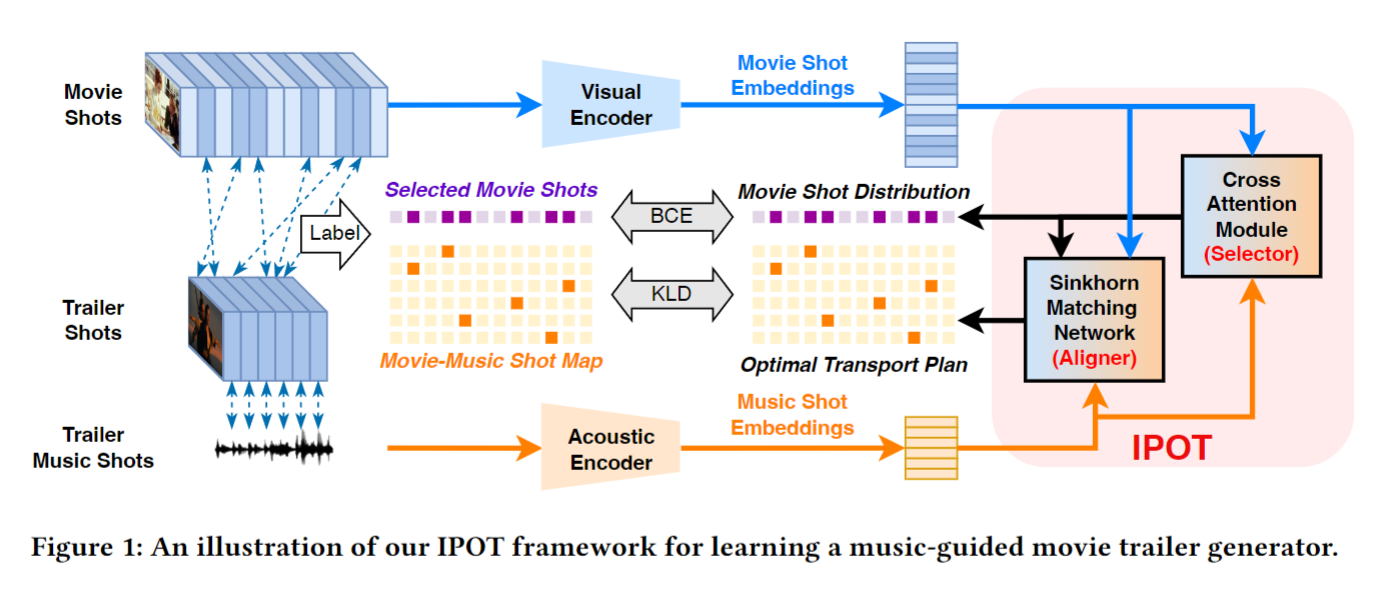

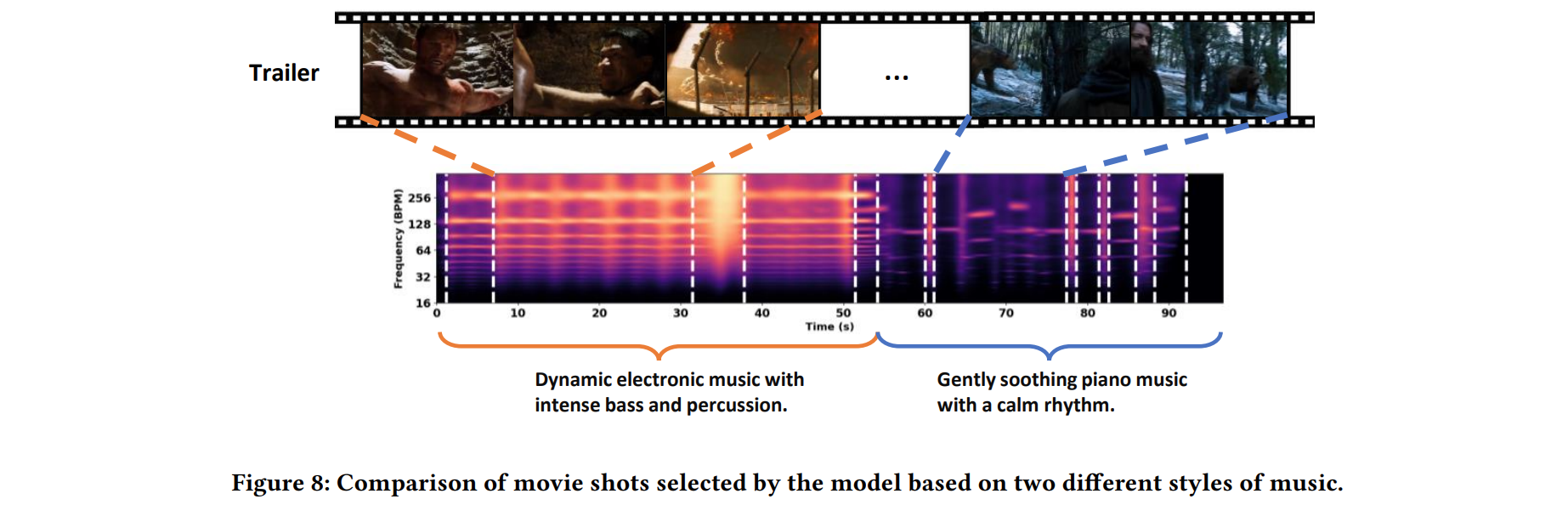

TL;DR: Given a raw video and a piece of music, we generate an audio-visually coherent and appealing video trailer/montage.

.

├── dataset

│   ├── CMTD

│   │   ├── training dataset

│   │   │   ├── audio_shot_embs (npy format, segmented audio shots)

│   │   │   ├── movie_shot_embs (npy format, segmented movie shots)

│   │   │   ├── trailer_shot_embs (npy format, segmented trailer shots)

│   │   │   └── audio_movie_alignments (json format, alignment relation of audio and movie shot indices)

│   │   ├── test dataset

│   │   │   ├── audio_shot_embs (npy format, segmented audio shots)

│   │   │   ├── movie_shot_embs (npy format, segmented movie shots)

│   │   │   ├── scene_test_movies (json format, test movie shot duration information)

│   │   │   └── ruptures_audio_segmentation.json (json format, test audio shot duration information)

│   │   ├── network_500.net

│   │   ├── metadata.json

│   │   └── tp_annotation.json

│   └── MV

│       ├── audio_shot_embs (npy format, segmented music shots)

│       └── movie_shot_embs (npy format, segmented video shots)

├── alignment

├── feature_extraction

├── segmentation

└── utils
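After downloading, it can help to confirm that the data sits where the tree above expects it. The following is a minimal sketch, not part of the repository: the helper name `missing_dataset_paths` and the constant `EXPECTED_PATHS` are hypothetical, and the relative paths are simply copied from the tree.

```python
from pathlib import Path

# Relative paths copied from the directory tree above (under "dataset").
EXPECTED_PATHS = [
    "CMTD/training dataset/audio_shot_embs",
    "CMTD/training dataset/movie_shot_embs",
    "CMTD/training dataset/trailer_shot_embs",
    "CMTD/training dataset/audio_movie_alignments",
    "CMTD/test dataset/audio_shot_embs",
    "CMTD/test dataset/movie_shot_embs",
    "CMTD/test dataset/scene_test_movies",
    "CMTD/test dataset/ruptures_audio_segmentation.json",
    "CMTD/network_500.net",
    "CMTD/metadata.json",
    "CMTD/tp_annotation.json",
    "MV/audio_shot_embs",
    "MV/movie_shot_embs",
]


def missing_dataset_paths(dataset_root):
    """Return the expected entries that do not exist under dataset_root."""
    root = Path(dataset_root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    # "dataset" is the top-level data folder shown in the tree.
    for rel in missing_dataset_paths("dataset"):
        print("missing:", rel)
```

An empty result means every entry from the tree was found; it says nothing about whether the files inside are complete.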

- python=3.8.19

- pytorch=2.3.0+cu121

- numpy=1.24.1

- matplotlib=3.7.5

- scikit-learn=1.3.2

- scipy=1.10.1

- sk-video=1.1.10

- ffmpeg=1.4

Alternatively, install the dependencies with:

pip install -r requirement.txt
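To compare an existing environment against the versions listed above, a small check like the one below may be useful. It is a sketch under assumptions: the keys are the PyPI distribution names as assumed here (for example `torch` for pytorch), and the helper names `installed_version` and `find_mismatches` are hypothetical.

```python
from importlib import metadata

# Pins copied from the dependency list above; keys are assumed PyPI names.
PINNED = {
    "torch": "2.3.0",
    "numpy": "1.24.1",
    "matplotlib": "3.7.5",
    "scikit-learn": "1.3.2",
    "scipy": "1.10.1",
    "sk-video": "1.1.10",
    "ffmpeg": "1.4",
}


def installed_version(name):
    """Return the installed version string, or None if not installed."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pinned, lookup=installed_version):
    """Map each package whose version differs from its pin to (pin, found)."""
    mismatches = {}
    for name, wanted in pinned.items():
        found = lookup(name)
        # Ignore local build tags such as "+cu121" when comparing.
        if found is None or found.split("+")[0] != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in find_mismatches(PINNED).items():
        print(f"{name}: expected {wanted}, found {found}")
```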

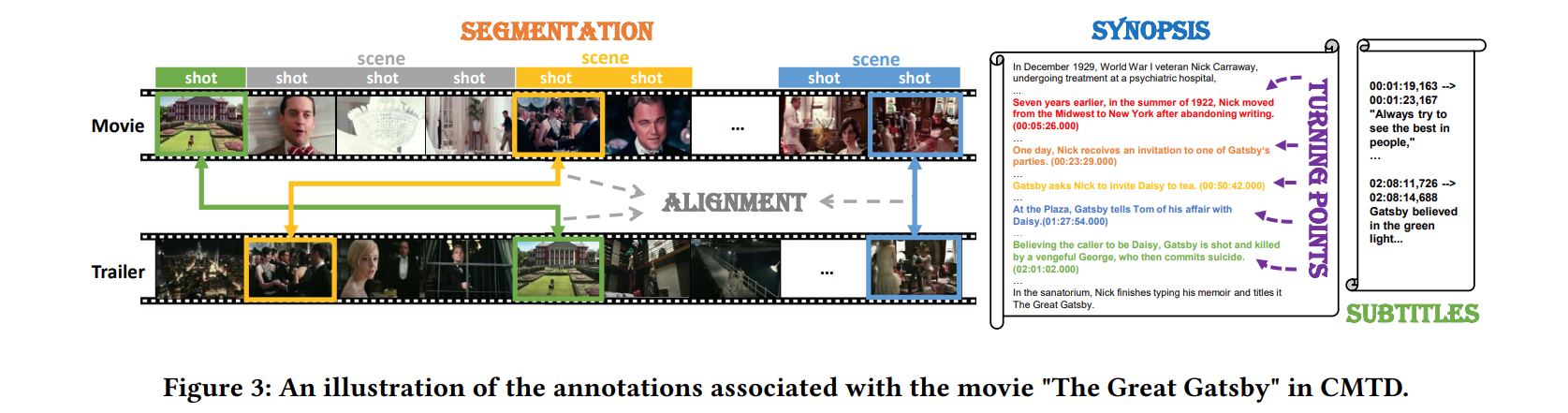

We construct a new public comprehensive movie-trailer dataset (CMTD) for movie trailer generation and future video understanding tasks, and we train and evaluate various trailer generators on it. Please download the CMTD dataset from these links: CMTD. We also provide a music video dataset (MV) for the pre-training process. Please download the MV dataset from these links: MV. The MV videos are a subset of the SymMV dataset.

Note that, because of movie copyright restrictions, we cannot provide the original movies. The dataset provides only the visual and acoustic features extracted by ImageBind, after the movie shots and audio shots were segmented using BaSSL.

We provide the trained model network_500.net under the CMTD dataset folder.

We use BaSSL to split each movie into movie shots and scenes. The code can be found in ./segmentation/scene_segmentation_bassl.py.

If you want to perform shot segmentation on your local video, remember to modify the path for reading the video and the path for saving the segmentation results in the code.

movie_dataset_base = '' # video data directory

movies = os.listdir(movie_dataset_base)

save_scene_dir_base = '' # save directory of scene json files

finished_files = os.listdir(save_scene_dir_base)
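The `finished_files` listing suggests that already-processed movies can be skipped on a rerun. A minimal sketch of that idea follows; it is not the script's actual logic. The helper name `pending_movies` is hypothetical, and it assumes each result is saved as `<movie name without extension>.json`, which the script may not do.

```python
import os


def pending_movies(movie_dataset_base, save_scene_dir_base):
    """List movies that have no scene JSON in the save directory yet."""
    movies = sorted(os.listdir(movie_dataset_base))
    finished_files = set(os.listdir(save_scene_dir_base))
    # Assumption: results are saved as "<movie name without extension>.json".
    return [
        movie for movie in movies
        if os.path.splitext(movie)[0] + ".json" not in finished_files
    ]
```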

During the training phase, to obtain aligned movie shots and audio shots from each official trailer, we segment the official trailer audio according to the durations of the movie shots.

The code can be found in ./segmentation/seg_audio_based_on_shots.py.

If you want to perform audio segmentation based on your own movie shot segmentation, remember to modify the path for reading the audio and the path for saving the segmentation results in the code.

seg_json = dict() # save the segmentation info of audio

base = ''

save_seg_json_name = 'xxx.json'

save_bar_base = ""

scene_trailer_base = ""

audio_base = ""
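The idea of cutting the trailer audio according to shot durations can be sketched as follows. This is an illustration only, not the code in seg_audio_based_on_shots.py: the function name `shot_durations_to_segments` is hypothetical, and it assumes the shot durations are given in seconds and in playback order.

```python
def shot_durations_to_segments(durations, sample_rate):
    """Convert consecutive shot durations (seconds) into audio sample ranges."""
    segments = []
    start_time = 0.0
    for duration in durations:
        end_time = start_time + duration
        # Each segment is a half-open range [start_sample, end_sample).
        segments.append(
            (round(start_time * sample_rate), round(end_time * sample_rate))
        )
        start_time = end_time
    return segments


# Three shots of 1.5 s, 2.0 s and 0.5 s at 16 kHz.
print(shot_durations_to_segments([1.5, 2.0, 0.5], 16000))
# → [(0, 24000), (24000, 56000), (56000, 64000)]
```

Each returned pair can be used to slice a waveform array, so that audio shot i covers the same time span as movie shot i.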

We use Ruptures to split the music into music shots. The code can be found in ./segmentation/scene_segmentation_ruptures.py.

If you want to perform shot segmentation on your local audio, remember to modify the path for reading the audio and the path for saving the segmentation results in the code.

audio_file_path = '' # music data path

save_result_base = '' # save segmentation result
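Ruptures' `predict` methods return a sorted list of breakpoint indices whose last element is the number of samples in the signal. The sketch below shows how such a list could be turned into per-shot timing information. The function name `breakpoints_to_segments`, the `seconds_per_frame` parameter, and the dictionary keys are assumptions for illustration; the repository's JSON layout may differ.

```python
def breakpoints_to_segments(breakpoints, seconds_per_frame):
    """Turn sorted end indices into start/end/duration records in seconds."""
    segments = []
    start = 0
    for end in breakpoints:
        start_s = start * seconds_per_frame
        end_s = end * seconds_per_frame
        segments.append(
            {"start": start_s, "end": end_s, "duration": end_s - start_s}
        )
        # The next segment begins where this one ends.
        start = end
    return segments


# Breakpoints over 400 feature frames, each frame covering 0.5 s.
for segment in breakpoints_to_segments([100, 250, 400], 0.5):
    print(segment)
```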

During the testing phase, given a movie and a piece of music, we use BaSSL to segment the movie shots and Ruptures to segment the music shots.

We use ImageBind to extract visual features of the movie shots and acoustic features of the audio shots. The code can be found in ./feature_extraction/.

After downloading the dataset and placing it as shown above, you can train the model with:

python train.py

After processing your own movies, or downloading the test dataset and placing it as shown above, you can use (and modify) the following script to generate the trailer:

python trailer_generator.py

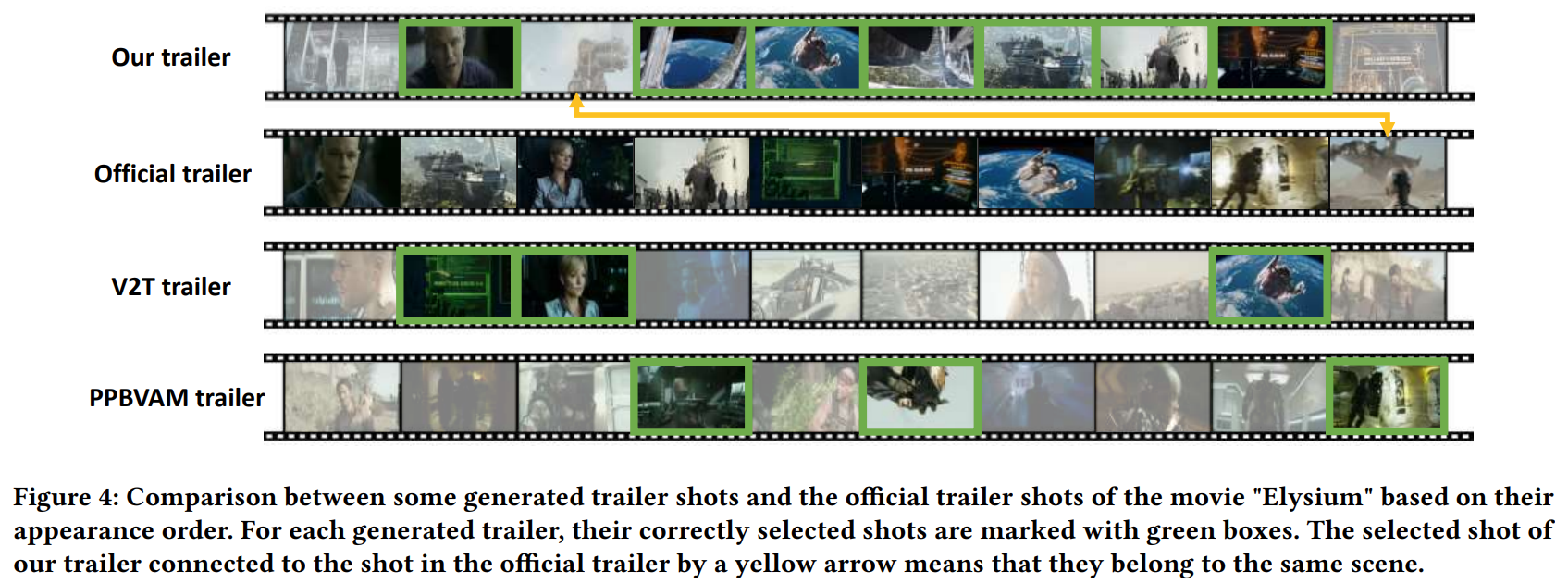

More results can be found on the project page!

Given a long video (e.g., a full movie, video_name.mp4) and a piece of music (e.g., audio_name.wav):

1. Resize the input video to 320p and generate an intra-frame coded version of it, so that the movie shot segmentation is more accurate.

python ./utils/intra_video_ffmpeg.py; python ./utils/rescale_movies_ffmpeg.py

2. Segment the input 320p video into movie shots with BaSSL.

python ./segmentation/scene_segmentation_bassl.py

3. Segment the input music into music shots with Ruptures.

python ./segmentation/audio_segmentation_ruptures.py

4. Encode the movie shots into shot-level visual embeddings with ImageBind.

python ./feature_extraction/extract_video_embs.py

5. Encode the music shots into shot-level acoustic embeddings with ImageBind.

python ./feature_extraction/extract_audio_embs.py

6. With the processed embeddings, generate the personalized trailers by running:

python trailer_generator.py

Note: for steps 4 and 5, the Python files should be placed inside the ImageBind repo, e.g., in the './ImageBind/' directory.

You can also run the provided run.py for one-step inference, which covers all six steps above.

python run.py --input_video_path video_path --input_audio_path audio_path
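For readers who prefer to drive the individual scripts themselves, the steps above could be chained roughly as follows. This is a sketch and not how run.py is implemented: the names `PIPELINE_SCRIPTS` and `run_pipeline` are hypothetical, and it assumes the paths inside each script have already been edited as described earlier and that the feature extraction scripts can be run from their listed locations.

```python
import subprocess
import sys

# Script order copied from the steps above (step 1 uses two scripts).
PIPELINE_SCRIPTS = [
    "./utils/intra_video_ffmpeg.py",
    "./utils/rescale_movies_ffmpeg.py",
    "./segmentation/scene_segmentation_bassl.py",
    "./segmentation/audio_segmentation_ruptures.py",
    "./feature_extraction/extract_video_embs.py",
    "./feature_extraction/extract_audio_embs.py",
    "trailer_generator.py",
]


def run_pipeline(scripts=PIPELINE_SCRIPTS, runner=subprocess.run):
    """Run each script in order and stop at the first failure."""
    for script in scripts:
        # check=True raises CalledProcessError if a step exits non-zero.
        runner([sys.executable, script], check=True)
```

Calling `run_pipeline()` from the repository root runs the scripts with the current Python interpreter. Passing a different `runner` makes it possible to log or dry-run the commands instead.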

Please cite our paper if you use this code or the CMTD and MV datasets:

@article{wang2024inverse,

title={An Inverse Partial Optimal Transport Framework for Music-guided Movie Trailer Generation},

author={Wang, Yutong and Zhu, Sidan and Xu, Hongteng and Luo, Dixin},

journal={arXiv preprint arXiv:2407.19456},

year={2024}

}