If you started running KoBo Toolbox using a version of `kobo-docker` from before 2016.10.13, actions that recreate the `kobocat` container (including `docker-compose up ...` under some circumstances) will result in losing access to user media files (e.g. responses to photo questions). Safely stored media files can be found in `kobo-docker/.vols/kobocat_media_uploads/`.

Files that were not safely stored can be found inside Docker volumes as well as in your current and any previous `kobocat` containers. One quick way of finding these is to search the Docker root directory directly. The root directory can be found by running `docker info` and looking for the "Docker Root Dir" entry (not to be confused with the storage driver's plain "Root Dir").
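As a rough sketch of that lookup, the helper below extracts the "Docker Root Dir" value from `docker info` output while skipping the storage driver's plain "Root Dir" line. The function name `docker_root_dir_from_info` is hypothetical and chosen only for illustration; it is not part of kobo-docker.

```shell
# Hypothetical helper (not part of kobo-docker): read `docker info` output on
# stdin and print only the value of the "Docker Root Dir" entry.
docker_root_dir_from_info() {
    # The pattern is anchored to the start of the line (after optional
    # indentation), so the storage driver's plain "Root Dir:" line is ignored.
    sed -n 's/^[[:space:]]*Docker Root Dir:[[:space:]]*//p'
}

# Usage (requires a running Docker daemon):
#   YOUR_DOCKER_ROOT_DIR=$(docker info 2>/dev/null | docker_root_dir_from_info)
```

Recent Docker releases should also be able to print the value directly with `docker info --format '{{.DockerRootDir}}'`, which avoids parsing the human-readable output.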

Once this is noted, you can `docker-compose stop` and search for potentially-missed media attachment directories with something like `sudo find ${YOUR_DOCKER_ROOT_DIR}/ -name attachments`. These attachment directories will be of the format `.../${SOME_KOBO_USER}/attachments`. Back up each KoBo user's entire directory (the parent of the `attachments` directory) for safekeeping, then move/merge them under `.vols/kobocat_media_uploads/`, creating that directory if it doesn't exist and taking care not to overwrite any newer files already present there. Once this is done, clear out the old container and any attached volumes with `docker-compose rm -v kobocat`, then `git pull` the latest `kobo-docker` code and `docker-compose pull` the latest images for `kobocat` and the rest. Your media files will be safely stored from then on.
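The merge step could be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `merge_kobo_media` is hypothetical, GNU `cp` is assumed (for the `-u` option), and the destination must not sit inside the directory being searched. It does not perform the backup step, which should be done first.

```shell
# Hypothetical helper (not part of kobo-docker): merge every KoBo user
# directory found under a search root into the media uploads directory.
#   $1: directory to search, e.g. your Docker root directory
#   $2: destination, e.g. .vols/kobocat_media_uploads
# Assumes GNU cp (for -u) and that $2 is not located inside $1.
merge_kobo_media() {
    search_root=$1
    dest=$2
    mkdir -p "$dest"    # create the destination if it doesn't exist
    find "$search_root" -type d -name attachments -exec sh -c '
        dest=$1
        shift
        for attachments_dir in "$@"; do
            # The KoBo user directory is the parent of "attachments".
            user_dir=$(dirname "$attachments_dir")
            # -a preserves timestamps and permissions; -u skips any file
            # whose copy in the destination is at least as new.
            cp -a -u "$user_dir" "$dest"/
        done
    ' sh "$dest" {} +
}

# Usage (after `docker-compose stop` and after backing up, likely as root):
#   merge_kobo_media "${YOUR_DOCKER_ROOT_DIR}" .vols/kobocat_media_uploads
```

Copying rather than moving leaves the originals in place until you have confirmed that the merged result is complete.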

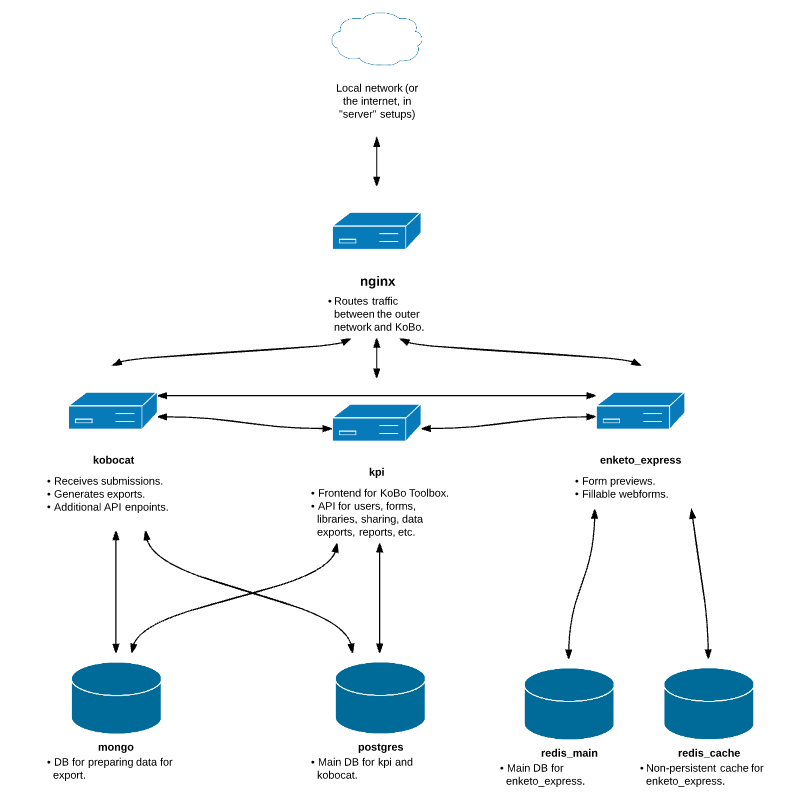

kobo-docker is used to run a copy of the KoBo Toolbox survey data collection platform on a machine of your choosing. It relies on Docker to separate the different parts of KoBo into different containers (which can be thought of as lighter-weight virtual machines) and Docker Compose to configure, run, and connect those containers. Below is a diagram (made with Lucidchart) of the containers that make up a running kobo-docker system and their connections:

- The first decision to make is whether your `kobo-docker` instance will use secure (HTTPS) or insecure (plain HTTP) communications when interacting with clients. While secure communications are obviously desirable, the requirements imposed by the public key cryptographic system underpinning HTTPS add a considerable degree of complexity to the initial setup (we are in the process of simplifying this with `letsencrypt`). In contrast, setups using plain HTTP can be suitable in some cases where security threats are unlikely, such as for use strictly within a secure private network. To emphasize the difference between the two types of setup, they are referred to herein as server (HTTPS) and local (HTTP).

- Clone this repository, retaining the directory name `kobo-docker`.

- Install Docker Compose for Linux on x86-64. Power users on Mac OS X and Windows can try the new Docker beta for those platforms, but there are known issues with filesystem syncing on those platforms.

- Decide whether you want to create an HTTP-only local instance of KoBo Toolbox, or an HTTPS publicly-accessible server instance. Local instances will use `docker-compose.local.yml` and `envfile.local.txt`, whereas server instances will use `docker-compose.server.yml` and `envfile.server.txt` instead.

  NOTE: For server instances, you are expected to meet the usual basic requirements of serving over HTTPS. That is, public (not local-only) DNS records for the domain and subdomains as specified in `envfile.server.txt`, as well as a CA-signed (not self-signed) wildcard (or SAN) SSL certificate+key pair valid for those subdomains, and some basic knowledge of Nginx server administration and the use of SSL.

- Based on your desired instance type, create a symlink named `docker-compose.yml` to either `docker-compose.local.yml` or `docker-compose.server.yml` (e.g. `ln -s docker-compose.local.yml docker-compose.yml`). Alternatively, you can skip this step and explicitly prefix all Docker Compose commands as follows: `docker-compose -f docker-compose.local.yml ...`.

- Pull the latest images from Docker Hub: `docker-compose pull`. Note: Pulling updated images doesn't remove the old ones, so if your drive is filling up, try removing outdated images with e.g. `docker rmi`.

- Edit the appropriate environment file for your instance type, `envfile.local.txt` or `envfile.server.txt`, filling in all mandatory variables, and optional variables as needed.

- Optionally enable additional settings for your Google Analytics token, S3 bucket, e-mail settings, etc. by editing the files in `envfiles/`.

- Server-only steps:

  - Make a `secrets` directory in the project root and copy the SSL certificate and key files to `secrets/ssl.crt` and `secrets/ssl.key` respectively. The certificate and key are expected to use exactly these filenames and must comprise either a wildcard or SAN certificate+key pair which is valid for the domain and subdomains specified in `envfile.server.txt`.

  - If testing on a server that is not publicly accessible at the subdomains you've specified in `envfile.server.txt`, put an entry in your host machine's `/etc/hosts` file for each of the three subdomains you entered to reroute such requests to your machine's address (e.g. `192.168.1.123 kf-local.kobotoolbox.org`). Also, uncomment and customize the `extra_hosts` directives in `docker-compose.server.yml`. This can also be necessary in situations where

- Build any images you've chosen to manually override: `docker-compose build`.

- Start the server: `docker-compose up -d` (or without the `-d` option to run in the foreground).

- Bugfix for a form deployment bug. Please be advised, as of 10/04/2018 (this step should be removed once the bug is fixed): the first time you start the kobo-docker framework, you have to set the permissions of the media folder correctly; otherwise form deployment as well as project creation will fail. Run the following command inside your `kobo-docker` folder:

  `docker-compose exec kobocat chown -R wsgi /srv/src/kobocat`

- Container output can be followed with `docker-compose logs -f`. For an individual container, logs can be followed by using the container name from your `docker-compose.yml` with e.g. `docker-compose logs -f enketo_express`.
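The instance-type selection from the steps above can be sketched as a small helper. The function name `select_instance_type` is hypothetical and not part of kobo-docker; it only wraps the `ln -s` step and refuses to replace a `docker-compose.yml` that is a regular file rather than a symlink.

```shell
# Hypothetical helper (not part of kobo-docker): point docker-compose.yml at
# the Compose file for the chosen instance type ("local" or "server").
select_instance_type() {
    instance_type=$1
    case "$instance_type" in
        local|server) ;;
        *) echo "usage: select_instance_type local|server" >&2; return 1 ;;
    esac
    target="docker-compose.${instance_type}.yml"
    if [ ! -f "$target" ]; then
        echo "$target not found; run this from the kobo-docker directory" >&2
        return 1
    fi
    # Never clobber a hand-written docker-compose.yml; only replace symlinks.
    if [ -e docker-compose.yml ] && [ ! -L docker-compose.yml ]; then
        echo "docker-compose.yml exists and is not a symlink" >&2
        return 1
    fi
    ln -sf "$target" docker-compose.yml
}

# A typical first run for a local instance, from inside the kobo-docker
# directory, then follows the steps listed above:
#   select_instance_type local
#   docker-compose pull
#   (edit envfile.local.txt)
#   docker-compose up -d
#   docker-compose exec kobocat chown -R wsgi /srv/src/kobocat
#   docker-compose logs -f
```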

"Local" setup users can now reach KoBo Toolbox at http://${HOST_ADDRESS}:${KPI_PUBLIC_PORT} (substituting in the values entered in envfile.local.txt), while "server" setups can be reached at https://${KOBOFORM_PUBLIC_SUBDOMAIN}.${PUBLIC_DOMAIN_NAME} (similarly substituting from envfile.server.txt). Be sure to periodically update your containers, especially nginx, for security updates by pulling new changes from this kobo-docker repo then running e.g. docker-compose pull && docker-compose up -d.

Automatic, periodic backups of KoBoCAT media, MongoDB, and Postgres can be individually enabled by uncommenting (and optionally customizing) the `*_BACKUP_SCHEDULE` variables in your envfile. When enabled, timestamped backups will be placed in `backups/kobocat`, `backups/mongo`, and `backups/postgres`, respectively. Redis backups are currently not generated, but the `redis_main` DB file is updated every 5 minutes and can always be found in `.vols/redis_main_data/`.

Backups can also be manually triggered when kobo-docker is running by executing the following commands:

docker exec -it kobodocker_kobocat_1 /srv/src/kobocat/docker/backup_media.bash

docker exec -it kobodocker_mongo_1 /srv/backup_mongo.bash

docker exec -it kobodocker_postgres_1 /srv/backup_postgres.bash
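After triggering backups, it can be useful to confirm that something actually landed in each backup directory. The helper below is a minimal sketch: the name `check_backups` is hypothetical, and it assumes the `backups/kobocat`, `backups/mongo`, and `backups/postgres` layout described above, checking only that each directory contains at least one file.

```shell
# Hypothetical helper (not part of kobo-docker): report whether each backup
# directory contains at least one file.
#   $1: backups root (defaults to ./backups)
check_backups() {
    backups_root=${1:-backups}
    rc=0
    for name in kobocat mongo postgres; do
        dir="$backups_root/$name"
        if [ -d "$dir" ] && [ -n "$(find "$dir" -type f | head -n 1)" ]; then
            echo "ok: $dir"
        else
            echo "missing: $dir"
            rc=1
        fi
    done
    return "$rc"
}

# Usage, from inside the kobo-docker directory:
#   check_backups || echo "at least one backup directory is empty"
```

This says nothing about whether a backup is complete or restorable; it only catches the case where no file was written at all.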

You can confirm that your containers are running with docker ps. To inspect the log output from the containers, execute docker-compose logs -f or for a specific container use e.g. docker-compose logs -f redis_main.

The documentation for Docker can be found at https://docs.docker.com.

Developers can use PyDev's remote, graphical Python debugger to debug Python/Django code. To enable it for the `kpi` container:

- Specify the mapping(s) between target Python source/library paths on the debugging machine and the locations of those files/directories inside the container by customizing and uncommenting the `KPI_PATH_FROM_ECLIPSE_TO_PYTHON_PAIRS` variable in `envfiles/kpi.txt`.

- Share the source directory of the PyDev remote debugger plugin into the container by customizing (taking care to note the actual location of the version-numbered directory) and uncommenting the relevant `volumes` entry in your `docker-compose.yml`.

- To ensure PyDev shows you the same version of the code as is being run in the container, share your live version of any target Python source/library files/directories into the container by customizing and uncommenting the relevant `volumes` entry in your `docker-compose.yml`.

- Start the PyDev remote debugger server and ensure that no firewall or other settings will prevent the containers from connecting to your debugging machine at the reported port.

- Breakpoints can be inserted with e.g. `import pydevd; pydevd.settrace('${DEBUGGING_MACHINE_IP}')`.

Remote debugging in the kobocat container can be accomplished in a similar manner.