BionicGPT is an on-premise replacement for ChatGPT, offering the advantages of Generative AI while maintaining strict data confidentiality.

BionicGPT can run on your laptop or scale into the data center.

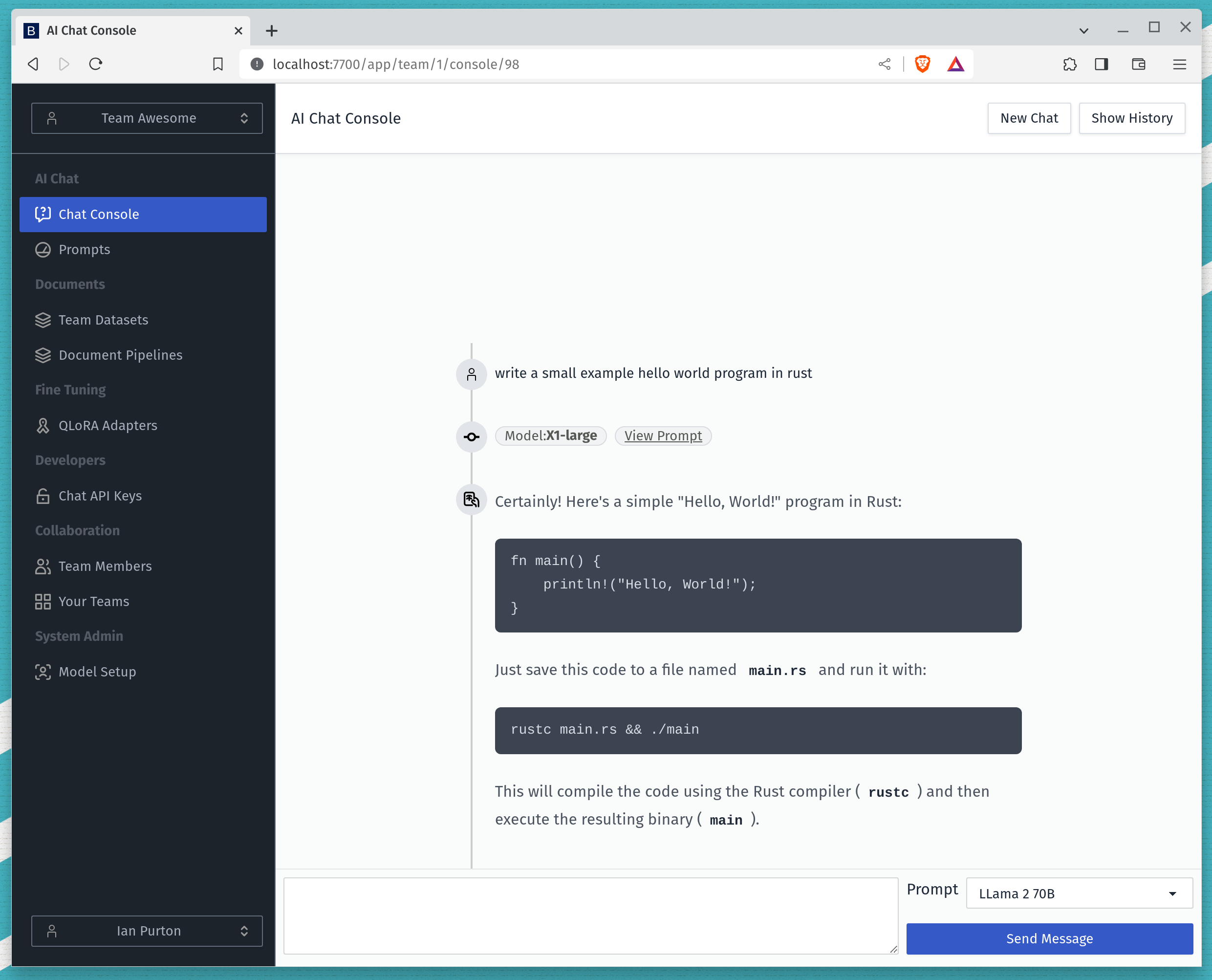

- Text Generation
  - Connect to OpenAI-compatible APIs, e.g. LocalAI
  - Select different prompts
  - Syntax highlighting for code
- Image Generation
  - Connect to Stable Diffusion
- Authentication
  - Email/password sign-in and registration
  - SSO
- Teams
  - Invite team members
  - Manage the teams you belong to
  - Create new teams
  - Switch between teams
  - RBAC
- Document Management
  - Document upload
  - Allow users to create datasets
  - UI for the datasets table
  - Turn documents into 1K batches and generate embeddings
  - OCR for document upload
- Document Pipelines
  - Allow users to upload docs via API to datasets
  - Process documents and create chunks and embeddings
- Retrieval Augmented Generation
  - Parse text out of documents
  - Generate embeddings and store them in pgvector
  - Add embeddings to the prompt using similarity search
- Prompt Management
  - Create and edit prompts on a per-team basis
  - Associate prompts with datasets
- Model Management
  - Create/update the default prompt for a model
  - Set the model location URL
  - Switchable LLM backends
  - Associate models with a command, e.g. `/image`
- Guardrails
  - Figure out a strategy
- API
  - Create per-team API keys
  - Attach keys to a prompt
  - Revoke keys
- Fine Tuning
  - QLoRA adapters
- System Admin
  - Usage statistics
  - Audit trail
  - Set API limits
- Deployment
  - Docker Compose so people can test quickly
  - Kubernetes deployment strategy
  - Kubernetes `bionicgpt.yaml`
  - Hardware recommendations

See the open issues for a full list of proposed features (and known issues).
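The document pipeline and retrieval items in the feature list (split documents into 1K batches, generate embeddings, add the closest matches to the prompt) can be illustrated with a small in-memory sketch. This is not BionicGPT's implementation. It assumes "1K" means 1,000 characters (the list does not say whether it is characters or tokens), uses a toy bag-of-words vector in place of a real embedding model, and ranks chunks in Python where a real deployment would more likely query pgvector. All function names are hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter


def chunk_text(text: str, size: int = 1000) -> list[str]:
    """Split text into consecutive chunks of at most `size` characters.

    Assumption: the 1K batch size is measured in characters.
    """
    return [text[i:i + size] for i in range(0, len(text), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Prepend the retrieved chunks to the user's question."""
    joined = "\n---\n".join(context)
    return (
        "Use the context below to answer.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )


# Example: retrieve the best-matching chunk and build the final prompt.
chunks = [
    "postgres stores vectors",
    "kubernetes runs containers",
    "docker compose starts services",
]
question = "where are vectors stored"
prompt = build_prompt(question, top_k(question, chunks, k=1))
```

Swapping `embed` for a call to a real embedding model and `top_k` for a database query would keep the same overall shape: chunk, embed, retrieve, then prompt.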

Download our `docker-compose.yml` file and run `docker-compose up`, then access the user interface at http://localhost:7800/auth/sign_up

    curl -O https://raw.githubusercontent.com/purton-tech/bionicgpt/main/docker-compose.yml
    docker-compose up

This has been tested on an AMD 2700x with 16GB of RAM. The included llama-2-7b-chat model runs on CPU only.

Warning: the images in this docker-compose file are large because the model weights are pre-loaded for convenience.
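The "OpenAI-compatible API" connection mentioned in the feature list amounts to a standard chat-completions request. The sketch below only shows the shape of such a request. The base URL, port, and model identifier are assumptions for illustration (they are not taken from the BionicGPT configuration), and `build_chat_request` and `send` are hypothetical helper names.

```python
from __future__ import annotations

import json
import urllib.request
from typing import Optional

# Assumption: an OpenAI-compatible server (e.g. LocalAI) reachable at this address.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(
    prompt: str,
    model: str = "llama-2-7b-chat",  # assumed identifier for the bundled model
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Bearer-token auth as used by OpenAI-style APIs.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(request) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Hello"))` requires a server actually listening at `BASE_URL`; building the request on its own has no side effects, which makes it easy to inspect before anything goes over the network.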

BionicGPT is optimized to run on Kubernetes and implements the full pipeline of LLM fine-tuning, from data acquisition to user interface.